Home

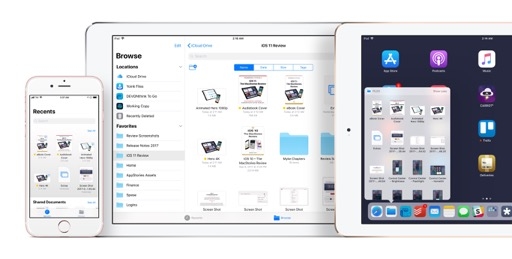

The Home app in iOS 11 isn’t visually different from iOS 10’s debut version, but under the hood it’s been updated to take advantage of changes to the HomeKit API and automation.

iOS 11 introduces two new categories of smart accessories: sprinklers and faucets.[39] Owners of these devices will be able to combine them with other HomeKit sensors for even deeper control in and around the house.

HomeKit now supports a total of 16 accessory types, but Apple wants to speed up adoption of the protocol and allow more companies to integrate their products with HomeKit. They are tackling this in a couple of ways.

iOS 11 supports software-based authentication for existing accessories that can add HomeKit functionality via software updates. This is a major difference from previous HomeKit guidelines, which required third parties to add dedicated HomeKit chips to their accessories and go through a lengthy certification process. With iOS 11, anyone who certifies their software with Apple will be able to update existing devices with HomeKit compatibility. This could increase the appeal of HomeKit for dozens of third-party manufacturers that haven’t considered Apple’s technology so far.

In addition, Apple is reducing latency for Bluetooth LE accessories so that they communicate in under a second, and they’ve made the HomeKit protocol spec available to anyone with an iOS developer license. Developers and tinkerers who want to test HomeKit for personal use can now do so without limitations.

The setup flow for unpaired HomeKit devices has gotten easier in iOS 11 too, as you’re no longer forced to turn on an accessory before scanning its pairing code. iOS 11 brings support for HomeKit QR codes, which manufacturers can print on a device’s packaging. You can scan the code on the box first, then turn on the accessory and finish setting it up. This is ideal for devices installed in hard-to-reach places, such as security cameras or window shades: saving their code beforehand should be more convenient.

At the same time, iOS 11 enables you to skip the code scanning process altogether with NFC tags. Much like you can pair AirPods by opening their case next to your iPhone, in iOS 11 you can tap a compatible HomeKit accessory to instantly add it to your Home configuration. Thanks to Core NFC, HomeKit accessories with a tag attached to them should offer a seamless setup experience. Hopefully, third parties will adopt NFC tags for their HomeKit hardware, and scanning the setup code will soon be obsolete.

If Apple’s extension of the platform is successful, we’re poised to see an influx of accessories gaining HomeKit support thanks to software updates and easier certification. It makes sense, then, that Apple wanted to ship even stronger automation features in iOS 11’s Home app: soon, it’ll need to scale to more accessories and more users.

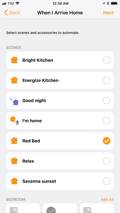

First, automations can now be triggered by significant events with time offsets. In the “A Time of Day Occurs” trigger, you can select local sunrise and sunset times, then add an offset to execute actions before or after the time occurs. For instance, you can turn on your lights 15 minutes after sunset, or raise your window shades 30 minutes before sunrise.
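To make the offset idea concrete, here is a minimal Python sketch of a sunrise/sunset trigger. It assumes the day’s local sunrise and sunset times are already known (computing them from a location is out of scope), and the `SignificantTimeTrigger` class is hypothetical; in Apple’s HomeKit framework, this is roughly what `HMSignificantTimeEvent` expresses in Swift.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass(frozen=True)
class SignificantTimeTrigger:
    """Hypothetical trigger: fire at sunrise or sunset, shifted by an offset."""
    event: str           # "sunrise" or "sunset"
    offset_minutes: int  # negative = before the event, positive = after

    def fire_time(self, sun_times: dict) -> datetime:
        # sun_times maps "sunrise"/"sunset" to that day's local times;
        # computing them from a location is out of scope for this sketch.
        return sun_times[self.event] + timedelta(minutes=self.offset_minutes)

# Made-up sun times for a single day.
today = {
    "sunrise": datetime(2017, 9, 19, 7, 0),
    "sunset": datetime(2017, 9, 19, 19, 15),
}

lights_on = SignificantTimeTrigger("sunset", 15)    # 15 minutes after sunset
shades_up = SignificantTimeTrigger("sunrise", -30)  # 30 minutes before sunrise

print(lights_on.fire_time(today))  # 2017-09-19 19:30:00
print(shades_up.fire_time(today))  # 2017-09-19 06:30:00
```

Keeping the offset separate from the event means the same rule stays correct as sunset drifts through the year.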

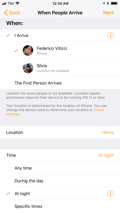

Alternatively, actions can be triggered when one or multiple people leave or arrive at a specific location. Integration with members of the same home is part of the bigger idea of HomeKit presence in iOS 11; for triggers, it acts as a multi-user geofence that can be filtered to specific times of the day (also with offsets). The trigger lets you run actions when anyone or the first person leaves or arrives at a preconfigured location.

If you want to trigger a scene when either you or your spouse gets home at night, iOS 11 lets you set up that kind of rule. Home is the default location for geofence triggers, but you can change it to any other address. Want to turn on the thermostat when you leave work? You can build that automation with iOS 11’s Home app.
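The “anyone” versus “first person” distinction is easiest to see as a comparison of who was inside the geofence before and after a location update. The following Python sketch is a hypothetical model, not Apple’s implementation: `should_fire`, `in_window`, and their parameters are invented for illustration. It covers only “anyone arrives”, “anyone leaves”, and “first person arrives”, plus an optional time-of-day window (offsets are left out).

```python
from datetime import time
from typing import Optional, Set, Tuple

Window = Optional[Tuple[time, time]]
SUPPORTED = {("anyone", "arrive"), ("first", "arrive"), ("anyone", "leave")}

def in_window(now: time, window: Window) -> bool:
    """True if `now` falls inside the optional time-of-day window."""
    if window is None:
        return True
    start, end = window
    if start <= end:
        return start <= now <= end
    return now >= start or now <= end  # window wraps past midnight

def should_fire(kind: str, direction: str, before: Set[str], after: Set[str],
                now: time, window: Window = None) -> bool:
    """Hypothetical check for a multi-user presence trigger.

    before/after: household members inside the geofence before and
    after a location update.
    """
    if (kind, direction) not in SUPPORTED:
        raise ValueError(f"unsupported combination: {kind!r}, {direction!r}")
    if not in_window(now, window):
        return False
    arrived, left = after - before, before - after
    if direction == "leave":
        return bool(left)
    if kind == "first":
        # Fires only when the home goes from empty to occupied.
        return bool(arrived) and not before
    return bool(arrived)

evening = (time(18, 0), time(23, 59))
# Your spouse gets home while you're already there.
print(should_fire("anyone", "arrive", {"you"}, {"you", "spouse"}, time(20, 30), evening))  # True
print(should_fire("first", "arrive", {"you"}, {"you", "spouse"}, time(20, 30), evening))   # False
```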

Furthermore, iOS 11 brings triggers based on accessory states with value ranges. Strangely, though, this option is only exposed in the HomeKit API; Apple doesn’t use it in its own Home app.

This new kind of trigger works with thresholds: for instance, some actions will execute if the living room’s temperature falls under 18 °C, or if humidity in the bedroom is between 50% and 70%. If you have sensors that monitor specific conditions, these triggers give you the flexibility to design powerful and intelligent automations; imagine turning on the AC only when a certain temperature threshold is met, or running a sprinkler scene when the humidity outside is low.
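A minimal Python sketch of a threshold trigger follows. `NumberRange` and `crossings` are hypothetical names (HomeKit’s iOS 11 API describes this kind of event in Swift, roughly as `HMCharacteristicThresholdRangeEvent`), the bounds here are inclusive, and firing only when a reading enters the range, rather than on every reading inside it, is an assumption meant to keep a scene from rerunning each time a sensor reports.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional

@dataclass(frozen=True)
class NumberRange:
    """Inclusive range; None leaves that side unbounded."""
    min_value: Optional[float] = None
    max_value: Optional[float] = None

    def contains(self, value: float) -> bool:
        if self.min_value is not None and value < self.min_value:
            return False
        if self.max_value is not None and value > self.max_value:
            return False
        return True

def crossings(readings: Iterable[float], value_range: NumberRange) -> Iterator[float]:
    """Yield each reading at which the value enters the range.

    Edge-triggered by assumption: consecutive readings that stay inside
    the range don't fire again until the value leaves and comes back.
    """
    was_inside = False
    for value in readings:
        inside = value_range.contains(value)
        if inside and not was_inside:
            yield value
        was_inside = inside

cold = NumberRange(max_value=18.0)                   # living room at or below 18 °C
humid = NumberRange(min_value=50.0, max_value=70.0)  # bedroom humidity 50-70%

print(list(crossings([20.5, 19.0, 17.8, 17.5, 19.2, 18.0], cold)))  # [17.8, 18.0]
print(list(crossings([45, 52, 68, 75, 60], humid)))                 # [52, 60]
```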

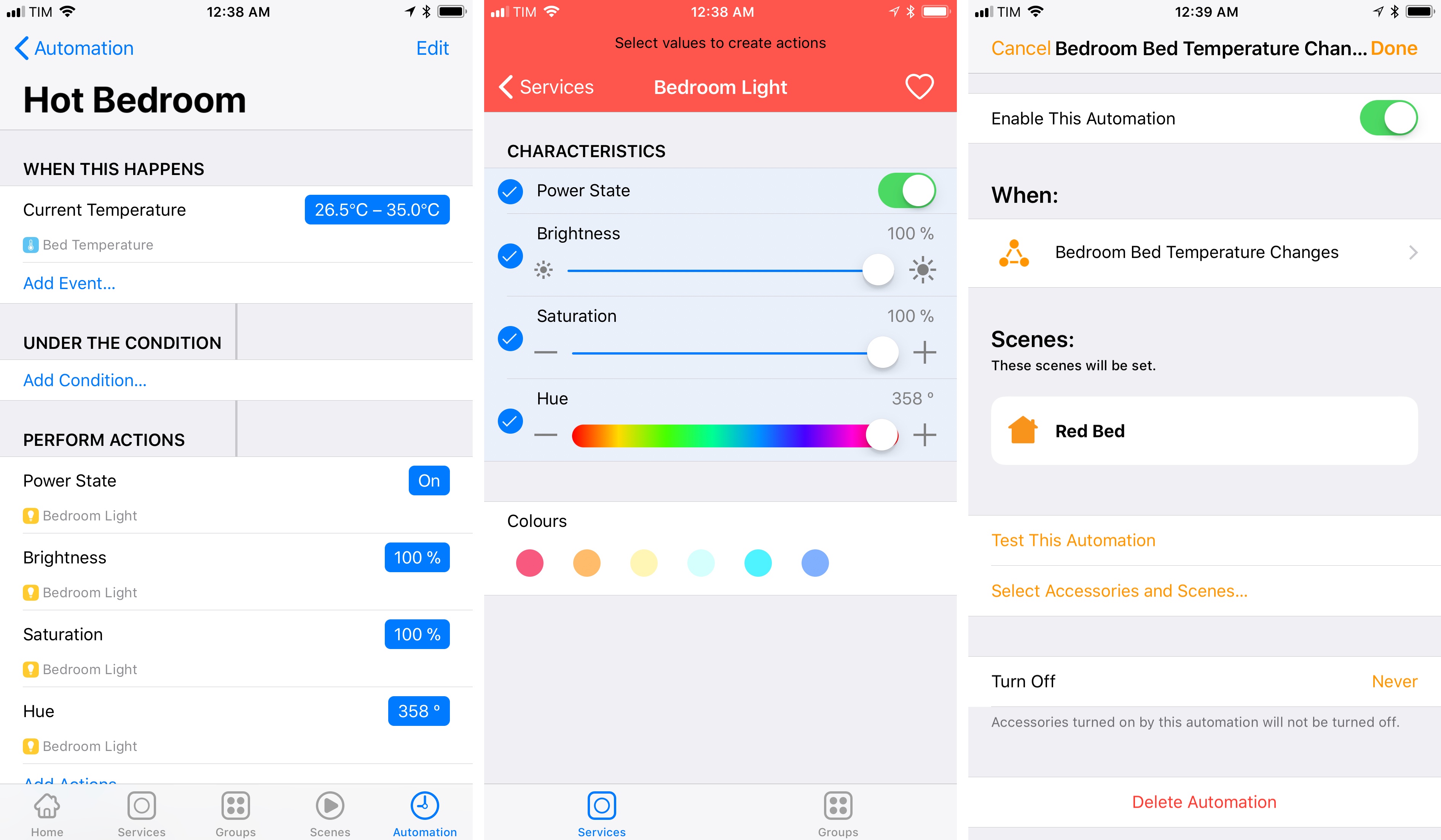

Value-based triggers worked well in my tests with Hue lights and Elgato sensors, but the lack of native support for these rules in the Home app was an annoying limitation. I tested this feature using Matthias Hochgatterer’s Home app, which, in both design and functionality, is a powerful and intuitive HomeKit dashboard superior to Apple’s.

Value-based automations created in the third-party Home app (left, center) can be viewed, but not created, in Apple’s Home app (right).

Hochgatterer’s Home app comes with a Workflow-like rule creation tool where every step is clearly outlined. There are four types of event triggers (including accessory states with value ranges), plus conditions and actions. All of the app’s latest functionalities have been made possible by the HomeKit API in iOS 11; in many ways, Hochgatterer’s app is the best way to understand the changes to HomeKit this year.

iOS 11 also introduces end events and recurrences for triggers. You can create a trigger that runs only once and then disables itself, or you can attach an end event to deactivate an automation after a specific amount of time.

If you buy one of the new HomeKit sprinklers, iOS 11 will let you create a trigger that says:

Turn on the sprinkler at 6 PM if I’m not at home, and turn it off automatically after 10 minutes.

Or, in HomeKit’s parlance:

- At 6 PM (time-based trigger);

- If I’m not at home (user presence condition);

- Turn on the sprinkler (action);

- For 10 minutes (end event).

This is a great way to avoid duplicating the same rule for start/end states, and it’s been integrated nicely into the automation setup UI of Apple’s Home app.
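The four-part rule maps naturally onto a small data model. This Python sketch is hypothetical (the `Automation` class and its fields are invented for illustration, not HomeKit’s API); it shows how a single rule with an end event yields both the “on” and “off” steps, and how a run-once trigger disables itself after firing.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List, Optional, Tuple

@dataclass
class Automation:
    """Hypothetical rule: trigger time, condition, action, optional end event."""
    trigger_at: datetime
    condition: Callable[[], bool]
    action: str
    end_after: Optional[timedelta] = None  # the end event
    execute_once: bool = False
    enabled: bool = True

    def run(self) -> List[Tuple[datetime, str]]:
        """Return the (time, command) steps scheduled when the trigger fires."""
        if not self.enabled or not self.condition():
            return []
        steps = [(self.trigger_at, f"{self.action}: on")]
        if self.end_after is not None:
            # One rule covers both the start and the end state.
            steps.append((self.trigger_at + self.end_after, f"{self.action}: off"))
        if self.execute_once:
            self.enabled = False  # a run-once trigger disables itself
        return steps

i_am_home = False  # stand-in for the user presence condition

sprinkler = Automation(
    trigger_at=datetime(2017, 9, 19, 18, 0),  # At 6 PM
    condition=lambda: not i_am_home,          # If I'm not at home
    action="sprinkler",                       # Turn on the sprinkler
    end_after=timedelta(minutes=10),          # For 10 minutes
)

for when, command in sprinkler.run():
    print(when.strftime("%H:%M"), command)
# 18:00 sprinkler: on
# 18:10 sprinkler: off
```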

New triggers in iOS 11 bring the versatility I’ve long desired in HomeKit, but they aren’t the only improvements. As the example above shows, conditions have been updated too, with multi-user presence being the most important addition. This was a glaring omission in the old HomeKit, and it’s now embedded in nearly every aspect of Home in iOS 11.

Sometimes, it only makes sense to turn off the lights if nobody’s home; or maybe you want to set up a routine that turns the bedroom light a soft red between midnight and 2 AM, but only if you and your partner are both home. Besides acting as a trigger, user presence can be an extra check for automations you want to run depending on who’s home at any given moment.

I’ve always wondered how Apple could pull this off, and the implementation in iOS 11 is elegant and straightforward. Each user’s device communicates with accessories and hubs to advertise its presence in the house. There’s nothing else to configure, but in order to take advantage of user presence, every device on the same Home setup has to run Apple’s latest operating systems.

After updating all iOS devices and the Apple TV hub in our household to iOS 11 and tvOS 11, we set up a handful of presence-based light control automations, which have been working reliably for the past month. Particularly for large families with dozens of accessories that can be combined in advanced automations, this will be a welcome upgrade.

Changes to HomeKit in iOS 11 are predicated on the assumption that users are buying into the ecosystem with dozens of accessories and that manufacturers will be on board with Apple’s plans to broaden HomeKit’s market. The power of HomeKit automation is unlocked by multiple accessories that serve different functions in the house, but, especially for those of us outside the U.S., finding enough HomeKit accessories has been tough.

There are encouraging signs of Apple moving in the right direction, both in terms of developer relationships and deeper automation in the Home app itself. It feels like we’re getting closer to the dream of a smart home that can be intelligently automated in every room, for every family member. The Home app in iOS 11 is better equipped for that vision. We just have to hope we’re going to see more HomeKit accessories going forward.

Apple News

There’s still no word from Apple on plans for a global expansion of Apple News, but the company is updating the app in iOS 11 with a Spotlight section, a gallery of daily curated videos, and smarter suggestions for stories and topics.

Spotlight is a hub where Apple editors will pick a topic every day and present it to readers with articles, photo essays, videos, and more. Apple News Spotlight covers a wide range of topics: during the beta, I read Spotlight roundups about U.S. politics as well as Comic-Con and indie rock albums. Think of it as a richer experience than newsletters like NextDraft, with a compelling presentation that leverages excerpts, media, and links curated by Apple’s staff.

Spotlight has a chance to become a great companion for Apple News, but, like daily curation on the iOS 11 App Store, it’ll be up to Apple to select interesting topics, showcase entertaining material around them, and present everything in a nice, digestible format.

Technically speaking, Spotlight works well: it has its own tab in Apple News and pulls off the retro-looking color scheme that Apple chose for it. I believe Apple’s onto something with the idea of helping people understand a topic without checking out a dozen news websites every day. From an editorial standpoint, though, results will depend on the perseverance and timeliness of Apple’s editors.

Apple News’ other area of focus in iOS 11 is video. In the News Editors’ Picks channel, you’ll now see a Top Videos section aggregating the best videos from around the web that Apple’s editors think you should watch. Like Spotlight, this is a nice way to come across something interesting without having to cultivate a list of subscriptions – but there’s more. In iOS 11, the Apple News widget allows you to watch a selection of curated videos from the widget itself.

In a way, video in the Apple News widget reminds me of Tips’ surprisingly rich widget in iOS 10. When you tap a video, the widget expands to reveal an inline player. Videos start playing with no audio by default, but you can tap the volume icon to turn on sound. At the bottom of the widget, you’ll see a progress bar that tells you how long a video is, and a button to skip to the next video.

It’s easy to flip through a series of videos in the News widget, and I’ve grown used to spending a couple of minutes in the Today view every few days to see what’s new. The app’s Top Videos section will likely be more popular than the widget’s simplified curation, but, if only from a technical perspective, the implementation in the widget is solid.

Intelligent recommendations in Apple News continue to be hit or miss with iOS 11. Despite the fact that Apple is leveraging Siri and other machine learning-powered techniques to learn topics you search for in apps, Apple News still highlights generic topic suggestions such as “Celebrities”, “Software”, or “Automobiles”.

iOS 11 displays the source of these suggestions, which can come from Siri (for topics learned elsewhere) or Apple News. In either case, I haven’t seen any specific suggestion that caught my attention. Perhaps the system is going to get better as more people start using Siri and News on iOS 11, but if the past two years are any indication, I doubt that Apple News will achieve a quality of personalized recommendations comparable to Google’s Feed or Pocket.

I’ve accepted the fact that Apple News isn’t going to be the smart news assistant I was hoping it’d grow into. I like Apple News as an app to read about general topics outside of my narrow tech interests[40], and it’s on my Home screen. I think the video-related updates are fantastic, and I’ve alternated between YouTube’s homepage and Apple News for my daily video consumption with great results. I’m curious to see how Apple’s editorial strategy is going to play out.

Health

Along with improvements to workouts and new supported data types[41], Apple has brought iCloud integration and new charts to its Health app.

Support for storing Health data in iCloud is another facet of the company’s increased reliance on the service in iOS 11. A user’s Health database could only be manually exported as an archive file in previous versions of iOS[42] – an inconvenient solution to the issue of restoring Health data on a new device without an iTunes backup (third parties capitalized on this). iCloud makes everything better: it’s encrypted, it keeps the complete set of Health types on Apple’s servers, and it doesn’t require any additional setup. iCloud for Health worked well for me on new installations of iOS 11, removing another common frustration from the iOS experience.

Health’s updated charts are stylish and informative. They use the color of the corresponding type, and they’ve been redesigned to display averages for the selected time period. You can press and hold with one finger, then swipe on a chart to view average details for individual data points in a popup, or you can tap on the date header above a chart to open a calendar view where colored dots indicate days when a data point was tracked in Health.

Apple’s Health app is growing into a unified dashboard that appeals to average users as well as fitness buffs or patients who need precise measurements of certain data types. The complete package of Health, HealthKit for apps, Apple Watch, and iCloud is too good to even consider something else on the iPhone at this point.

Photos and Camera

When it comes to photography, Apple never rests on its laurels. Camera and Photos – arguably, the iPhone’s two most important apps – are expanding in multiple directions, from QR codes to a depth API and new live effects. But the company hasn’t stopped there. This year, Apple has looked beyond JPEG and H.264 and found their replacements. Thus, before looking at the updated apps, we ought to consider the implications of such a major technical shift.

HEIF and HEVC

The media that we capture every day on our devices has changed over time. In a short timespan, we’ve gone from low-resolution photos and crummy mobile videos to HDR photos with embedded animations and 4K videos that leverage wide color. Live Photos, bursts, depth photography, 4K: none of these technologies were commonplace when JPEG and H.264 became widely implemented standards. The formats used to represent our photos and videos haven’t evolved with the times, forcing Apple to retrofit them with new functionalities by doing custom work around them.

The JPEG format is especially problematic: it uses an old compression engine that is now obsolete (JPEG became standard in 1992), it doesn’t support alpha and depth channels, and it can’t be animated. JPEG had an amazing run and democratized sharing pictures on social networks and messaging apps, but when you consider its limitations from an iPhone perspective, it’s a format that doesn’t natively support Live Photos, doesn’t compress pictures as well as other algorithms would, and requires assistance from other formats or encoders to store more information about modern types of photos.

With iOS 11, Apple is making a new bet for the future by adopting the HEIF image format[43] and the HEVC codec.[44] These standards (which were ratified by ISO in 2015 and 2013, respectively) are profound changes that project iOS into the era of modular photography formats and H.265 video. Both were selected because they’re relatively future-proof given where the industry is moving with photos and video. However, the best consequence of HEIF and HEVC is that users won’t notice any of the technicalities but will simply enjoy the results: more available storage, better-looking pictures, and faster performance.

HEIF matches all the requirements Apple set for the successor to JPEG. It’s based on containers, so a single file can hold multiple variations of an image, additional representations stored as auxiliary images, and metadata. For instance, HEIF natively supports sequences of photos shot within a short timeframe (bursts) and photos with associated video (Live Photos), and it can accurately describe depth data for Portrait photos. This allows Apple to bundle more than one representation of an image within the same package.

More importantly, HEIF compresses considerably better than JPEG: up to twice as efficiently. Not only does this let you store up to twice as many images in the same space, but at high compression levels HEIF also produces more pleasing images than JPEG, with fewer artifacts. The gains in compression and quality allowed Apple to bump the size of photo thumbnails to 320×240, four times the size of a typical JPEG thumbnail, which will bring sharper thumbnails to modern Retina displays.
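The storage and thumbnail claims are simple arithmetic. In this Python sketch, the photo sizes are hypothetical placeholders, the 2x figure is treated as the best case, and the 160×120 baseline is an assumption (a common size for thumbnails embedded in JPEG files) that happens to match the four-times figure.

```python
def photos_that_fit(storage_gb: float, photo_mb: float) -> int:
    """How many photos of a given size fit in a storage budget (1 GB = 1024 MB)."""
    return int(storage_gb * 1024 // photo_mb)

jpeg_mb = 2.0          # hypothetical average JPEG size
heif_mb = jpeg_mb / 2  # "up to 2x" better compression, best case

print(photos_that_fit(32, jpeg_mb))  # 16384
print(photos_that_fit(32, heif_mb))  # 32768

new_thumb = 320 * 240  # 76,800 pixels
old_thumb = 160 * 120  # 19,200 pixels (assumed typical JPEG thumbnail)
print(new_thumb / old_thumb)  # 4.0
```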

The “secret sauce” behind HEIF is the fact that it can use hardware acceleration, it supports parallel operations, and it relies on HEVC for compression (which explains why HEIF images have a .heic file extension in iOS 11). Tiles provide a practical example of these technologies at play. Among its various options, HEIF can slice large images into multiple tiles and use parallelism to decode them more quickly. Instead of decoding a whole image at once, HEIF can decode each tile, rescale it, and then assemble the final image. This yields impressive performance gains when cropping as well as zooming into details of large images.
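The tiling idea can be illustrated without a real codec. In this Python sketch, an “image” is a grid of numbers, `decode_tile` is a stand-in for decompressing one independently coded tile, and a thread pool stands in for parallel decoders (CPython threads won’t speed up pure-Python work, so this shows the structure rather than the speed). All names are hypothetical, and per-tile rescaling is left out for brevity. The point is that a crop or zoom only has to decode the tiles it overlaps.

```python
from concurrent.futures import ThreadPoolExecutor

TILE = 4  # tile edge in pixels; real encoders use far larger tiles

def split_into_tiles(image, tile=TILE):
    """Hypothetical 'encoder': map (tile_row, tile_col) -> that tile's pixels."""
    tiles = {}
    for ty in range(0, len(image), tile):
        for tx in range(0, len(image[0]), tile):
            tiles[(ty // tile, tx // tile)] = [row[tx:tx + tile] for row in image[ty:ty + tile]]
    return tiles

def decode_tile(pixels):
    """Stand-in for decompressing one independently coded tile."""
    return [list(row) for row in pixels]

def decode_region(tiles, x0, y0, x1, y1, tile=TILE):
    """Decode only the tiles overlapping [x0, x1) x [y0, y1), in parallel,
    then assemble the requested region from them."""
    wanted = [(ty, tx)
              for ty in range(y0 // tile, (y1 - 1) // tile + 1)
              for tx in range(x0 // tile, (x1 - 1) // tile + 1)]
    with ThreadPoolExecutor() as pool:
        decoded = dict(zip(wanted, pool.map(lambda key: decode_tile(tiles[key]), wanted)))
    region = [[decoded[(y // tile, x // tile)][y % tile][x % tile]
               for x in range(x0, x1)]
              for y in range(y0, y1)]
    return region, len(wanted)

# A 16x16 test "image" whose pixel values encode their own coordinates.
image = [[y * 100 + x for x in range(16)] for y in range(16)]
tiles = split_into_tiles(image)  # 16 tiles of 4x4 pixels
crop, decoded_count = decode_region(tiles, 5, 5, 9, 7)
print(crop)           # [[505, 506, 507, 508], [605, 606, 607, 608]]
print(decoded_count)  # 2 (out of 16 tiles)
```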

In a demo that Apple showed at WWDC, the iOS 11 Photos app smoothly zoomed in and out of a 2.9 gigapixel photo of Yosemite National Park with no stuttering. The original file was a 2 GB TIFF that had been converted to a 160 MB HEIF; the Photos app never used more than 70 MB of memory during the zooming process.[45] In tests I repeated with gigapixel photos from the Hubble telescope converted to HEIF, I noticed similar results: zooming in Photos was buttery smooth on both the iPhone and iPad, while my 2015 MacBook Pro struggled doing the same with the original TIFF versions.

Apple has added support for HEIF everywhere on iOS 11: the Camera now captures photos in HEIF, built-in software like Photos and Quick Look handles HEIF images, and frameworks such as Image I/O, Core Image, and Photos support HEIF so developers can start integrating the format with their apps.

The adoption of HEVC, the codec used for HEIF images and H.265 video, originates from the same technical requirements and advantages. HEVC comes with up to 40% better compression than H.264, resulting in either the same video at a smaller size, or the same size for a higher quality video – which is paramount now that the industry is embracing 4K content. HEVC is supported in AVFoundation, Photos, WebKit, and even HTTP Live Streaming for improved network throughput.

In terms of compatible apps, Quick Look, Photos, and FaceTime natively support HEVC video; unlike HEIF, the file extensions stay the same as before: .mov and .mp4. I’d be surprised if implementing HEVC wasn’t a necessary step for Apple’s move towards selling 4K iTunes content that is optimized for hardware acceleration and uses less storage on modern devices.

Apple hopes that as few users as possible will notice the transition to HEIF and HEVC on their iOS devices. The Camera and other system apps will switch to HEIF by default with no user input needed, although those who don’t want to use HEIF and HEVC can disable them under Settings ⇾ Camera ⇾ Formats by choosing Most Compatible. iOS 11 won’t retroactively convert old photos and videos (it wouldn’t make sense to re-compress old JPEG and H.264 files), and the new high-efficiency formats only apply to media captured on iOS 11.

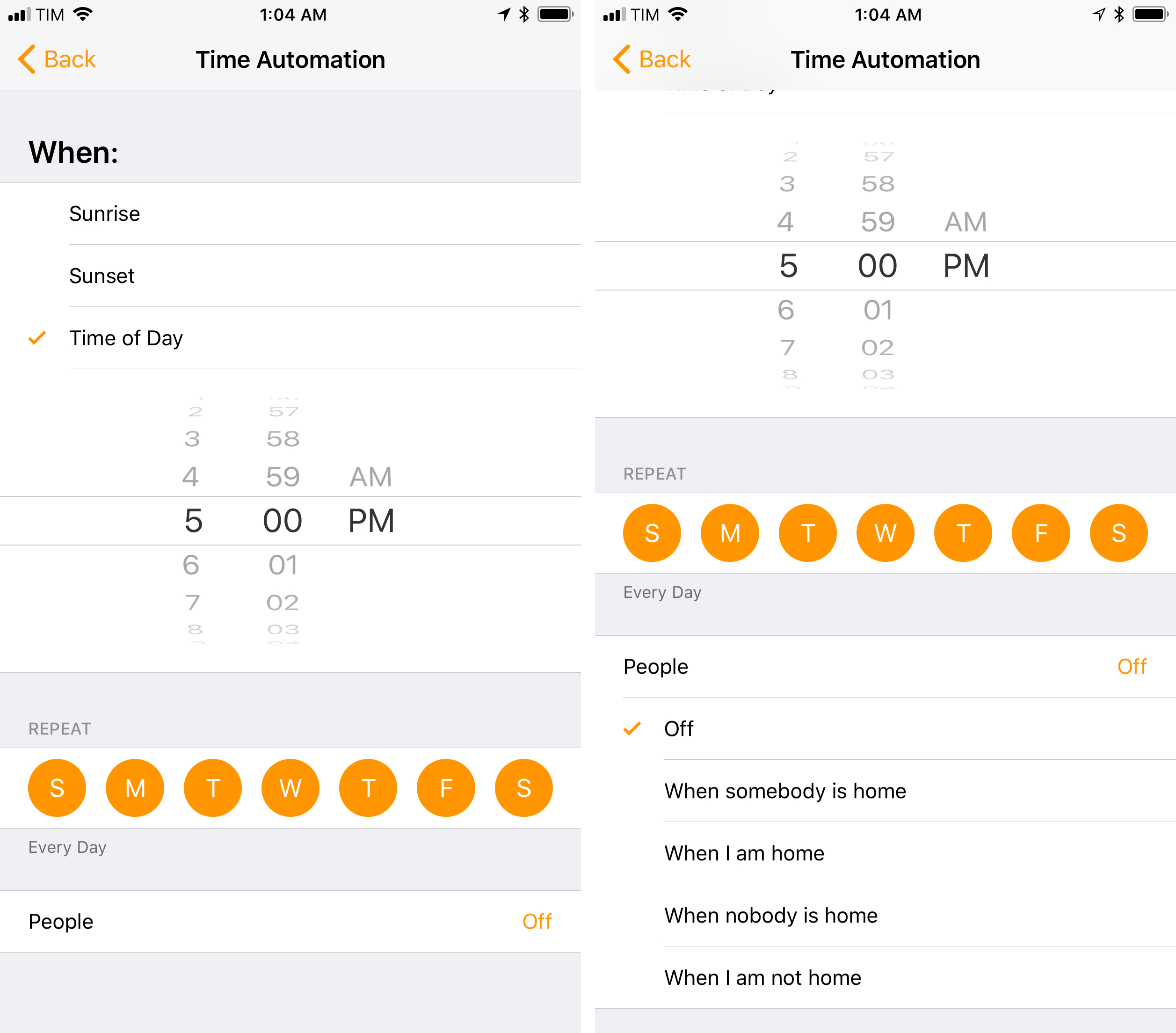

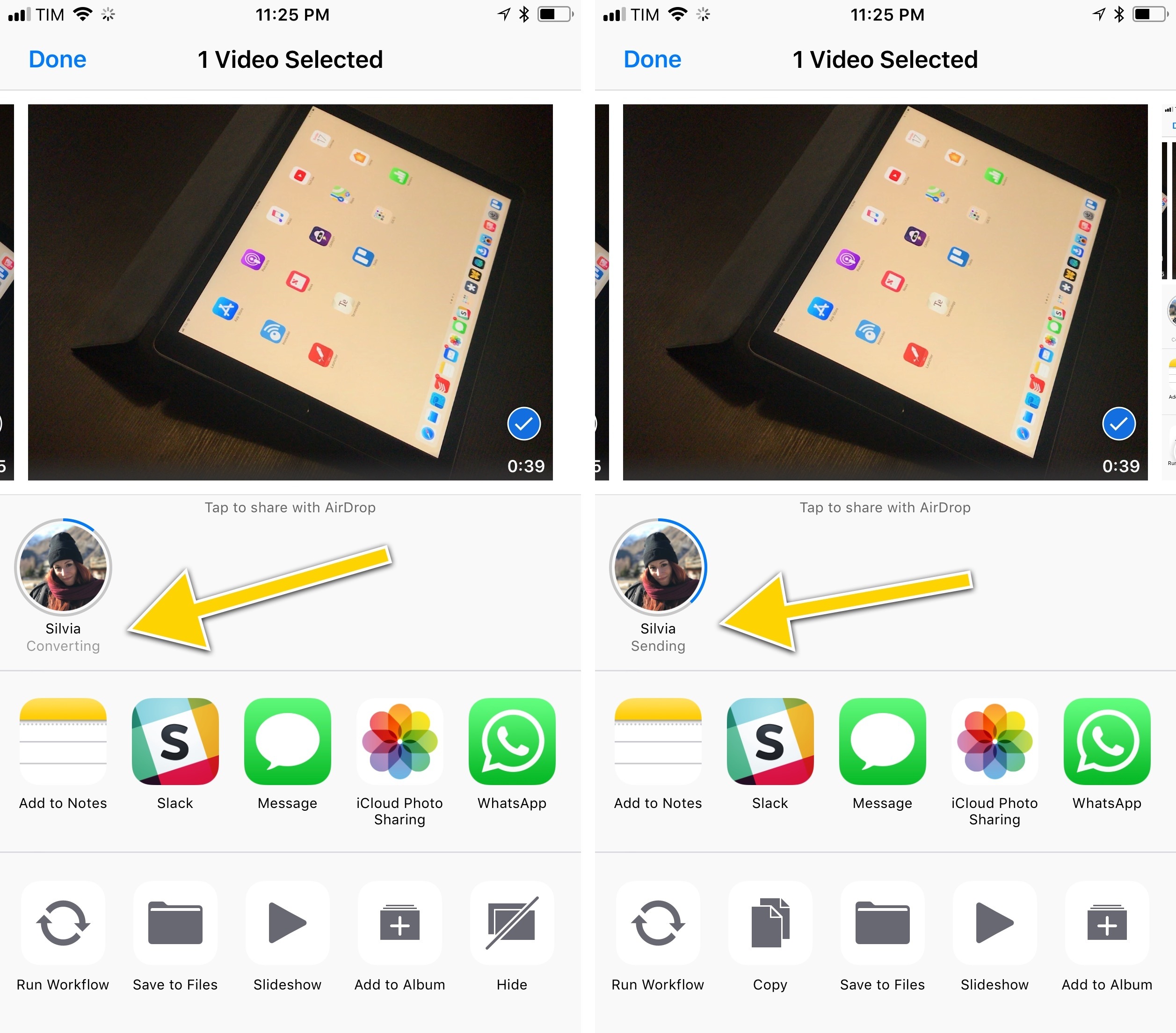

Furthermore, Mail and other share extensions always transcode HEIF and HEVC before handing media off to other apps to maximize compatibility. Peer-to-peer AirDrop transfers evaluate the receiver’s capabilities before deciding whether to send HEIF/HEVC content: iOS 11 to iOS 11 photo exchanges keep the HEIF version of a photo, while iOS 11 to iOS 10 transfers transcode it to JPEG.
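The transfer rules boil down to a small decision function. This Python sketch is hypothetical: the format labels and `outgoing_format` are invented, and the receiver’s capability is reduced to a single flag, whereas the real capability check isn’t documented here.

```python
# Hypothetical format labels: high-efficiency format -> compatible fallback.
FALLBACK = {"heif": "jpeg", "hevc": "h264"}

def outgoing_format(media_format: str, destination: str,
                    receiver_supports_heif_hevc: bool = False) -> str:
    """Pick the format to hand off.

    destination: "share_extension" (always transcode) or
    "airdrop" (transcode only if the receiver can't decode HEIF/HEVC).
    """
    if media_format not in FALLBACK:
        return media_format  # already JPEG or H.264, nothing to do
    if destination == "share_extension":
        return FALLBACK[media_format]
    if destination == "airdrop":
        return media_format if receiver_supports_heif_hevc else FALLBACK[media_format]
    raise ValueError(f"unknown destination: {destination!r}")

print(outgoing_format("heif", "airdrop", receiver_supports_heif_hevc=True))   # heif (iOS 11 peer)
print(outgoing_format("heif", "airdrop", receiver_supports_heif_hevc=False))  # jpeg (iOS 10 peer)
print(outgoing_format("hevc", "share_extension"))                             # h264
```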

The most practical way to think about the impact of HEIF and HEVC is this: if, a year from now, you feel as if you’ve taken more pictures and videos without filling your local or iCloud-based storage, it means Apple succeeded and picked the right formats. Judging by the technical specs and performance of HEIF and HEVC, both seem like safe bets for the future.

Camera

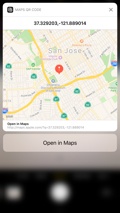

The biggest user-facing change in the iOS 11 Camera is native support for QR code detection. Point the camera at a QR code, wait a second, and iOS 11 will show you a notification with the recognized text or link. Tap it, and the code will open in another app if necessary.[46]

Apple is supporting a wide range of possible destinations for scanned QR codes. You can open links in Safari or places in Maps, but you can also scan router codes and join Wi-Fi networks, place phone calls, send SMS and Mail messages, add contacts and Calendar events, and even trigger URL schemes in third-party apps for automation purposes. Anything that can be opened or launched on iOS 11 can be embedded in a QR code. Bundling the scanning feature with the Camera app is certainly going to hurt third-party scanner apps that can’t benefit from privileged access on the Lock screen or Control Center.
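To illustrate how a single scanner can fan out to so many destinations, here is a Python sketch that classifies a QR payload by its prefix or URL scheme. The routing table is illustrative rather than how iOS works internally; the `WIFI:` parser follows the common `WIFI:T:<type>;S:<ssid>;P:<password>;;` convention but ignores escaped characters; and `myapp://` in the example is a hypothetical third-party scheme.

```python
from urllib.parse import urlparse

def parse_wifi(payload: str) -> dict:
    """Parse 'WIFI:T:<type>;S:<ssid>;P:<password>;;' (escapes not handled)."""
    fields = {}
    for part in payload[len("WIFI:"):].split(";"):
        if ":" in part:
            key, value = part.split(":", 1)
            fields[key.upper()] = value
    return {"ssid": fields.get("S"), "security": fields.get("T"),
            "password": fields.get("P")}

# Illustrative scheme -> destination table (not iOS's actual routing logic).
SCHEMES = {"http": "Safari", "https": "Safari", "tel": "Phone",
           "sms": "Messages", "smsto": "Messages", "mailto": "Mail"}

def route_qr(payload: str):
    """Return (destination, detail) for a scanned QR payload."""
    text = payload.strip()
    upper = text.upper()
    if upper.startswith("WIFI:"):
        return ("Join Wi-Fi", parse_wifi(text))
    if upper.startswith("BEGIN:VCARD"):
        return ("Contacts", text)
    if upper.startswith(("BEGIN:VCALENDAR", "BEGIN:VEVENT")):
        return ("Calendar", text)
    url = urlparse(text)
    if url.scheme in ("http", "https") and url.netloc == "maps.apple.com":
        return ("Maps", text)
    if url.scheme in SCHEMES:
        return (SCHEMES[url.scheme], text)
    if url.scheme:
        return ("App URL scheme", text)  # e.g. an automation app's scheme
    return ("Text", text)

print(route_qr("WIFI:T:WPA;S:HomeNet;P:hunter2;;"))
print(route_qr("https://maps.apple.com/?q=Rome")[0])  # Maps
print(route_qr("myapp://run?name=Lights")[0])          # App URL scheme
```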

Apple has also done a nice job with notifications that display QR contents, which can often be expanded to reveal quick actions and rich previews. I rarely have to scan QR codes; having support for them directly in the system camera is all I need from iOS.

With the Grid option enabled in Settings ⇾ Camera, a level will appear in the middle of the screen when you’re trying to capture a top-down shot. If you like to obsessively document your food consumption or latest purchases on Instagram, this feature will help you ensure all your pictures are perfectly aligned.

Lastly, filters in the Camera have been reimagined with new names and color schemes based on classic photography styles. You can now swipe up while taking a picture to view a thumbstrip of filters, which you can scroll to preview the effect in full screen.

Press with 3D Touch to compare the filter with the original camera view.

If you press a selected filter with 3D Touch (even though you won’t feel any haptic feedback) and hold your finger down, you’ll be able to preview it alongside the unfiltered camera view. I’ve started doing this a lot when taking Portraits with filters applied in real time.

Photos

There are a variety of solid improvements in Photos, but the launch of Live Photo effects is the salient one.

Apple must have seen the rise in popularity of animated formats such as Instagram’s Boomerang and Google’s Motion Stills, and their response is a built-in edit panel to tweak the animation of Live Photos.

By default, Live Photos are animated as before, but now you can swipe up on them to reveal the Effects section. Here, you can switch from Live to Loop (a continuous looping video) and Bounce (a loop that plays the action backward and forward). There’s also Long Exposure, which simulates a DSLR-like effect by stacking multiple Live Photo frames to blur the subject.
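The three effects can be approximated with simple frame operations. Apple hasn’t detailed its processing, so this Python sketch uses the textbook versions: Loop repeats the clip, Bounce appends the clip in reverse (skipping the end frames so none is shown twice in a row), and Long Exposure averages each pixel across frames. Frames here are flat lists of grayscale values, and the function names are hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

def loop(frames: Sequence[T], repeats: int) -> List[T]:
    """Loop: play the clip start to end, over and over."""
    return list(frames) * repeats

def bounce(frames: Sequence[T], repeats: int) -> List[T]:
    """Bounce: forward, then backward, without doubling the end frames."""
    frames = list(frames)
    cycle = frames + frames[-2:0:-1]
    return cycle * repeats

def long_exposure(frames: Sequence[Sequence[float]]) -> List[float]:
    """Average each pixel across frames: moving parts blur, static parts stay sharp."""
    n = len(frames)
    return [sum(pixel) / n for pixel in zip(*frames)]

print(bounce(["f0", "f1", "f2", "f3"], 1))  # ['f0', 'f1', 'f2', 'f3', 'f2', 'f1']

# A bright dot moving across four pixels, next to a static pixel (value 100).
frames = [
    [255, 0, 0, 0, 100],
    [0, 255, 0, 0, 100],
    [0, 0, 255, 0, 100],
    [0, 0, 0, 255, 100],
]
print(long_exposure(frames))  # [63.75, 63.75, 63.75, 63.75, 100.0]
```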

Playing with Live Photo effects.

I’ve had fun changing some of my cute puppy pictures to Loop and Bounce, and the system will be more useful once third-party apps are able to read these animations from Photos and play them back accordingly. I’m not a particularly creative photographer, but I think we’ll see some clever uses of Bounce and Long Exposure showcased in Apple keynotes and ad campaigns.

It’s also now possible to choose the key frame for a Live Photo: in the edit screen, tap on a thumbnail in the scrubber at the bottom, choose Make Key Photo, and that frame will be used as the static image preview in the photo gallery. You can even trim and mute Live Photos from the same editing interface in iOS 11.

Speaking of animations, Photos can finally play animated GIFs, which, together with Bounce and Loop photos, end up in a new Animated album automatically created by iOS 11. GIF power users will still seek more advanced solutions such as GIFwrapped and GIPHY, but basic GIF support in Photos is a welcome change.

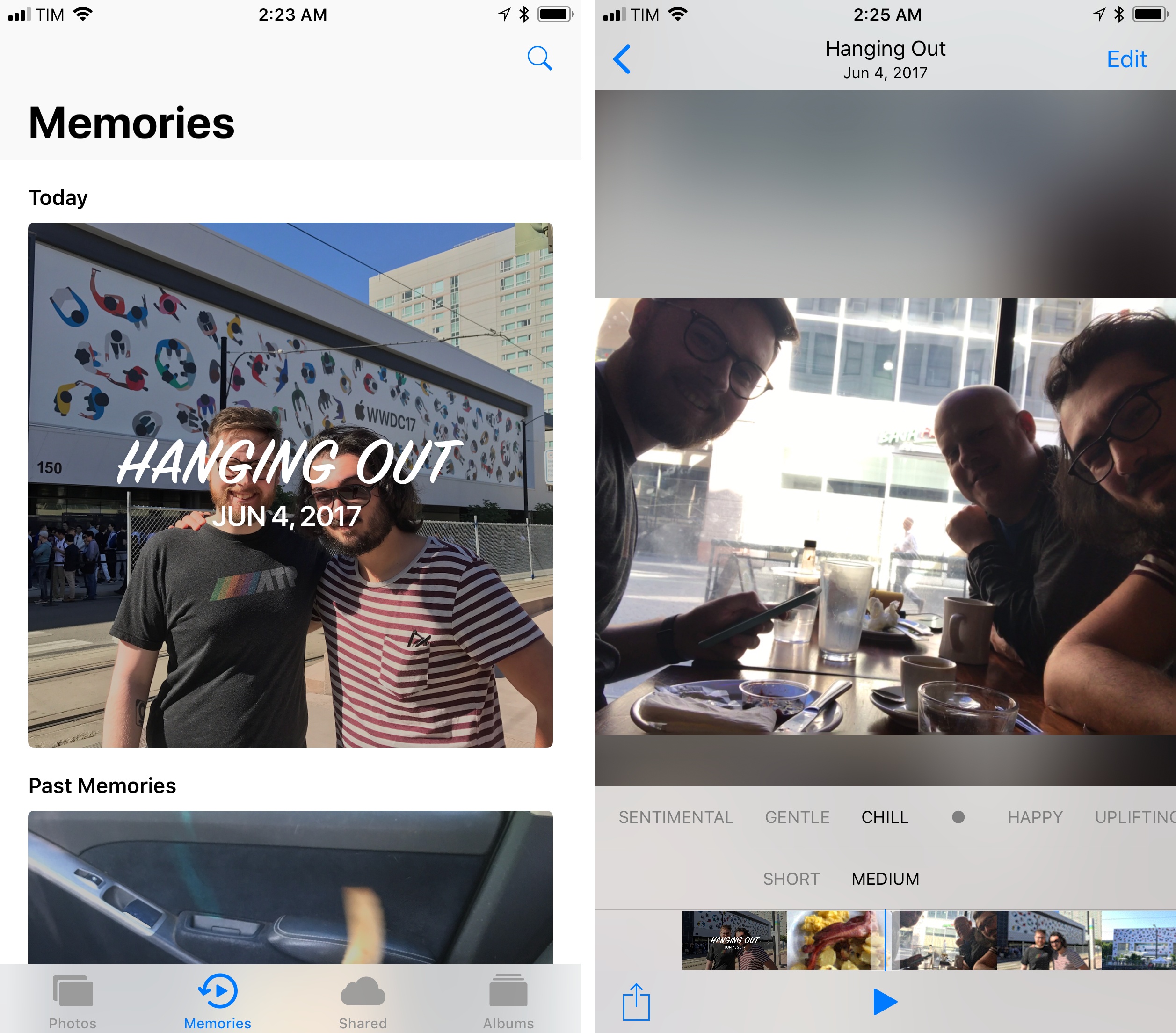

The Memories feature has gained about a dozen new categories: Apple says that iOS 11 generates personal memories for #TBT, sporting events and performances, outdoor activities, night out gatherings, weddings, anniversaries, and, of course, pets and babies.

Of these new memories, I’ve only received a Fluffy Friends one, which, because all dogs are good boys and girls, didn’t disappoint. I haven’t received other new Memories, but it’s reassuring to see Apple iterating on a functionality that Google has so finely tuned with their Google Photos service.

There are other technical enhancements for Memories in iOS 11. To ensure that only the best photos and videos are selected, Memories now detects smiles, blinks, and duplicates, and chooses the optimal exposure among similar photos. Scene detection is also used to find the relevant key photo and the best music to fit the memory’s mood. In addition, Memory Movies are now optimized for viewing in portrait orientation: content is intelligently cropped on the fly without excluding faces, and you can rotate your device while watching a Memory Movie to dynamically switch views.

Last, iOS 11 has gained the ability to sync faces recognized in photos across devices with iCloud. There’s no setup for this, and no settings to tweak: Photos will automatically merge faces between devices using iCloud Photo Library, and it’ll then start keeping the same set in the People album. Even better, the feature now uses the same intelligence that powers Memories, resulting in greater accuracy and fewer duplicate groupings for each person than in iOS 10.

In my tests, syncing faces between the iPhone, iPad, and Mac worked without problems. After I confirmed a new face on one device, it was available on others after a few seconds. This is another part of the bigger narrative of iCloud syncing more of our personal data across devices in iOS 11. Now, Photos only needs a better interface to suggest recognized faces instead of hiding them in the detail view of individual pictures.

It’s not a surprise that Photos hasn’t gained as many new user features as last year’s update: Apple focused on deeper foundational changes in iOS 11, reworking the entire app and its iCloud infrastructure to fully support HEIF and HEVC, laying the groundwork for years to come.

It’s fair to argue that Google is ahead of Apple in family sharing, intelligent photo and album suggestions, and even printed photo books (which are surprisingly absent from the iOS version of Photos); Apple has to play catch-up in each field. However, we shouldn’t dismiss the adoption of a new format and codec as self-indulgent whims of a company constantly pursuing state-of-the-art tech: HEIF and HEVC are strategic assets that will better prepare iOS for richer photography and 4K. When you’re upgrading the engine, new features can wait.

- [39] I haven’t been able to test either of them.

- [40] For which I use RSS with Inoreader.

- [41] Such as waist circumference and insulin delivery. For Apple Watch users, iOS 11’s HealthKit brings read/write access to resting heart rate, read access to walking heart rate, and additional metadata on all heart rates.

- [42] This is still possible.

- [43] Quite the repetition, as HEIF already stands for High Efficiency Image Format.

- [44] Same here: HEVC stands for High Efficiency Video Coding.

- [45] This wouldn’t be possible with JPEG, which maxes out at 64k by 64k pixels. HEIF doesn’t max out.

- [46] QR code detection can be disabled in Settings ⇾ Camera ⇾ Scan QR Codes.