Everything Else

As a feature-rich release for the iPhone and iPad, iOS 11 offers a treasure trove of other foundational changes as well as smaller improvements that enhance the overall experience.

ARKit

Normally, a large tech company’s first foray into augmented reality would be deserving of its own story, but as far as this review is concerned, Apple’s ARKit is a developer framework that can’t be tested with first-party apps baked into iOS. ARKit is a set of APIs made by Apple for developers; to play with ARKit, you’ll have to download compatible apps from the App Store. There are no pre-installed ARKit apps in iOS 11, nor does the system include any major AR options or special modes for existing apps. And yet, despite Apple’s hands-off approach, ARKit is likely going to spawn one of the biggest App Store trends in years. Third-party ARKit apps and games will be a fantastic demo for iOS 11.

ARKit lets developers build augmented reality apps to create the illusion of virtual objects blending into the physical world. If you watched any of the videos on Made with ARKit, you should have a broad idea of its capabilities. ARKit is natively supported on devices with the A9, A10, or A11 systems-on-a-chip; literally overnight, Apple is launching an AR platform with a potential userbase of millions of compatible devices.

The primary goal of ARKit is to abstract the complexity of designing credible and compelling augmented reality experiences for developers. Out of the box, the framework provides a series of core functionalities that would have taken third-party app makers years of work to get right.

Using visual inertial odometry, ARKit fuses camera data with CoreMotion for world tracking, which enables an iOS device to sense movement in space and measure distance/scale accurately without additional calibration. Then, ARKit performs scene understanding with real-world topology: the system can determine attributes and properties of the surrounding environment such as horizontal planes and light estimation. These two factors, for example, help in recognizing surfaces like tables or the ground floor to place objects and information on, and they can cast the correct lighting on virtual objects so they’re consistent with the physical world. Finally, ARKit returns a constant stream of camera images to SceneKit and SpriteKit (which have been updated with AR-specific views) and it can integrate with Metal for custom rendering; the third-party game engines Unity and Unreal have also been updated with native hooks for ARKit to help developers draw virtual content.
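To make the pieces above more concrete, here is a minimal sketch of how an app might turn on world tracking, horizontal plane detection, and light estimation with SceneKit's AR view. The class name `PlaneDemoViewController` and the print statement are illustrative assumptions rather than anything taken from a shipping app, and a real project would also need a camera usage description in its Info.plist.

```swift
import ARKit
import SceneKit
import UIKit

// Hypothetical view controller; the class name and layout are illustrative.
final class PlaneDemoViewController: UIViewController, ARSCNViewDelegate {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        sceneView.delegate = self
        // Lets SceneKit apply ARKit's light estimate to its default lighting.
        sceneView.automaticallyUpdatesLighting = true
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking is only available on supported hardware.
        guard ARWorldTrackingConfiguration.isSupported else { return }
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = .horizontal
        configuration.isLightEstimationEnabled = true
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    // Called when ARKit adds an anchor, for example a newly detected plane.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let plane = anchor as? ARPlaneAnchor else { return }
        print("Detected plane with extent \(plane.extent)")
    }
}
```

Virtual content would typically be attached to the `node` passed to the delegate method, so that it stays pinned to the detected surface as tracking improves.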

My favorite ARKit app I’ve tried so far is MeasureKit, a digital measuring tool that relies on augmented reality to let you measure straight lines, corners and angles, distances, and even a person’s height using a combination of virtual rulers and the iPhone’s camera.

MeasureKit is extremely intuitive and unlike anything I’ve seen on iOS before: you point the camera at an object, tap the screen to set the starting point, then, depending on the tool you’ve selected, you physically move in a space or tap the screen elsewhere (or both) to measure what you’re looking at. It’s like having a camera with a built-in smart ruler; the Person Height mode of the app is especially impressive, as it combines ARKit with iOS 11’s face detection APIs to detect the ground floor, find a person’s face, and estimate their height.[72]

Like other ARKit apps, MeasureKit can display iOS 11’s world visualization in real time: after moving your device around to facilitate the initial plane detection process[73], MeasureKit can show you the detected surfaces and feature points recognized by ARKit.

According to MeasureKit’s developer Rinat Khanov, even though there’s room for improvement, this part of the framework is already surprisingly capable. ARKit doesn’t natively support vertical plane detection (by default, ARKit can’t recognize walls), but Khanov and his team were able to create their own solution based on ARKit’s feature points.

In our conversation, Khanov mentioned aspects of ARKit that Apple should improve in the future: low-light performance, far away surfaces, and obstruction detection – situations where the first ARKit apps indeed struggle to render virtual objects onscreen. Still, in spite of these shortcomings, it’s clear that Apple created a powerful framework for developers to devise fresh iOS experiences that can reach millions of users today. This is a remarkable achievement, and the primary advantage of ARKit over Google’s recently announced technology, ARCore.

After using ARKit and understanding the potential of augmented reality apps on a portable computer that’s always with us, it’s fair to wonder why Apple hasn’t shipped a first-party AR app in iOS 11. The answer seemingly lies in the reverse strategy the company has chosen for AR: rather than building an app, Apple wants ARKit to be a platform, creating an ecosystem for developers to design iOS-only AR apps.

The App Store is Apple’s AR moat: if AR truly is the next big thing, to make ARKit just an app would be selling it short. It feels wiser on Apple’s part to release the creation tools first and let the developer community innovate through apps and games. This could kickstart a virtuous cycle of unique AR experiences available on the App Store, made by developers who are providing constant feedback to Apple, who will make the platform better over time until they launch their own AR product.

What’s also evident with ARKit is that we’re going to see two macro categories of AR experiences: old app concepts that have been retrofitted for AR just because it’s possible, and new kinds of AR-first software that couldn’t exist before. ARKit will be defined by the latter category – apps where AR isn’t a gimmick, but the premise that shapes the entire experience. It’ll be fun to experiment with ARKit in hundreds of apps, but only a few – at least initially – will make sense as something deeper than a five-minute demo.

For many, ARKit apps will be the reason to upgrade to iOS 11. We’re witnessing the rise of a new App Store trend, and, possibly, Apple’s next mass-market platform. Curiosity will be ARKit’s selling point in the short term, but it feels like we’re on the cusp of a bigger revolution.

Vision, Core ML, and NLP

With iOS 11, Apple is also making great strides to keep up with AI and machine learning tools provided to developers by its biggest competitors in this field – Google and Microsoft. The new technologies baked into iOS 11 – Core ML, Vision, and Natural Language Processing (NLP) – won’t be as easily identifiable as a new UI design or drag and drop, but they’re going to show up in a variety of apps we use every day.

Vision is a high-level framework that brings advanced computer vision to apps that deal with photography and images, and it comprises multiple features. Face detection, previously available in CoreImage and AVCapture, has been rewritten for higher precision and recall in Vision so that more faces (with more related details, such as eyes and mouth) can be recognized by the API. Powered by deep learning, Vision’s face detection picks up small faces, profiles, and faces that are partially obstructed by objects like glasses and hats. With a slightly longer processing time and higher power consumption than CoreImage and AVCapture, Vision offers the best accuracy for face detection and takes most of the complexity away from developers.

In addition to faces, Vision can also detect rectangular shapes[74], barcodes, and text, which developers can integrate with other frameworks for additional processing. Plus, unlike other services, all of this is done on-device, with minimal latency, while keeping user information secure and private. It’s safe to assume the majority of third-party camera, photo, and video editing apps will adopt Vision for recognition and tracking in still images and videos.
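As a rough illustration of how little code the on-device detection requires, the sketch below runs face and rectangle detection on a single still image. The helper name `detectFacesAndRectangles(in:)` is hypothetical; the request and handler types are Vision's own.

```swift
import Vision
import CoreGraphics

// Hypothetical helper: runs face and rectangle detection on one still image.
func detectFacesAndRectangles(in image: CGImage) throws
    -> (faces: [VNFaceObservation], rectangles: [VNRectangleObservation]) {
    let faceRequest = VNDetectFaceRectanglesRequest()
    let rectangleRequest = VNDetectRectanglesRequest()

    // The handler performs every request synchronously, entirely on-device.
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([faceRequest, rectangleRequest])

    let faces = faceRequest.results as? [VNFaceObservation] ?? []
    let rectangles = rectangleRequest.results as? [VNRectangleObservation] ?? []
    return (faces, rectangles)
}
```

Each observation carries a `boundingBox` in normalized coordinates, which an app would convert to view coordinates before drawing an overlay.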

With NLP, Apple is opening up the same API that drives the system keyboard, Messages, and Safari to apps that want to perform advanced text parsing. NLP can identify a specific language (29 scripts and 52 languages are supported), tokenize the text into meaningful chunks of sentences and words, then apply deeper extraction of specific elements such as parts of speech, lemmas (root forms of words), and named entities (for example, person or organization names).[75]
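The steps described above (language identification, tokenization, lemmatization) map onto `NSLinguisticTagger` in Foundation. What follows is a small sketch under the assumption that a helper named `lemmas(in:)` is all an app needs; the function name and the fallback to the original word are illustrative choices.

```swift
import Foundation

// Hypothetical helper: returns the dominant language and one lemma per word.
func lemmas(in text: String) -> (language: String?, lemmas: [String]) {
    let tagger = NSLinguisticTagger(tagSchemes: [.language, .lemma], options: 0)
    tagger.string = text

    let nsText = text as NSString
    let range = NSRange(location: 0, length: nsText.length)
    let options: NSLinguisticTagger.Options = [.omitWhitespace, .omitPunctuation]
    var found: [String] = []

    tagger.enumerateTags(in: range, unit: .word, scheme: .lemma, options: options) { tag, tokenRange, _ in
        // Fall back to the word as written when no lemma is available.
        let token = nsText.substring(with: tokenRange)
        found.append(tag?.rawValue ?? token)
    }
    return (tagger.dominantLanguage, found)
}
```

Swapping the scheme for `.lexicalClass` or `.nameType` would yield parts of speech or named entities with the same enumeration call.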

While this sounds incredibly complex (and it is), the API is backed by great performance. On a single thread, Apple claims that NLP’s part of speech tagging can evaluate up to 50,000 tokens in 1 second; on parallel threads, it becomes 80,000 tokens per second. This means that entire articles and manuscripts can be parsed in seconds, creating a new avenue for developers of text editors and research tools, but, more broadly, all kinds of apps that deal with language identification, like calendars and PDF annotation apps.

Finally, there’s Core ML, which is aimed at making it easy to integrate trained machine learning models with apps. In simple terms, Apple has created a way for developers to take a model file – machine learning code that has already been written with other tools (like Keras and Caffe) – drop it into Xcode, and add machine learning features to their apps. As such, Core ML isn’t a language or a machine learning development suite per se – it’s a framework to convert machine learning models and enable iOS apps to support existing ones shared by the developer community.

As a layer that sits below NLP and Vision, Core ML doesn’t enable any specific feature; it’s up to developers to find a model that accomplishes what they need, then implement it via Core ML. There will be apps that add fast object and scene recognition through Core ML; others will do sentiment analysis of text; some email clients and calendar apps will probably figure out ways to predict your schedule and busy times based on past patterns analyzed by a Core ML model. By improving the last mile of implementing neural networks in commercial software, Apple is relying on existing expertise to imbue the iOS app ecosystem with deeper intelligence. Time will tell if this opportunistic approach will work.
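For a sense of what "implementing a model via Core ML" can look like, here is a hedged sketch that feeds an image to a classifier through Vision. It assumes `modelURL` points at a compiled model bundle that the developer has sourced elsewhere; the function name `classify(_:modelURL:completion:)` is hypothetical, and in practice Xcode also generates a typed class for any model added to a project.

```swift
import CoreML
import Vision
import CoreGraphics

// Hypothetical helper: classifies an image with a compiled Core ML model.
// `modelURL` is assumed to point at a compiled .mlmodelc bundle.
func classify(_ image: CGImage, modelURL: URL, completion: @escaping (String?) -> Void) throws {
    let model = try MLModel(contentsOf: modelURL)
    let visionModel = try VNCoreMLModel(for: model)

    let request = VNCoreMLRequest(model: visionModel) { request, _ in
        // Pick the label the model is most confident about.
        let labels = request.results as? [VNClassificationObservation] ?? []
        let best = labels.max { $0.confidence < $1.confidence }
        completion(best?.identifier)
    }
    request.imageCropAndScaleOption = .centerCrop

    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])
}
```

The model does all of the recognition work; Core ML and Vision only handle loading it, scaling the input, and running inference on the device.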

So far, I’ve tested a handful of apps with Core ML, NLP, and Vision functionalities, and came away impressed with the results.

An update to Shapego, an app to generate word clouds on iOS, has added integration with iOS 11’s PDFKit (to quickly extract text from PDF documents) and NLP to process text, tag individual words, and generate a contextual word cloud.

According to Shapego developer Libero Spagnolini, NLP has improved nearly every aspect of the app: users no longer have to manually select the language type because iOS 11 recognizes it automatically; he removed all the custom code that dealt with punctuation and word boundaries; with lemmatization, the system can now find words with a common root, so Shapego can more easily avoid duplicates when composing a word cloud. This is a huge amount of work removed from the developer’s side, and it’s all handled by a native iOS framework. I was able to benchmark NLP’s part of speech API using Shapego and a PDF of last year’s iOS 10 review. On a 10.5-inch iPad Pro, NLP tagged 50,924 words in 1.5 seconds – slower than Apple’s advertised performance, but impressive regardless.
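To put that benchmark next to Apple's claim, the arithmetic can be worked out in a few lines. The figures are the ones quoted above; treating words and tokens as equivalent is a simplifying assumption, since the two counts may not match exactly.

```swift
import Foundation

// Figures quoted in the text.
let wordsTagged = 50_924.0
let elapsedSeconds = 1.5
let advertisedSingleThread = 50_000.0

// Measured throughput and how it compares with the single-thread claim.
let measuredRate = wordsTagged / elapsedSeconds
let shareOfAdvertised = measuredRate / advertisedSingleThread

print(String(format: "%.0f words/s, %.0f%% of the single-thread claim",
             measuredRate, shareOfAdvertised * 100))
// prints "33949 words/s, 68% of the single-thread claim"
```

In other words, the test landed at roughly two thirds of the advertised single-thread rate, which may partly reflect the extra work of extracting text from a PDF.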

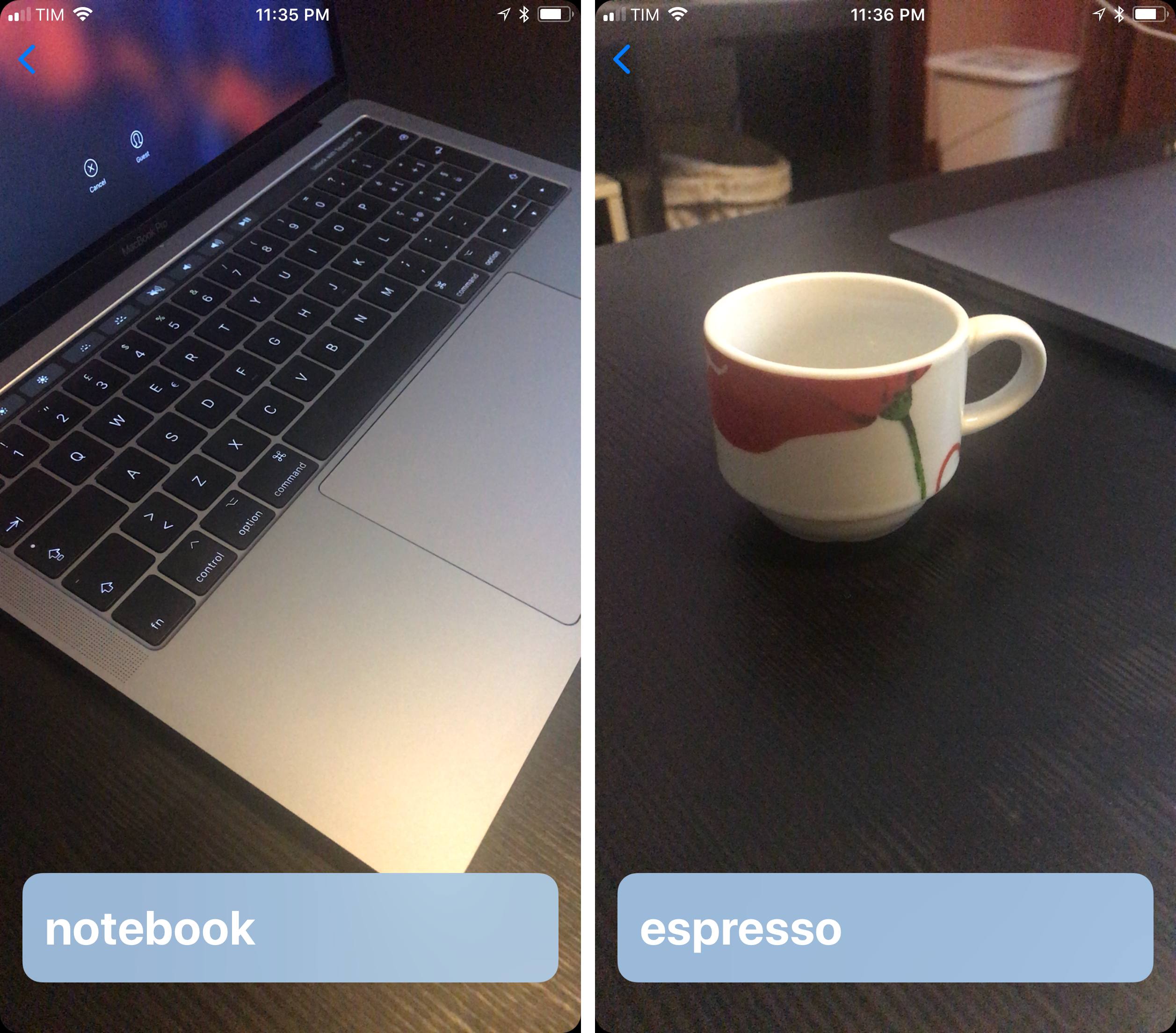

I’ve also tested a new version of LookUp, a dictionary app, which uses Core ML to recognize objects and provide a definition overlay in a camera view.

Using a variety of trained models converted to Core ML, LookUp can now be pointed at everyday objects to find out their definition in less than a second. LookUp’s real-world definitions aren’t always accurate, but the app is an impressive demo of the new deep learning features available in iOS 11.

Core NFC

After years of Apple Pay, Apple is starting to open up the iPhone’s NFC chip with Core NFC, a framework that allows developers to create apps to read NFC tags on iOS.

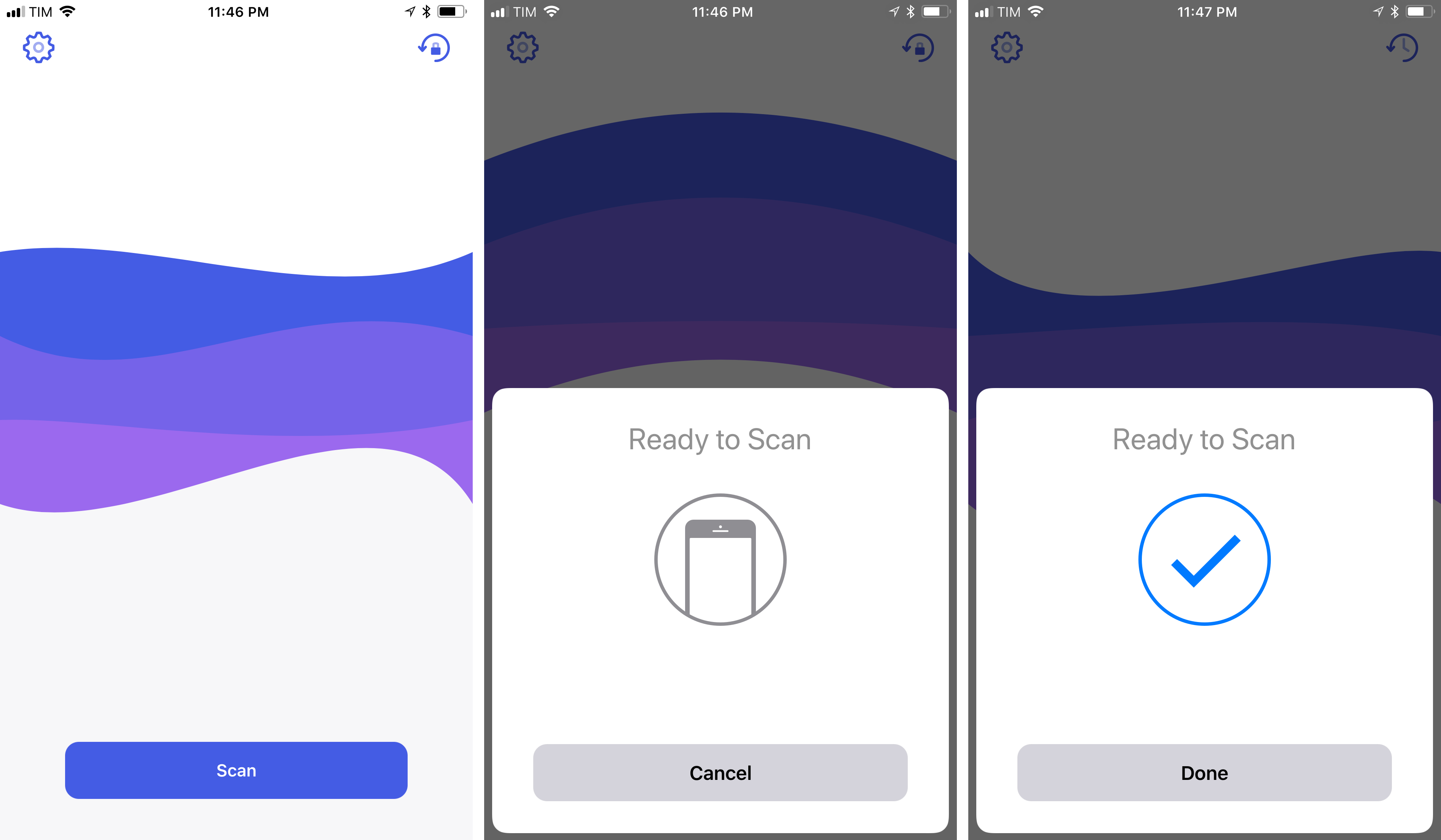

Available on the iPhone 7 generation and above, Core NFC leverages the same card-style UI implemented by other forms of contactless communication on iOS: in apps that can scan NFC tags, you’ll be presented with an AirPods and Automatic Setup-like dialog to begin a tag reading session. Hold the top of your iPhone near a card, wait a fraction of a second, and you’ll hear a “ding” (or feel a tap) once a tag has been read.

The Core NFC dialog is a necessary bridge between the physical world of NFC tags and apps: unlike on Android, developers can’t present their own custom interfaces for scanning tags. Once a tag has been scanned, its data is passed to the app, which can do whatever it wants with it. For example, Hold, an upcoming NFC tag reader for iOS 11, can either open a URL in Safari View Controller or copy the text contained in a tag to the clipboard.
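The flow described above (system dialog, scan, data handed to the app) could be sketched as follows. The class name `TagReader` and the alert text are assumptions made for illustration; a real app also needs the NFC tag reading entitlement and a usage description string.

```swift
import CoreNFC

// Hypothetical reader object; the class name and alert text are illustrative.
final class TagReader: NSObject, NFCNDEFReaderSessionDelegate {
    private var session: NFCNDEFReaderSession?

    func beginScanning() {
        // iOS presents its own card-style scanning UI; apps cannot replace it.
        let session = NFCNDEFReaderSession(delegate: self, queue: nil, invalidateAfterFirstRead: true)
        session.alertMessage = "Hold the top of your iPhone near a tag."
        session.begin()
        self.session = session
    }

    func readerSession(_ session: NFCNDEFReaderSession, didDetectNDEFs messages: [NFCNDEFMessage]) {
        for message in messages {
            for record in message.records {
                // Payloads are raw bytes; decoding depends on the record type.
                print("Record with \(record.payload.count) payload bytes")
            }
        }
    }

    func readerSession(_ session: NFCNDEFReaderSession, didInvalidateWithError error: Error) {
        // Also called after a successful single read or when the user cancels.
        print("Session ended: \(error.localizedDescription)")
        self.session = nil
    }
}
```

What happens with the payload afterwards, such as opening a URL or copying text, is entirely up to the app.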

Also unlike on Android, this first version of Core NFC doesn’t allow for writing data to NFC tags, which is going to prevent the rise of a new category of NFC apps on the App Store.[76] I suppose that museums and department stores will take advantage of Core NFC in their own apps to enhance the browsing experience in physical locations, but developers who hoped iOS 11 would let them write to NFC tags will have to wait for another iteration of the framework.

FaceTime

iOS’ built-in video-calling service still doesn’t offer support for group calls – an astonishing omission in 2017 – and Apple only enhanced it with a minor addition in iOS 11.

You can now capture Live Photos of a FaceTime conversation using a shutter button in the bottom left corner of the screen. This doesn’t generate an animation of the entire call: rather, a Live Photo in FaceTime is an animated photo of the other person on the call – it doesn’t show the FaceTime UI, and it excludes the tiny rectangle preview of your end of the call too.

While it’s nice to grab a live selfie of someone being goofy on FaceTime, you may not want others to save videos of you every time you’re on a video call with them. Thankfully, Apple included an option to disable FaceTime Live Photos under Settings ⇾ FaceTime. Other people will still be able to take screenshots of the call without causing an alert to appear, but at least they won’t have a Live Photo.

Screen Recording and ReplayKit

Along with screenshot markup, another surprising new iOS 11 feature that’s especially useful for tech bloggers is a built-in screen recording mode.

As someone who writes about iOS and apps for a living, I’ve always wanted QuickTime-like screen recording on iOS – and I always assumed the feature was too niche to make the cut as a system-wide functionality. But not only did Apple ship a screen recording feature that is dramatically superior to any third-party hack we had in the past, they even made screen recording a first-class citizen in the new Control Center.

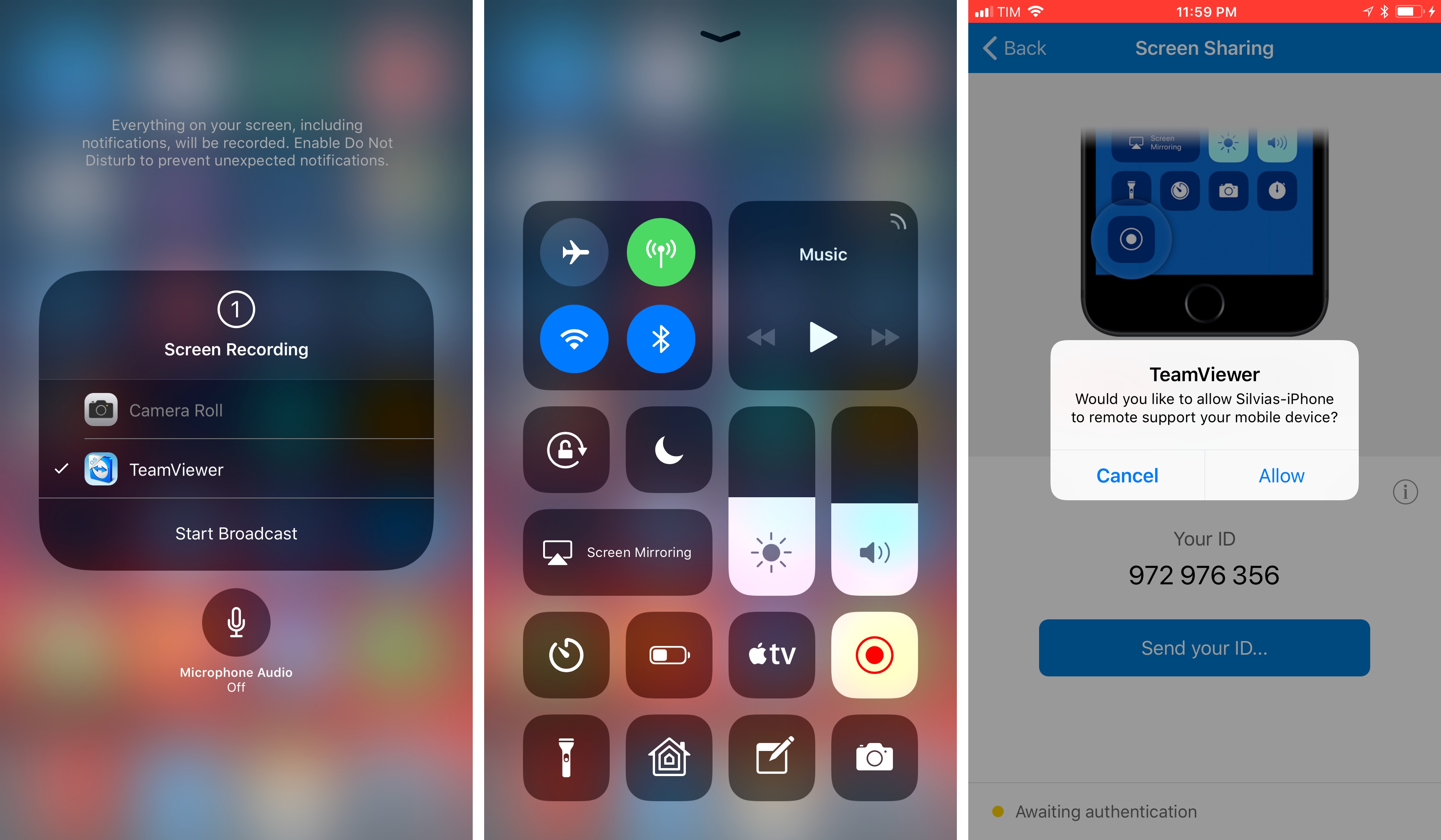

After tapping the record button in Control Center, the icon will display a three-second countdown. You can use this time to close Control Center and start a recording without showing the screen recording UI being dismissed. Alternatively, you can long-press the button for more controls, including an option to record audio from the microphone if you want to add real-time voiceover.

When screen recording is in progress, iOS 11 shows a red status bar. I would have liked Apple to follow QuickTime for macOS and automatically clean the status bar in the exported video, but I understand why that might be asking too much. You can tap the red status bar to stop a recording, which will be saved to Photos, ready for editing and sharing.

Native screen recording brings feature parity between iOS and macOS, which should alleviate the woes of users embracing the iPad as their primary computer. This leaves podcast recording as the only task I still have to use a Mac for.

Built-in screen recording is also part of Apple’s decision to make ReplayKit – the framework to record gameplay videos and share them online – easier to access for users and more powerful for developers of all apps.

In iOS 11, apps that integrate with ReplayKit 2’s live streaming can be listed as destinations in Control Center’s screen recording menu, which allows them to broadcast system-wide content – not just sandboxed app footage – to an online service.
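On the developer side, an app becomes a broadcast destination by shipping a broadcast upload extension whose principal class receives the system-wide audio and video samples. The skeleton below is a sketch of that class with the actual encoding and upload left out; the comments describe assumed behavior for a hypothetical streaming service.

```swift
import ReplayKit
import CoreMedia

// Hypothetical broadcast upload extension; the upload step is left as comments.
final class SampleHandler: RPBroadcastSampleHandler {
    override func broadcastStarted(withSetupInfo setupInfo: [String: NSObject]?) {
        // Open a connection to the streaming service here.
    }

    override func processSampleBuffer(_ sampleBuffer: CMSampleBuffer,
                                      with sampleBufferType: RPSampleBufferType) {
        switch sampleBufferType {
        case .video:
            break // Encode and send a frame of system-wide screen content.
        case .audioApp:
            break // Audio produced by apps.
        case .audioMic:
            break // Microphone audio, if the user enabled it.
        @unknown default:
            break
        }
    }

    override func broadcastFinished() {
        // Close the connection.
    }
}
```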

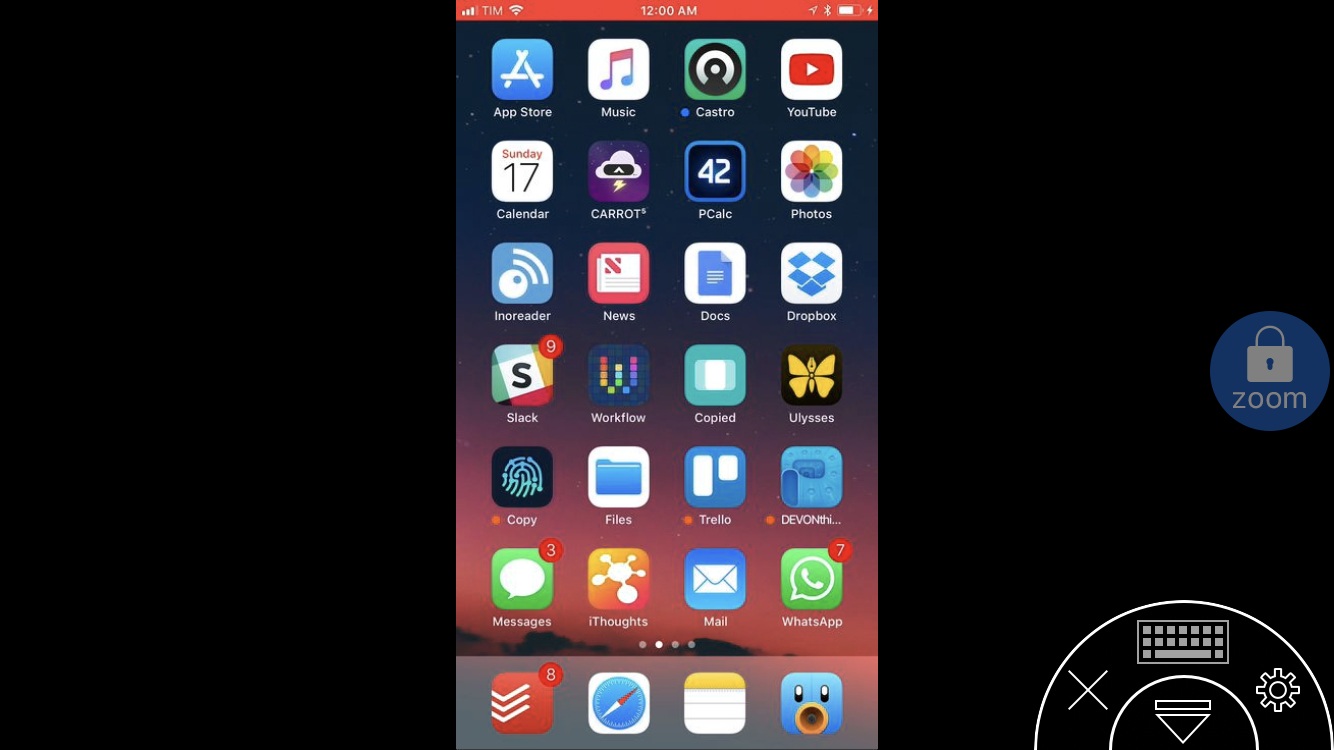

I was able to test this feature with TeamViewer’s QuickSupport app, which now offers an extension to start a live broadcast directly from iOS 11’s Control Center. After selecting QuickSupport from Control Center, the app began a live streaming session, which I shared with friends using a public link. On the other end, people who were watching could see me navigate my iPhone in real-time – not just inside the QuickSupport app, but the entire iOS interface, including Cover Sheet and the Home screen.

It’ll be interesting to see how companies such as Mobcrush, Twitch, and YouTube adopt ReplayKit 2 on iOS 11, and if other productivity or team collaboration services will ship new live broadcasting functionalities.

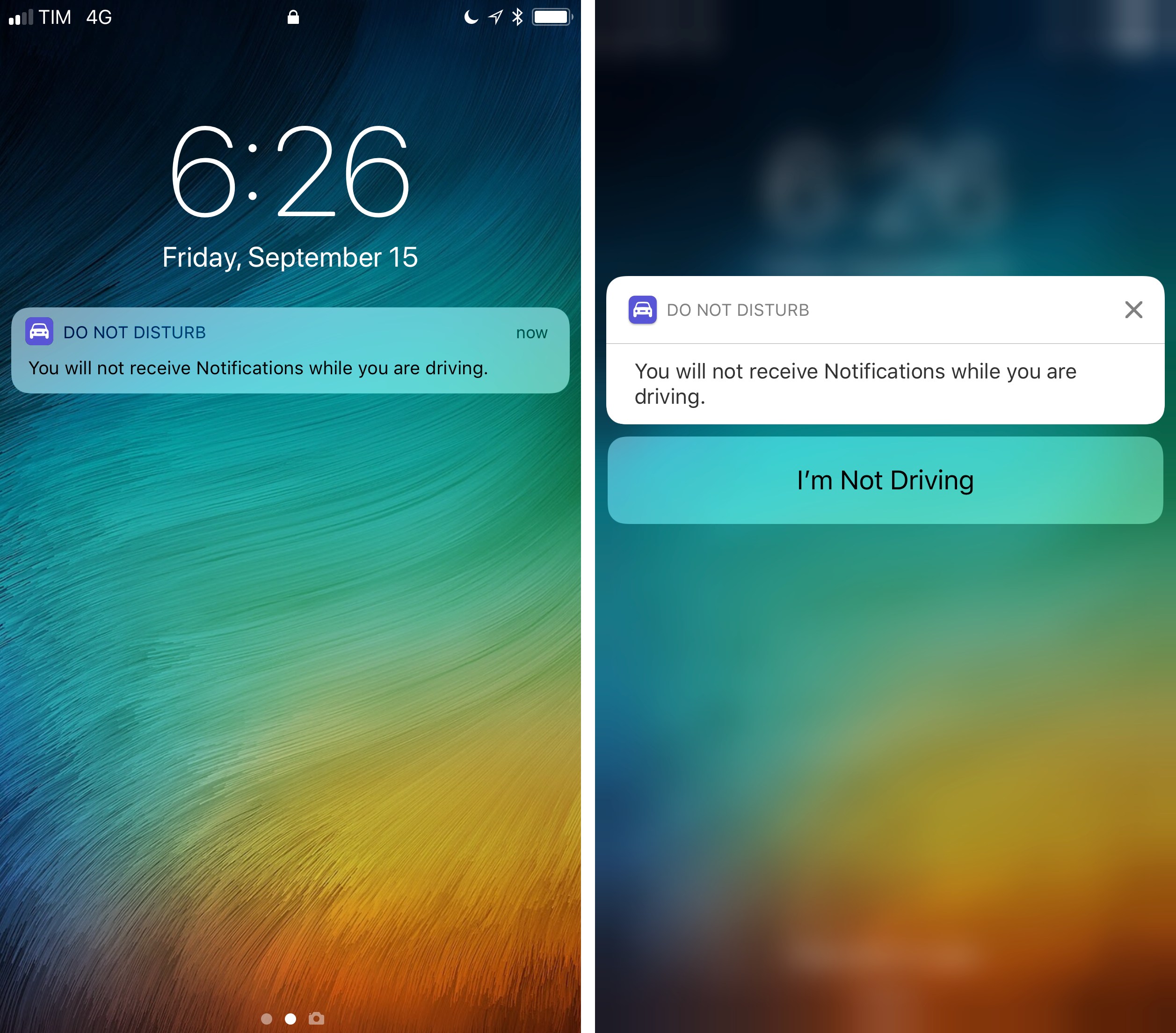

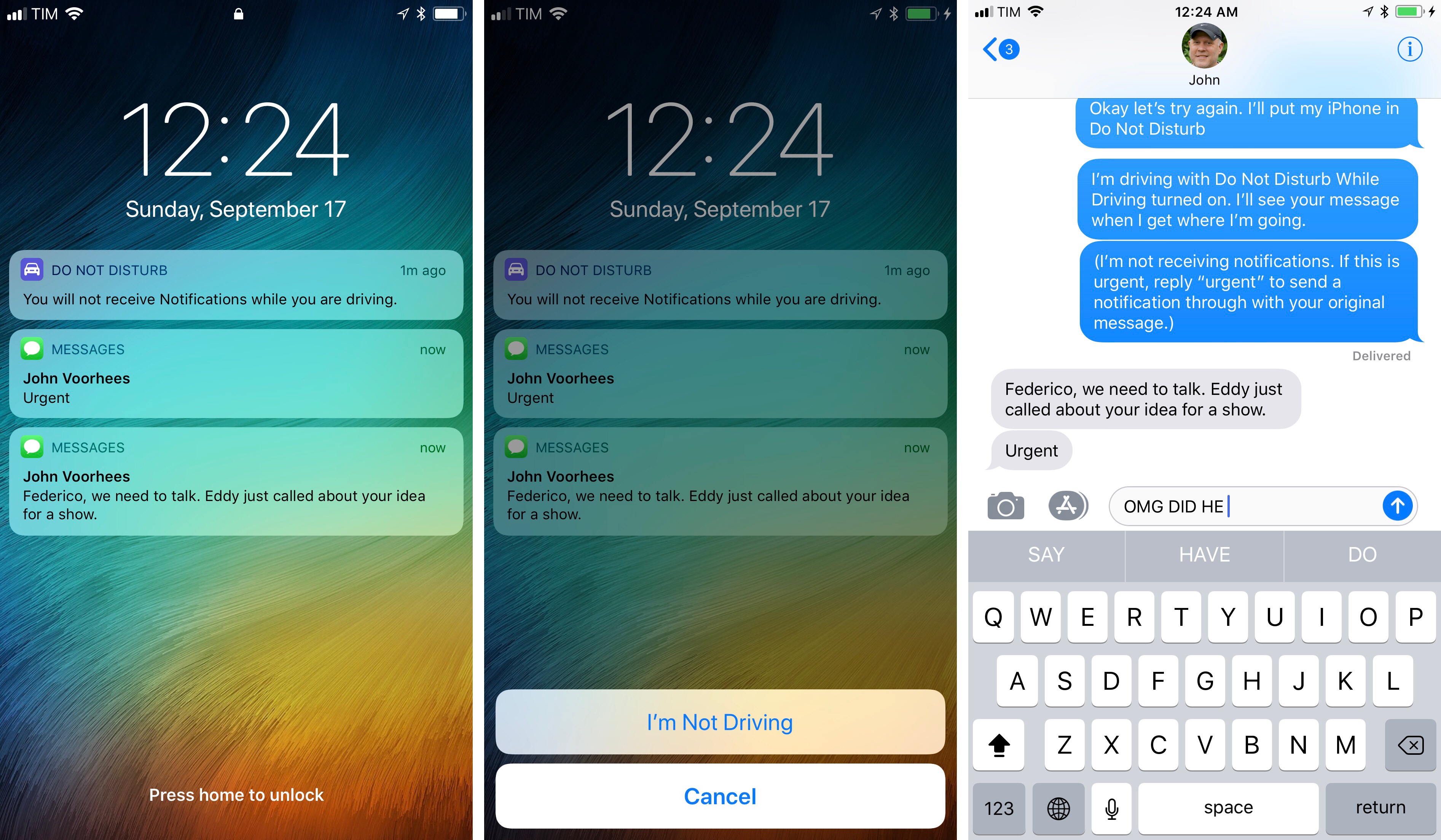

Do Not Disturb While Driving

To prevent drivers from being distracted by iPhone notifications at the wheel, Apple has extended Do Not Disturb with a smart ‘while driving’ option.

Do Not Disturb while driving can be activated manually from Control Center, or automatically engaged in two ways: when the iPhone is connected to hands-free car Bluetooth, or when a driving-like motion is detected by the accelerometer or through sensor data such as network triangulation. You can specify the behavior you prefer in Settings ⇾ Do Not Disturb. Car Bluetooth is the most accurate option as iOS 11 instantly knows when you’re in the car as soon as the device connects; automatic detection based on sensors might be slightly delayed when enabling and disabling the feature.

When Do Not Disturb while driving is active, the iPhone’s screen stays off so it doesn’t distract you with incoming notifications. If you try to wake the device while in driving mode, you’ll see a Do Not Disturb banner on the Lock screen telling you that notifications are being silenced. If you’re a passenger, or if Do Not Disturb has been accidentally enabled on a bus or train, you can unlock the iPhone by tapping I’m Not Driving from the Lock screen.

Existing methods to override Do Not Disturb still work with the ‘while driving’ flavor: you can enable phone calls from favorites and specific groups, allow a second call from the same person within three minutes, and receive emergency alerts, timers, and alarms. Even if the screen is off, you’ll retain access to Maps’ Lock screen navigation, hands-free Siri interactions, and controls for audio playback.

Your iPhone can also send an automatic message reply to recents, favorites, or all contacts when in Do Not Disturb while driving mode. The message can be customized in Settings if you want the auto-reply to be nicer than Apple’s default message. A contact can break through Do Not Disturb while driving by sending “urgent” as an additional message; Do Not Disturb will be temporarily disabled for that person, allowing you to read what’s happening.

Do Not Disturb while driving may seem like a relatively minor change to the daily iPhone experience, but it’s an important improvement that can quickly add up at scale. It won’t prevent drivers from looking at their iPhones outright – it’s an automatic deterrent that introduces just the right amount of friction to cut distractions and make people stay focused on the road.[77] Even if only a tiny percentage of the iPhone’s userbase looks at their screens less while driving, the feature will be a success for Apple and all of us.

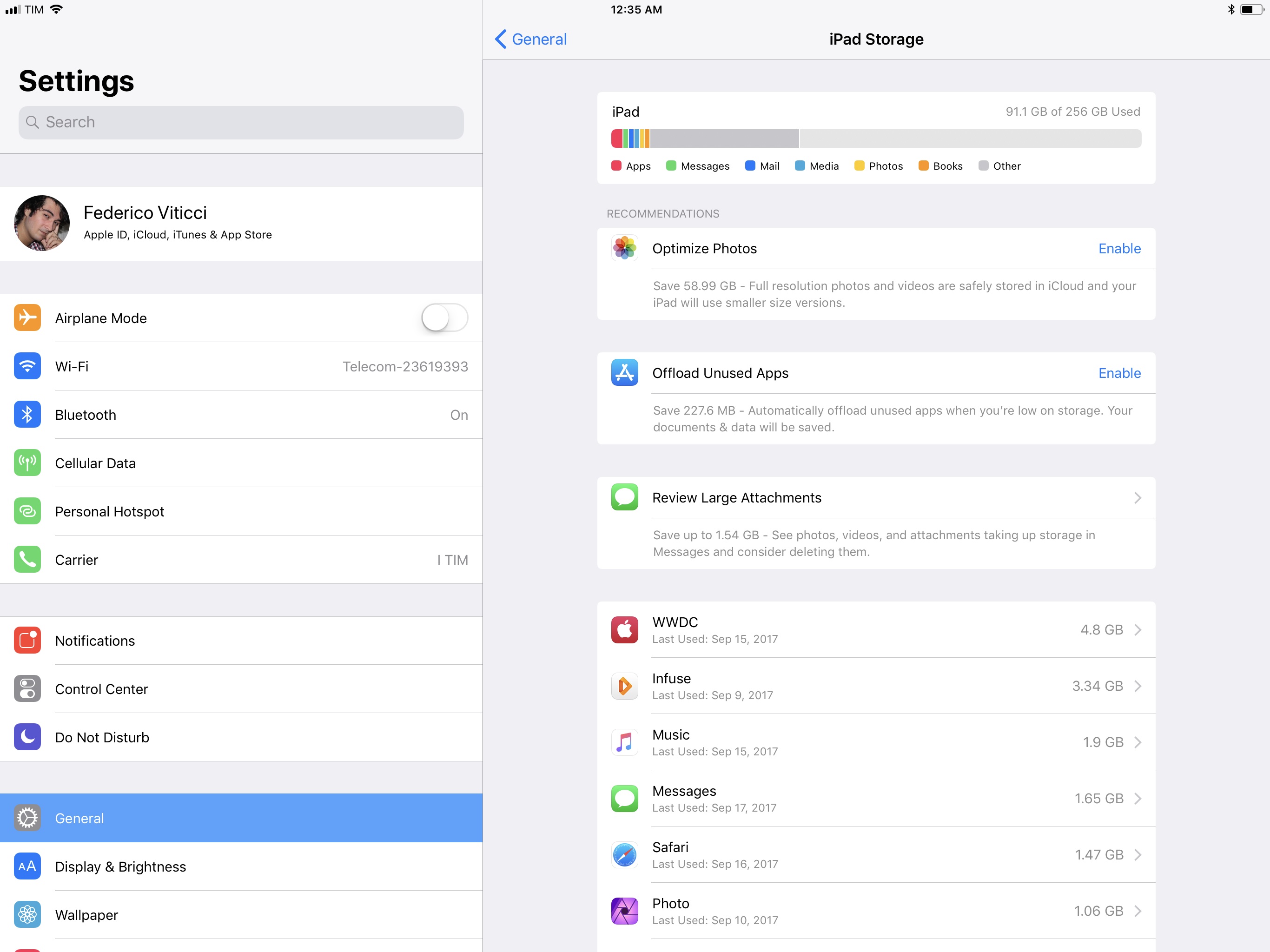

Storage Management

Storage settings have been revamped in iOS 11 with a detailed breakdown of categories of data that are using space on your device, as well as recommendations to reclaim storage. The storage bar at the top of the screen is reminiscent of iTunes’ presentation of an iPhone’s disk space: you’ll see colored sections for apps, photos, mail, media, and more.

Storage recommendations include tips such as reviewing large Messages attachments and, new in iOS 11, offloading apps. App offloading is the opposite of what Apple did with deletion of pre-installed apps last year: when you offload an app, you free the actual storage used by the app itself, but keep its documents and data. When the app is reinstalled, your data is restored as if the app never left.

Offloading is possible thanks to the App Store: while Apple’s built-in apps are “signed” into the iOS firmware and thus can never be truly deleted from the system, third-party apps can be redownloaded from the App Store, allowing iOS 11 to cache their data until the app’s binary is installed again. App offloading seems mostly aimed at games and other large apps that users may want to remove when they’re low on storage without losing their data. However, given how popular apps have ballooned in size over the past couple of years, offloading could be more useful than I originally imagined.

You can offload individual apps from Settings ⇾ General ⇾ Storage. When an app is offloaded, its Home screen icon is dimmed and gains a download glyph next to its name. Tapping the icon redownloads the app from the Store; when it’s ready, you’ll be able to launch the app and see your documents and data as you left them before offloading.

While offloading is a nice workaround to regain storage, it’s dependent on an app’s availability on the App Store. You should think carefully about offloading apps and games you care about: if, for some reason, they’re removed from sale while they’re offloaded from your device, you won’t be able to bring them back.

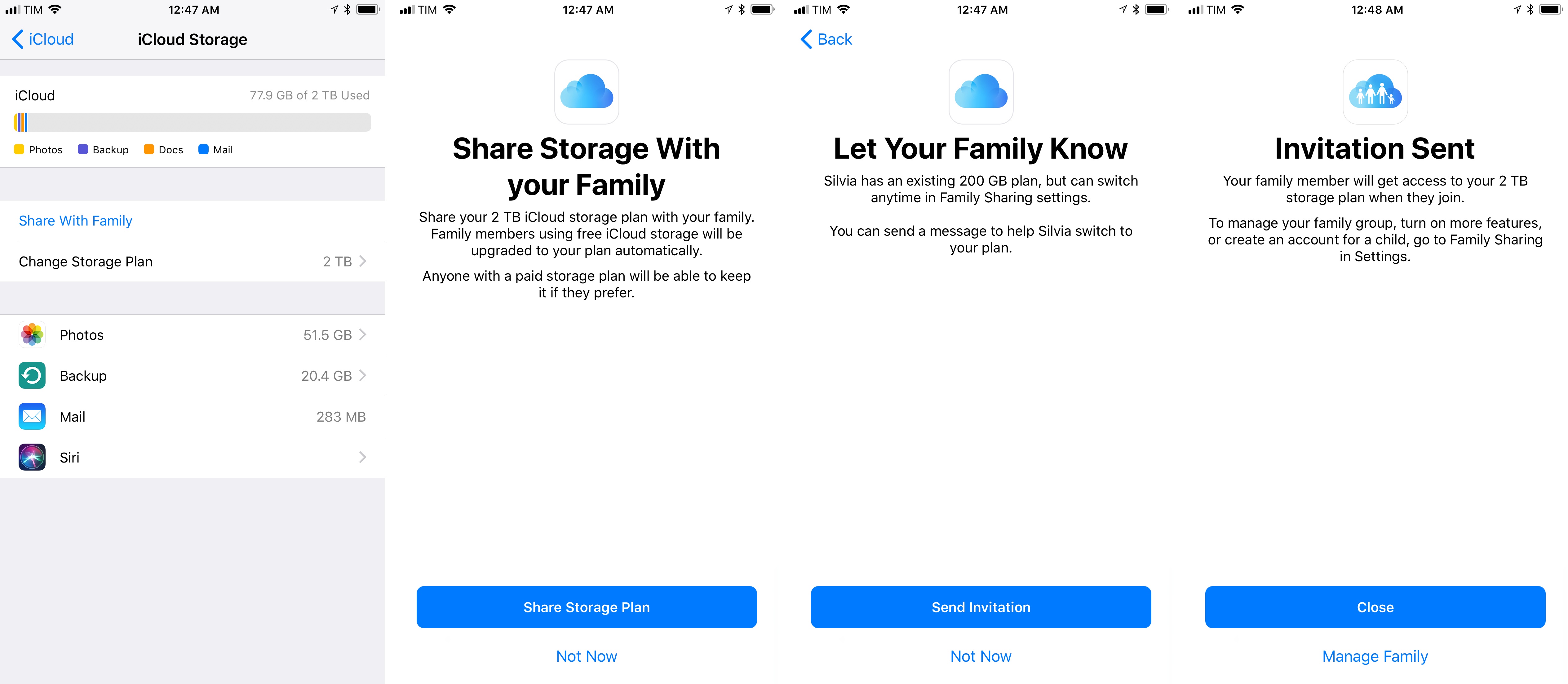

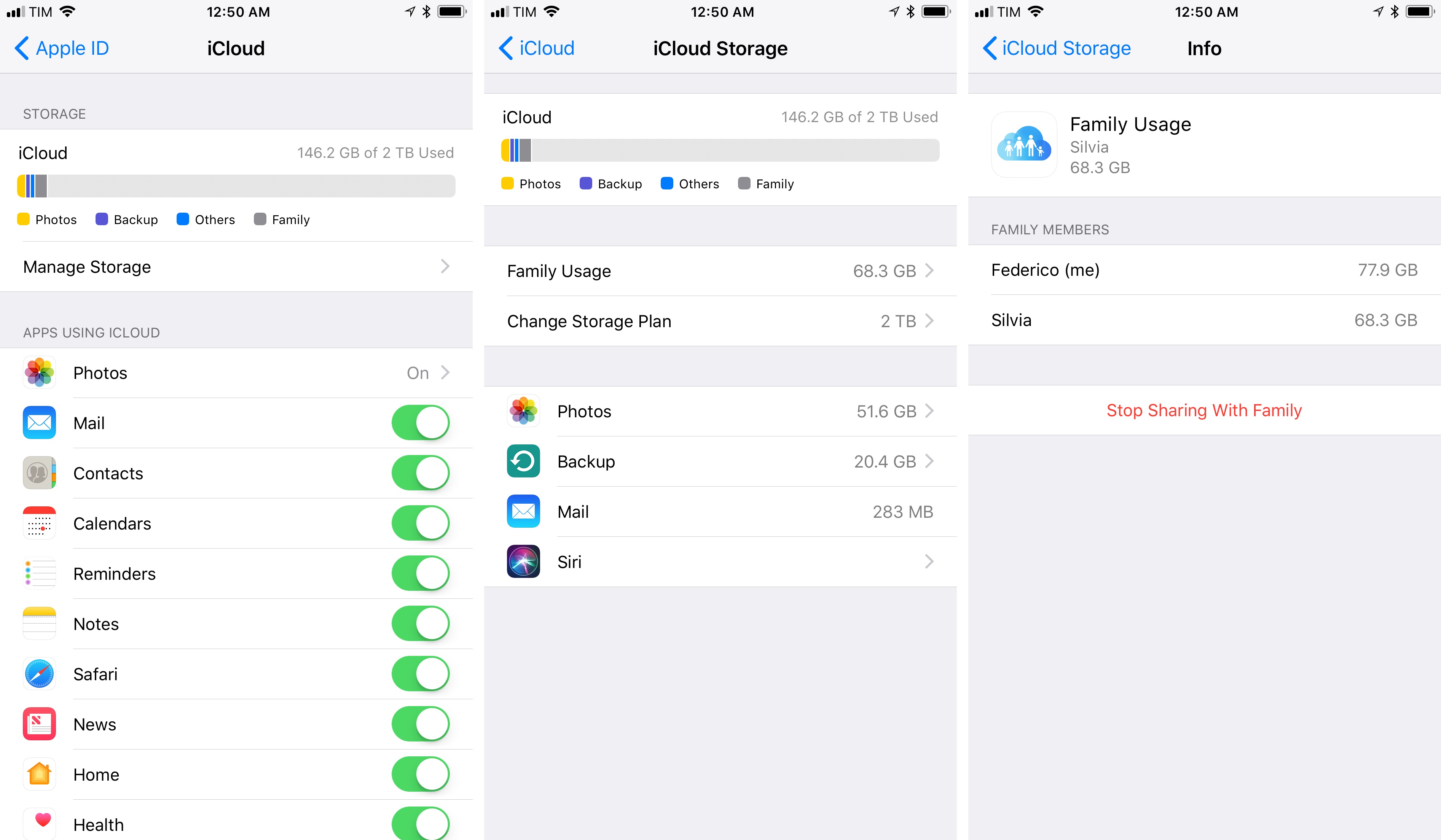

Also new in the storage department, iOS 11 allows you to share an iCloud storage plan with your family. The process is simple and unobtrusive: a family admin can invite other members to share the same paid plan, and iOS will intelligently allocate storage between them. Shared iCloud storage doesn’t work like, say, partitioning a drive on a Mac: you don’t preemptively choose how much storage to give to other people; iOS 11 and iCloud Drive take care of it automatically.

Once you invite a family member, they can downgrade their existing personal plan to free and start using the already-paid-for iCloud storage you’re sharing. At any point, you can go into Settings ⇾ iCloud ⇾ Manage Storage ⇾ Family Usage to check how much storage each member is using on iCloud and, optionally, stop sharing storage with your family.

Unfortunately, this is all there is to shared iCloud storage in iOS 11: there’s still no shared library in Photos and no special Family iCloud Drive in Files. Apple still has a long way to go in terms of Family Sharing and advanced controls on iOS. Shared iCloud storage is a great deal for families (we’ve enabled it in our household), but it needs deeper integration with the rest of the system.

Emergency SOS

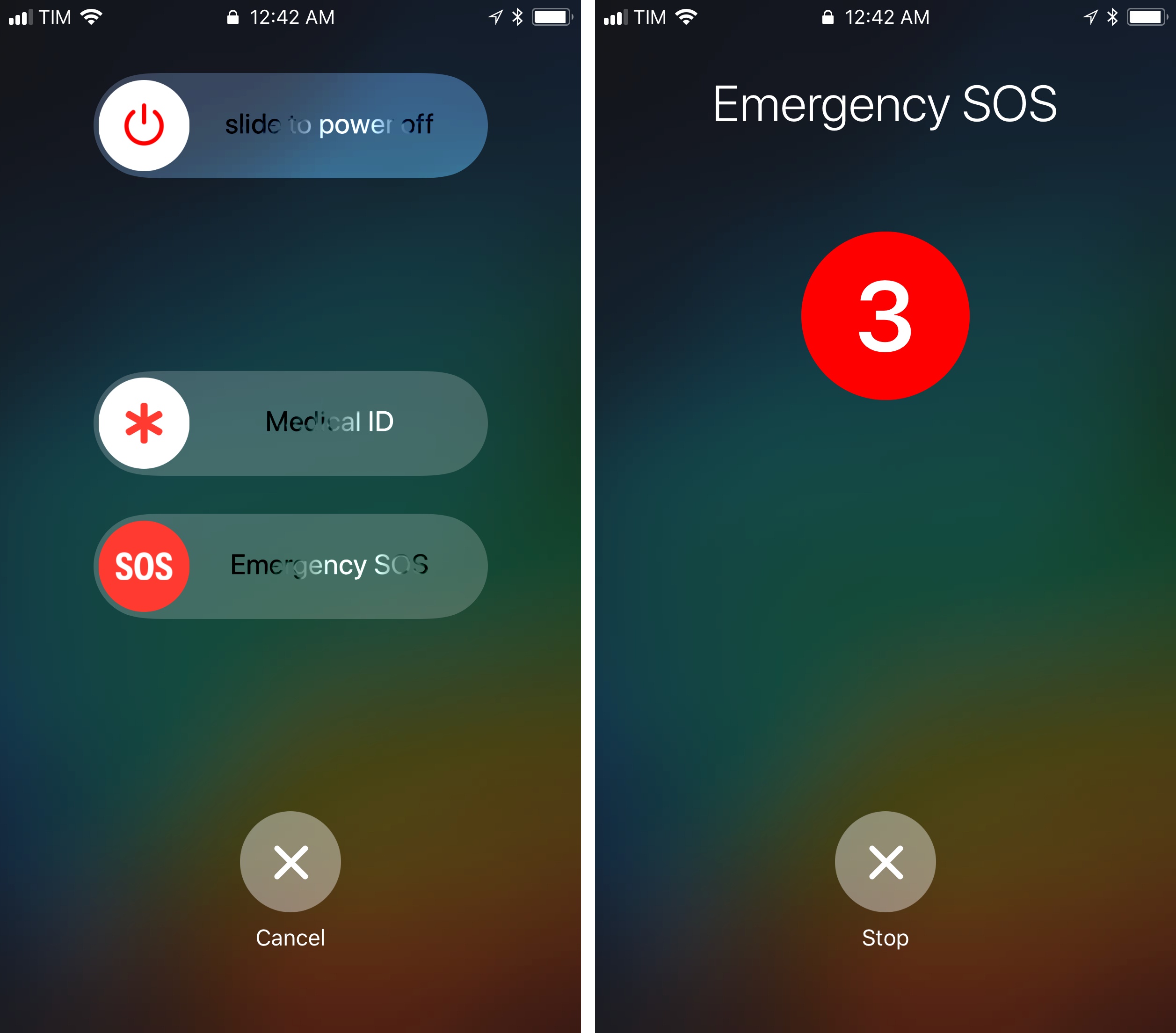

As in watchOS 3, you can now rapidly press the iPhone’s power button five times to engage Emergency SOS mode. This feature can be used to quickly call emergency services, bring up your Medical ID card, and notify your emergency contacts with an SMS that contains your location on a map.

In Settings ⇾ Emergency SOS, you can enable an Auto Call option to automatically dial an emergency service in any given region; by default, an emergency auto call plays a loud siren sound that counts down to three before dialing. If Location Services are disabled, Emergency SOS temporarily enables them when calling or notifying your contacts; if necessary, iOS 11 may continue to monitor your location after Emergency SOS has been disabled, which you can manually turn off if no longer necessary. A list of your previously shared locations is available in Settings.

In addition, Emergency SOS automatically locks your iPhone and disables Touch ID or Face ID as soon as it’s activated. As others have noted, this makes Emergency SOS also useful for situations where you may want to prevent being compelled to unlock your device with your fingerprint or face. You can also enable Emergency SOS discreetly while the iPhone is in your pocket: after clicking the power button five times, you’ll feel a haptic tap that indicates Emergency SOS mode is enabled and the device is locked. Then, you can dismiss Emergency SOS and the iPhone will require a passcode the next time you try to unlock it.

Emergency SOS is one of those functionalities you hope you never have to use, but which is great to have when needed. Apple’s design of Emergency SOS is thoughtful; like Do Not Disturb while driving, this option is deeply integrated with iOS.

And More…

It wouldn’t be a major iOS release without a collection of other miscellaneous changes and improvements.

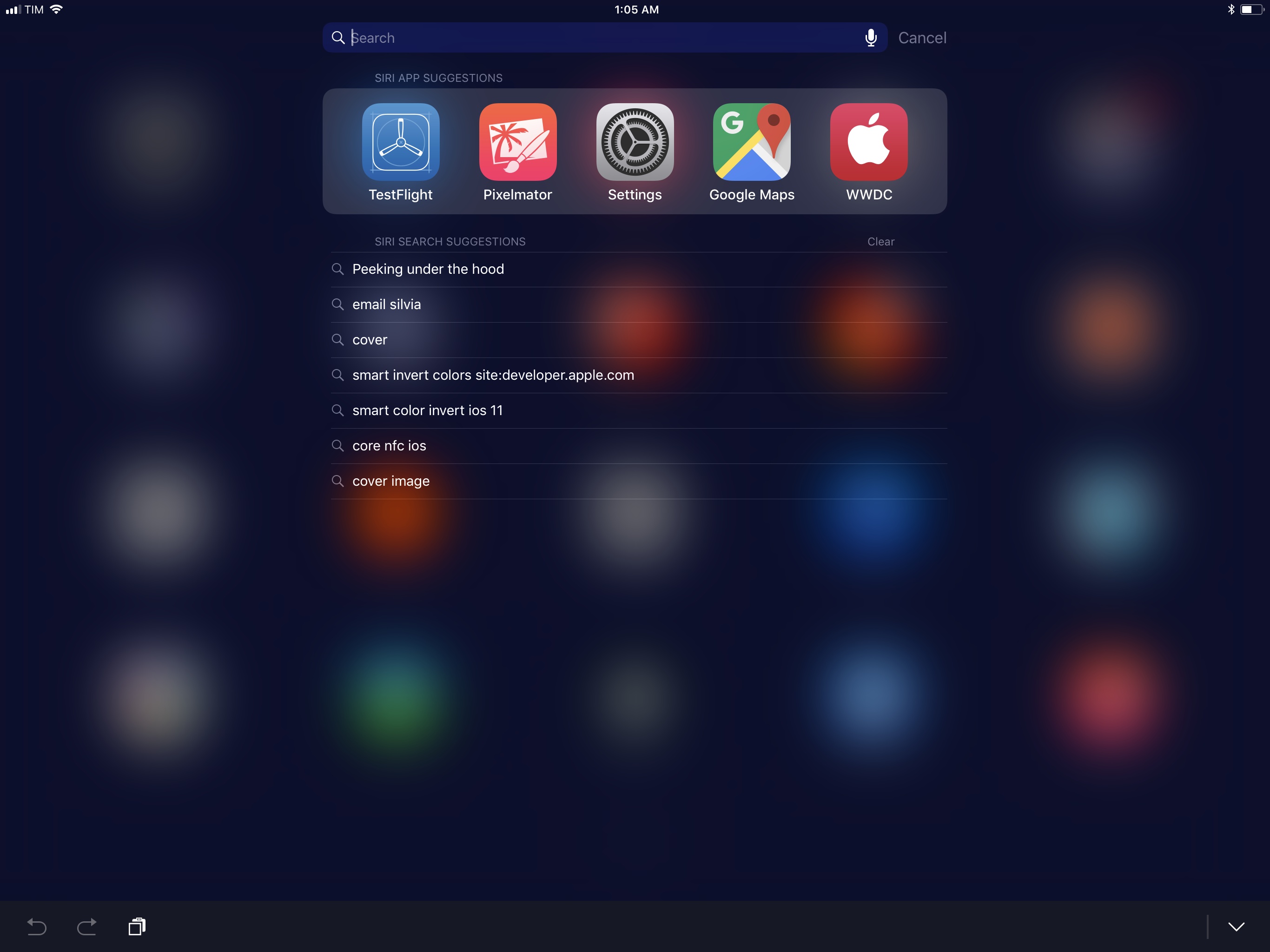

Spotlight. iOS’ native search feature has been updated with a machine learning-based ranker that is personalized and adaptive. Based on Core ML, Spotlight’s new ranker is trained in the cloud using features it learns on-device; details are private and do not include actual results or personal search queries. This is what constitutes Apple’s privacy-friendly way of applying machine learning to iOS: data sent to the cloud is only transmitted if you opt into analytics and after it’s gone through privacy-preserving techniques, such as Differential Privacy.

In practice, you’ll be seeing a lot more suggestions as soon as you start typing in iOS 11’s Spotlight. These are mostly based on text you’ve recently typed in apps; you can tap them to reopen a note, email thread, or iMessage. At this point, as soon as I start typing in Spotlight, I usually find what I’m looking for across hundreds of apps on my device.

Also worth noting: you can now clear previous searches from Spotlight, and on iPad you can drag results out of Spotlight to drop them somewhere else.

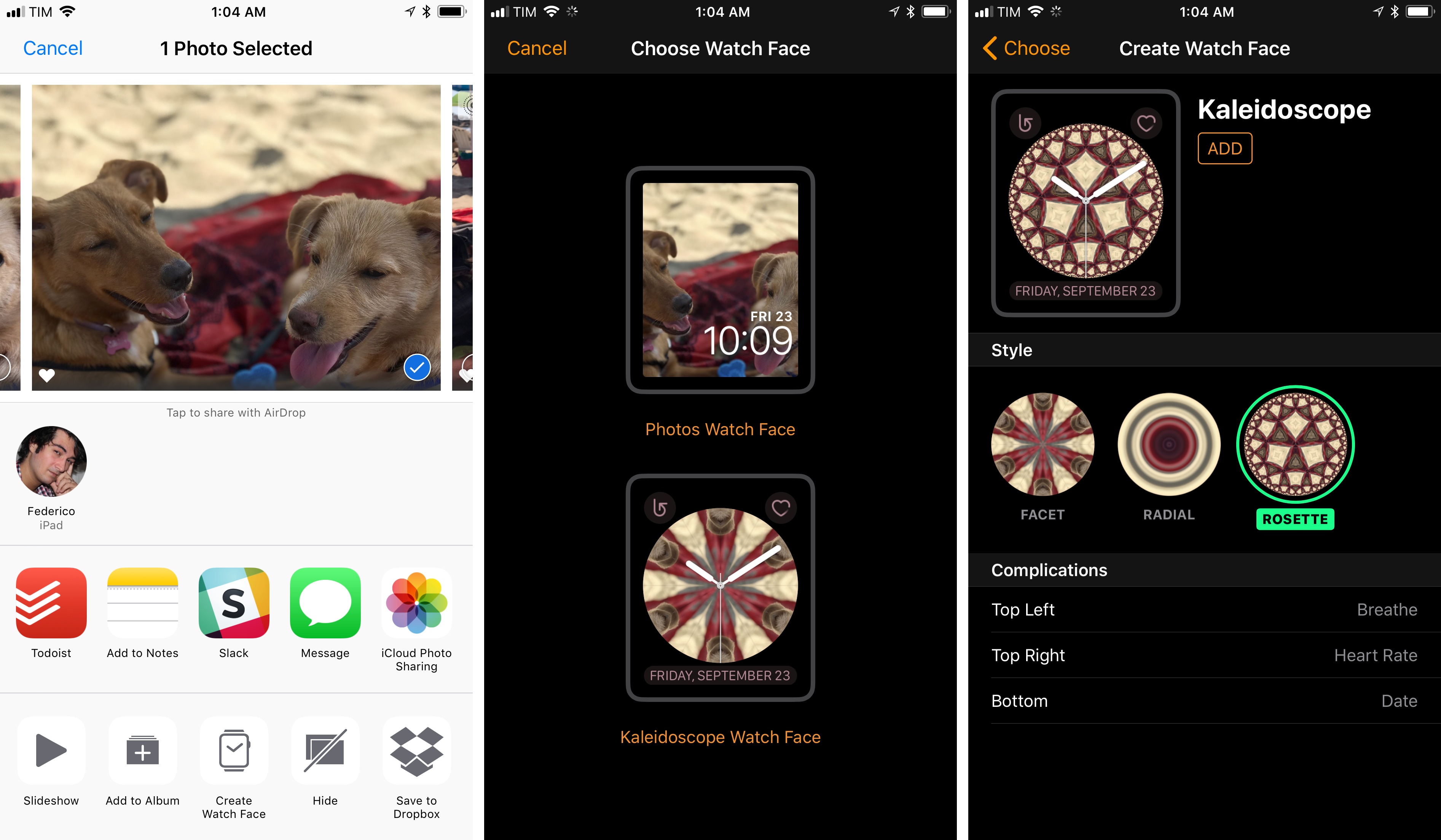

Create Watch Face. There’s a new extension to create a watch face from a photo in the share sheet. The extension can save two types of watch faces for watchOS 4: a traditional Photo watch face, or a Kaleidoscope one.

In both cases, you’ll be able to set various style and complication settings from the extension itself. In the Kaleidoscope face, you can preview three styles – facet, radial, and rosette – before saving the result to the My Faces section of the Watch app.

Social Accounts. In iOS 11, Apple has removed built-in integrations for social networks like Twitter and Facebook. The move comes with some repercussions: Safari no longer offers a Shared Links feature[78] as it can’t see links from your Twitter timeline (it’s been replaced by your browsing history), and third-party apps that accessed your Twitter and Facebook accounts will have to find another way to authenticate your profiles. This includes Apple’s Calendar, which can’t automatically sync Facebook Events anymore in iOS 11.

In practice, most apps will likely implement Safari View Controller and SFAuthenticationSession to handle social logins. I’ve tried a handful of apps that had to remove native Twitter integration in favor of Safari-based login flows, and the transition has gone well. The old social framework predated the era of 2FA and Safari View Controller, and I think Apple made the right choice in removing all privileged social services from Settings.
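A Safari-based login flow of the kind mentioned above might look like the sketch below. The class name `SocialLogin`, the authorization URL, and the `exampleapp` callback scheme are all placeholders; a real service would supply its own OAuth endpoint. Note that Apple later replaced `SFAuthenticationSession` with a newer API, so this reflects the iOS 11 approach only.

```swift
import SafariServices

// Hypothetical login helper; the URL and callback scheme are placeholders.
final class SocialLogin {
    // The session must be kept alive until its completion handler runs.
    private var session: SFAuthenticationSession?

    func start(completion: @escaping (URL?) -> Void) {
        guard let authURL = URL(string: "https://example.com/oauth/authorize") else {
            completion(nil)
            return
        }
        let session = SFAuthenticationSession(url: authURL, callbackURLScheme: "exampleapp") { callbackURL, error in
            // The callback URL carries whatever token or code the service returns.
            completion(error == nil ? callbackURL : nil)
        }
        self.session = session
        _ = session.start()
    }
}
```

Because the session shares cookies with Safari, a user who is already signed in on the web may not need to type a password again.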

3D Touch. Not a lot has changed for 3D Touch in iOS 11. You can now press on sections of the Look Up screen to preview word definitions, and you can share URLs from Messages conversations by swiping their peek preview upwards. Apple also removed the ability to press on the left edge of the iPhone’s screen to open the app switcher – likely to get users accustomed to the idea of swiping a Home indicator to enter multitasking on the iPhone X.

Full-swipe actions. Mail’s full-swipe gestures – the actions that allow you to quickly archive or delete a message – are now an API that apps can implement as well. Developers can use a custom color, a glyph, and a title for each action, and they can replace an action with a related one – such as Favorite and Unfavorite for a swipe on the same item. As an additional perk of this API, the iPhone plays haptic feedback whenever an action is activated via a swipe.
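The Favorite and Unfavorite example could be sketched like this in a table view. The controller name `ItemsViewController` and the in-memory `favorites` set are hypothetical; the swipe configuration types are the ones UIKit added in iOS 11.

```swift
import UIKit

// Hypothetical table view controller showing a Favorite/Unfavorite swipe.
final class ItemsViewController: UITableViewController {
    private var favorites = Set<IndexPath>()

    override func tableView(_ tableView: UITableView,
                            trailingSwipeActionsConfigurationForRowAt indexPath: IndexPath)
        -> UISwipeActionsConfiguration? {
        let isFavorite = favorites.contains(indexPath)
        let title = isFavorite ? "Unfavorite" : "Favorite"

        let toggle = UIContextualAction(style: .normal, title: title) { [weak self] _, _, done in
            if isFavorite {
                self?.favorites.remove(indexPath)
            } else {
                self?.favorites.insert(indexPath)
            }
            done(true) // Tells UIKit the action succeeded.
        }
        toggle.backgroundColor = .orange

        let configuration = UISwipeActionsConfiguration(actions: [toggle])
        // A full swipe triggers the first action without a second tap.
        configuration.performsFirstActionWithFullSwipe = true
        return configuration
    }
}
```

An action can also carry an `image` in place of, or alongside, its title.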

Thermal state detection. Speaking of interesting new APIs, apps can now detect the current thermal state of the system. This was added so that at higher thermal states, apps can reduce usage of system resources while still maintaining some functionality. This way, the system doesn’t have to intervene and pause everything until the device cools down.
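As a sketch of how an app might respond, the snippet below maps each thermal state to a level of work and listens for changes. The `WorkLevel` type and the specific mapping are assumptions for illustration; only the thermal state property and its notification come from the system.

```swift
import Foundation

// Hypothetical policy type; the case names are illustrative.
enum WorkLevel { case full, reduced, minimal }

// Maps the system's thermal state to how much work the app should attempt.
func workLevel(for state: ProcessInfo.ThermalState) -> WorkLevel {
    switch state {
    case .nominal, .fair: return .full
    case .serious: return .reduced
    case .critical: return .minimal
    @unknown default: return .reduced
    }
}

// Re-evaluate whenever the system reports a change.
let observer = NotificationCenter.default.addObserver(
    forName: ProcessInfo.thermalStateDidChangeNotification,
    object: nil,
    queue: .main
) { _ in
    let level = workLevel(for: ProcessInfo.processInfo.thermalState)
    print("Adjusting work level to \(level)")
}
```

A game, for example, might lower its frame rate at the reduced level and pause background downloads at the minimal one.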

Passcode requirement for computer pairing. When connecting a device via USB to a computer, in addition to pressing the Trust button on a dedicated dialog, iOS 11 requires entering a passcode. This extra layer of security should prevent unauthorized access to private user data via desktop backup apps and other forensic tools.

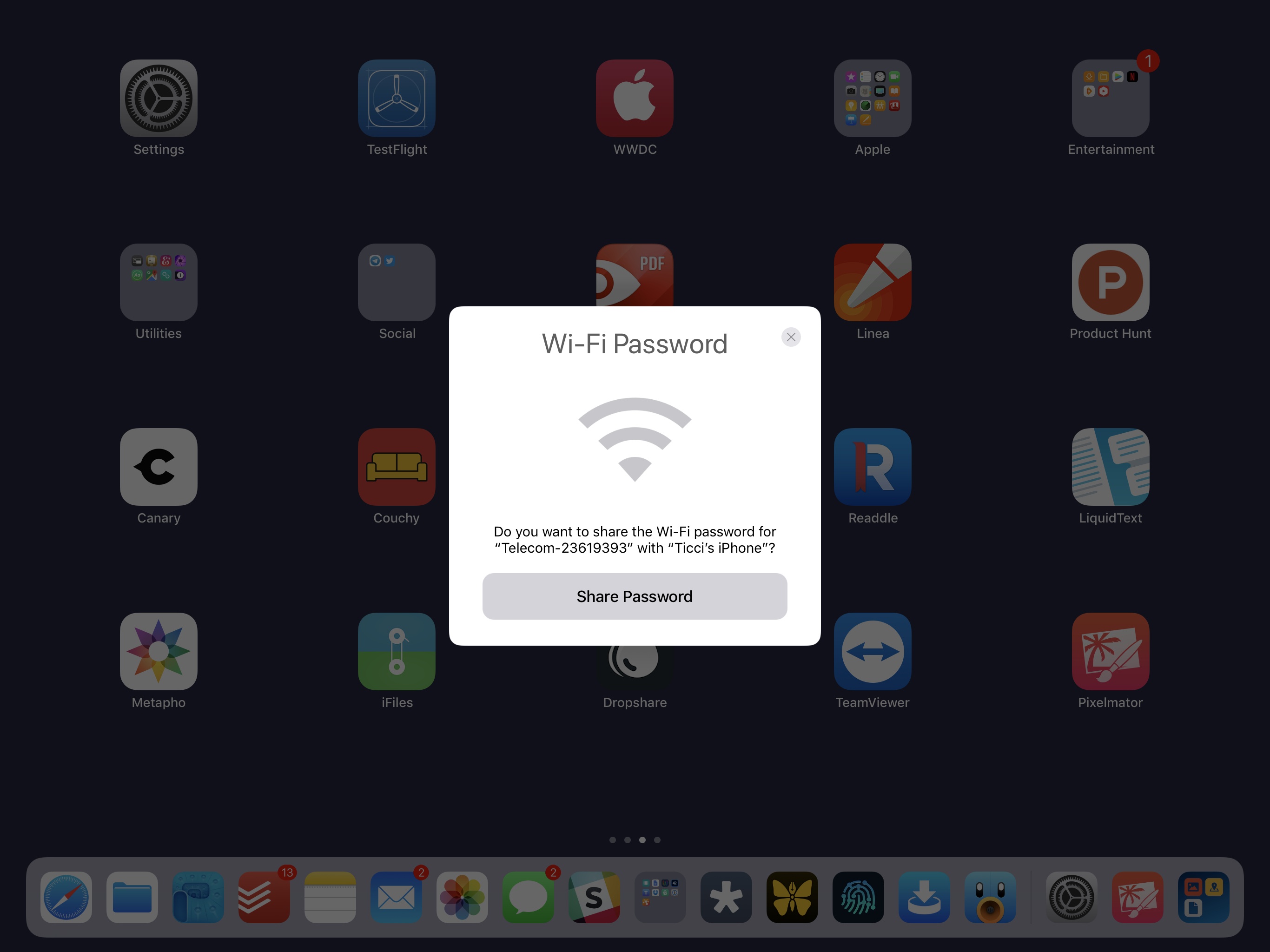

Wi-Fi sharing. We’ve all been in this situation: a friend comes over and asks for your Wi-Fi password, which is either too complex or embarrassing to reveal. iOS 11 removes the social awkwardness of Wi-Fi password sharing with the same tap-to-setup popup card employed by AirPods and Automatic Setup: if a new device is trying to connect to your network, you can hold your iPhone or iPad next to it to instantly share the password.

For this to work, both devices have to be running iOS 11 or macOS High Sierra, the new device has to be on the Wi-Fi password prompt in Settings, and your device has to be unlocked and nearby. In addition, the recipient has to be someone already in your Contacts. After the Wi-Fi sharing card pops up, all you have to do is confirm the connection and the password will be transferred securely with no copy and paste involved. I look forward to demoing this feature to my friends over the next few months.

New settings. The ever-growing Settings app offers a bevy of new controls worth mentioning. Some of the highlights:

- In Settings ⇾ General, you’ll find a virtual Shut Down button – useful for devices with an unresponsive power button.

- On the iPad, you can navigate sections of Settings with the Up/Down arrow keys.

- Background App Refresh now includes options for Wi-Fi, Wi-Fi and Cellular, or to turn it off entirely.

- Resetting a device now presents a new screen with toggles to keep copies of Contacts, News, Reminders, and Safari data locally.

- Auto-Brightness has been moved to Settings ⇾ General ⇾ Accessibility ⇾ Display Accommodations.

- And finally, iPad users can visit Settings ⇾ General ⇾ Multitasking & Dock to control other aspects of the multitasking experience, such as Picture in Picture, Slide Over, and Split View.

AirPods controls. iOS 11 allows the left and right AirPods to have separate controls for resuming playback, skipping to previous/next track, and activating Siri. I wish there were an easier way to open AirPods settings (they’re still buried in Settings ⇾ Bluetooth ⇾ AirPods), but this is everything I wanted from my favorite Apple device of 2016.

Accessibility. There are a number of noteworthy accessibility changes in iOS 11.

In Settings ⇾ General ⇾ Accessibility ⇾ Call Audio Routing, you can enable an Auto-Answer Calls feature that will automatically answer a call after a certain amount of time (by default, 3 seconds).

VoiceOver is getting smarter with image descriptions: with a three-finger tap on an image, iOS 11 will be able to describe its general setting and tone (including lighting and facial expressions) as well as detect text in the image and read it aloud. This is a fantastic change for visually impaired users and it demonstrates iOS’ intelligence applied to accessibility for the common good.

The Button Shapes setting has a new look, which isn’t a shape at all, but a simple underline. I don’t understand why Apple isn’t using actual button outlines, which should be easier to spot at a glance.

There’s also a new Smart Invert Colors functionality, which, unlike the old color invert option, does a better job of figuring out elements like images and media that shouldn’t be inverted.

What’s smart about this feature is that developers now have an API to fine-tune their app interfaces for color inversion, which results in a more pleasing aesthetic. Combined with the triple-click Home button accessibility shortcut (which can also be easily accessed from Control Center), iOS 11’s Smart Invert Colors feature is the closest we can get to a system-wide dark mode on iOS today.
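The fine-tuning mentioned above comes down to a single property on views. In this minimal sketch, the helper name `makePhotoView(image:)` is hypothetical; it simply marks an image view so that Smart Invert leaves its contents alone.

```swift
import UIKit

// Hypothetical helper: keeps a photo's original colors under Smart Invert.
func makePhotoView(image: UIImage?) -> UIImageView {
    let view = UIImageView(image: image)
    // Opts this view (and its subviews) out of color inversion.
    view.accessibilityIgnoresInvertColors = true
    return view
}
```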

[72] We'll have a full review of MeasureKit on MacStories later this week.

[73] This step is common to ARKit apps, although developers can visualize it however they prefer.

[74] Which is used by Notes' document scanner.

[75] Lemmatization, part of speech, and named entity recognition are only available for English, French, Italian, German, Spanish, Portuguese, Russian, and Turkish. According to Apple, updates to the language models will be pushed on a regular basis.

[76] I had to use an Android smartphone to write sample data to some cheap NFC stickers I bought on Amazon.

[77] I’d like to see contextual Do Not Disturb extend to other common activities and scenarios – such as meetings, workouts, and watching video.