Siri

“A delayed game is eventually good, but a rushed game is forever bad”, Nintendo’s Shigeru Miyamoto once quipped.

Unlike the console games Miyamoto was concerned about, modern software and services can always be improved over time, but Apple knows how much damage a rushed product can do, both through its missteps and through the perception it leaves behind. With iOS 10’s SiriKit, the company has taken a different, more prudent route.

Ever since the company debuted its virtual assistant in 2011, it has been clear that Siri’s full potential – the rise of a fourth interface – could only be unlocked by extending it to third-party apps. And yet, as Siri’s built-in functionalities grew, a developer SDK remained suspiciously absent from the roster of year-over-year improvements. While others shipped or demoed voice-controlled assistants enriched by app integrations, Siri remained limited to Apple’s sanctioned services.

As top Apple executives recently revealed, however, work on Siri has continued apace behind the scenes, including the rollout of machine learning techniques that cut error rates in half. In iOS 10, Apple is confident that the Siri backend is strong and flexible enough to be opened up to third-party developers through extensions. At the same time, Apple is in no rush to support every kind of app in this first release, and it is taking a cautious approach with a few notable limitations.

Developers in iOS 10 can integrate their apps with Siri through SiriKit. The framework has been designed to let Siri handle natural language processing automatically, so developers only need to focus on their extensions and apps.

At a high level, SiriKit understands domains – categories of tasks that can be verbally invoked by the user. In iOS 10, apps can integrate with 7 SiriKit domains[37]:

- Audio and video calling: initiate a call or search the user’s call history;

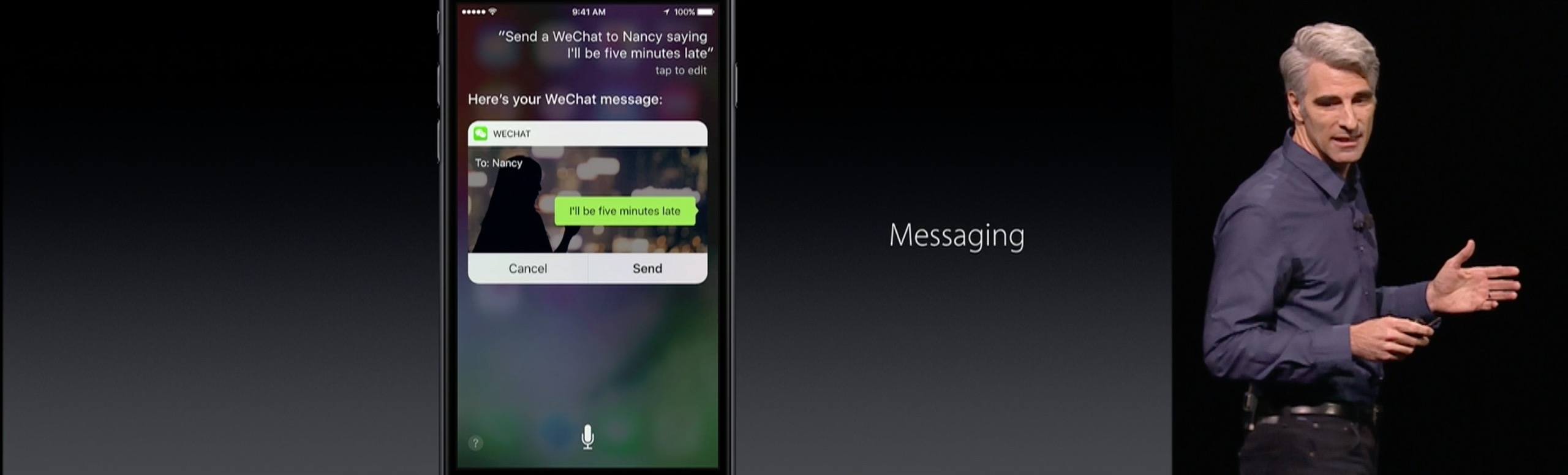

- Messaging: send messages and search a user’s message history;

- Payments: send and request payments;

- Photos: search photos or play slideshows;

- Ride booking: shared by Maps and Siri. Siri can book a ride or get the status of a booked ride, and Maps can also display a list of available rides for an area;

- Workouts: start, end, and manage workouts;

- CarPlay: manage the vehicle environment by adjusting settings such as climate control, radio stations, the defroster, and more.

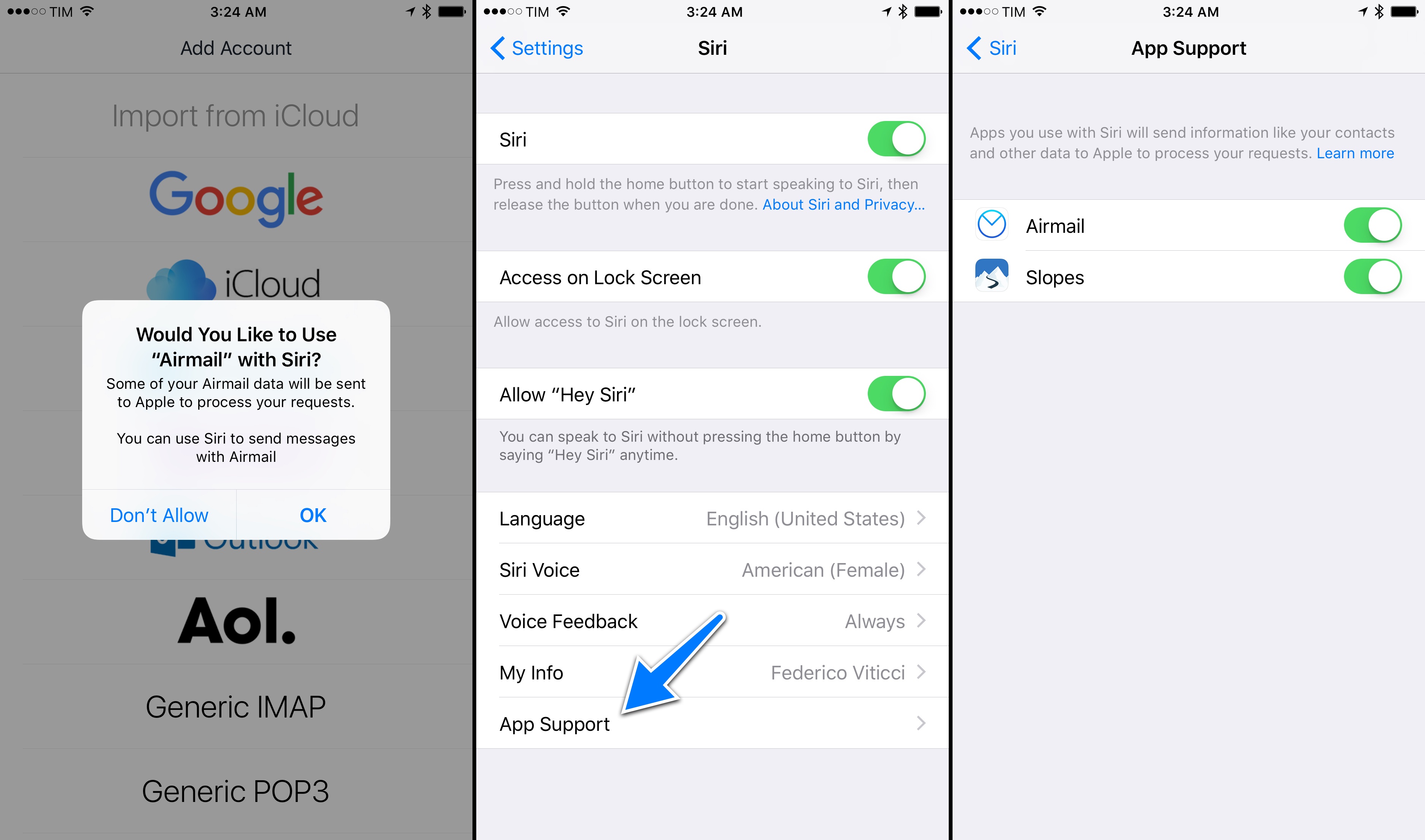

Inside a domain, Siri deals with intents. An intent is an action that Siri asks an app to perform. It represents the user’s intention, and it can have properties that indicate parameters – like the location of a photo or the date a message was received. An app can support multiple intents within the same domain, and it always needs to ask the user for permission to integrate with Siri.

SiriKit is easy to grasp if you picture it as a set of Chinese boxes: the outer box is a domain, inside of which there are multiple types of actions to be performed, and each action can be marked up with properties. In this structure, Apple isn’t asking developers to parse natural language for all the ways a question can be phrased. They’re giving developers empty boxes that have to be filled with data in the right places.
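To make the nesting concrete, here is a minimal Swift sketch of that structure. The `Domain` and `Intent` types are hypothetical stand-ins written for illustration. They are not the classes of Apple’s Intents framework, which models each intent as its own class with typed properties. The only point is that Siri delivers an already-parsed request, and the app reads values out of named slots.

```swift
// Hypothetical model of SiriKit's nested structure; not the Intents framework.

/// Outer box: a category of tasks Siri understands.
enum Domain: String {
    case calling, messaging, payments, photos, rideBooking, workouts, carPlay
}

/// Inner box: one action inside a domain, with named slots (properties)
/// that Siri fills in from what the user said.
struct Intent {
    let domain: Domain
    let name: String
    var properties: [String: String] = [:]

    /// Reads one slot; nil means Siri did not hear a value for it.
    func value(for property: String) -> String? {
        return properties[property]
    }
}

// "Tell Myke I'm going to be late using [app name]" arrives pre-parsed:
let request = Intent(
    domain: .messaging,
    name: "SendMessage",
    properties: ["recipient": "Myke", "content": "I'm going to be late"]
)

print(request.domain.rawValue, request.name)
print(request.value(for: "recipient") ?? "no recipient")
```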

Imagine a messaging app that wants to support Siri to let users send messages via voice. Once SiriKit is implemented, a user would need to say something like “Tell Myke I’m going to be late using [app name]”, and the message would be composed in Siri, previewed visually or spoken aloud, and then passed to the app to be sent to Myke.

This basic flow of Siri as a language interpreter and middleman between voice and apps is the same for all domains and intents available in SiriKit. Effectively, SiriKit is a framework where app extensions fill in the blanks of what Siri understood.

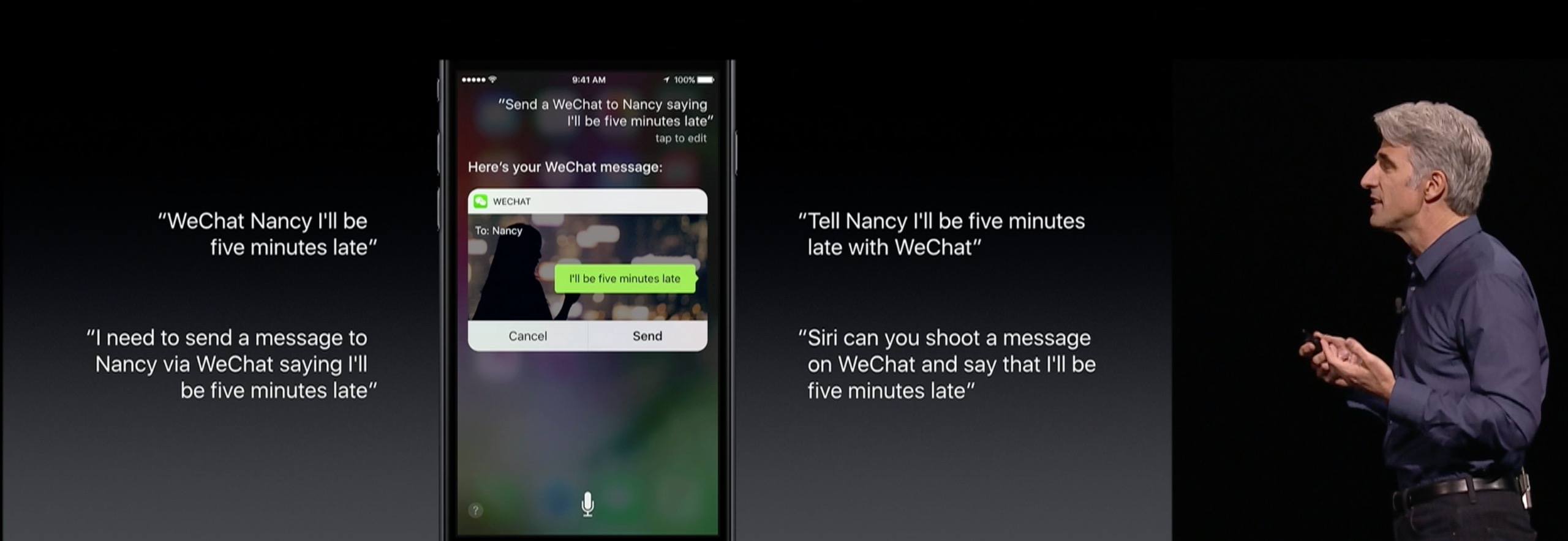

The syntax required by SiriKit shows both the rigidity and the versatility of the framework. To summon an intent from a particular app, users have to say its name. However, thanks to Siri’s natural language capabilities, developers don’t have to build support for multiple ways of asking the same question.

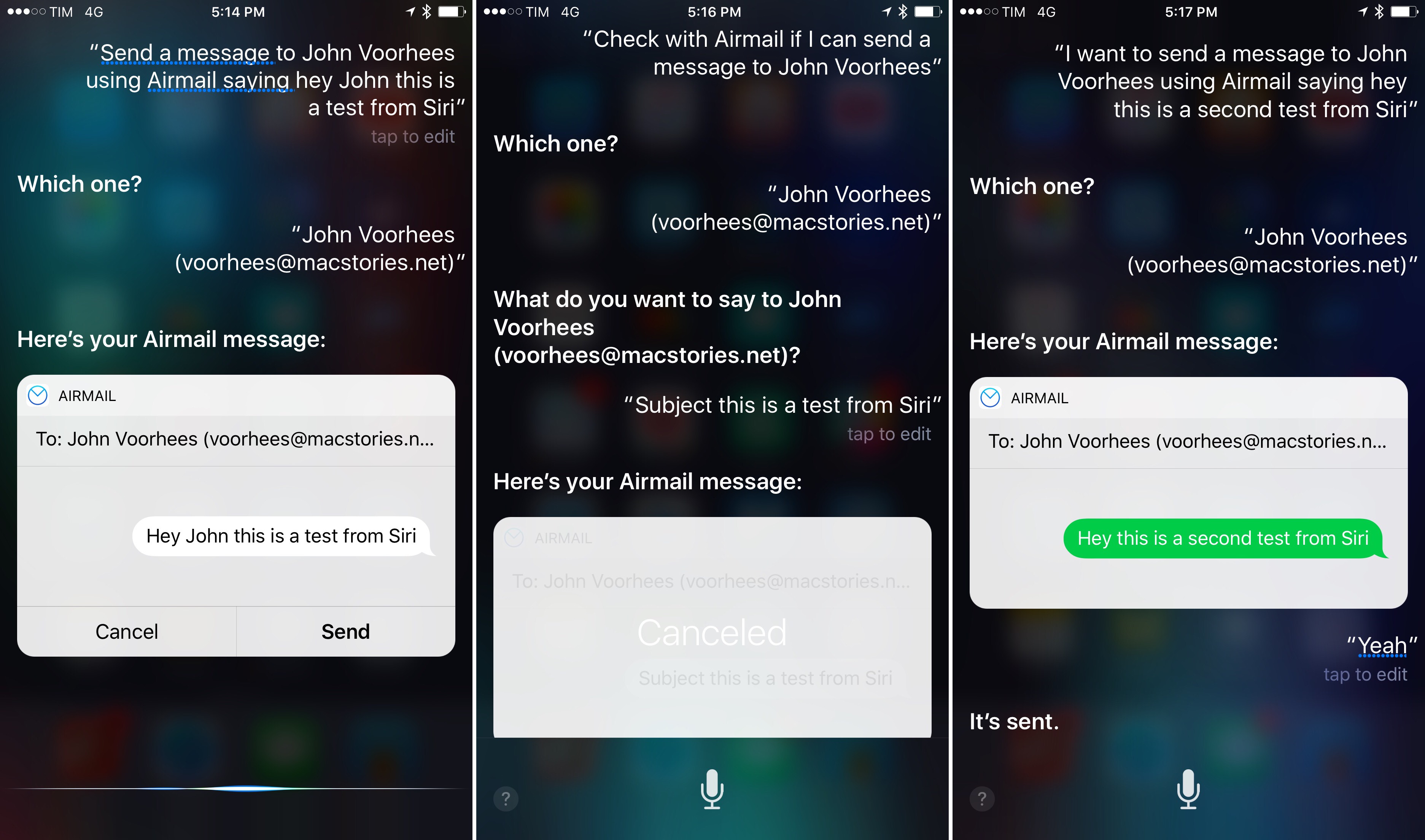

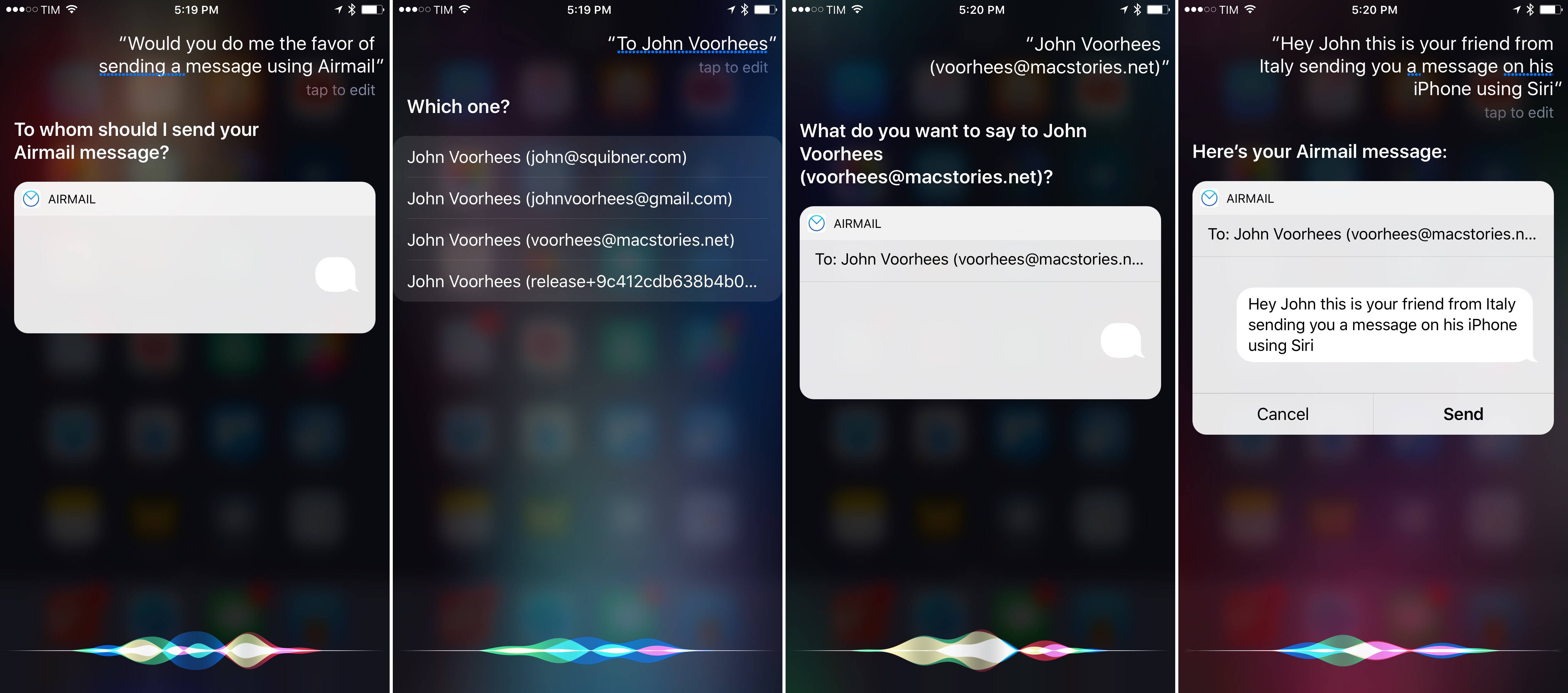

You could say “Hey Siri, send a message to Stephen using WhatsApp” or “message Stephen via WhatsApp”, but you could also phrase your request differently, asking something like “Check with WhatsApp if I can message Stephen saying I’ll be late”. You can also turn an app’s name into a verb and ask Siri to “WhatsApp Stephen I’m almost home”, and SiriKit will take care of understanding what you said so your command can be turned into an intent and passed to WhatsApp.

If multiple apps for the same domain are installed and you don’t specify an app’s name – let’s say you have both Lyft and Uber installed and you say “Hey Siri, get me a ride to the Colosseum” – Siri will ask you to confirm which app you want to use.
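The app-selection rule can be summarized as a small decision function. This is a hypothetical Swift sketch of the behavior as described in this section, not Siri’s actual logic. In particular, treating a single capable installed app as the automatic choice is an assumption.

```swift
// Hypothetical sketch of how Siri picks an app for a domain-level request.
// Not Apple's implementation; the single-app case is an assumption.
enum AppChoice: Equatable {
    case use(String)        // route the intent to this app
    case ask([String])      // "Which app would you like to use?"
    case unavailable        // nothing installed can handle the request
}

/// `requested` is the app name the user spoke, if any;
/// `installed` lists installed apps that support the request's domain.
func chooseApp(requested: String?, installed: [String]) -> AppChoice {
    if let name = requested {
        return installed.contains(name) ? .use(name) : .unavailable
    }
    switch installed.count {
    case 0: return .unavailable
    case 1: return .use(installed[0])   // assumption: no need to ask
    default: return .ask(installed)
    }
}

// "Hey Siri, get me a ride to the Colosseum" with Lyft and Uber installed:
print(chooseApp(requested: nil, installed: ["Lyft", "Uber"]))
```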

Apple has built SiriKit so that users can speak naturally, in dozens of languages, with as much verbosity as they want, while developers only have to care about fulfilling requests with their extensions. Apple refers to this multi-step process as “resolve, confirm, and handle”, where Siri itself takes care of most of the work.
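One way to picture the “resolve, confirm, and handle” sequence is with a simplified Swift sketch. Everything in it (`MessageIntent`, `MessageHandling`, `run(_:with:)`) is hypothetical. In the real framework, each phase is a separate asynchronous method with a completion handler, and Siri, not app code, drives the sequence. The sketch only illustrates the division of labor: the extension validates parameters and performs the action, while the driver function plays Siri’s role.

```swift
// Hypothetical, simplified model of "resolve, confirm, and handle".
// Real SiriKit handlers implement asynchronous methods with completion
// handlers on per-intent protocols; these synchronous types are stand-ins.

struct MessageIntent {
    var recipient: String?
    var content: String?
}

enum Resolution: Equatable {
    case success(String)
    case needsValue
}

protocol MessageHandling {
    func resolveRecipient(of intent: MessageIntent) -> Resolution
    func resolveContent(of intent: MessageIntent) -> Resolution
    func confirm(_ intent: MessageIntent) -> Bool
    func handle(_ intent: MessageIntent) -> String
}

/// The app's side: it never sees raw speech, only the parsed intent.
struct ExampleMessagingExtension: MessageHandling {
    let contacts: Set<String>

    func resolveRecipient(of intent: MessageIntent) -> Resolution {
        guard let name = intent.recipient, contacts.contains(name) else { return .needsValue }
        return .success(name)
    }

    func resolveContent(of intent: MessageIntent) -> Resolution {
        guard let text = intent.content, !text.isEmpty else { return .needsValue }
        return .success(text)
    }

    func confirm(_ intent: MessageIntent) -> Bool {
        // A real extension might check here that the account is signed in.
        return true
    }

    func handle(_ intent: MessageIntent) -> String {
        let text = intent.content ?? ""
        let name = intent.recipient ?? ""
        return "sent \"\(text)\" to \(name)"
    }
}

/// Siri's side: resolve every parameter, ask the app to confirm, then hand
/// the finished intent over. Returns nil where Siri would go back to the user.
func run<H: MessageHandling>(_ intent: MessageIntent, with handler: H) -> String? {
    guard handler.resolveRecipient(of: intent) != .needsValue,
          handler.resolveContent(of: intent) != .needsValue,
          handler.confirm(intent) else { return nil }
    return handler.handle(intent)
}

let exampleExtension = ExampleMessagingExtension(contacts: ["Myke", "Stephen"])
print(run(MessageIntent(recipient: "Myke", content: "I'm going to be late"), with: exampleExtension) ?? "needs more input")
print(run(MessageIntent(recipient: "Myke", content: nil), with: exampleExtension) ?? "needs more input")
```

Keeping the driver separate from the extension mirrors the point of this section: the app never decides when each phase runs, it only answers each question Siri asks.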

Developers are given some control over certain aspects of the implementation. From a visual standpoint, they can customize their experiences with an Intents UI extension, which makes a Siri snippet look and feel like the app it comes from.

Customizing Siri extensions is optional, but I’d bet on most developers adopting it, as it helps with branding and consistency. Slack, for instance, could customize its Siri snippet with its channel interface, while a workout extension could show the same graphics as the main app. Intents UI extensions aren’t interactive (users can’t tap on controls inside the customized snippet), but they can display updates on an in-progress intent (like an Uber ride or a workout session).

An app might want to make sure that what Siri heard is correct. When that’s the case, it can ask Siri to have the user double-check some information with a Yes/No dialog, or provide Siri with a list of choices to make sure it’s dealing with the right set of data. By default, Siri asks the user to confirm before the final step of requesting a ride or sending a payment.
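The outcomes described above map naturally onto an enum. The following is hypothetical Swift, not SiriKit code. It matches a spoken name against an app’s contacts, and the matching rules are assumptions chosen for illustration. SiriKit’s own resolution result classes, such as `INPersonResolutionResult`, offer comparable outcomes (success, confirmation required, disambiguation, needs value).

```swift
// Hypothetical stand-in for SiriKit's resolution results (the real framework
// uses classes such as INPersonResolutionResult, not this enum).
enum RecipientResolution: Equatable {
    case success(String)               // unambiguous: no question needed
    case confirmationRequired(String)  // Yes/No: "Did you mean Myke Carter?"
    case disambiguation([String])      // list of choices: "Which Stephen?"
    case needsValue                    // nothing usable: ask for the name again
}

/// Matches a spoken name against the app's own contacts. The rules are
/// illustrative assumptions: a full-name match succeeds, one first-name
/// match asks for confirmation, several first-name matches offer a list.
func resolveRecipient(_ spoken: String, in contacts: [String]) -> RecipientResolution {
    let query = spoken.lowercased()
    if let exact = contacts.first(where: { $0.lowercased() == query }) {
        return .success(exact)
    }
    let matches = contacts.filter { $0.lowercased().hasPrefix(query + " ") }
    switch matches.count {
    case 0: return .needsValue
    case 1: return .confirmationRequired(matches[0])
    default: return .disambiguation(matches)
    }
}

// Hypothetical contact list.
let contacts = ["Stephen Adams", "Stephen Baker", "Myke Carter"]
print(resolveRecipient("Myke", in: contacts))
print(resolveRecipient("Stephen", in: contacts))
```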

Other actions might require authentication from the user. Apps can tighten the security of their SiriKit extensions (for instance, when the device is locked) and require Touch ID verification. I’d imagine that a messaging app might allow sending messages via Siri from the Lock screen (the default behavior of the messaging intent), but put searching a user’s message history behind Touch ID or the passcode.
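A lock-screen restriction boils down to a small policy check. The Swift below is a hypothetical model. In a real app, the restricted intents are declared in the Intents extension’s Info.plist (under the `IntentsRestrictedWhileLocked` key) rather than in code, and iOS enforces them. The intent class names are real SiriKit names, used here only as strings.

```swift
// Hypothetical model of a lock-screen policy. Real apps declare restricted
// intents in the extension's Info.plist (IntentsRestrictedWhileLocked) and
// iOS enforces them; this struct only mimics that decision.
struct LockPolicy {
    let supported: Set<String>
    let restrictedWhileLocked: Set<String>

    /// Whether Siri may run `intent` now. Restricted intents on a locked
    /// device require the user to authenticate (Touch ID or passcode) first.
    func mayRun(_ intent: String, deviceLocked: Bool, userAuthenticated: Bool) -> Bool {
        guard supported.contains(intent) else { return false }
        if deviceLocked && restrictedWhileLocked.contains(intent) {
            return userAuthenticated
        }
        return true
    }
}

// The messaging example from the text: sending works from the Lock screen,
// searching message history does not until the user authenticates.
let messagingPolicy = LockPolicy(
    supported: ["INSendMessageIntent", "INSearchForMessagesIntent"],
    restrictedWhileLocked: ["INSearchForMessagesIntent"]
)
print(messagingPolicy.mayRun("INSearchForMessagesIntent", deviceLocked: true, userAuthenticated: false))
```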

Last, while Siri takes care of natural language processing out of the box, apps can offer vocabularies with specific terms to aid recognition of requests. A Siri-enabled app can provide user words, which are specific to a single user and include contact names (when not managed by Contacts), contact groups, photo tag and album names, workout names, and vehicle names for CarPlay. It can also offer a global vocabulary, which is common to all users of the app and covers workout names and ride options. For example, if Uber and Google Photos integrate with SiriKit, you’ll be able to ask “Show me photos from the Italy Trip 2016 album in Google Photos” or “Get me an Uber Black to SFO”.
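The two vocabulary scopes can be modeled as two lookup tables consulted together. This Swift sketch is hypothetical. In the real framework, user words are registered at runtime through the `INVocabulary` class, and global terms ship in an `AppIntentVocabulary.plist` file. The sketch leaves out registration and only shows how a recognized phrase might be matched against both sets.

```swift
// Hypothetical model of SiriKit's two vocabulary scopes. Real apps register
// user words at runtime (INVocabulary) and ship global terms in an
// AppIntentVocabulary.plist; this sketch only shows how lookups combine them.
enum TermKind: Hashable {
    case contactName, contactGroup, photoTag, photoAlbum, workoutName, vehicleName, rideOption
}

struct Vocabulary {
    var userTerms: [TermKind: [String]] = [:]   // specific to one user
    let globalTerms: [TermKind: [String]]       // the same for every user

    /// Replaces this user's terms of one kind (e.g. after albums change).
    mutating func setUserTerms(_ terms: [String], of kind: TermKind) {
        userTerms[kind] = terms
    }

    /// Returns the app-specific term matching what Siri heard, checking
    /// user words before the global vocabulary; case is ignored.
    func match(_ spoken: String, kind: TermKind) -> String? {
        let candidates = (userTerms[kind] ?? []) + (globalTerms[kind] ?? [])
        return candidates.first(where: { $0.lowercased() == spoken.lowercased() })
    }
}

// A photo app's user words and a ride app's global terms (hypothetical data).
var photos = Vocabulary(globalTerms: [:])
photos.setUserTerms(["Italy Trip 2016", "Family"], of: .photoAlbum)
let rides = Vocabulary(globalTerms: [.rideOption: ["Uber Black", "UberX"]])

print(photos.match("italy trip 2016", kind: .photoAlbum) ?? "unknown album")
print(rides.match("uber black", kind: .rideOption) ?? "unknown ride option")
```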

SiriKit has the potential to bring a completely new layer of interaction to apps. On paper, it’s what we’ve always wanted from a Siri API: a way for developers to expose their app’s features to conversational requests without having to build a semantic engine. Precise but flexible, inherently elegant in its constraints, and customizable with native UIs and app vocabularies, SiriKit has it all.

The problem with SiriKit today is that it’s too limited. The 7 domains supported at launch are skewed towards the types of apps large companies offer on iOS. It’s great that SiriKit will allow Facebook, Uber, WeChat, Square, and others to build new voice experiences, but Apple is leaving out obvious categories of apps that would benefit from it as well. Note-taking, media playback, social networking, task management, calendar event creation, weather forecasts – none of these fit into the available domains, so apps in these categories can’t integrate with SiriKit. We can only hope that Apple will continue to open up more domains in future iterations of iOS.

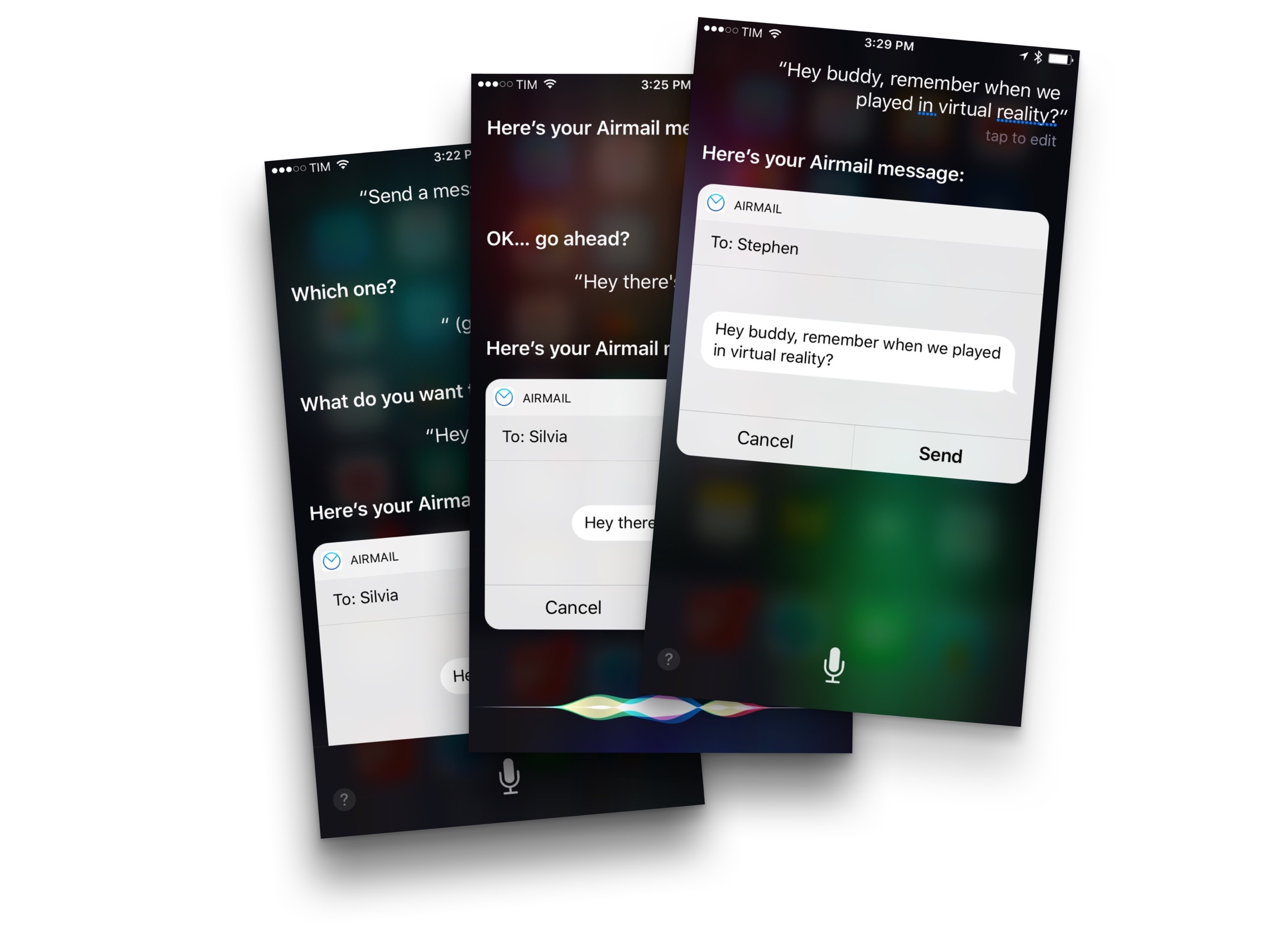

For this reason, SiriKit might as well be considered a public beta for now: it covers a fraction of what users do on their iPhones and iPads. I’ve only been able to test one app with SiriKit integration over the past few days – an upcoming update to Airmail. Bloop’s powerful email client will include a SiriKit extension of the messaging domain (even if email isn’t strictly “messaging”) to let you send email messages to people in your Airmail contact list.

In using Airmail and Siri together, I noticed how SiriKit took care of parsing natural language and the multiple ways of phrasing the same request. The “resolve, confirm, and handle” flow was visible in the steps Siri took to confirm the data it needed – in Airmail’s case, the recipient’s email address and the message text.

As for other domains, I can’t comment on the practical gains of SiriKit yet, but I feel like messaging and VoIP apps will turn out to be popular options among iPhone users.

I want to give Apple some credit. Conversational interactions are extremely difficult to get right. Unlike interface elements that can only be tapped in a limited number of ways, each language supported by Siri has a multitude of possible combinations for each sentence. Offloading language recognition to Siri and letting developers focus on the client side seems like the best solution for the iOS ecosystem.

We’re in the early days of SiriKit. Unlike Split View or notifications, it’s not immediately clear if and how this technology will change the way we interact with apps. What’s evident, though, is that Apple has been laying SiriKit’s foundation for quite some time. From the pursuit of more accurate language understanding through AI to the extensibility framework and NSUserActivity[38], we can trace SiriKit’s origins back to problems and solutions Apple has been working on for years.

Unsurprisingly, Apple is playing the long game: organizing the richness of the App Store into standardized domains will take years of patient, iterative work. It’s not the kind of effort the tech press usually appreciates, but it’ll be essential to strike a balance between natural conversations and consistent behavior of app extensions.

Apple isn’t rushing SiriKit. Hopefully, that will turn out to be a good choice.

[37] There's actually an eighth domain – restaurant reservations – but it requires official support from Apple. It also works with Maps in addition to Siri. Intents for restaurant reservations include the ability to check available times, book a table, and get information for reservations and guests. Apple has set up a webpage where developers can apply for inclusion.

[38] NSUserActivity is used to continue an intent inside an app when it can't be displayed in a Siri snippet, such as playing a slideshow in a photo app.