I enjoyed this thought-provoking piece by (award-winning developer) Matt Birchler, writing for Birchtree on how he’s been making so-called “micro apps” with AI coding agents:

> I was out for a run today and I had an idea for an app. I busted out my own app, Quick Notes, and dictated what I wanted this app to do in detail. When I got home, I created a new project in Xcode, I committed it to GitHub, and then I gave Claude Code on the web those dictated notes and asked it to build that app.
>
> About two minutes later, it was done…and it had a build error.

And:

> As a simple example, it’s possible the app that I thought of could already be achieved in some piece of software someone’s released on the App Store. Truth be told, I didn’t even look, I just knew exactly what I wanted, and I made it happen. This is a quite niche thing to do in 2026, but what if Apple builds something that replicates this workflow and ships it on the iPhone in a couple of years? What if instead of going to the App Store, they tell you to just ask Siri to make you the app that you need?

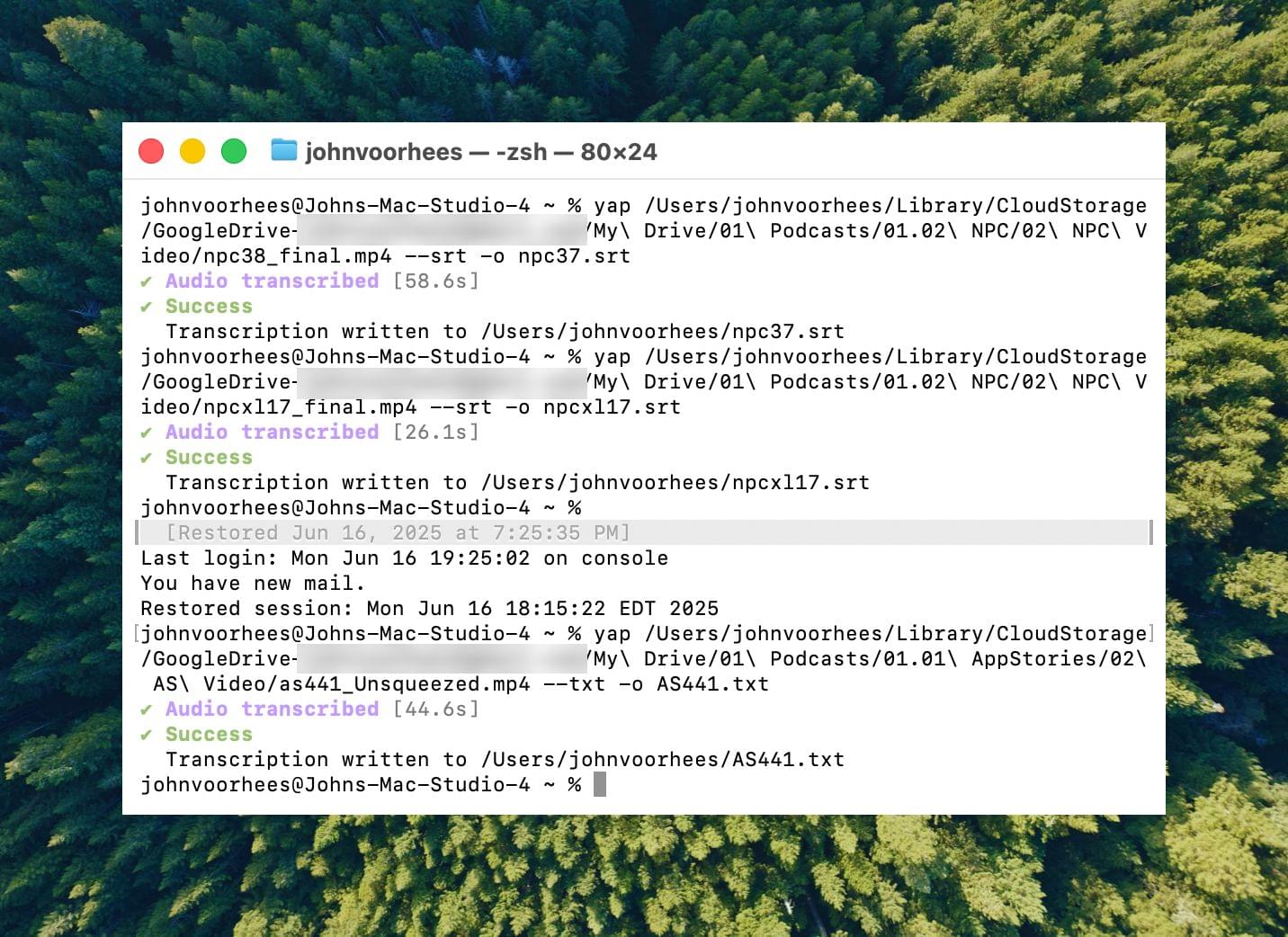

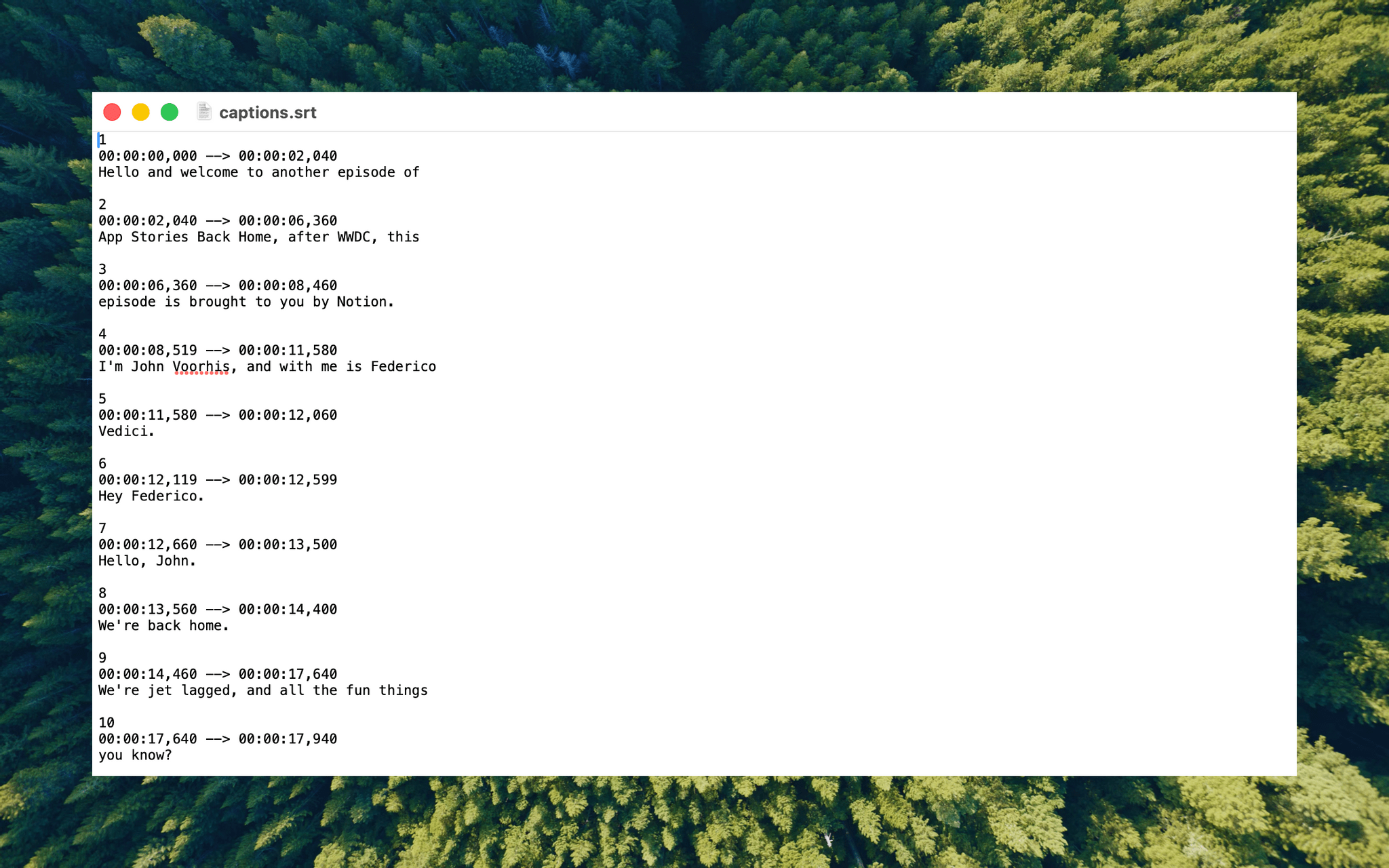

John and I are going to discuss this on the next episode of AppStories, which covers the second part of the experiments we did over our holiday break. As I’ll mention in the episode, I ended up building 12 web apps for things I have to do every day, such as appending text to Notion just how I like it or controlling my TV and Hue sync box. I didn’t even think to search the App Store to see if new utilities existed: I “built” (or, rather, steered the building of) my own progressive web apps, and I’m using them every day. As Matt argues, this is a very niche thing to do right now: it requires a terminal, lots of scaffolding around each project, and deeper technical knowledge than that of the average person, who would just prompt “make me a beautiful todo app.” But the direction seems clear, and the timeline is accelerating.

I also can’t help but remember this old rumor from 2023 about Apple exploring the idea of letting users rely on Siri to create apps on the fly for the then-unreleased Vision Pro. If only the guy in charge of the Vision Pro went anywhere and Apple got their hands on a pretty good model for vibe-coding, right?