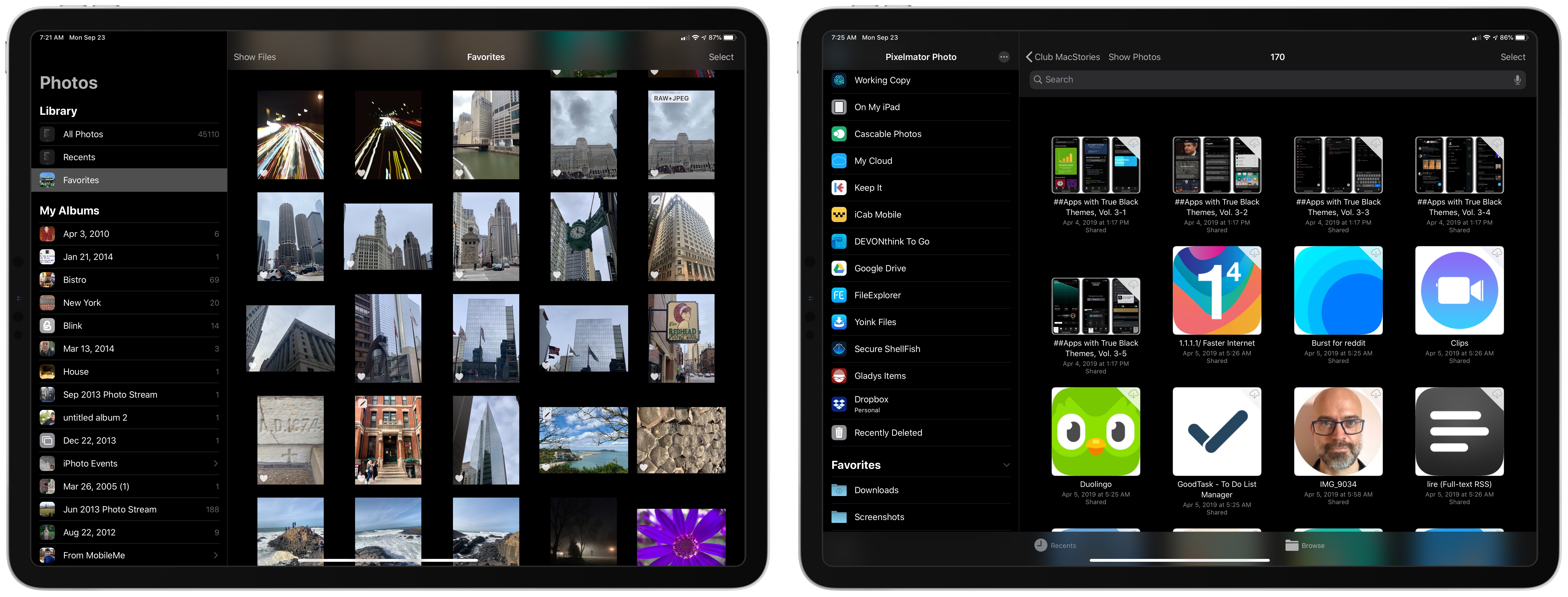

In a video shared earlier today, Tom Hogarty, a Lightroom product manager at Adobe, demonstrated an upcoming feature of Lightroom for iPad: the ability to import photos from external devices (such as cameras, drives, or SD cards connected over USB-C) directly into Lightroom’s library, without copying them to the Photos app first.

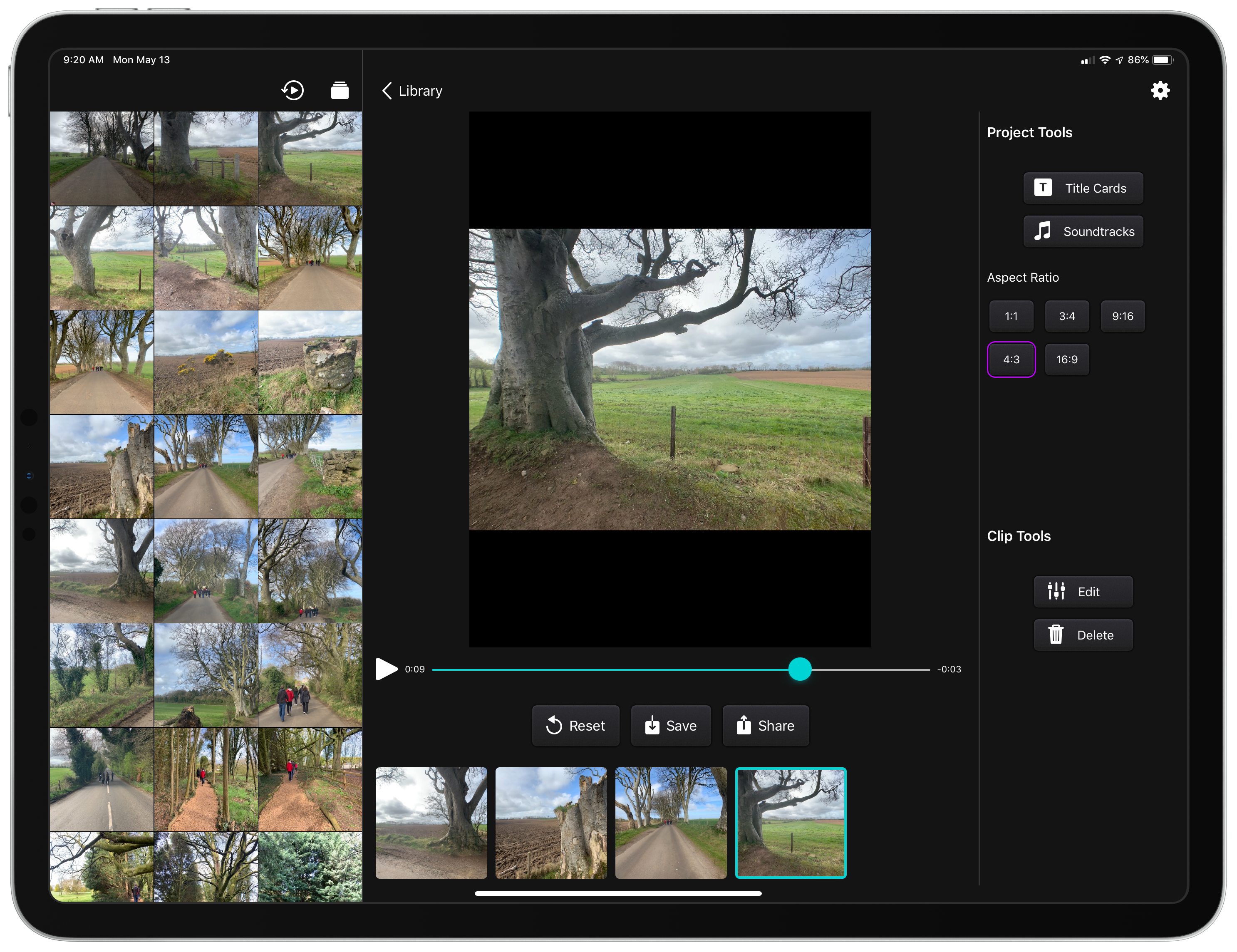

Here’s how it’s going to work:

The workflow looks very nice: an alert comes up as soon as an external device is detected, photos are previewed in a custom UI within Lightroom (no more Photos overlay), and they’re copied directly into the app. I think anyone who uses Lightroom for iPad to edit photos taken with a DSLR is going to appreciate this addition. Keep in mind that the 2018 iPad Pros support transfers of up to 10 Gbps over USB-C, which should help when importing hundreds of RAW files into Lightroom.

Direct photo import from external USB storage devices was originally announced by Apple at WWDC 2019 as part of the “Image Capture API” for iPadOS. When I was working on my iOS and iPadOS 13 review, I searched for documentation so I could cover the feature, but I couldn’t find anything on Apple’s website (I wasn’t the only one). Eventually, I just assumed it was one of the features Apple had delayed until later in the iOS 13 cycle. It turns out that Apple quietly introduced this feature with iOS and iPadOS 13.2, as Hogarty also suggests in the Lightroom video.

According to this thread on Stack Overflow, direct photo import is part of the ImageCaptureCore framework, which is now also available on iOS and iPadOS. I still can’t find any documentation for it on Apple’s developer website.