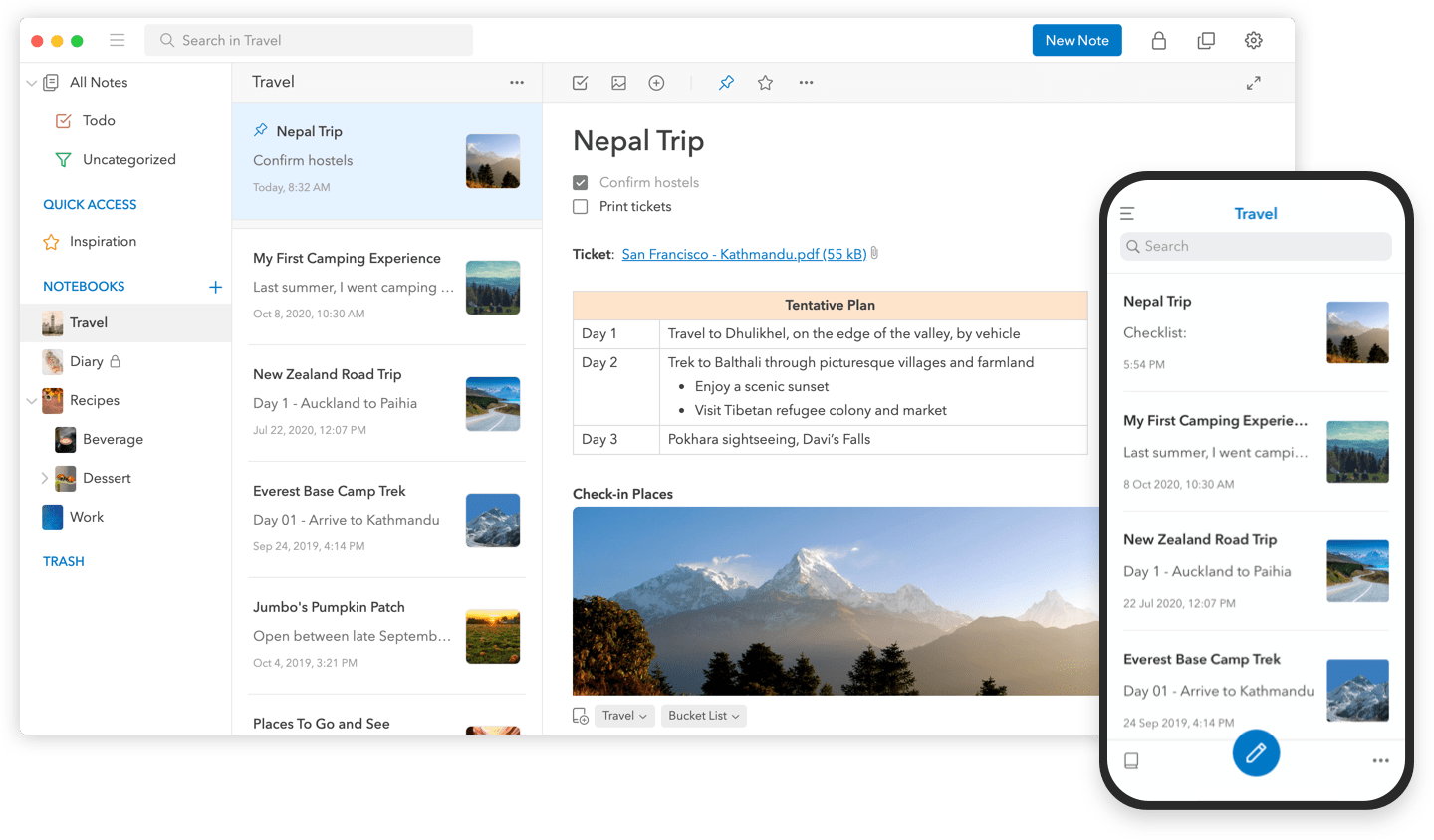

UpNote is an elegant and powerful note-taking app that works across multiple platforms. Designed to make it easy to take notes and stay focused, UpNote combines a beautiful interface with a fluid workflow for a refined note-taking experience.

The app works on iOS, the Mac, and Windows, with an Android version coming soon. Thanks to its fast, reliable sync, UpNote is the perfect solution for anyone who needs access to their notes across multiple platforms. With colorful themes and a wide selection of fonts, you can make UpNote your very own, and you can organize notes into notebooks and pin or bookmark them for quick access too.

When it’s time to get your ideas down, UpNote’s focus mode eliminates distractions so you can capture your thoughts quickly and efficiently. That makes UpNote an excellent solution for all sorts of writing beyond notes. For instance, the app can be locked, which makes it perfect for journaling.

UpNote’s text editor is fully featured, with support for rich text, bi-directional linking, nested lists, images, attachments, tables, and code blocks. Of course, the app supports Markdown syntax too. And when you need to use your notes elsewhere, you can export them as Markdown text or PDFs.

Now is the perfect time to try UpNote. The app includes subscription and lifetime upgrade options, and until the end of February, MacStories readers can purchase UpNote’s lifetime premium upgrade for 50% off. This is a terrific deal, so don’t delay. Go check out UpNote now and take advantage of this fantastic offer.

Our thanks to UpNote for sponsoring MacStories this week.