With Global Accessibility Awareness Day coming up this Thursday, May 15, Apple is back with its annual preview of accessibility features coming to its platforms later in the year. This year marks the 40th anniversary of the creation of the first office within Apple to address accessibility, and there’s no sign of any slowdown in the company’s development on this front. While no official release date has been announced for these features, they usually arrive with the new OS updates in the fall.

In addition to a new accessibility-focused feature in the App Store, Apple announced a whole raft of system-level features. Let’s take a look.

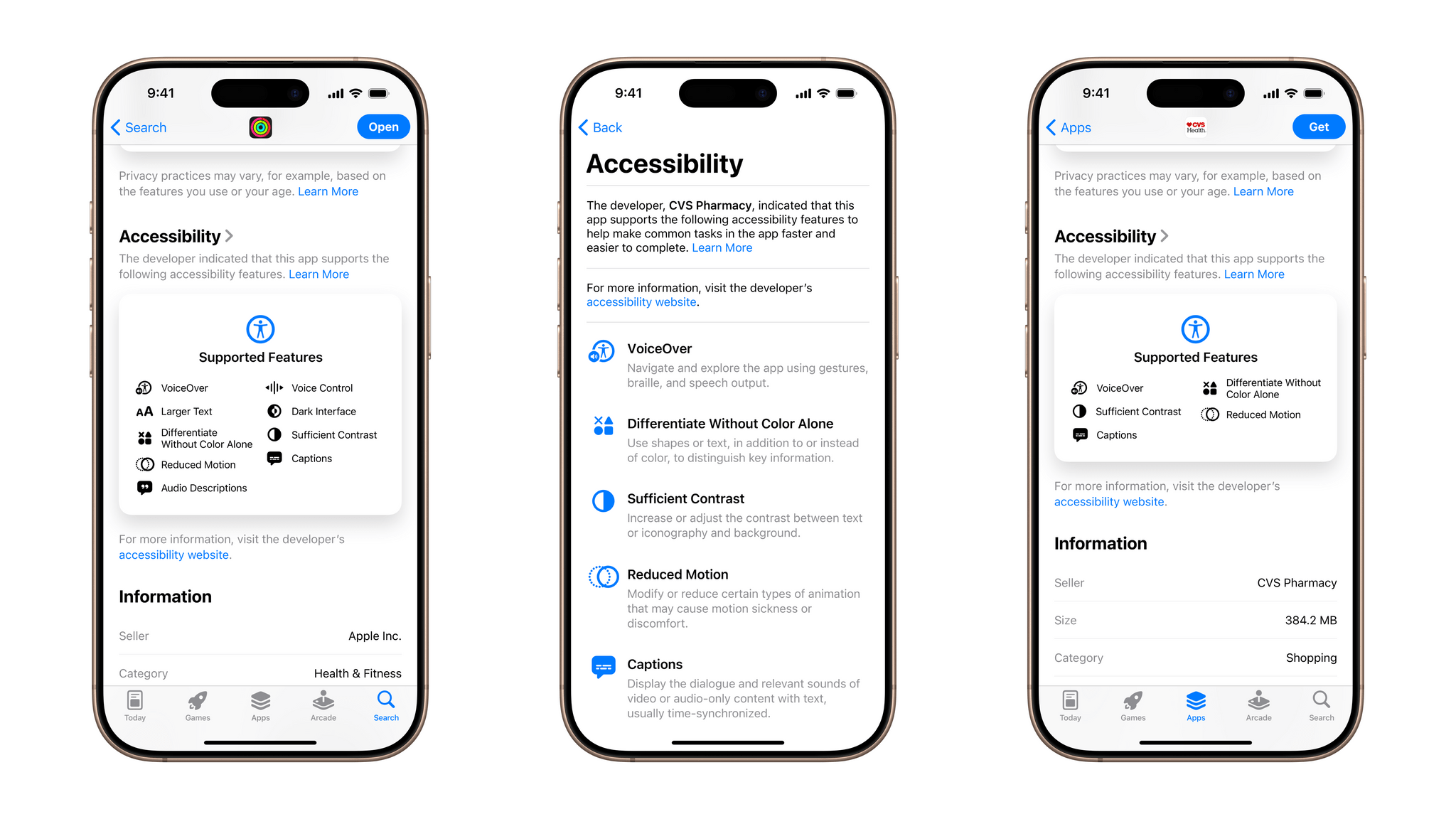

App Store Accessibility Nutrition Labels

Launched in December 2020, the App Store’s Privacy Nutrition Labels are part of each app’s listing and tell users what kinds of personal data the app collects and how that data is used. Now Apple is extending this approach to accessibility, with Accessibility Nutrition Labels launching worldwide.

In the same way developers create Privacy Nutrition Labels, they can also create labels showing the accessibility features supported by their apps. Features such as VoiceOver, Reduce Motion, captions, and more can be listed, making it easier for those with accessibility needs to choose the right app for them. Developers can also place links to their own accessibility websites in this section.

As with Privacy Nutrition Labels, the information displayed in Accessibility Nutrition Labels is provided by the app developers themselves. However, Apple says it will keep monitoring the feature to ensure it works as intended.

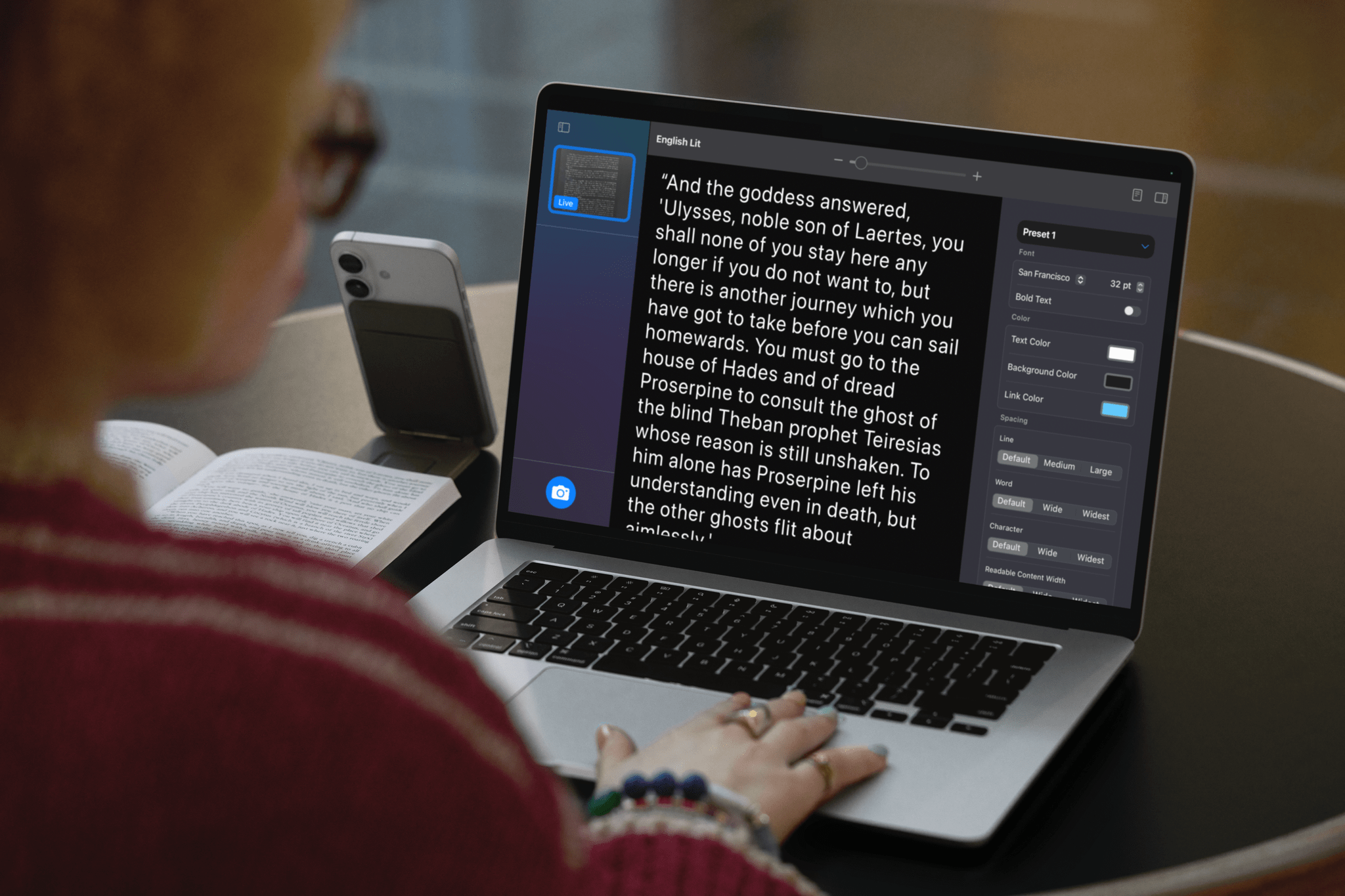

Magnifier Comes to the Mac

Apple’s Magnifier app on the iPhone offers a way to zoom in on objects around you, make text clearer, and identify the distance to objects like doors and people. Later this year, Apple will bring Magnifier to the Mac with some more tricks up its sleeve.

By connecting your iPhone to your Mac with Continuity Camera, or by using a USB camera, you can capture images on your Mac and adjust them in ways that make them easier to see. In one example Apple provided, a student in a lecture attached a camera to their MacBook Air with a mount and pointed it at the whiteboard. Then, using the Magnifier app, they snapped pictures of diagrams and notes, adjusted the contrast, changed the colors, and enlarged the text to make the lecture more accessible.

In the example image above, the student points the camera at a textbook so they can read its pages on their MacBook using Desk View. Magnifier on the Mac can even run multiple live sessions at once, so users can follow along in a textbook while also watching a presentation on a large screen. Users can also save the images they capture in groups to return to later.

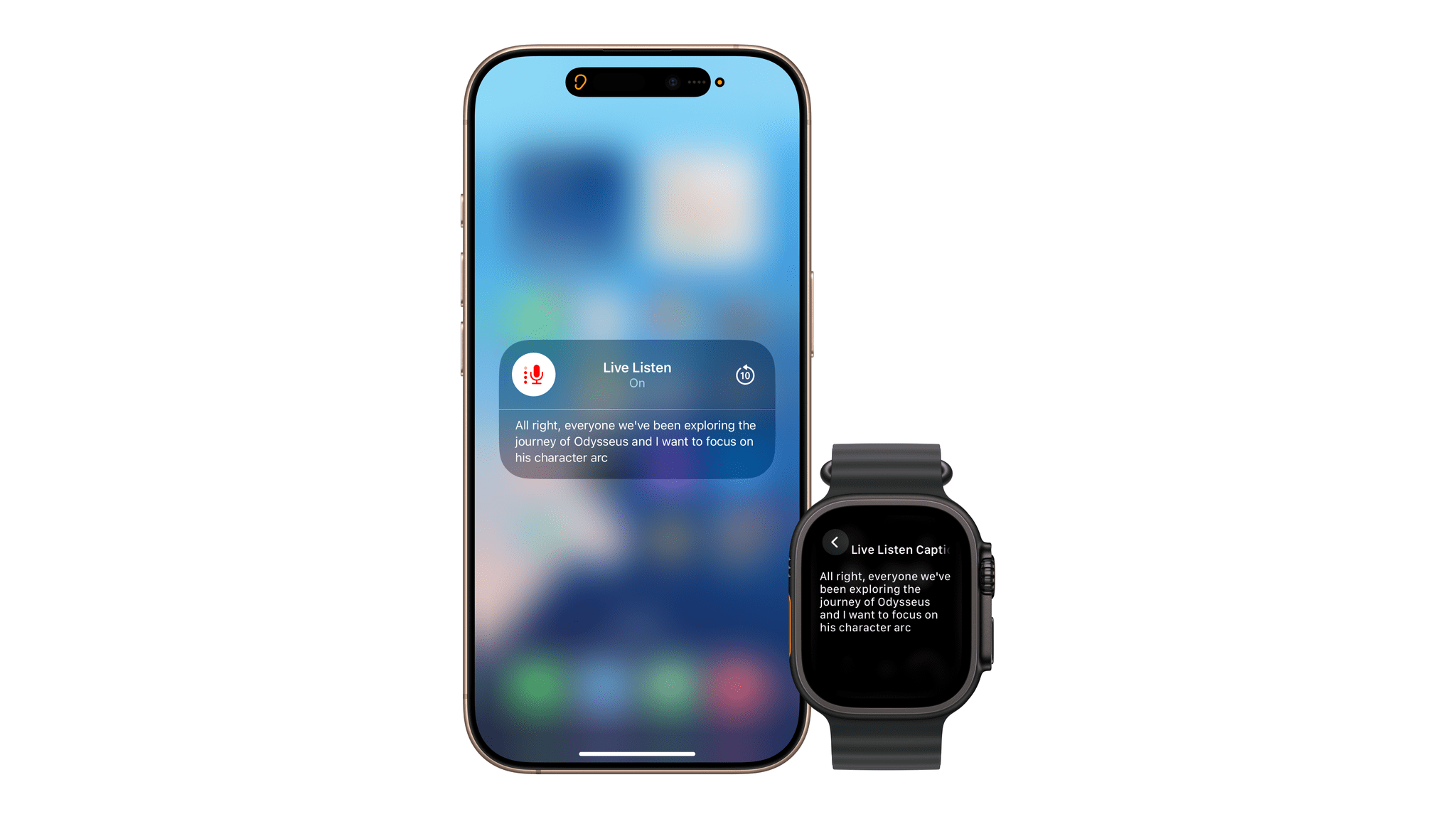

Live Listen + Live Captions

Live Listen, introduced in 2014, lets users with compatible hearing aids hear people in noisy environments or from across a room by placing an iPhone close to the person speaking. AirPods gained this functionality in 2018, and now Apple is pairing it with Live Captions and bringing Apple Watch into the mix. Live Captions already transcribes conversations in real time on the iPhone, but later this year, you’ll be able to leave your iPhone across the room near the speaker and view the captions directly on your Apple Watch.

You can pause, rewind, start, and stop Live Captions directly from your Apple Watch, which means no more getting up in the middle of a lecture to turn off your iPhone’s microphone. Additionally, Live Captions is adding support for new languages: English (India, Australia, UK, Singapore), Mandarin Chinese (Mainland China), Cantonese (Mainland China, Hong Kong), Spanish (Latin America, Spain), French (France, Canada), Japanese, German (Germany), and Korean.

Improved Personal Voice

Personal Voice was introduced in 2023 with iOS 17. It lets you create a synthetic version of your own voice that can be used to read text aloud. It’s an excellent feature that helps people who are losing the ability to speak preserve their voices. One big downside has been the time and effort it takes to set up, and Apple has made significant improvements in this area.

To train your Personal Voice, you will need to speak only 10 phrases, down from 150. And the results will be available in minutes rather than overnight (or longer, in my case). In addition to these improvements to setup, Personal Voice will sound more natural and be available in Spanish for users in Mexico and the United States.

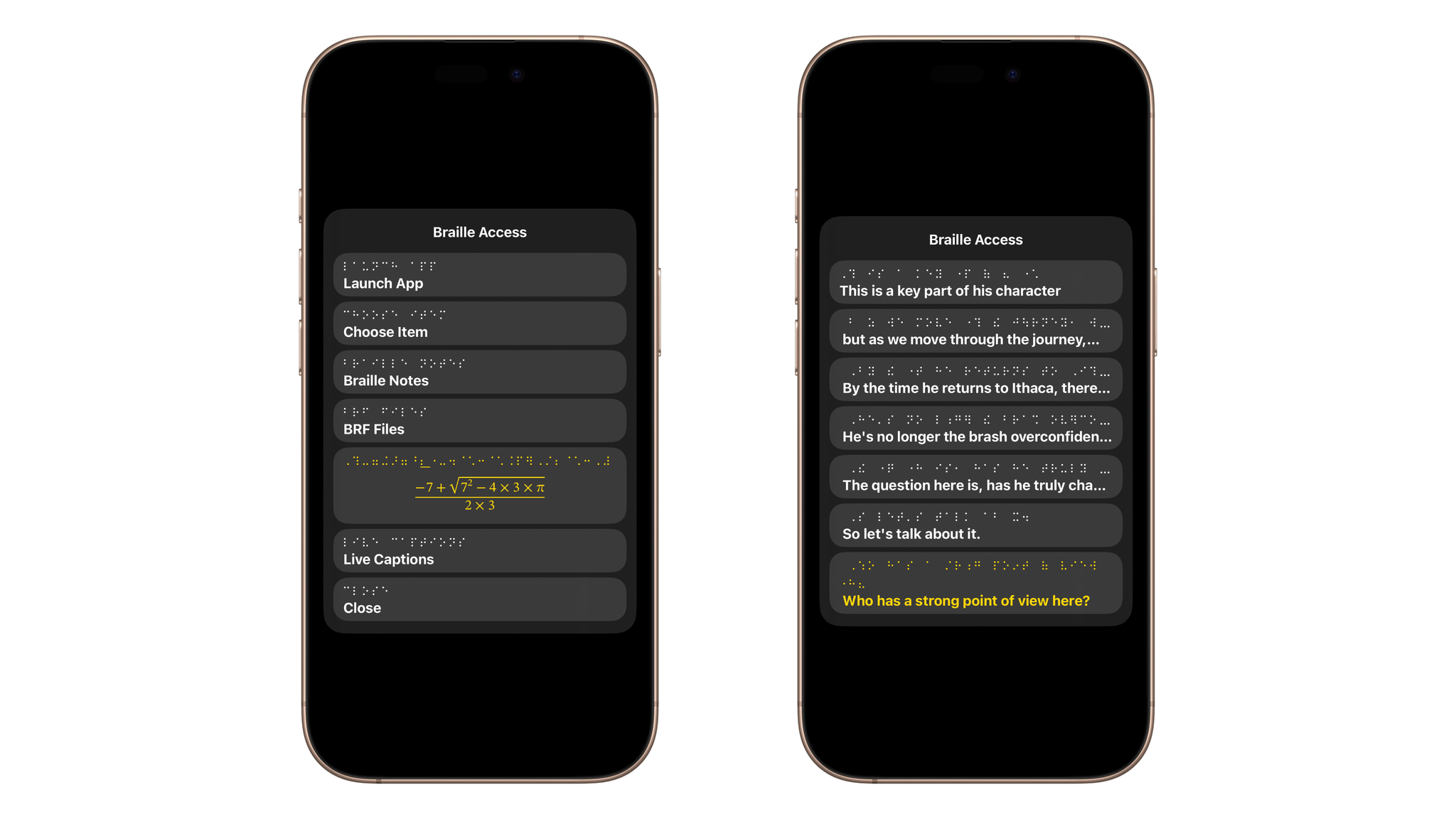

Braille Access

For braille users, Braille Access turns an iPhone, iPad, Mac, or Vision Pro into a full-featured braille note taker that works with a connected braille device. Live Captions is built in as well, so users can read transcriptions of conversations in real time on their braille displays.

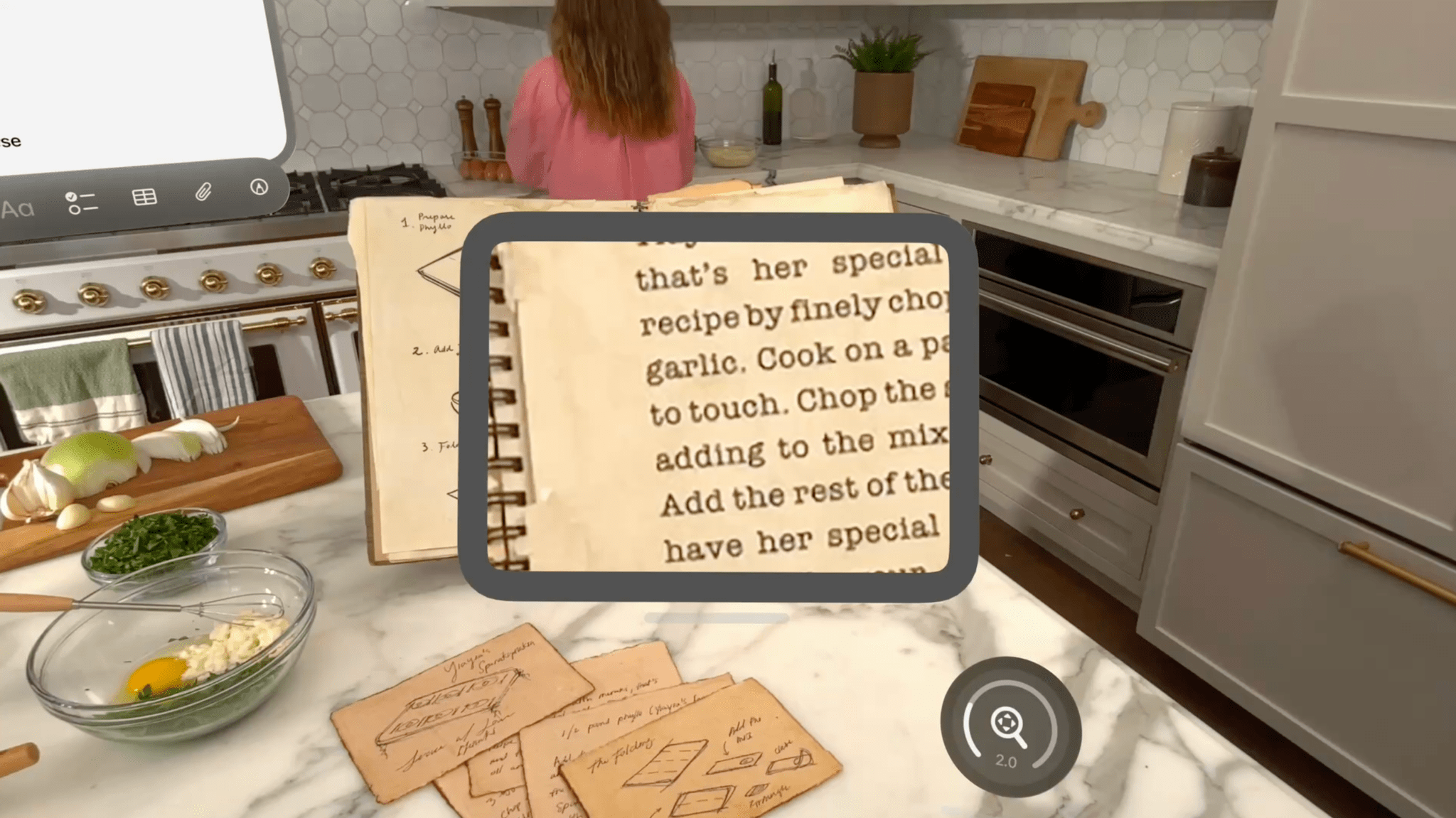

visionOS

For visionOS, Apple has revealed new capabilities that let users zoom in on their surroundings and anything else within their field of view. Apple is also introducing a new API that gives approved accessibility apps access to Vision Pro’s camera. With that access, apps will be able to offer live, person-to-person assistance and new ways for users to understand their surroundings.

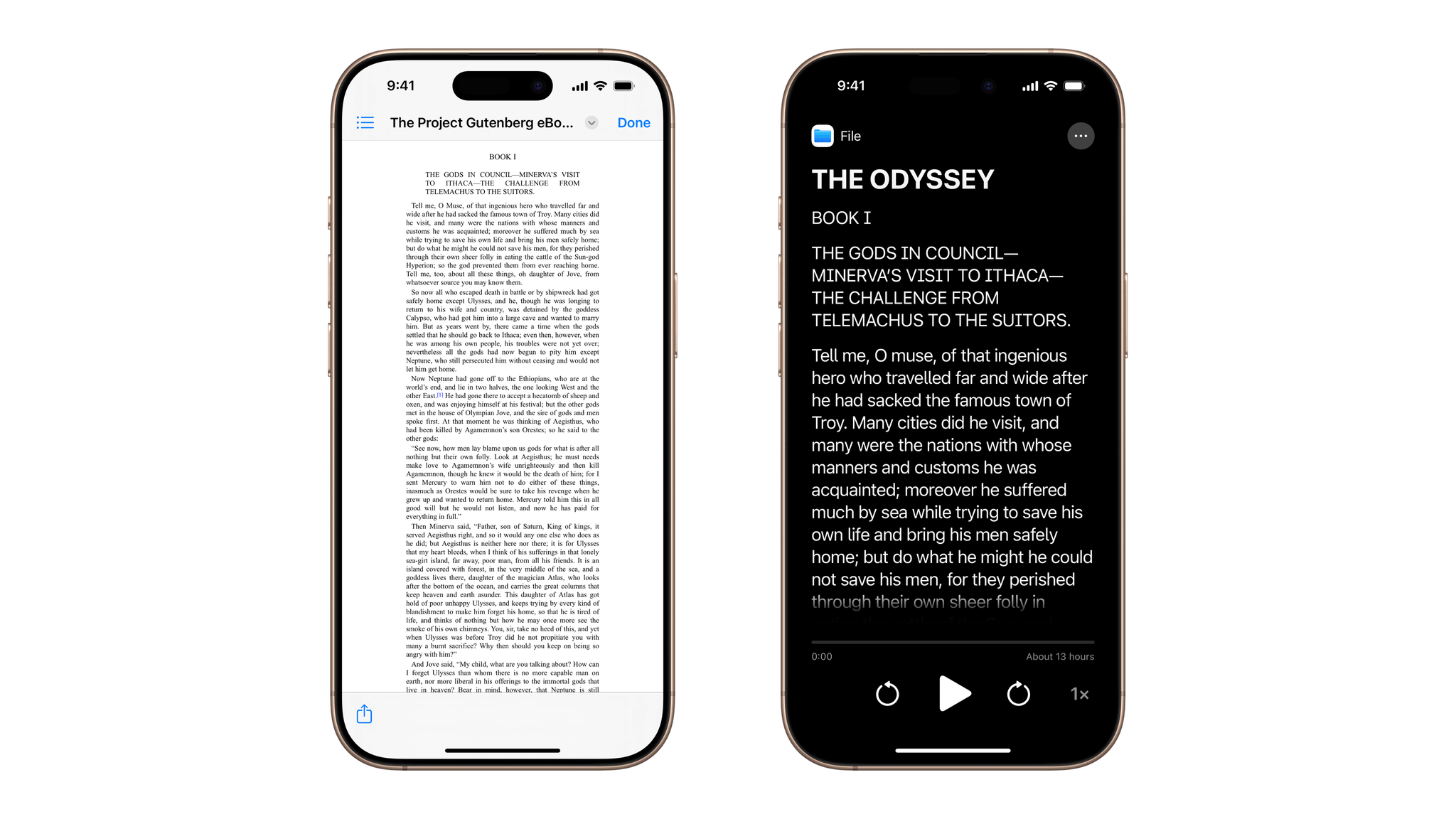

Accessibility Reader

Accessibility Reader is a new system-wide feature that will no doubt be used by a wide range of users. It transforms text in apps, documents, and more to make it easier to read: you can quickly adjust spacing, enlarge text, change colors, and more without changing the original formatting of the app or document.

Additional Tidbits

- Background Sounds can be customized with timers, new Shortcuts actions, and EQ settings.

- Vehicle Motion Cues make their way to the Mac.

- Eye Tracking on iPhone and iPad is faster and easier to use, and users can now make selections with either a switch or Dwell Control. Users can also now “swipe-type” with Switch Control.

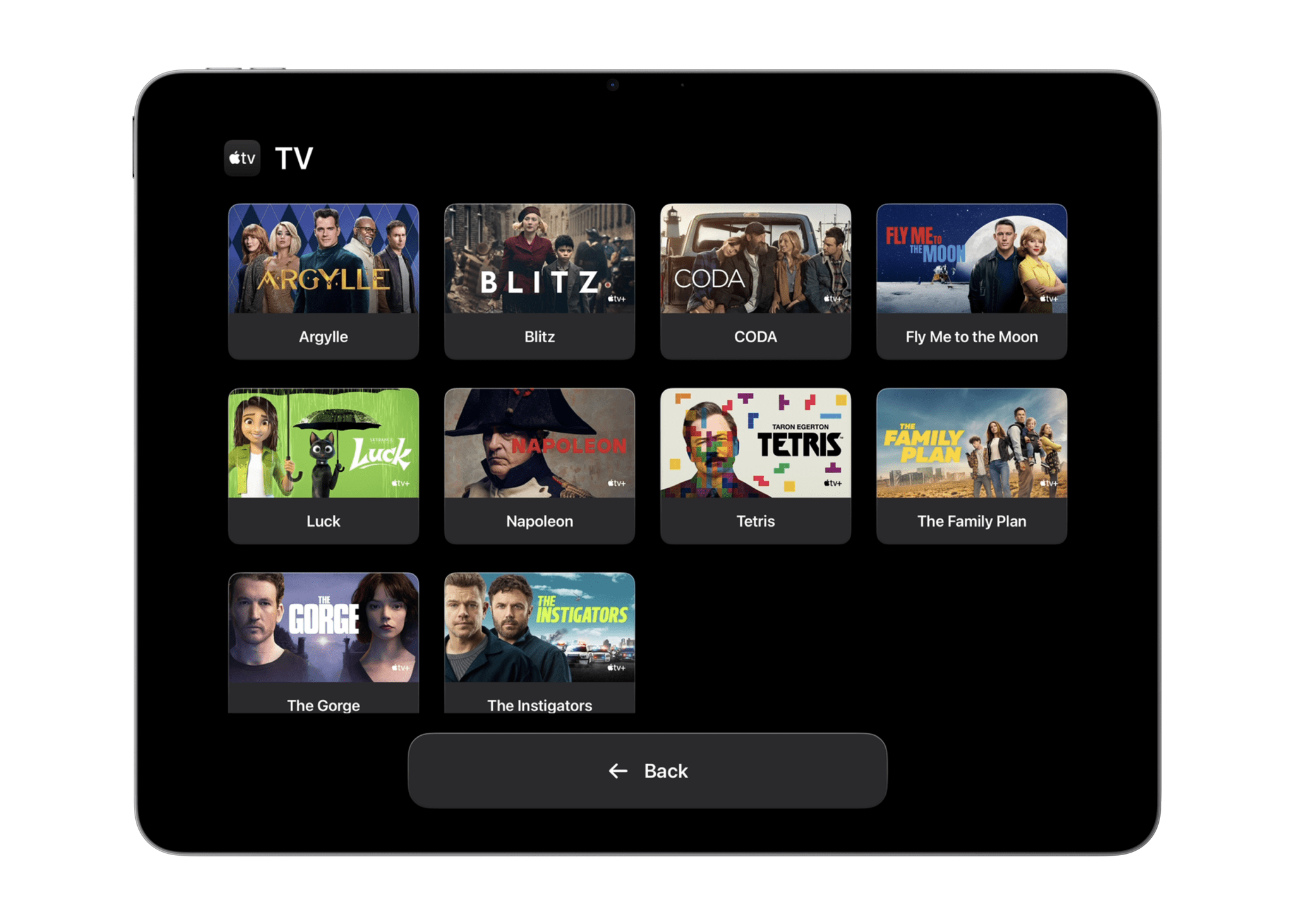

- Assistive Access adds a new Apple TV app, offering users a simplified media player. A new Assistive Access API also lets developers build tailored experiences for people with intellectual and developmental disabilities.

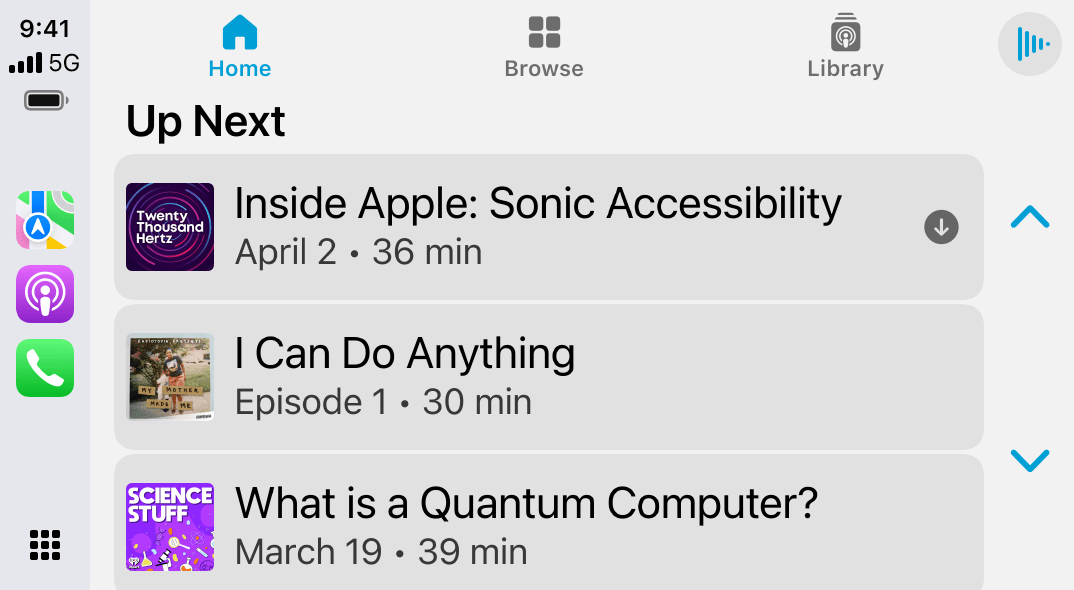

- CarPlay adds support for Large Text, and Sound Recognition in CarPlay can now detect the sound of a crying baby.

- Music Haptics are more customizable and now available for either the whole track or just vocals.

- Sound Recognition can now tell users when their name is being called.

- Users can temporarily share their accessibility settings with other iPhones or iPads.

Celebrating Global Accessibility Awareness Day

In addition to all of these feature announcements, Apple is celebrating GAAD with updates to its Haptics playlists in Apple Music, a special Fitness+ dance workout with Chelsie Hill, a behind-the-scenes look at the Apple Original documentary Deaf President Now! (streaming this Friday on Apple TV+), and spotlights in the App Store, Apple Books, Apple Podcasts, Apple News, and Apple TV.

Apple’s accessibility announcements always offer a fascinating look at the technologies the company is bringing to its platforms. This year is no different, and it’s great to see Apple’s continued commitment to this area.