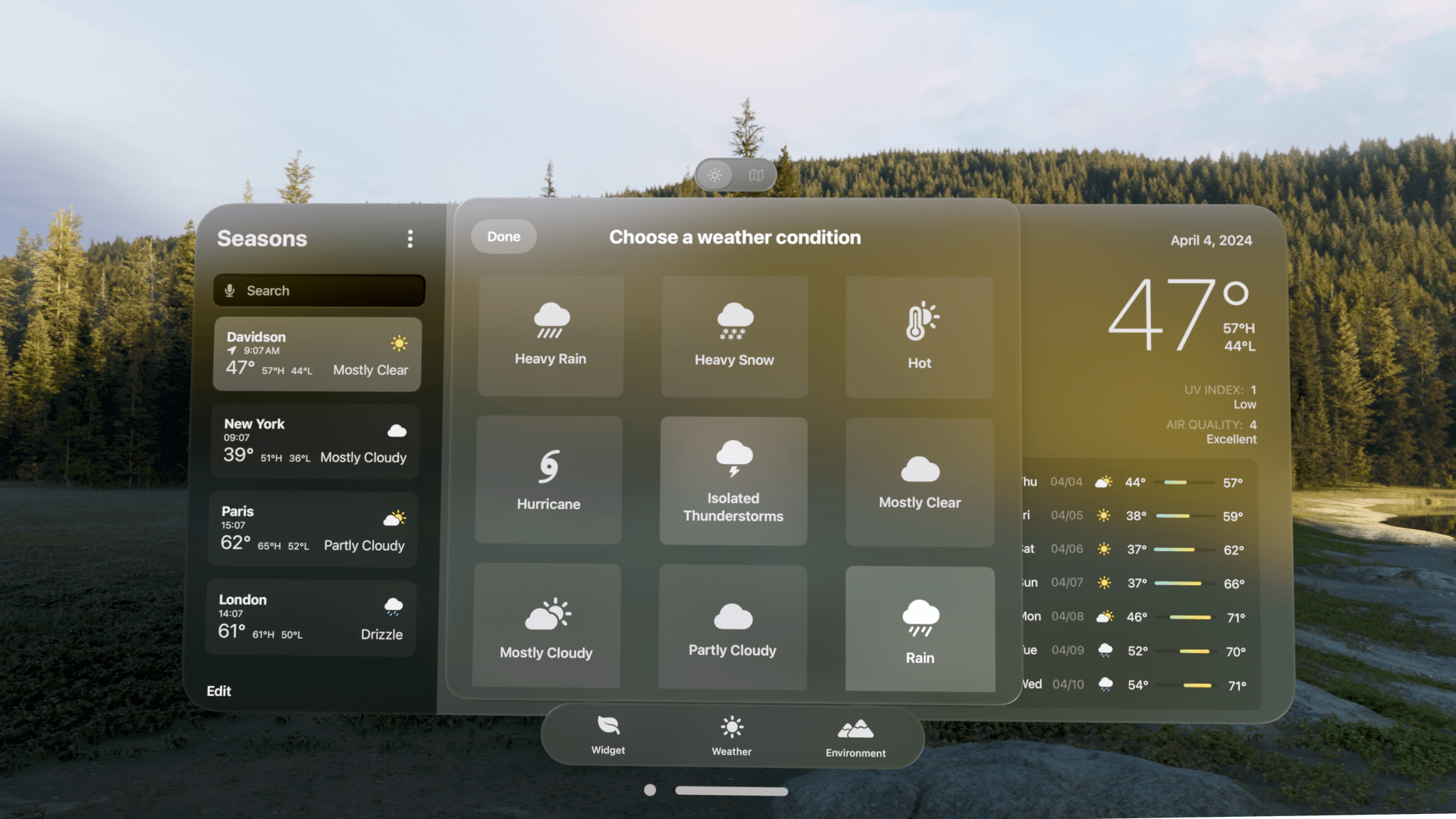

Seasons is the sort of weather app I’d hoped for ever since I ordered my Vision Pro. It’s a unique mix of detailed forecast data combined with an immersive spatial computing experience. There’s a gee-whiz, proof-of-concept aspect to the app, but at its core, Seasons is a serious weather app and a spatial widget that’s a pleasure to incorporate into an everyday Vision Pro workflow.

Posts tagged with "visionOS"

Vision Pro App Spotlight: Seasons Weaves Immersive Conditions Into a Comprehensive Weather App

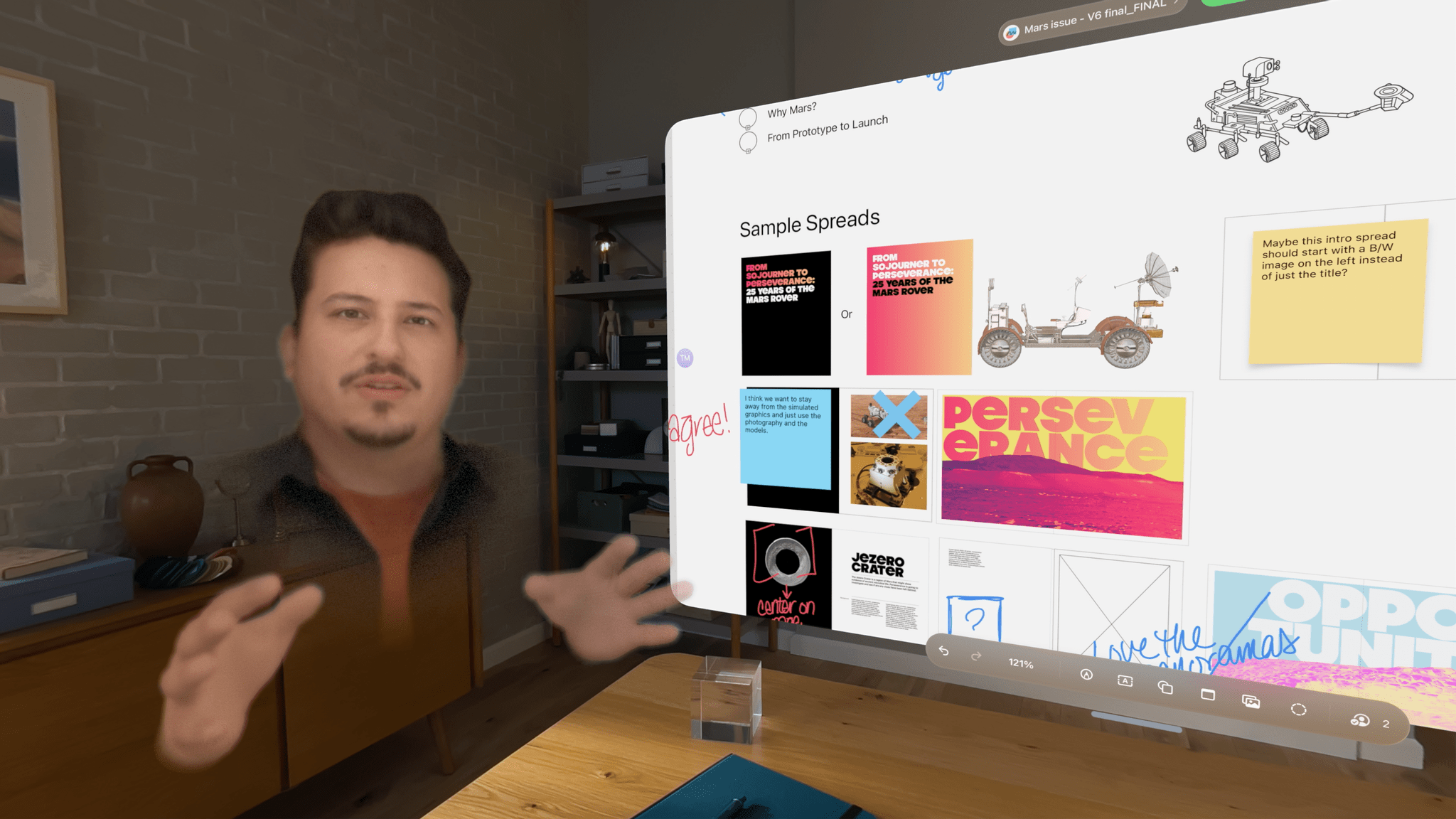

Apple Releases Spatial Personas Betas to visionOS 1.1 Users

Apple has added Spatial Personas to the Personas beta for all Vision Pro users running visionOS 1.1, the latest public release of the OS.

Spatial Personas are available in FaceTime, where users can collaborate using SharePlay. That means you can work with colleagues on a presentation, watch TV with friends and family, play games, and more. According to Apple, Spatial Personas allow you to move around and interact with digital content, providing a greater sense of presence.

Apple says that each user can reposition content to accommodate their own surroundings without affecting the others participating in a SharePlay session. The Spatial Personas feature also integrates with Spatial Audio, so audio tracks with the position of the other people participating in your FaceTime call. Spatial Personas are available to developers, too.

If you have visionOS 1.1 installed, you may need to reboot your Vision Pro to see a new Spatial Personas button in the FaceTime app, although we’ve tried and don’t see the feature yet. Tapping on a Persona tile during a FaceTime call will also allow you to switch to the Spatial Persona of the person you’re calling. Returning to a Persona from a Spatial Persona can be accomplished from the Vision Pro’s Control Center. There’s also a limit of five Spatial Personas per FaceTime call.

ALVR Beta Brings SteamVR Games with Joy-Con Support to the Vision Pro→

Ian Hamilton, writing for UploadVR about his experience playing Half-Life: Alyx with Nintendo Joy-Con and the Apple Vision Pro:

The tracking quality is far from ideal and I couldn’t get the gravity gloves to pull objects. Those limitations make much of Half-Life: Alyx still off-limits at present. The hand tracking is so sluggish, for example, I wouldn’t want to engage in combat like this. Still, I enjoyed exploring a laid back look through the early parts of Half-Life: Alyx on Vision Pro’s untethered system with developer commentary turned on. And that this works at all is a remarkable testament to the ingenuity of open-source developers.

Hamilton, who also tested other games like Beat Saber, used ALVR, an open-source project that allows SteamVR games to be played on the Vision Pro over Wi-Fi and that just released a beta visionOS app.

As with a lot of these cutting-edge experiments, the number of games that are playable using ALVR is limited. However, as a proof of concept, it’s interesting to see Joy-Con, which are supported by visionOS, working in concert with hand tracking. As limited as the experience is now, ALVR points to a potential hybrid solution for gaming on the Vision Pro that combines the natural interactions of hand tracking with the precision of controllers.

Job Simulator Devs Say They Feel ‘Vindicated’ By Apple Vision Pro’s Approach To VR→

Henry Stockdale of UploadVR is at the annual Game Developers Conference (GDC) in San Francisco this week and interviewed Andrew Eiche, the CEO of Owlchemy Labs, creators of Job Simulator and other VR games:

Asked about the studio’s hand tracking focus and how Apple Vision Pro uses hand tracking as its primary control method, Eiche believes Apple’s system feels like “huge vindication” for Owlchemy’s strategy.

We had this similar pinch gesture in our previous hand tracking demo without knowing what Apple was going to do at all, so it’s great to see that we’ve all coalesced around this one interaction.

When we first tried the Apple Vision Pro, we were like, ‘yes!’ This is like what we have been hoping for in many cases. Making an app on a headset is difficult right now on every headset because you have to start from scratch. With Apple, you can use Swift UI. You can use all of these different tools to build an app to get the base layer. And then you add everything on top of it and it’s interesting. So yeah, it felt like a huge vindication.

Job Simulator first launched on Sony’s PlayStation VR in 2016, so it’s interesting to hear that its veteran VR development team independently arrived at an interaction scheme similar to the one created by Apple’s Vision Pro designers and engineers.

In addition to Job Simulator, Owlchemy Labs announced last month that it is also working on bringing Vacation Simulator to the Vision Pro.

Club MacStories Sample: BetterTouchTool Tips, Vision Pro Shortcuts, a Task Manager Review, and the Effect of AI on the Internet

We often describe Club MacStories as more of the MacStories you know and love reading on this website. That’s an apt shorthand for the Club, but when you’re being asked to sign up and pay for something, it still helps to see what you’re buying. That’s why every now and then, we like to share samples of some of what the Club has to offer every week.

So today, we’ve made Issue 408 of MacStories Weekly from a couple of Saturdays ago available to everyone. Just use this link, and you’ll get the whole issue. You can also use the links in the excerpts below to read particular articles.

Everybody in the Club gets MacStories Weekly and our monthly newsletter called the Monthly Log, but there’s a lot more to the Club than just email newsletters. All members also get MacStories Unwind+, an ad-free version of the podcast that we publish a day early for Club members. All Club members also have access to a growing collection of downloadable perks like wallpapers and eBooks.

Club MacStories+ members get all of those perks along with exclusive columns that are published outside our newsletters, access to our Discord community, discounts on dozens of iOS, iPadOS, and Mac apps, and advanced search, filtering, and custom RSS feed creation of Club content. Club Premier builds on the first two tiers by adding AppStories+, the extended, ad-free version of our flagship podcast that’s delivered a day early, as well as full-text search of AppStories show notes, making it the all-access pass for everything we do at MacStories.

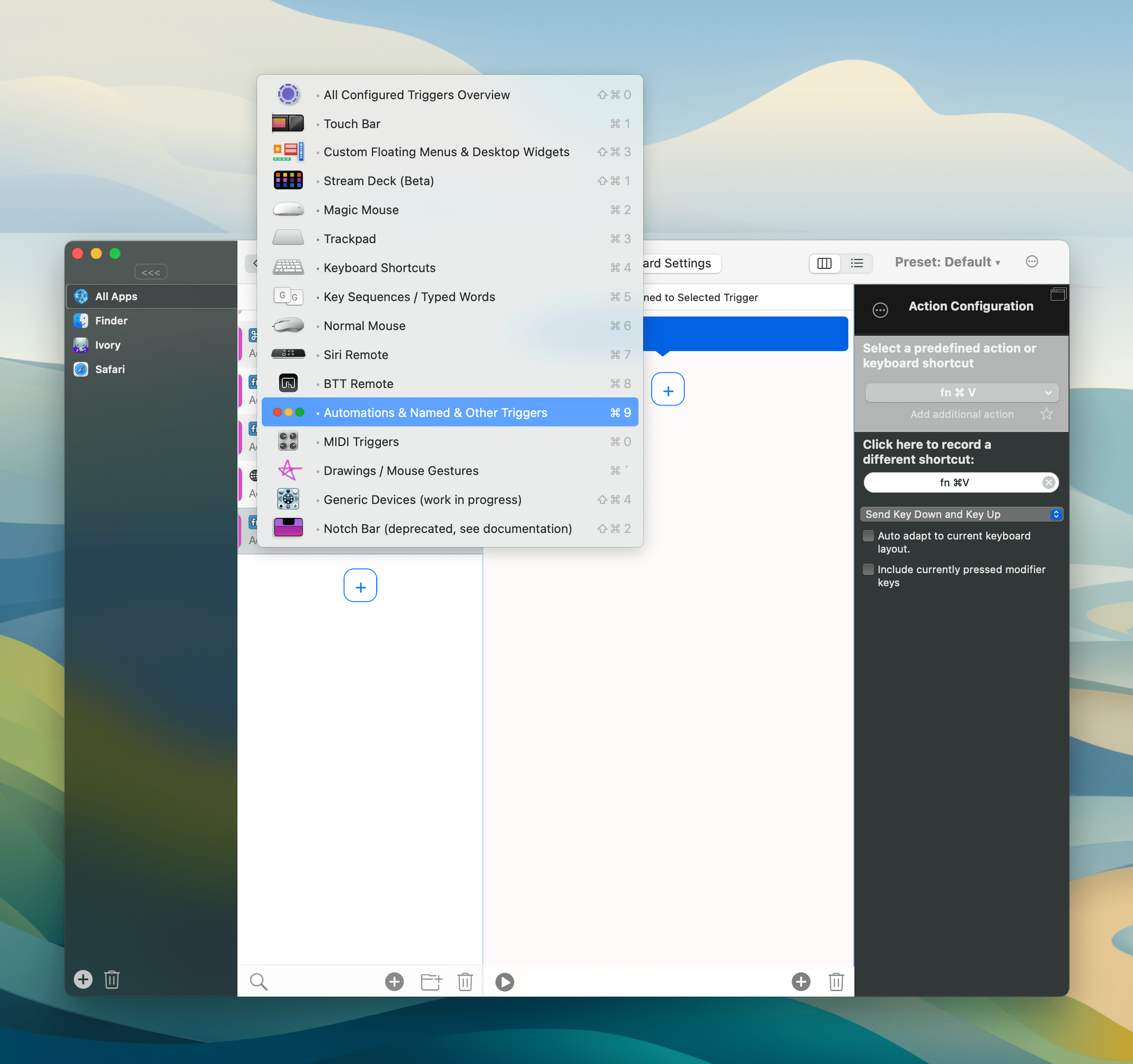

Issue 408 of MacStories Weekly, which you can access here, starts with two excellent tips from Niléane on how to use BetterTouchTool to remap the Mac’s yellow and green ‘stoplight’ buttons. Like a lot of tips and workflows we share, Niléane’s was inspired by a similar technique Federico employed a couple of weeks before:

Two weeks ago, in Issue 406 of MacStories Weekly, Federico shared a tip for BetterTouchTool that resonated with me. Just like him, I am used to minimizing my windows instead of hiding them, which can be annoying since minimized windows no longer come up when you Command (⌘) + Tab to their app’s icon…

…after poking around in BetterTouchTool for a few minutes, I realized that the app allows you to change what the red, yellow, and green window buttons do. As a result, I was able to make it so that the yellow button will actually hide a window instead of minimizing it to the Dock.

AppStories, Episode 374 – Examining Apple App and OS Design Trends→

This week on AppStories, we examine Apple’s Sports and Journal apps and visionOS for clues to what their designs may mean for the next major revisions of Apple’s OSes.

Sponsored by:

- Memberful – Help Your Clients Monetize Their Passion

Examining Apple App and OS Design Trends

- Sports

- Journal

- visionOS

On AppStories+, I tackle whether Federico needs an Apple Studio Display and offer a more portable solution.

We deliver AppStories+ to subscribers with bonus content, ad-free, and at a high bitrate early every week.

To learn more about the benefits included with an AppStories+ subscription, visit our Plans page, or read the AppStories+ FAQ.

The Best Small Feature of visionOS 1.1

Apple released visionOS 1.1 earlier today. The update focuses on a variety of performance enhancements (I’m intrigued to test improved cursor control and Mac Virtual Display), but, arguably, updated Personas are the most important feature of this release.

I haven’t recreated my Persona for visionOS 1.1 yet, but judging by comparisons I’ve seen online, Apple’s 3D avatars should now look more realistic and, well, less creepy than before. David Heaney at UploadVR has a good rundown of the changes in this update.

I’m here to talk about what is, for me, the best small feature of visionOS 1.1, which isn’t even mentioned in Apple’s changelog. In the launch version of visionOS last month, there was an annoying bug in the Shortcuts app (which is still running in compatibility mode) that caused every ‘Open App’ action to open the selected app but, at the same time, also remove every other window from your field of view.

I ran into this issue when I thought I could use shortcuts to instantly recreate groups of windows in my workspace; I was unpleasantly surprised when I realized that those shortcuts were always hiding my existing windows instead. And even worse, every other Shortcuts action that involved launching an app (like Things’ ‘Show Items’ action) also caused other windows to disappear. It was, frankly, terrible.

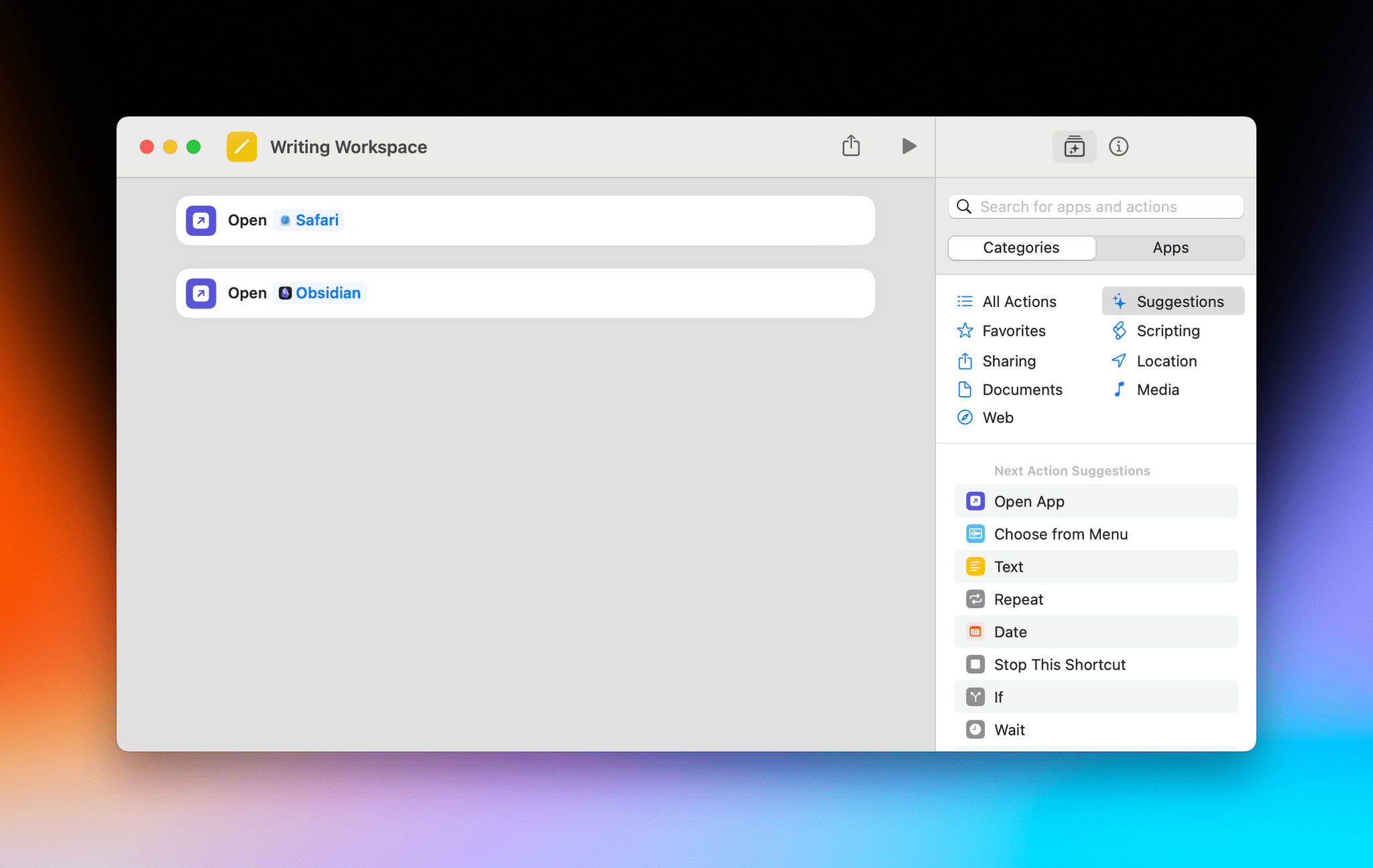

All of this has been fixed in visionOS 1.1. Using ‘Open App’ actions in Shortcuts doesn’t hide other currently open windows anymore: it just spawns new ones for the apps you selected. This means that a shortcut like this…

…when run on the Vision Pro with visionOS 1.1 will open a Safari window and an Obsidian one in front of other windows I already have open, without hiding them. This unlocks some interesting possibilities for “preset shortcuts” that open specific combinations of windows for different work contexts (such as my writing workspace above) with one tap, which could even be paired with a utility like Shortcut Buttons for maximum efficiency in visionOS. Imagine having quick launchers for your ‘Work’ windows, your ‘Music’ workspace, or your ‘Research’ mode, and you get the idea.

I guess what I’m saying is that my favorite feature of visionOS 1.1 is a bug fix that hasn’t even been mentioned by Apple in their release notes. Maybe I’ll change my mind if my updated Persona won’t make me look like an exhausted 50-year-old Italian blogger anymore, but, for now, this Shortcuts update made visionOS 1.1 worth installing immediately.

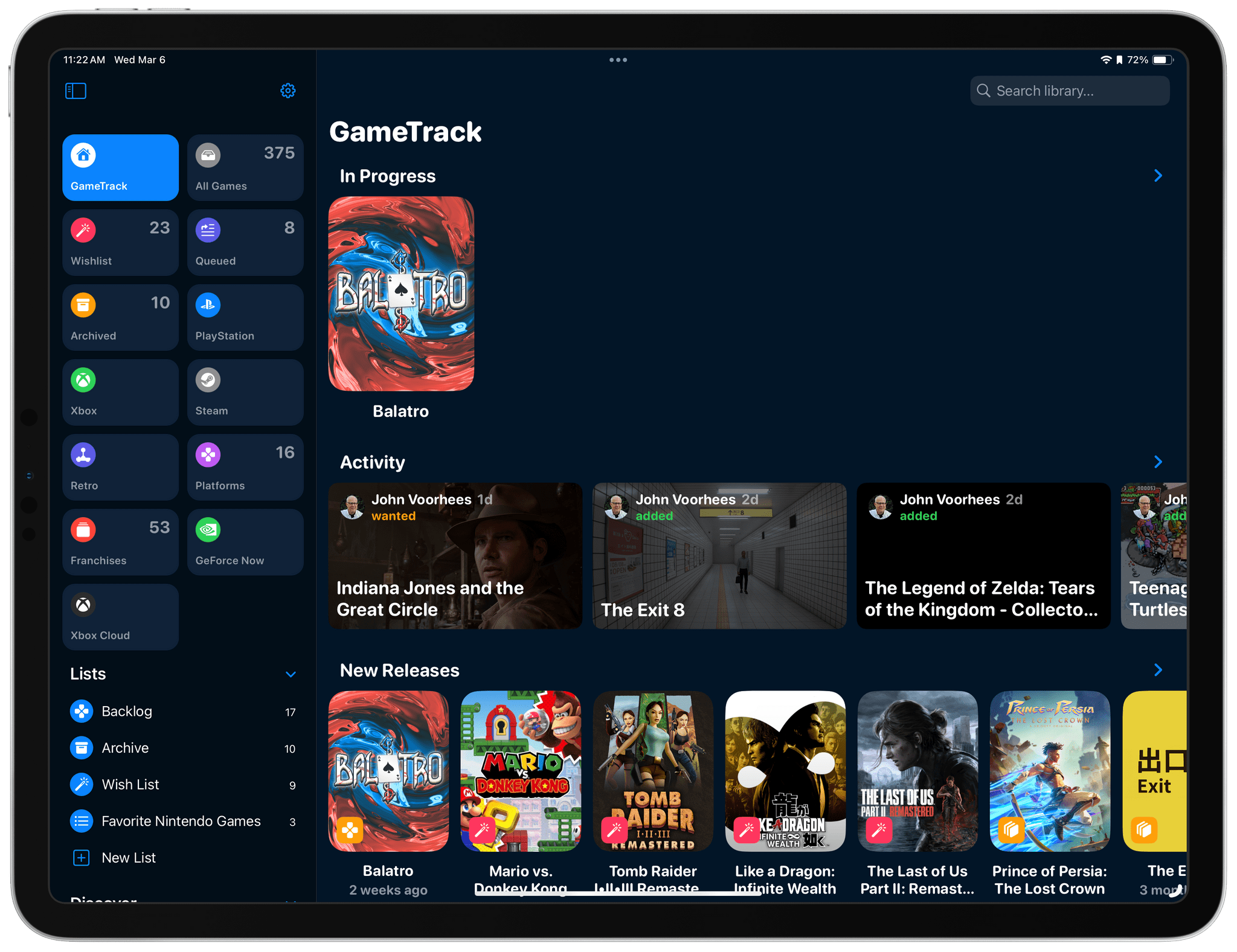

Vision Pro App Spotlight: GameTrack Updated with Built-In Cloud Streaming

Late last week, Joe Kimberlin released GameTrack 5.4, an update to the iOS, iPadOS, and visionOS versions of the app that enables new ways to access your favorite games and navigate the app’s UI. Of course, the Vision Pro version of GameTrack is completely new since the last time I wrote about the app, too. So, let’s take a closer look at the latest iOS and iPadOS updates, as well as the visionOS version, which has become one of my favorite media management apps for Apple’s headset.

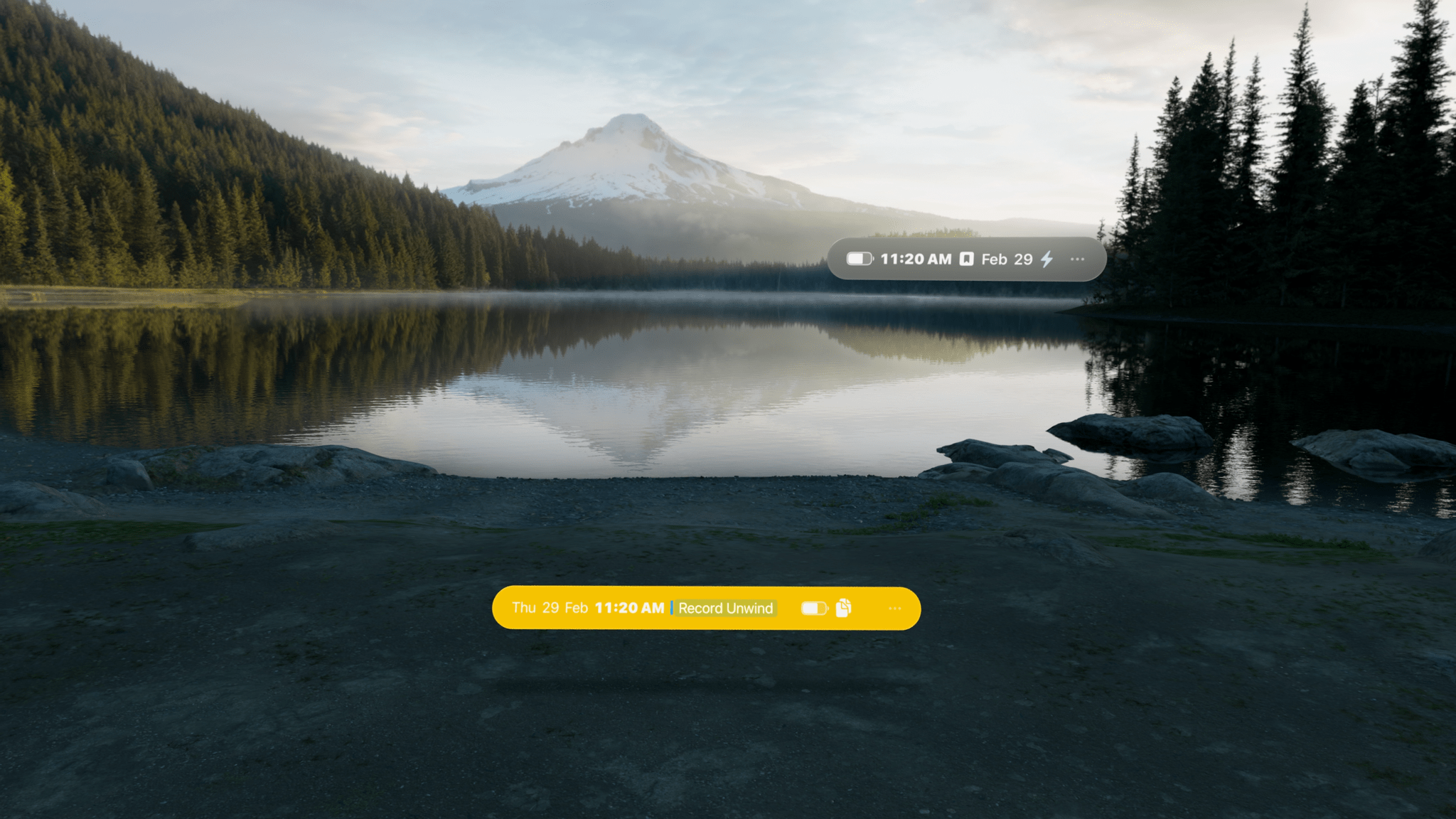

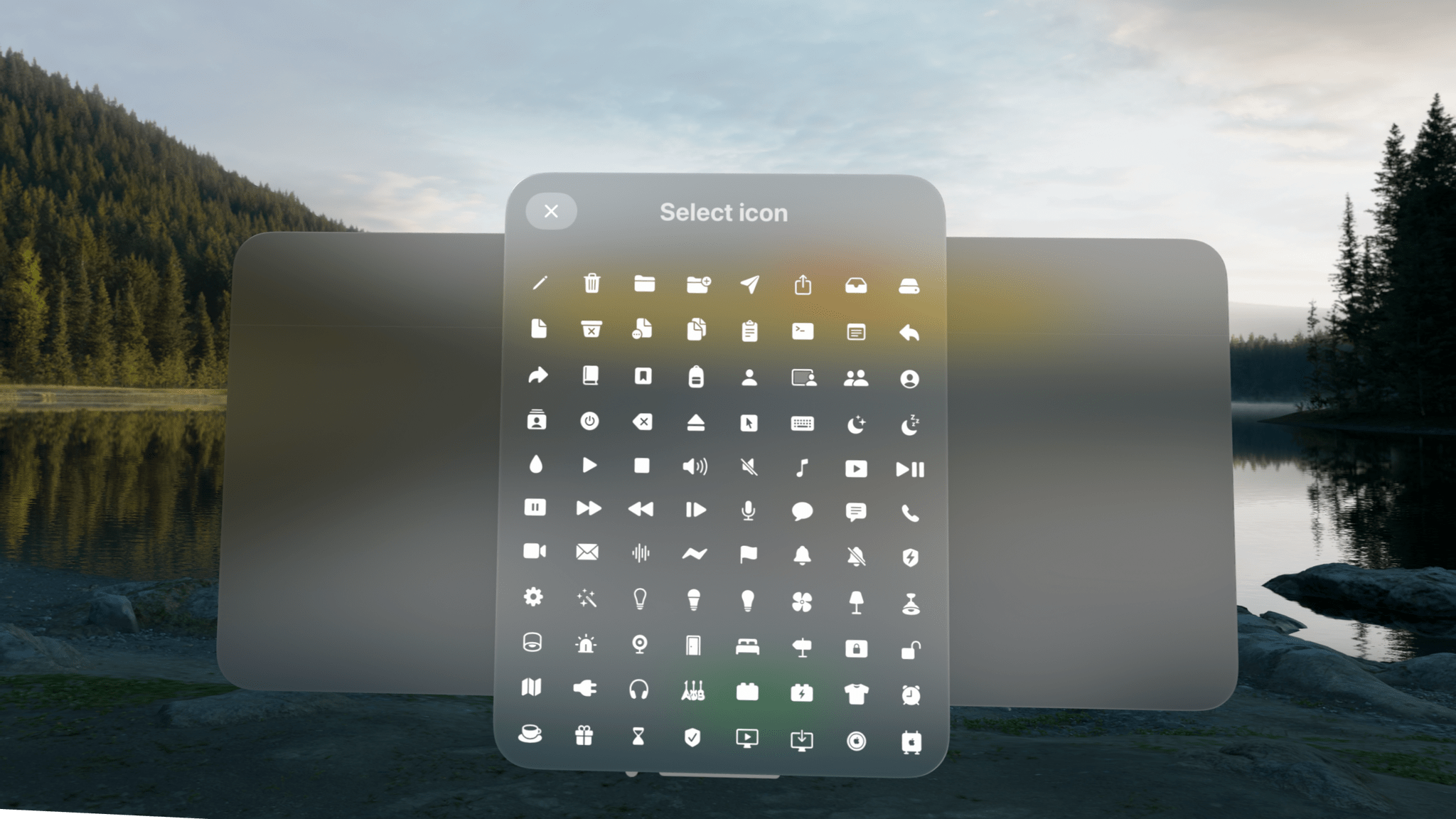

Vision Pro App Spotlight: Status Bar Builder’s Key Is Customization Combined with Simplicity

There’s an elegance in simplicity that I value. I use plenty of complex apps, but there’s a certain satisfaction in finding one that fits your needs perfectly. One such app that seems destined to claim a long-term spot on my Vision Pro is Status Bar Builder, a customizable utility that displays useful information without taking up much space.

The app allows you to build status bars, which are narrow, horizontal windows reminiscent of the Mac’s menu bar that you can place around your environment. Status bars come in three text sizes and can have no background, be translucent, or use a colored background.

Regardless of the styling you pick, status bars can include:

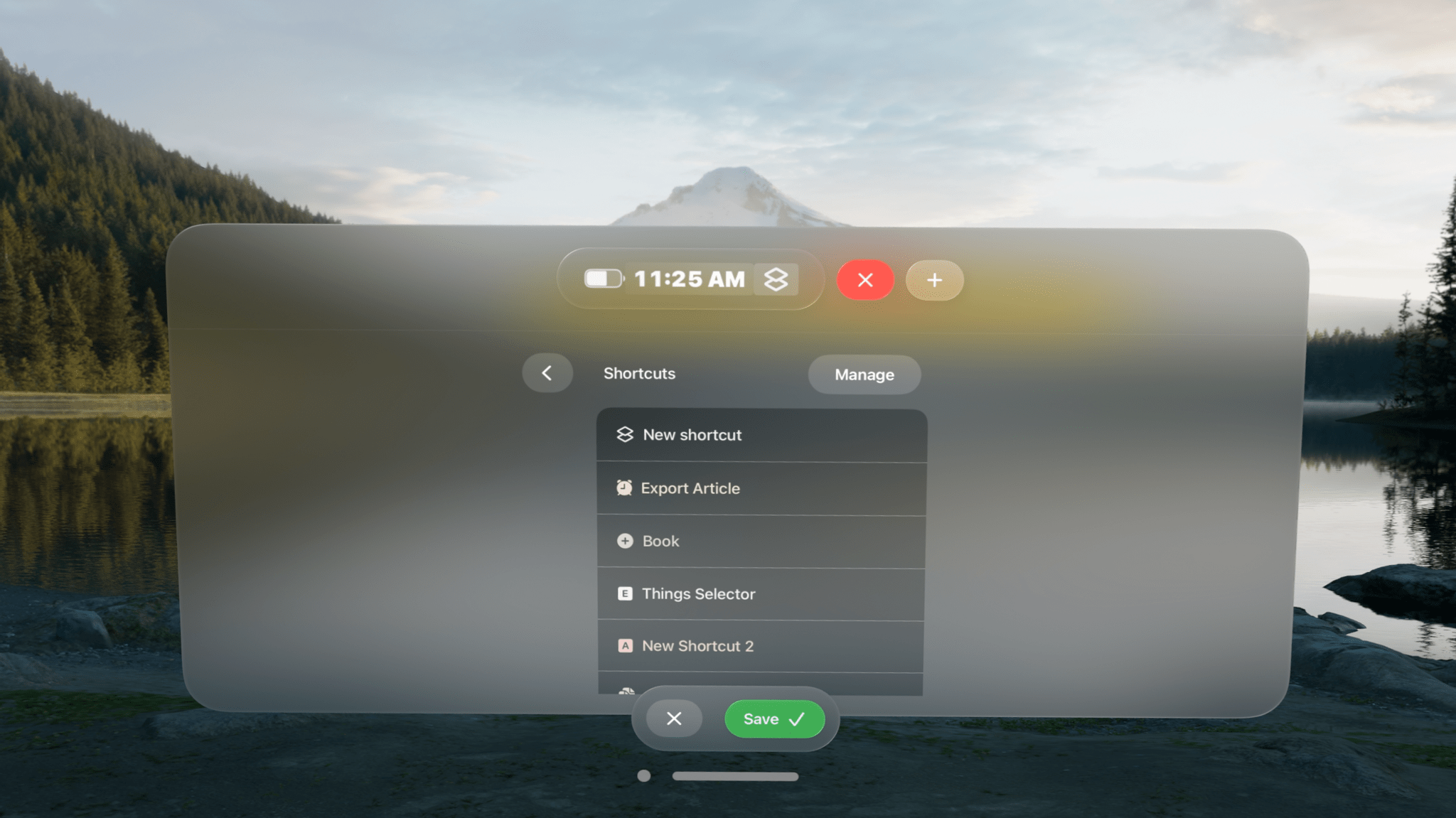

- Shortcuts

- Links

- Today’s date

- Weekday

- Numerical date

- Month

- Time

- Your calendar events

- A battery charge indicator

- A spacer

Designing status bars is easy. They’re created and stored in the app’s main window, which includes a preview of each along with buttons to open any existing status bars, make revisions, or delete them. At the bottom of the main window, there’s also a ‘New’ button to add to your collection.

You can make as many status bars as you’d like, sprinkling them around your room. There doesn’t seem to be a limit to how many items you can include in a status bar, either, but as a practical matter, if a status bar gets too long, it doesn’t look great, and some text will be truncated.

What’s more, you can add as many of each type of item as you’d like to a status bar. For Shortcuts, that means you can create a long list of shortcuts if you want. However, you’d better be able to identify them by their icons because the buttons in the status bar don’t include shortcut names or the colors you assigned to them.

Links work like a mini bookmark bar, allowing you to save frequently visited websites with a custom icon as a button in your status bar. However, like shortcuts, you’ll need a memorable icon because there are no link labels or other text to go by. Links support URL schemes too, offering additional automation options.
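To give a rough idea of what a URL scheme link could look like, here is a minimal Python sketch that builds a `shortcuts://run-shortcut` URL, the scheme Apple documents for running a shortcut by name. The helper name `shortcut_run_url` and the shortcut name "Writing Workspace" are hypothetical, and I'm assuming Status Bar Builder passes a link like this straight through to the system rather than describing how the app actually handles it.

```python
from typing import Optional
from urllib.parse import quote


def shortcut_run_url(name: str, text_input: Optional[str] = None) -> str:
    """Build a shortcuts:// URL that runs the shortcut called `name`.

    `shortcut_run_url` is a hypothetical helper for illustration only.
    """
    # Percent-encode the shortcut name so spaces and symbols survive in the URL.
    url = f"shortcuts://run-shortcut?name={quote(name, safe='')}"
    if text_input is not None:
        # The scheme accepts input=text plus a text parameter as the shortcut's input.
        url += f"&input=text&text={quote(text_input, safe='')}"
    return url


# "Writing Workspace" is a made-up shortcut name.
print(shortcut_run_url("Writing Workspace"))
# → shortcuts://run-shortcut?name=Writing%20Workspace
```

Pasting the resulting URL into a link item would, under that assumption, give you a button that runs the shortcut and can hand it a bit of text, which the plain Shortcuts item doesn't appear to offer.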

There are also a few other item-specific settings available. For example, there are three sizes of spacers that can be added to a status bar, and calendar events can include the color of their associated calendars if you’d like. Plus, dates and times have formatting, color, size, and other style options. It’s worth noting that your next calendar event doesn’t include the scheduled time and events cannot be opened, both of which are things I’d love to see added to the app in the future.

Although you can overstuff your status bars with links, shortcuts, and other information, I’ve found that the best approach is to be picky, limiting yourself to a handful of items that make your status bars more glanceable. Then, if you find yourself adding more and more to a status bar, consider breaking it up into multiple status bars organized thematically. I find that works well and gives me more flexibility about where I put each.

I have to imagine that Apple will eventually release something like Status Bar Builder. It’s too easy to lose track of time in the Vision Pro. It needs a clock, battery indicator, date, and other basic data we’ve had on our iPhones since day one. Status Bar Builder fills that gap but goes one step further by adding links, calendar items, and shortcuts. I wouldn’t add a lot more to the app, but a weather item with the current conditions and temperature would be great. However, even though parts of Status Bar Builder may eventually wind up as parts of visionOS, I expect the app has a long and useful life ahead of it, thanks to the other components that go a step further than I expect Apple ever will.

Status Bar Builder is available on the App Store for $4.99.
