Intelligence

I’ve covered Apple’s work in machine learning and on-device processing over the years in my iOS and iPadOS reviews, but never as a standalone ‘pillar’ of the operating systems. The broader landscape of artificial intelligence has, however, rapidly changed in the past few years. Apple and other tech companies find themselves fighting a public perception battle against upstarts that, for better or worse, have shifted the narrative toward large language models and assistants that are far smarter than tools we’ve seen before.

For this reason, I find it necessary (and timely) to start covering Apple’s efforts in system intelligence with the attention and focus they deserve. I’ll also share my (potentially) contrarian opinion: I think Apple’s machine learning-based tools are smarter, more practical for most people, and more impactful than a lot of tech journalists give them credit for.

I’m not saying that Apple has a version of Siri on its hands that’s as impressive as ChatGPT at fetching results yet. I hope that will happen at some point, but that’s not where iOS and iPadOS are today. What I’m saying is that while a lot of us are obsessed with the new wave of chat bots, Apple has been shipping intelligent features that permeate an entire operating system and have practical benefits at scale. They may not be as flashy as asking ChatGPT to write a high school essay, but they cover a wide spectrum of commonly performed tasks – from searching the web to extracting information from photos and videos – that tons of people rely on but that don’t make for interesting headlines on tech blogs.

It’s essential to cover Apple’s evolution in this field, especially in preparation for visionOS and Vision Pro, which will build on the foundation established by the intelligent features of iOS and iPadOS. Let’s take a look.

Visual Look Up

Apple has been chipping away at Visual Look Up – the feature of the Photos app that lets you find out more details about objects and places contained in pictures – since its introduction with iOS 15 in 2021. In iOS 17, Visual Look Up is getting its biggest update yet thanks to a variety of newly supported content types and integration with videos.

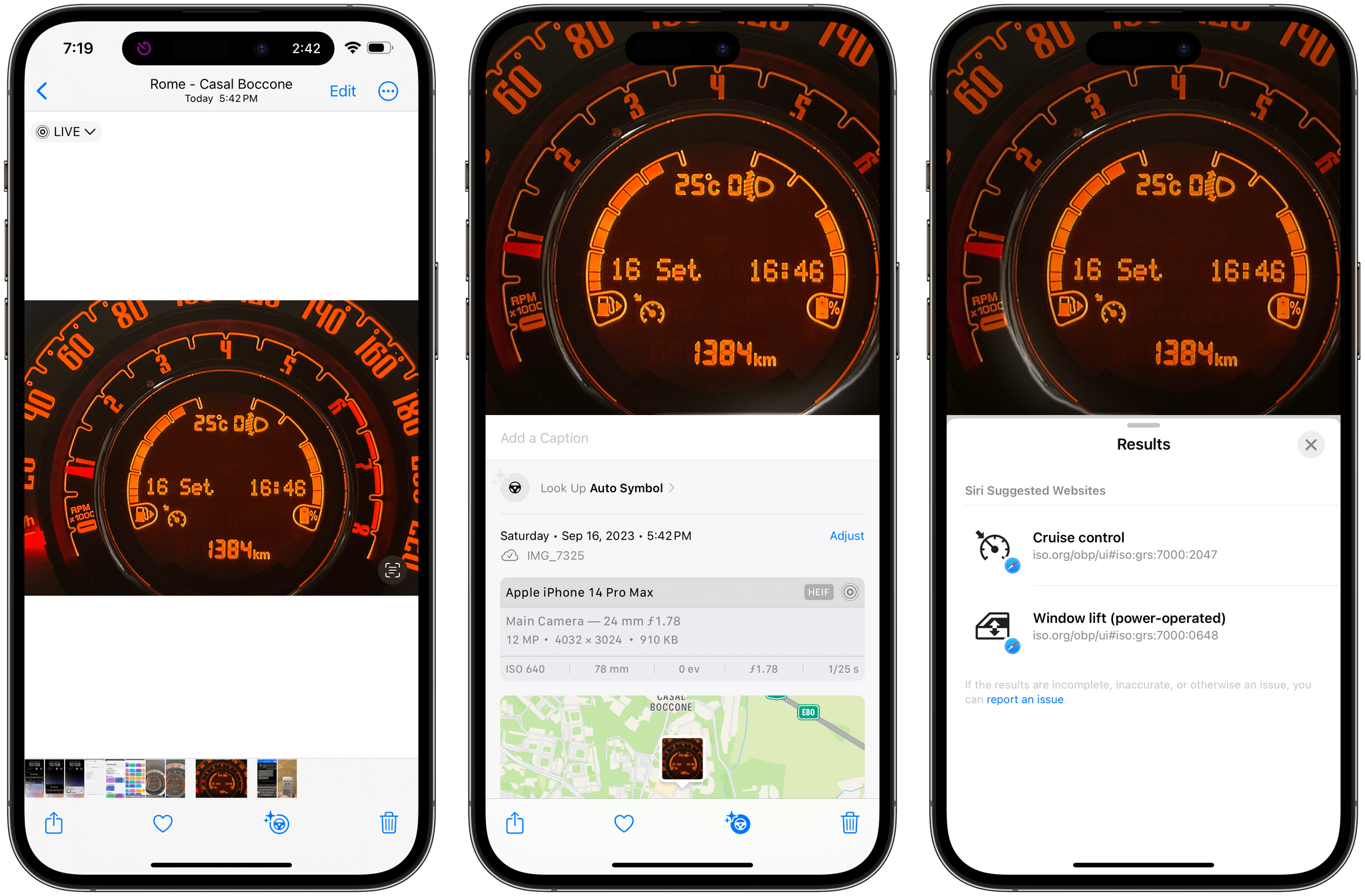

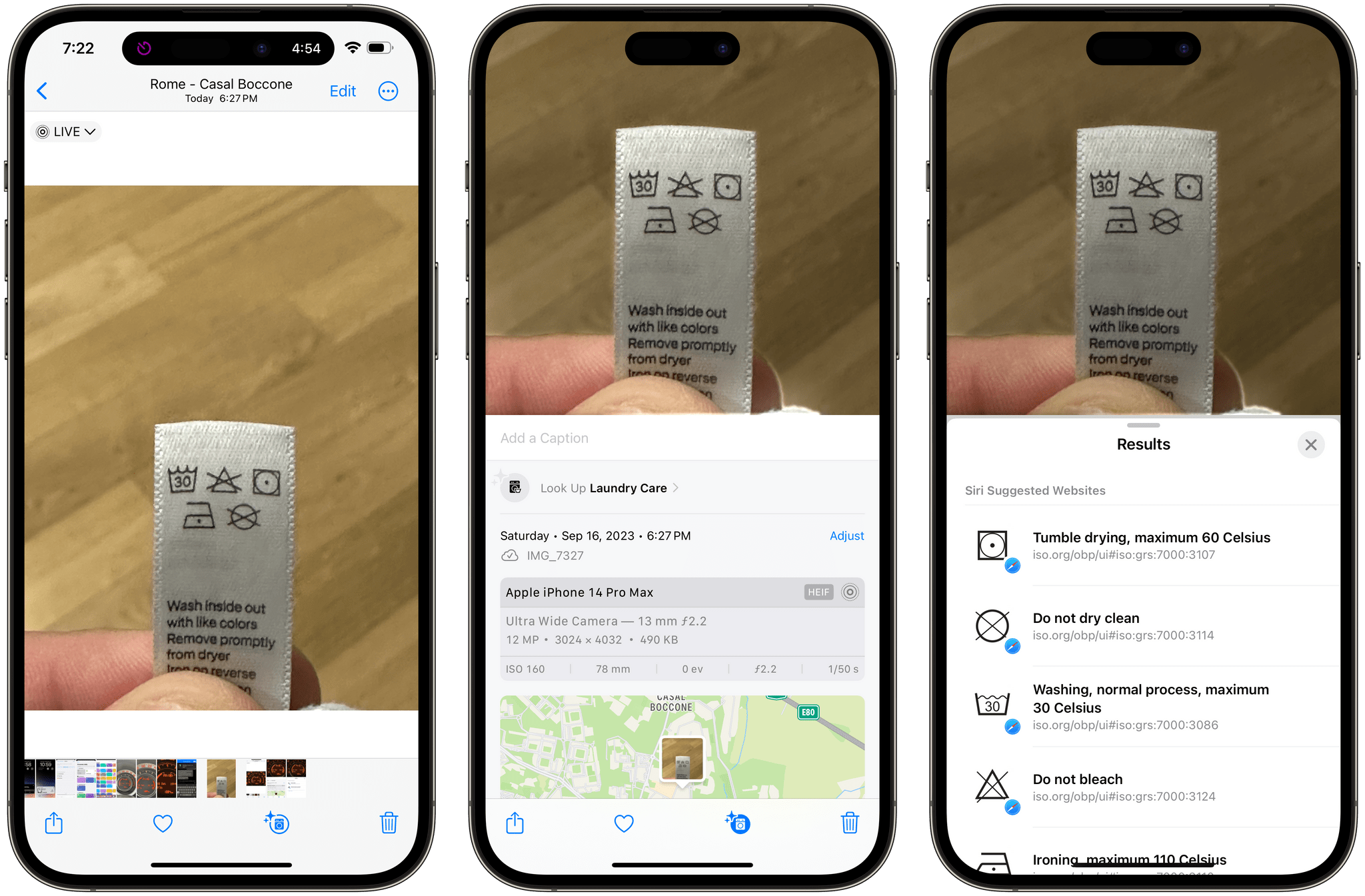

My favorite addition to Visual Look Up is recognition of laundry care and auto symbols. We’ve all been there: you check the tag of a nice shirt you want to put in the laundry, and you don’t know what any of the symbols mean. Or you’re driving, and suddenly an icon on your dashboard turns on, and you have no idea what’s going on and whether that requires your immediate attention or not. Well, now iOS can recognize all of these ISO-standardized symbols and tell you what they indicate without having to install a third-party app from the App Store.

Take a picture of a laundry tag or a symbol in your car, open it in the Photos app on iOS 17, and you’ll see a new icon at the bottom of the picture that tells you whether laundry care or auto symbols were found in the image. Tap the ‘Look Up’ button, and you’ll see a summary of what the symbols mean, with a link to open the original page on the ISO.org website.

When I say that Apple’s efforts in this field have useful, pragmatic effects, this is what I mean. Thanks to Visual Look Up in iOS 17, I discovered two things: that a very nice shirt I have is not supposed to be tumble-dried (oops) and that my car’s passenger seat airbag symbol sometimes turns on to indicate a malfunction, which is something I need to have checked. iOS 17 does all this without any third-party app and without having to ask a chat bot, just by processing images on-device and using a neural network to understand their contents.

I have to wonder: this recognition of symbols is so good, when will it work in real time inside the Camera app without having to snap a picture first?

It’s not all perfect, though, and when things go wrong with Visual Look Up, results can be too generic, wrong, or just downright hilarious.

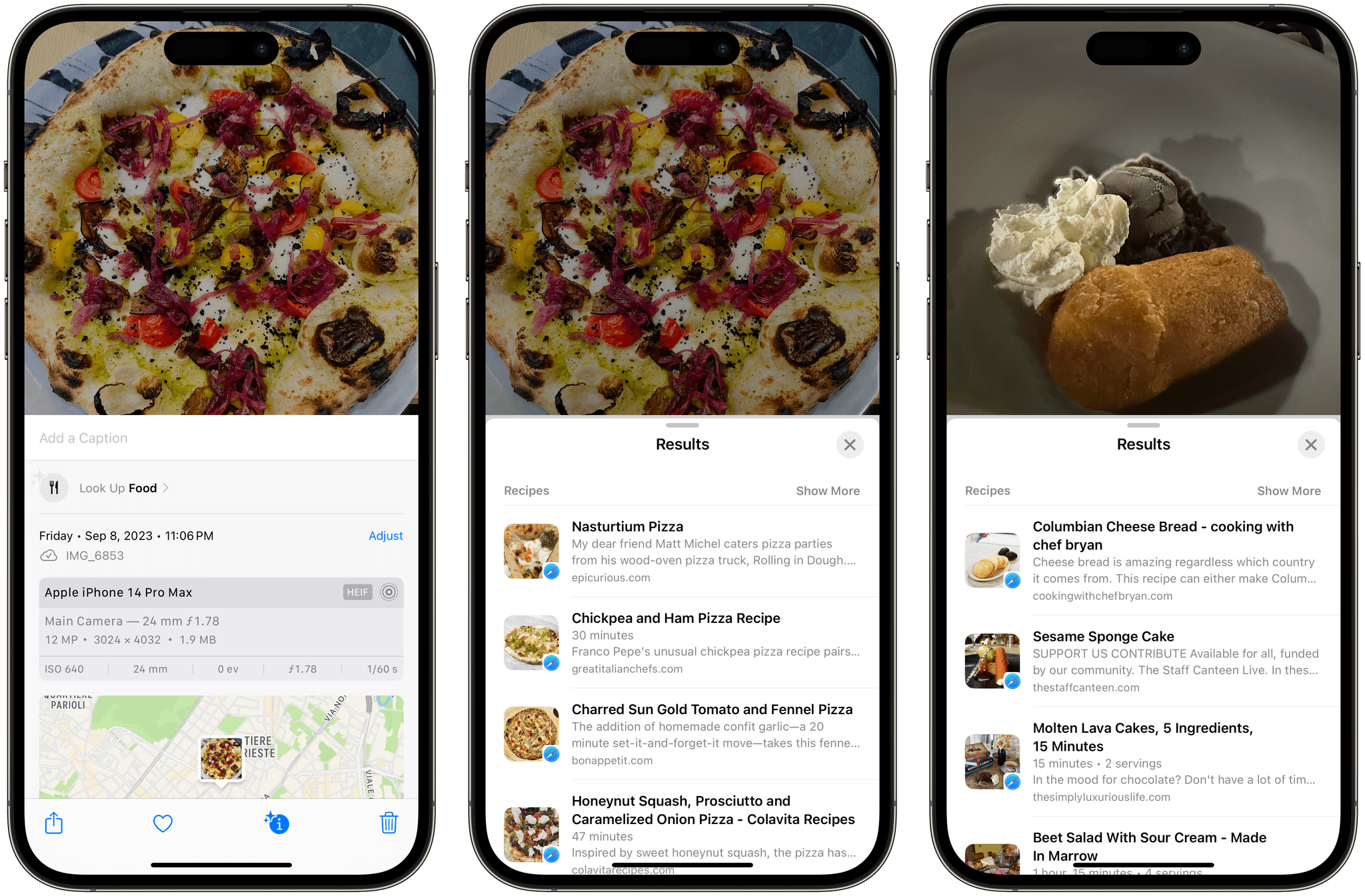

One of the additions to Visual Look Up this year is support for recognizing food in pictures and suggesting matching recipes found on the web. On paper, this sounds great: now whether you’re taking a picture of your breakfast for the ‘gram or saving someone else’s photo of a tasty meal, you have a way to find more information about it thanks to the Photos app.

Except that, well, 🤌 I’m Italian 🤌. We have a rich tapestry of regional dishes, variations, and local cuisine that is hard for humans to categorize, let alone artificial intelligence. So as you can imagine, I was curious to try Visual Look Up’s support for recipes with my own pictures of food. The best way I can describe the results is that Photos works well enough for generic pictures of a meal that may resemble something the average American has seen on Epicurious, but the app has absolutely no idea what it is dealing with when it comes to anything I ate at a restaurant in Italy.

Photos correctly recognized a Margherita pizza and seafood paella I recently had in two different restaurants. But that’s about where its success ended. Here’s a collection of recipes Photos suggested for different pictures categorized as ‘Food’:

- Rigatoni alla Carbonara had suggestions for ‘One-pot creamy squash pasta’ (dear Lord) and ‘TikTok Viral One Pot French Onion Pasta’ (someone please save me)

- A traditional Neapolitan Babà had links for ‘Colombian cheese bread’ and ‘Beet salad with sour cream’

- A delicious pizza I had in Lecce with Burrata and Capocollo di Martina Franca was boringly recognized as ‘Prosciutto’

- Paccheri with seafood and potato purée was recognized as ‘Squid and ‘nduja agnolotti with chorizo’ or ‘Black truffle fettuccine’

- Squid ink fettuccine with shrimp was recognized as ‘Oysters with cucumber’

You get the idea. I think this feature of Look Up should have waited, or perhaps it shouldn’t exist at all. What’s the point of surfacing wrong suggestions that point to a completely different recipe? If the system is not smart enough to recognize a particular meal (and likely will never be), it’s understandable: local cuisine is challenging to explain even for locals. Boiling it down to viral TikTok sensations or pasta with “creamy sauce” is, frankly, kind of useless.

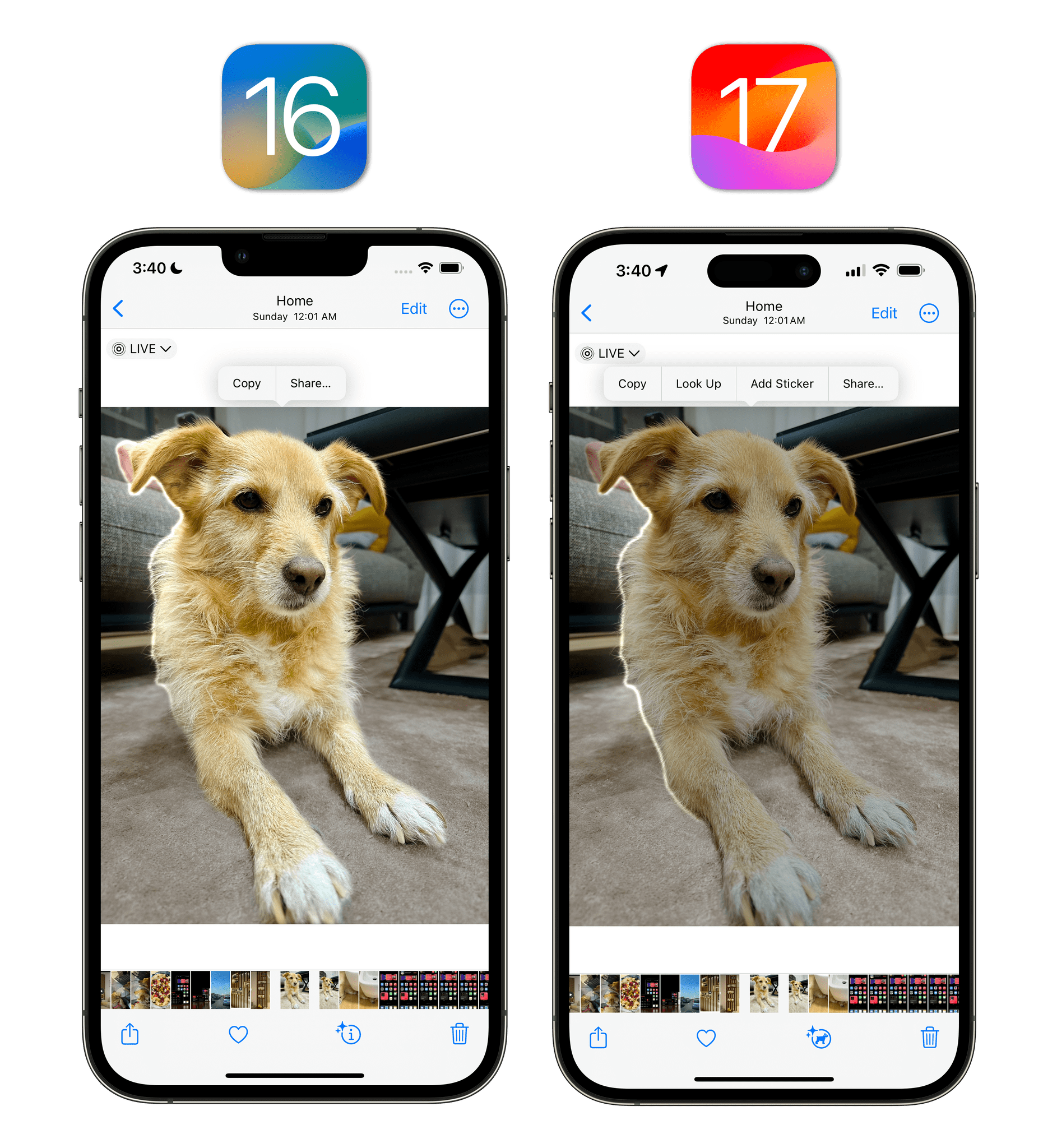

In addition to finding Visual Look Up details in a dedicated page, iOS 17 also brings up a ‘Look Up’ button when you lift an object from a photo; previously, you could only copy or share a lifted subject.

iOS 17 also lets you look up information about objects and pets that appear in paused video frames. When watching a video featuring a very good dog, for instance, hit the pause button, and the ‘i’ icon of Visual Look Up will instantly turn into a dog icon:

Visual Look Up now works in paused video frames, too. In this video, you can see when it recognizes Ginger.

Lastly, Visual Look Up this year should also offer support for recognizing storefronts and looking up Maps details about them. I tried with a variety of storefront pictures in my library taken both in Italy and abroad, and I couldn’t get this functionality to work.

Spotlight

There are some nice improvements on the visual front in Spotlight this year, but, more importantly, the biggest change comes in the form of a tighter integration with App Shortcuts.

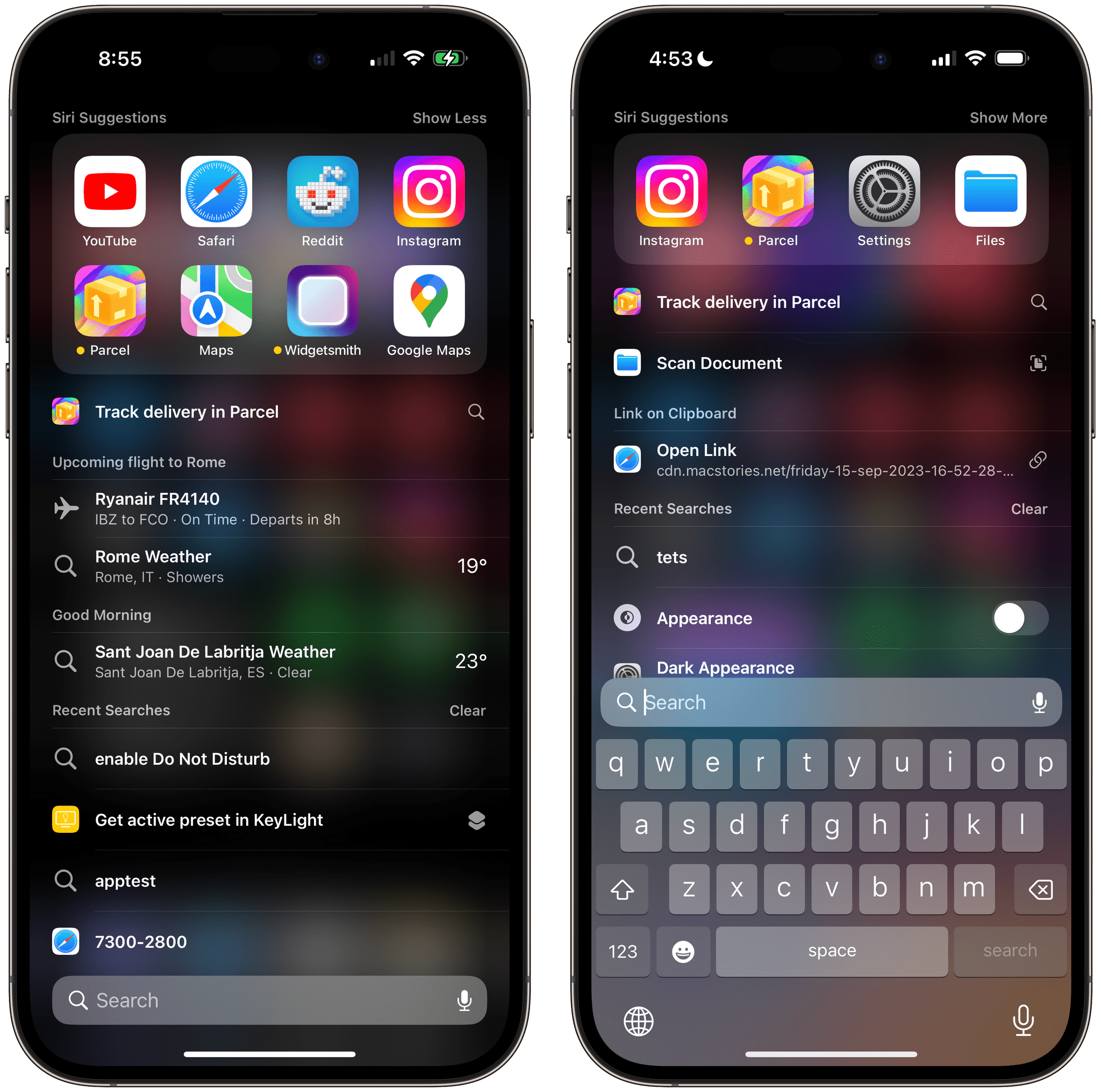

From a design perspective, the best way to describe Spotlight in iOS 17 is that everything is a bit more rounded and colorful compared to iOS 16. Certain types of results that were already supported before (such as weather queries and calculations) now appear as colorful, rounded cards that take on the appearance of the app that provides them. Take, for instance, the Weather snippets:

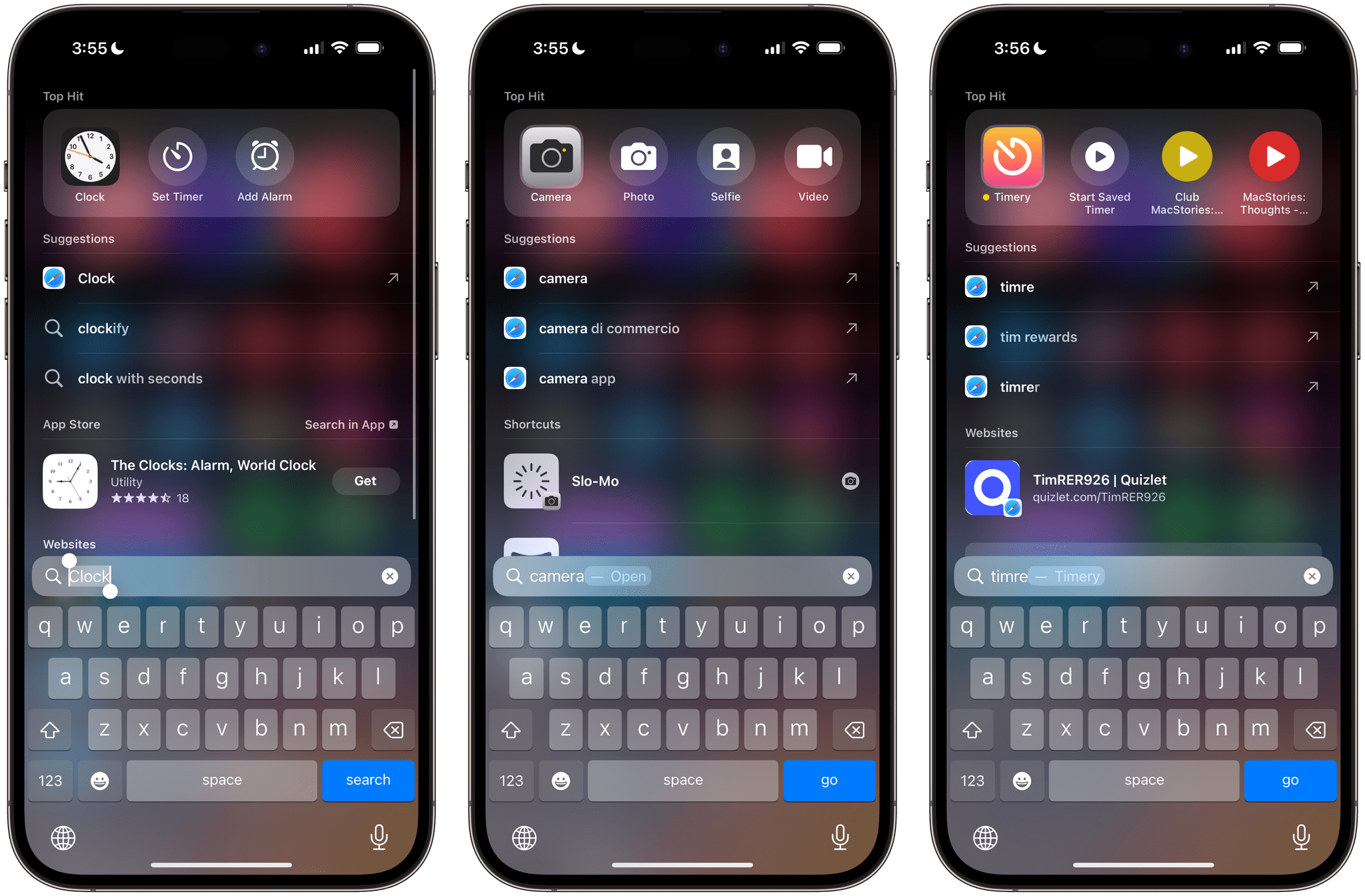

The more colorful appearance with larger iconography and app-specific themes also applies to Shortcuts results in Top Hits.

There is, however, a bigger, overarching narrative at play in the updated Spotlight: the more prominent role of App Shortcuts in iOS 17.

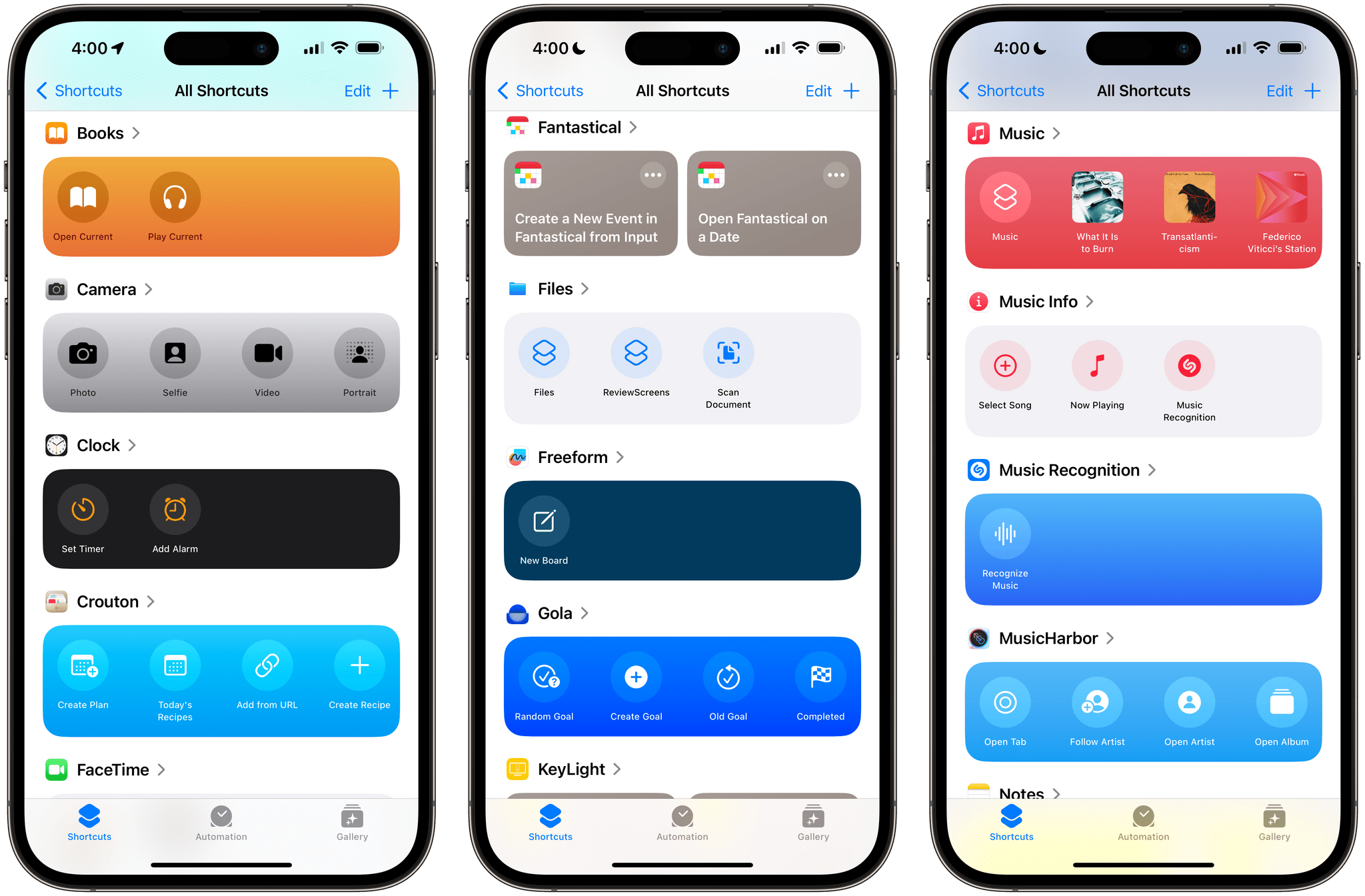

Launched last year as an easier way for people to get started with the Shortcuts app, iOS’ App Shortcuts (I know, these names are so confusing) have now turned into top-level results that show up right next to app names when you search in Spotlight. As a result, when you look for an app in iOS 17, in addition to the icon to launch the app, you’ll be presented with useful shortcuts to reopen a specific screen or document in that app or perform a quick action with it.

As you can see from the image above, Apple has fully embraced this new Spotlight integration with App Shortcuts: nearly every built-in app in iOS and iPadOS 17 offers a selection of shortcuts that appear as Top Hits results and also populate the Shortcuts app with a new, custom design.

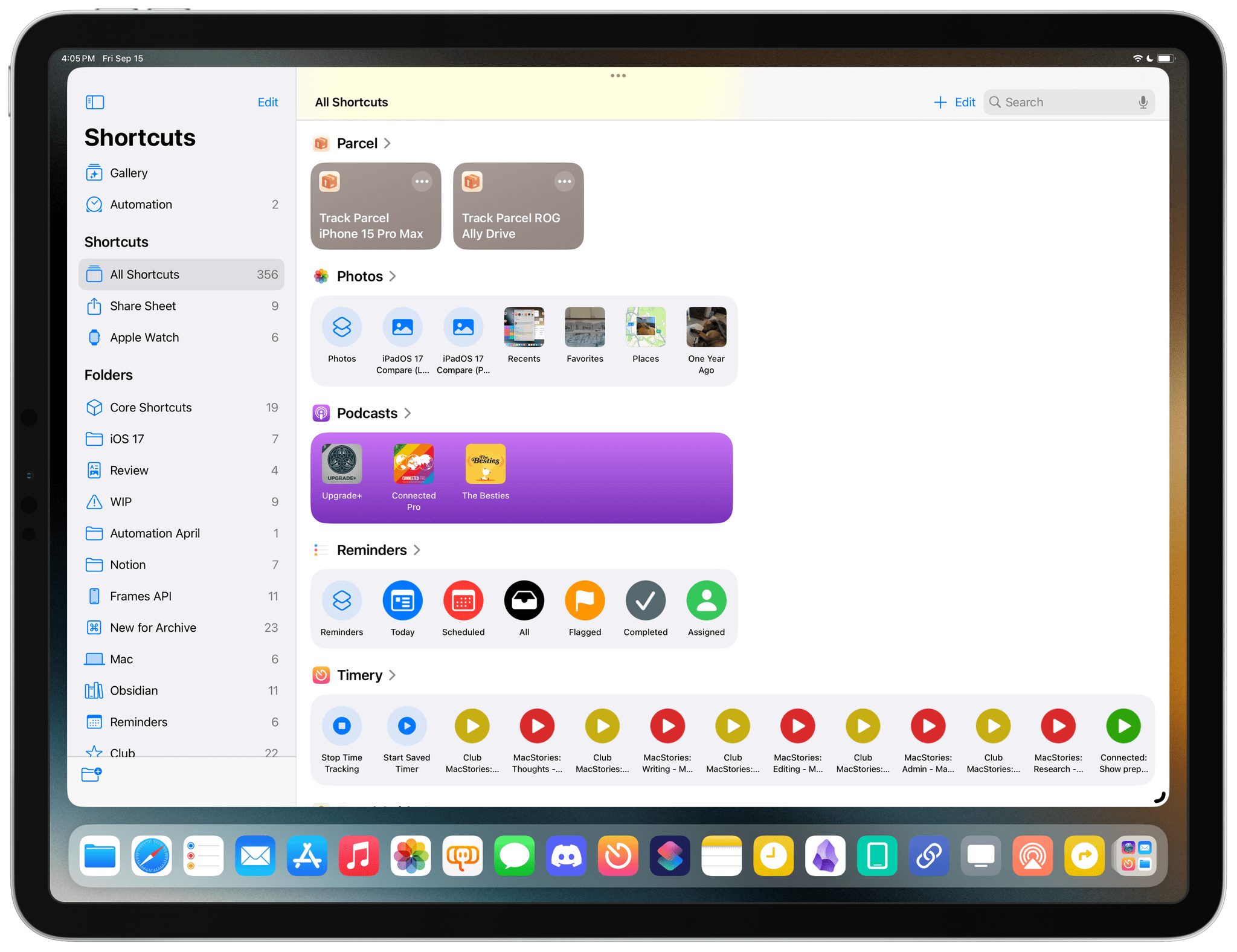

The same App Shortcuts have a new look in the Shortcuts app. Fantastical, which hadn’t been updated for iOS 17 when I captured this image, was still using the old style of App Shortcuts (center).

Music and Podcasts have personalized shortcuts for recently played albums and shows, respectively; Clock lets you quickly set a timer or create an alarm; Notes lets you quickly jump back to a recently viewed note or create a new one; Reminders has quick links to the Today and Scheduled lists. On an iPhone 14 Pro Max, up to three shortcuts at once can be displayed next to the app’s main icon in Spotlight; on an iPad, you can see up to five shortcuts next to the main app result.

App Shortcuts in Spotlight are one of my favorite additions to iOS and iPadOS this year because they provide an even smoother on-ramp to Shortcuts for millions of people. Even if you don’t care about the Shortcuts app at all, they’re genuinely useful launchers that offer valuable shortcuts into app screens and functions. Third-party developers can, of course, integrate with this new flavor of App Shortcuts in Spotlight and can even design custom ‘platters’ for the Shortcuts app and Spotlight to have a specific background color underneath their shortcuts.

Last year’s App Shortcuts felt like a way to introduce people to the Shortcuts app; in iOS 17, they’re still that, but they’ve also graduated to a core search feature that makes Spotlight faster, more useful, and better integrated with apps. Whoever thought of this feature at Apple deserves a raise.

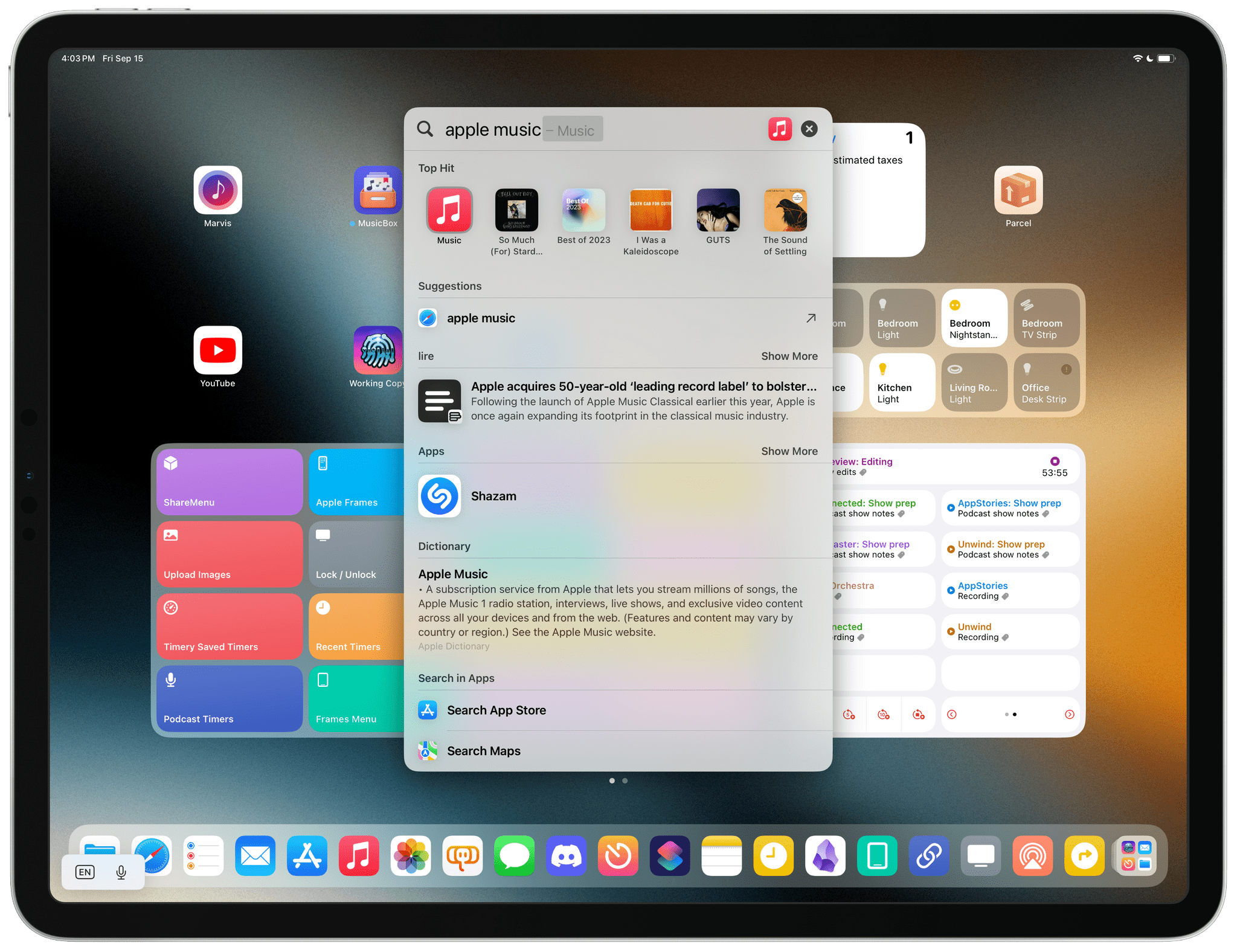

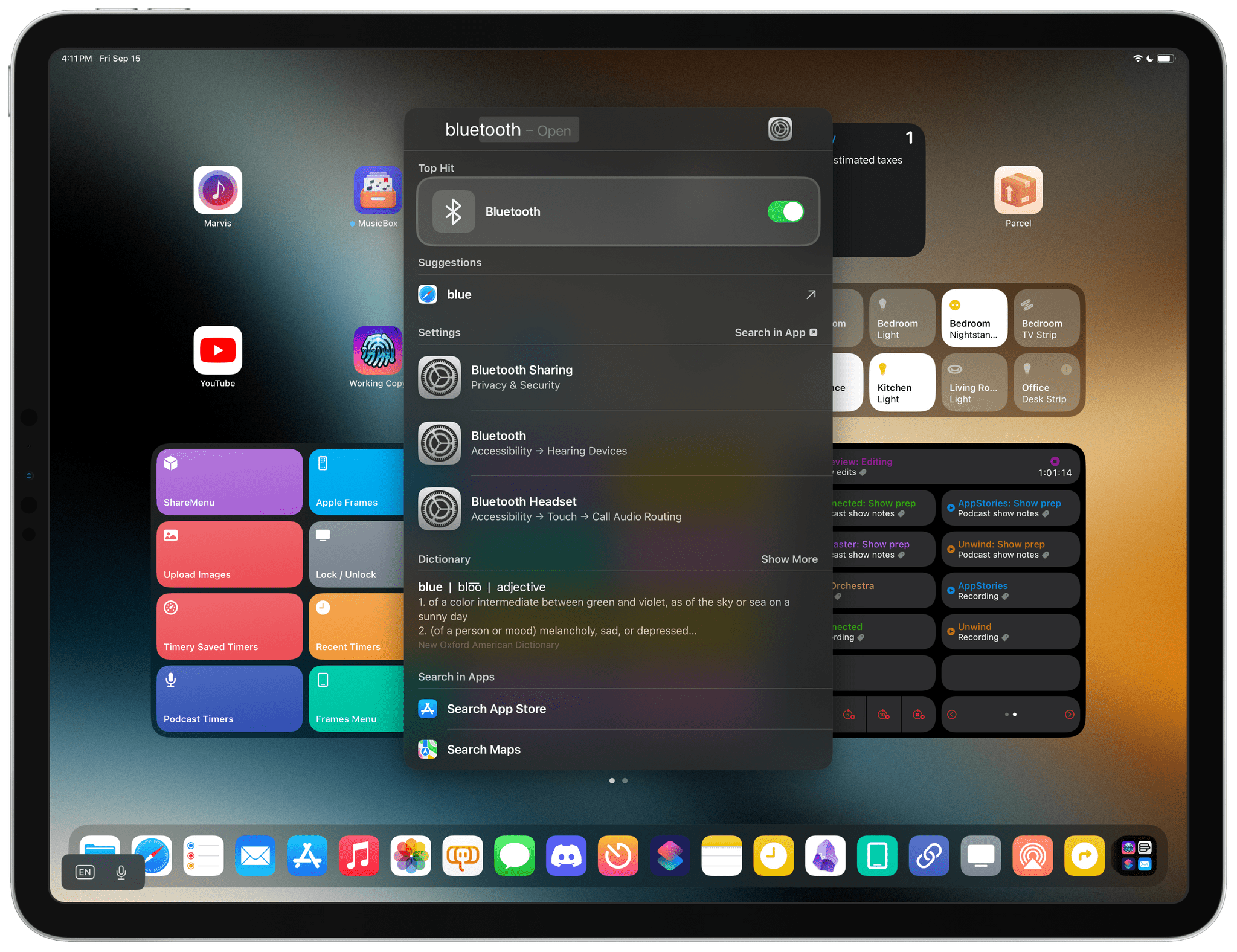

Technically speaking, these are not App Shortcuts, but Spotlight for iOS 17 also lets you toggle certain settings (such as Wi-Fi and dark mode) inline, without having to open the Settings app. Search for ‘Settings’ or specific commands such as ‘Do Not Disturb’ or ‘Bluetooth’, and you’ll be able to toggle those settings directly from Spotlight with one tap.

Spotlight should now be capable of finding scenes, people, and activities inside your videos with a dedicated video playback UI; no matter how much I searched, these video results never appeared for me.

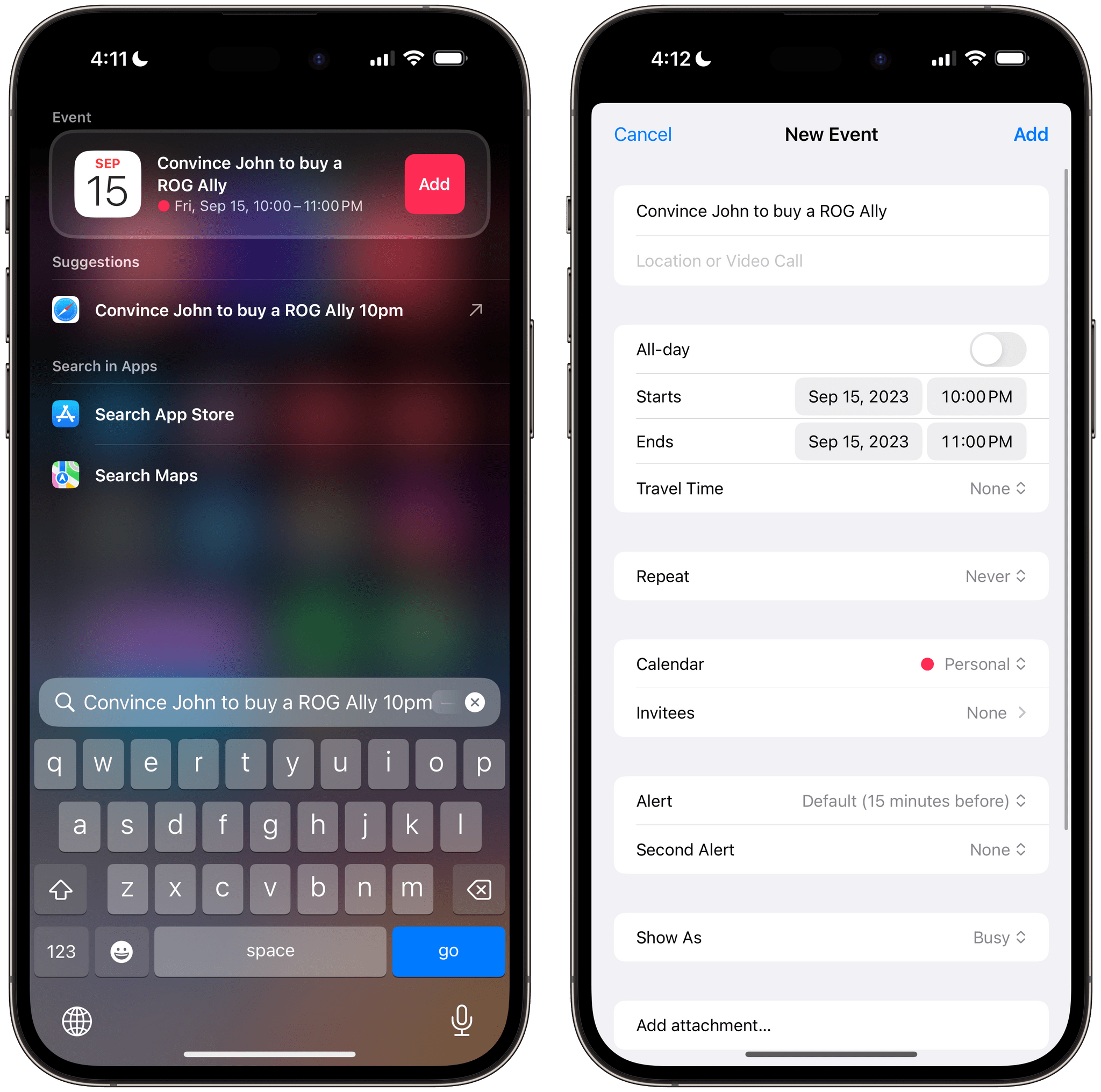

Spotlight has long offered integration with data detectors such as street addresses and phone numbers, and this functionality has been enhanced in iOS 17. Now you can create calendar events with natural language input in Spotlight. Type something like ‘Call John today at 10pm’ and you’ll get a special result with an ‘Add’ button to bring up a Calendar screen with pre-filled information for the event. In a lovely touch, the ‘Add’ button is tinted with the color of your default system calendar.

The one negative aspect of Spotlight for me this year has been its performance on iPadOS. Alas, invoking search from an external keyboard on iPad still isn’t as fast and reliable as it is on macOS: sometimes apps that I have installed on my iPad Pro don’t come up at all as results in Spotlight; other times they take a few seconds to populate the search box, or their App Shortcuts don’t load. I continue to wait for the day when Spotlight for iPad will be as immediate as its Mac counterpart.