I’m going to be direct with this story. My 30-minute demo with Vision Pro last week was the most mind-blowing moment of my 14-year career covering Apple and technology. I left the demo speechless, and it took me a few days to articulate how it felt. How I felt.

It’s not just that I was impressed by it, because obviously I was. It’s that, quite simply, I was part of the future for 30 minutes – I was in it – and then I had to take it off. And once you get a taste of the future, going back to the present feels…incomplete.

I spent 30 minutes on the verge of the future. I have a few moments I want to relive.

The Carousel

This device isn’t a spaceship, it’s a time machine. It goes backwards, and forwards…It takes us to a place where we ache to go again. It’s not called the wheel; it’s called the Carousel. It lets us travel the way a child travels: around and around, and back home again, to a place where we know we are loved.

I was reminded of this quote by Don Draper, the fictional advertising executive played by Jon Hamm in Mad Men, during my demo of spatial photos and videos with Vision Pro. I was transported to a place in time where I didn’t originally belong, and yet: it felt like I was there again.

Let me back up.

After putting on the Vision Pro headset (more on the setup process and onboarding experience below; this story is not in chronological order) and getting familiar with the Home View and some built-in apps, I was told to open Photos. The first few shots in Apple’s gallery were “regular” pictures that I was able to view in a Photos window virtually pinned to the wall in front of me in the room I was in at Apple Park. With a single “pinch tap” gesture, I was able to make the Photos UI go away and check out photos in full-screen. I then scrolled to the next item (again, more on gestures below) and I was suddenly staring at a panorama of Iceland that wrapped all around me. The room – which I could still see thanks to Vision Pro’s video passthrough – dimmed and it felt like I was standing there, where the pano shot was taken.

We then switched to a panorama of the Oregon coast, which wrapped around me in the same way as I sat on Apple’s couch with two people guiding me through the demo. At that point, I said I felt like I was staring at a realistic painting in front of me, and I don’t think I was able to convey what I meant in that moment. Now I know what I wanted to say: I felt like the man standing on the cliff in Caspar David Friedrich’s Wanderer above the Sea of Fog. The illusion was so credible, and the image quality of Vision Pro’s two 4K displays was so high, that for a moment I did feel like I was alone, staring at an indefinite expanse stretching toward the horizon. And as I will repeat many times throughout this story: I keep thinking about how nice that felt.

Then we moved on to spatial photos and videos. The first scene was a static 3D photo of kids eating a birthday cake. It’s hard to describe what these feel like in an article, but I’m going to try. Imagine a little diorama in front of you, only instead of a cartoonish environment, it’s a realistic 3D reconstruction of a scene. A spatial photo is a fully three-dimensional scene where you can get closer, peek around corners, and almost “step” into it as it was captured, frozen in time. I thought it was remarkable, but then we moved to a spatial video of the same scene.

Once the video started, I was no longer looking at a static capture: it’s almost like I was re-witnessing a moment in time, saved and archived so I could forever loop it before my eyes. The kids were laughing and everybody was having a good time. At first I sat there, sort of unsure what to do. I’m fully aware that what I’m writing – without screenshots or videos to prove it – may sound extremely silly. But like I said, I’m just recalling how I felt. And for a second, I did feel petrified – like I stepped into someone else’s memories and didn’t want to disturb them. The quality of the 3D capture and model is that good.

It’s only when I was told I could move “around” the video that I realized I didn’t have to sit back and watch. So I got closer to a memory, which is not a sentence I thought I’d type on MacStories in 2023. And when the scene switched to another video of a group of friends just chilling around a bonfire at night and laughing together, I did it again. As the memory unfolded, I got closer to them, looked around, listened to them. I was a silent participant in someone else’s memory.

And that’s when it hit me. Spatial video capture isn’t just a fascinating piece of technology (which it absolutely is). It’s a time machine. Years from now, when this technology is more affordable and capturing spatial photos and videos is possible on an iPhone rather than a headset[1], how much would I be willing to pay to relive, even for just a few seconds, a moment when my dogs were younger and playing together? How much to be “around” my grandma again? If I was given the ability to witness, with high fidelity, an old moment of happiness with my friends, how could I say no?

As Don Draper said, nostalgia is delicate – but potent. I don’t know if I was supposed to feel this during my demo, but here we are. Spatial video was the most delicate moment of my initial experience with Vision Pro, and the one that left the strongest impact on me.

The Spatial Computer

I’ve long been intrigued by the idea of using virtual reality as a multiplier for productivity. If you’re in a virtual environment, you’re no longer constrained by the physical limitations of your desk when it comes to spawning windows in front of and around you. That’s why a few months ago I purchased a Meta Quest 2 VR headset: as I covered on my podcast Connected, I wanted to understand what it would feel like to get work done with, effectively, an infinite combination of virtual PC monitors at my disposal. And after trying a handful of Vision Pro apps and its computer experience for a few minutes, I believe that Apple is going to outclass its competition in terms of visual fidelity, design clarity, and interactions with a 1.0 product.

The key aspect to understand about Vision Pro is that, once you put it on, the first thing you see is not a virtual environment: it’s your surroundings. Using video passthrough, Vision Pro keeps you grounded in your reality; as we heard last week, this was one of the underlying design principles of the product. When I put it on and pressed the Digital Crown[2], I just continued seeing what was around me. I did not have to scan my room at any point during the demo; the perspective of what I was seeing inside the Vision Pro was not any different from what I normally see.

It may sound trivial, but consider all the work and machine learning tech that needs to go into making sure video passthrough feels like looking around a room with your own eyes while avoiding latency, image distortion, or visual glitches. Vision Pro’s cameras are not placed at eye level; and yet, wearing it felt like I was just looking around the room. I didn’t experience any kind of motion sickness during the demo.

The one thing I’ll note about video passthrough is that it doesn’t quite match the sharpness of real life as seen from your own eyes.[3] I could tell it was a video feed, but it was a high-quality 4K one. The quality of Vision Pro’s video passthrough was leagues beyond anything I ever experienced with similar modes on the Quest 2 or PSVR 2. It was in full color, refreshing at 90Hz, and it didn’t have the chunky pixels seen on the Quest 2.[4] So yes, I could tell it was a video of my surroundings, but it was a great one; it instantly felt like a great compromise to stay present and aware of what and who was around me.

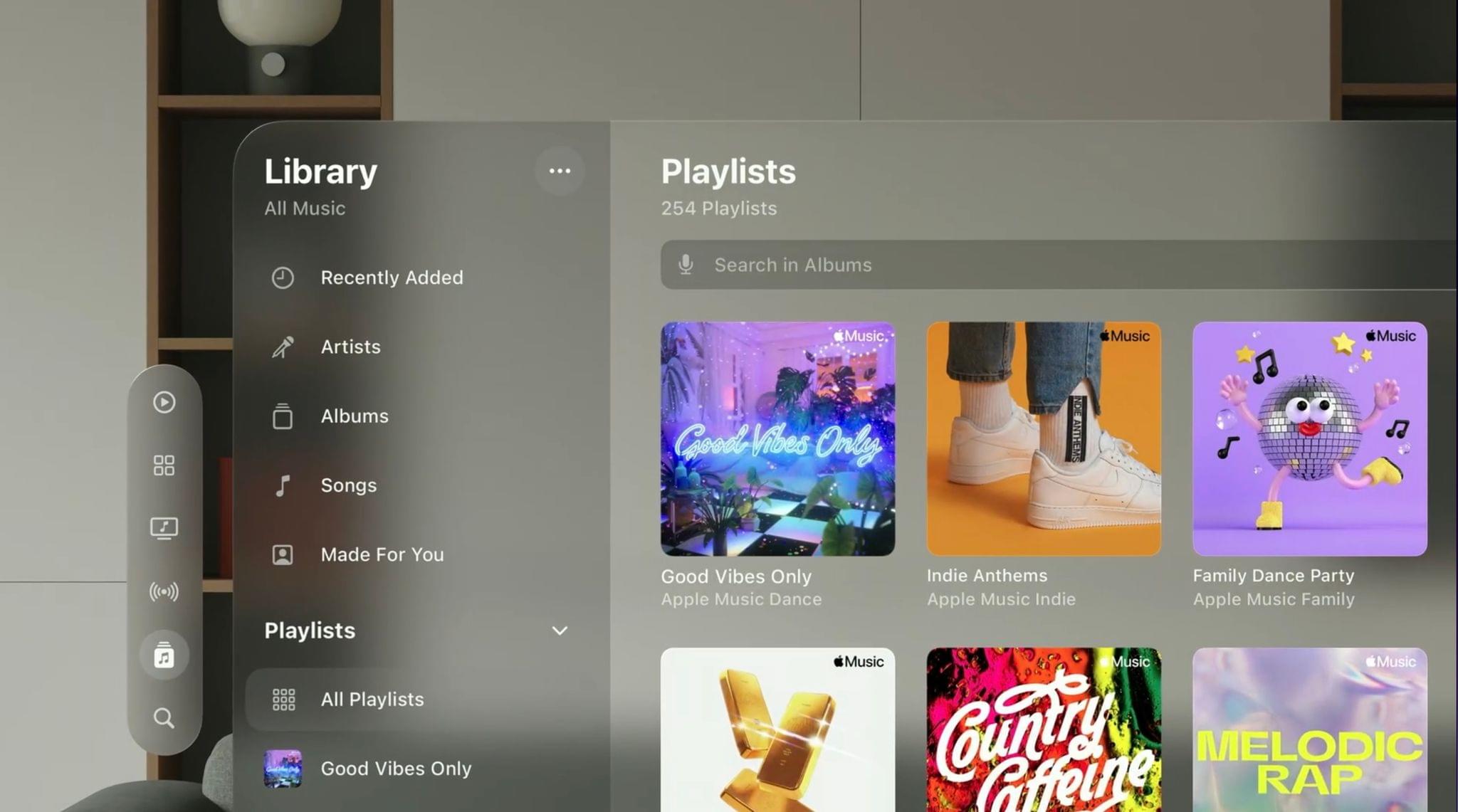

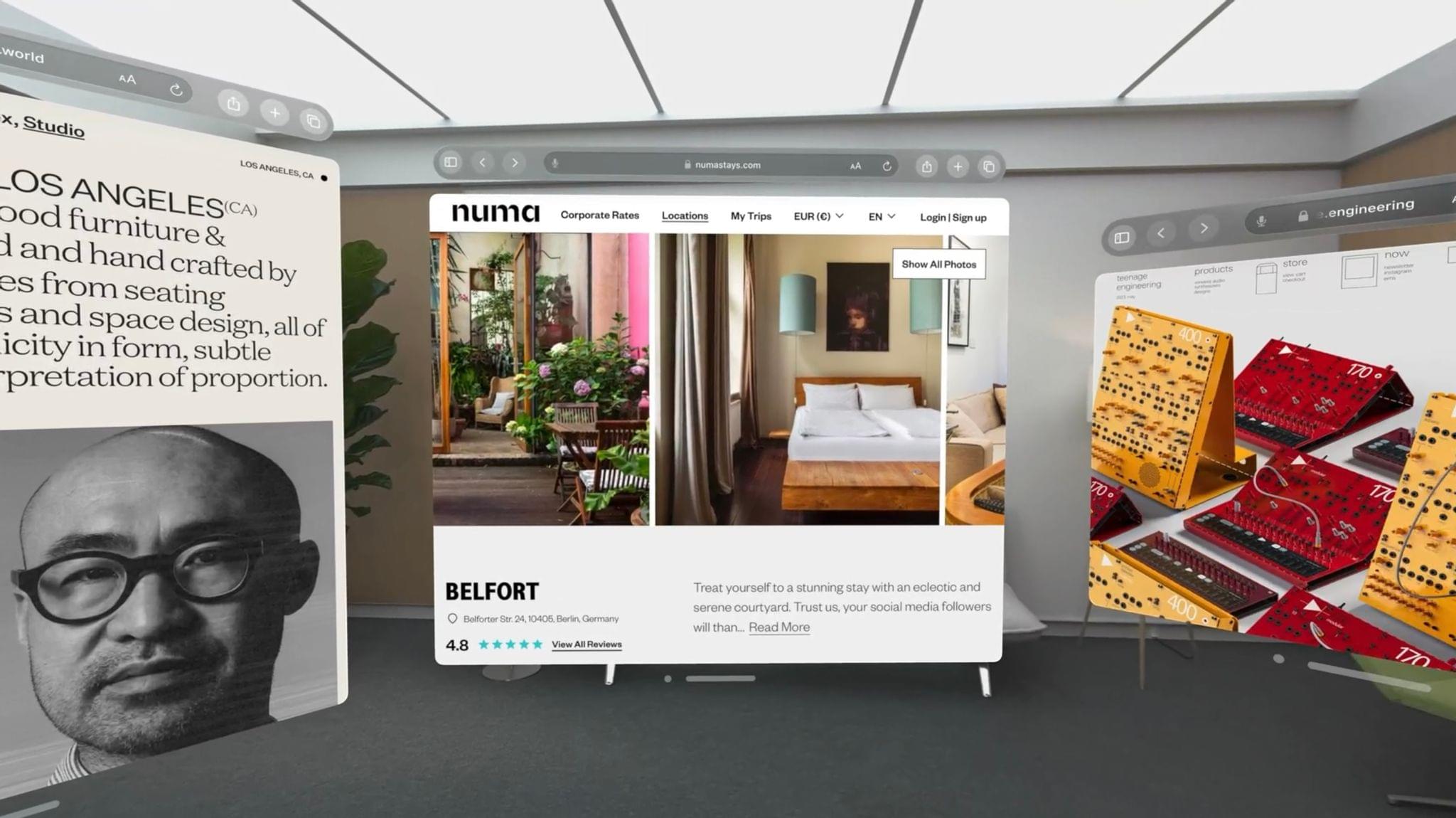

I then invoked the Home View (which is the equivalent of an iOS/iPadOS Home Screen and ironically similar to my beloved PS Vita’s one), looked at Safari, opened the app, and that’s when I realized: this is a computer. I wasn’t just dipping in and out of augmented reality for a few minutes at a time, like I would with any ARKit experience on my iPhone; I could spend time in it and use apps, like I would on an iPad – but without the limitations of a 12.9” display.

There are several things to unpack here, and I’ll try my best to recall all the thoughts I had during the demo.

Eye and hand tracking on the Vision Pro were remarkable and, again, vastly superior to their Quest 2 counterparts.

For the first minute I was in the Home View, I was paying attention to how eye tracking worked: I was thinking about it to see how it performed the same way I thought about touch control on the original Nintendo DS or multitouch on the iPad. But the thing is, after a couple of minutes, the novelty wears off and this new input method becomes natural. Of course it should work like this, I started thinking. And it was impressive: with no discernible latency, when I was told to find Safari, I just looked at the icon; when I had to switch from the grid of apps to the Environments tab (more on this below), I looked at the tab bar on the left side of the Home View, and it expanded into a sidebar. Once the sidebar was shown, scrubbing through a list of items was simply a matter of…looking up and down.

Like Apple’s other innovations in input systems, what I’m saying is that eye tracking disappears behind the scenes very quickly. After a few minutes, I just wasn’t thinking about the fact that my eyes were being used as a pointer anymore, which was wild.

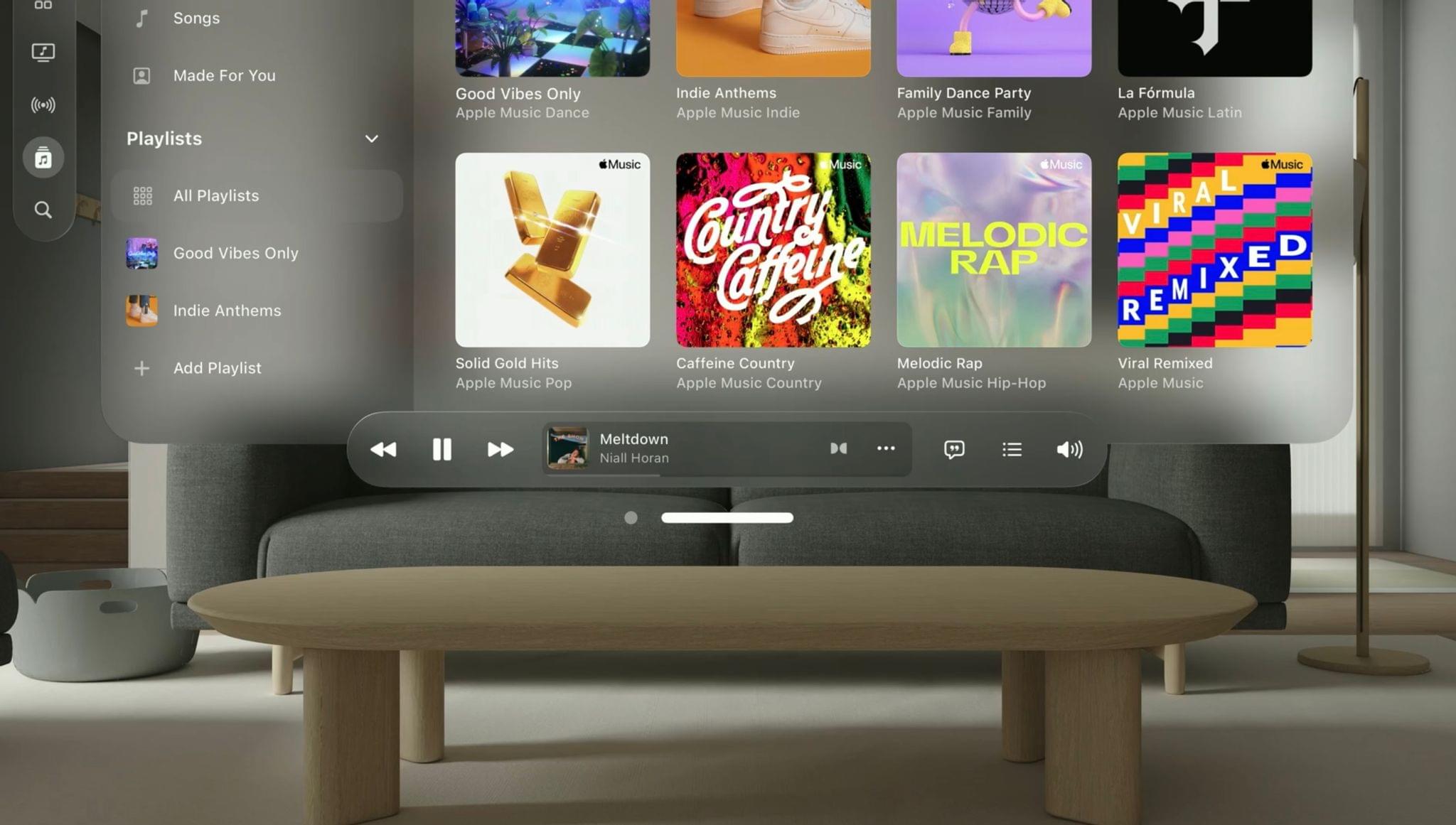

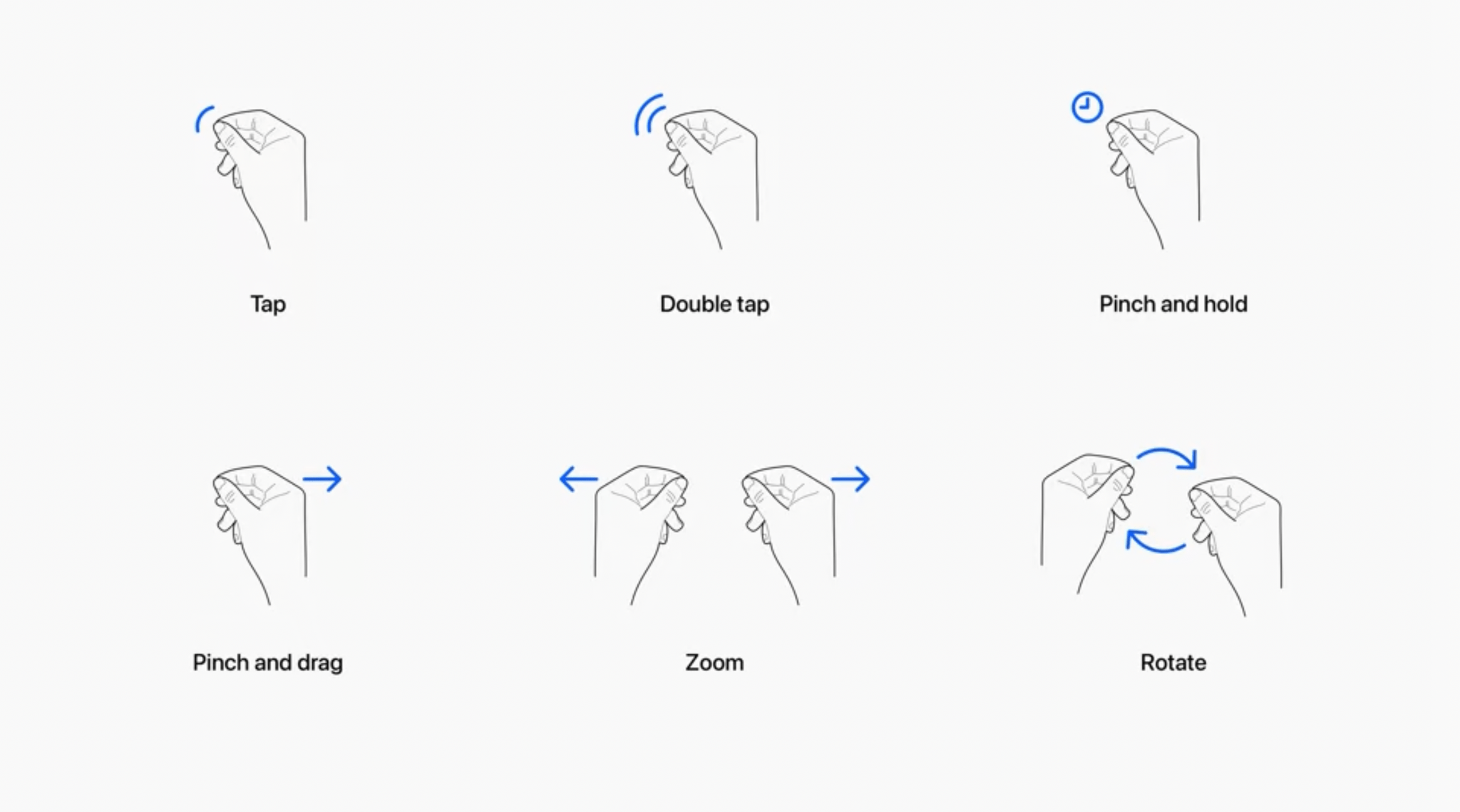

It took me a bit longer to adjust to hand tracking and gesture-based controls. The Vision Pro uses new types of “pinch-tap” and “pinch-hold-drag” gestures to tap and scroll in apps, respectively. The gestures were easy to memorize and their recognition felt as fast as eye tracking. Since Vision Pro has cameras and sensors pointing down, I was able to keep my hands on my lap for most of the demo while lying back on the couch, and gestures were recognized nearly every time.

I only had to repeat a scroll gesture twice, and I’m guessing that’s because I was too relaxed after the mindfulness demo and my hand had exited the device’s downward-facing field of view. In any case: based on what I saw, it’s not like you’ll have to use gestures with your arms outstretched in front of you. (Someone once said that the ergonomics of this aren’t great.) You can just keep them in a natural resting position and gestures will be picked up by visionOS.

In my demo, all these aspects clicked very quickly and I found myself navigating visionOS (sometimes skipping ahead of the demo and what Apple’s people wanted me to do) because everything felt intimately familiar. As someone who’s been reviewing iOS and iPadOS every year for the past decade or so, I knew what I was supposed to do a few minutes into my demo. The Window Bar, which is the element at the bottom of a window that you can use to grab it and move it around in space, is reminiscent of the iOS Home indicator; the apps I tried (Safari, Messages, and Photos) featured layouts reminiscent of their iPad versions; when I was selecting icons on the Home View or toolbar items with my eyes, they animated with a parallax effect similar to the one found on tvOS.

One of the many consistencies between visionOS and Apple’s other operating systems: the window resize control of visionOS is the same one seen in Stage Manager for iPad.

This is the advantage Apple has over its competitors: they can leverage over 15 years of work on iOS to launch a new platform that immediately fits into an established ecosystem of apps, services, user interactions, and developer frameworks.

I only had a few minutes to play around with apps and windows, but the moment I started arranging my workspace in front of me, it felt liberating. I was using apps I already knew, but I wasn’t constrained by a physical screen anymore. So I took a Safari window, made it extra-large, and pinned it to a wall on the left side of the room; then I took Photos, made it smaller, and placed it in front of me; last, I grabbed Messages and placed it above Photos.

This concept isn’t totally new – again, I have a Quest 2 – but what’s fundamentally different with Apple’s take is that I was doing all of that while being aware of my surroundings. And not just somewhat “aware”: I was literally blending digital content with the room I was in.

For this reason, in imagining a future use of Vision Pro in everyday life, I can see the following scenario coming true in 2024: I can just lie on the couch, open a giant Obsidian window in front of me, pin a small Timery one to the bottom left, and maybe leave Ivory by the kitchen table. I could get work done from the couch, all while keeping an eye on my dogs or being available to Silvia since I’m not isolating myself in a closed-off VR environment. And when I’m ready for an espresso break, I could head over to the kitchen for a few minutes without taking my Vision Pro off, catch up on Ivory in the kitchen while the espresso machine is warming up, and walk back to the couch when I’m done.

Until a few months ago, this would have seemed unreal, right? And yet that’s exactly what I experienced, in a limited fashion, in my demo. The fact that Vision Pro is marketed as a spatial computer doesn’t just mean that it can be a fancy entertainment device for immersive TV content; it means it’s a computer that does computer things and its potential should also be considered through the lens of productivity and office/remote work. Sure, a lot of people will be drawn to its entertainment and media capabilities at first; personally, I also saw its future as a machine I could use to be productive with a kind of flexibility I’d never experienced before.

Raise your hand if you’ve ever heard this argument from me before.

Still, there are questions that have been left unanswered for now. I tested Vision Pro in a relatively small, square room inside Apple Park. Will its real-time room-mapping capabilities work just as well for my larger living room? What happens when my dogs decide to, say, walk in front of Safari? (Apple people in the room never walked in front of my windows.) Will I be able to pin a window all the way to the back of my living room, like I did in Apple’s demo room? With the exception of a 3D object in Freeform that popped out of a board, the apps I tried didn’t really have volumetric designs. What kind of experiences will third-party developers build now that, in addition to windows, they can also create 3D and full-space experiences?

I can’t answer these questions now, but if what I saw is any indication, and based on the marketing materials on Apple’s website, I think what I imagined above will be absolutely possible next year.

With a spatial computer, my entire space can become a canvas for apps. As you can guess, I’m pretty excited about that.

Immersion Vs. Presence

At the moment, I own two VR headsets: a Meta Quest 2 and PSVR 2. I can only use them if a) I’m home alone or b) it’s a collaborative situation with friends who came over to test videogames in VR, so we’re taking turns with the headset.

The biggest hurdle of VR headsets has always been, and will likely continue to be unless their designs are rethought completely, their sense of isolation from the outside world. To an extent, that’s by design: maybe you do want to shut yourself off completely from reality. In my experience, however, I’ve always found that to be a platonic ideal more than a practical advantage of VR headsets. Sure, in theory it sounds good that I can fully immerse myself in a videogame without being aware of my surroundings at all; in practice, unless I’m alone, I don’t want to be a jerk to my girlfriend, dogs, or other people around me and become unreachable unless I take off the headset.

In most modern VR headsets, immersion and presence are mutually exclusive aspects of the experience. The opposite is true on the Vision Pro: you can be immersed and present at the same time, but that is only possible thanks to the hardware and engineering efforts that went into the design of this device.

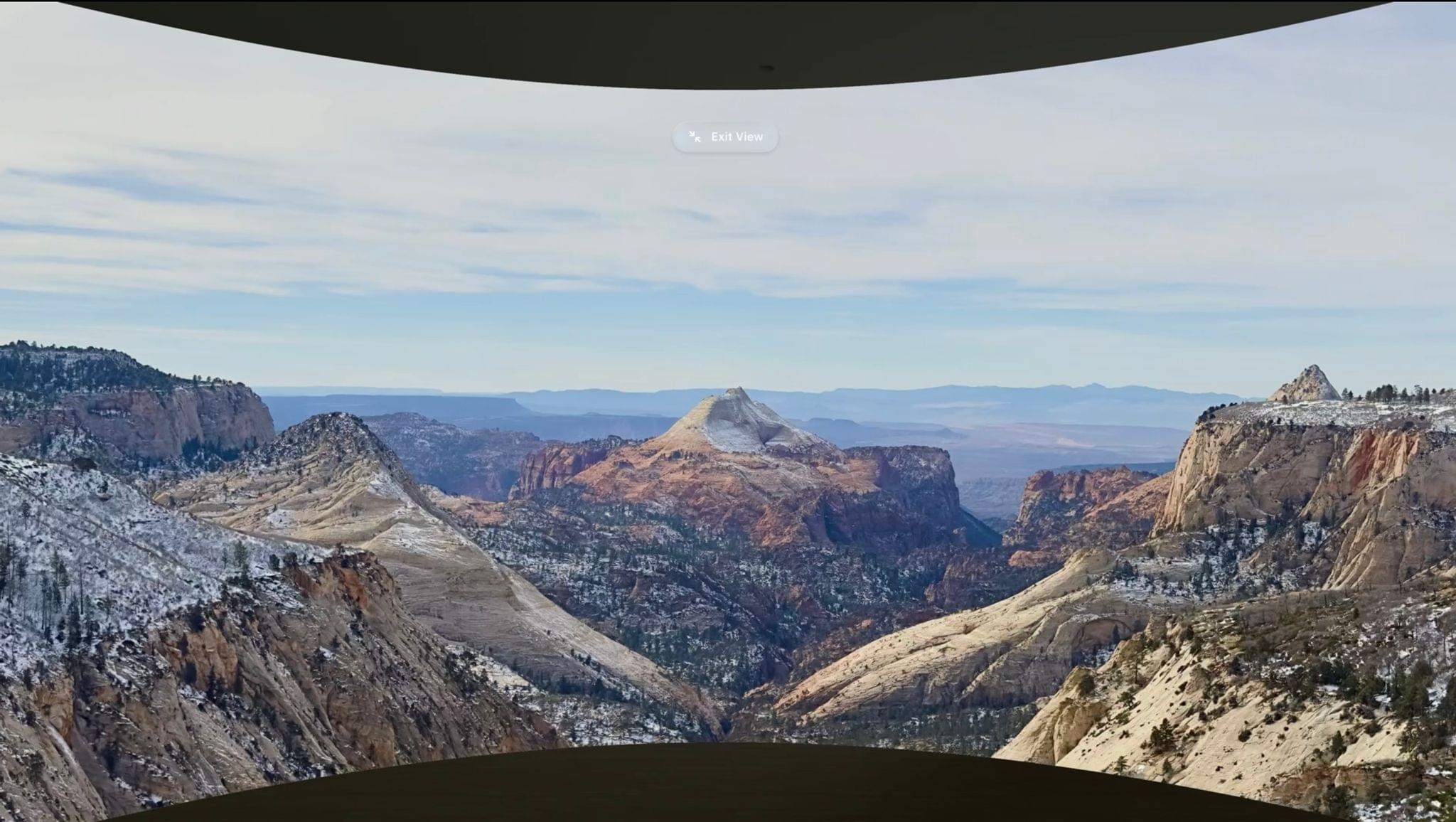

To get a feel for immersion, Apple had me try one of their environments, which is accessed from a tab on the left side of the Home View. The one I (and I assume other members of the media too) tried was Mount Hood. Once I selected it, I was transported to this beautiful location in Oregon that appeared in front of me, in the middle of my field of view.

By default, stepping into an environment doesn’t fully immerse you into the scene – you still see your surroundings on the left, right, and behind you.[5] Then, with a spin of the Digital Crown, I slowly expanded the virtual recreation of Mount Hood until it was all around me. I was in it. I opened the Home View, selected an app, and there I was, doing computer things while sitting in front of a beautiful lake.

And once again, I couldn’t help but wonder: what if I could, say, do my Markdown text editing while immersed in a tropical rainforest? Listen to Music at the top of the Golden Gate Bridge? Open my RSS reader on the Monday morning after WWDC week, but do it in front of the ocean, so I can watch all the hot takes wash up on the shore?

The main point, though, is that even when I get to do that, I won’t be inaccessible to the outside world, which is also something I got to experience during my demo. First, I was told to hold out my hands, and when I did that, I could see them in front of me, surrounded by a shimmering outline. (It’s similar to the outline you get when selecting a subject in the Photos app.) At any point during any of the immersive demos, I could just hold out my hands and, say, see the Apple Watch on my wrist. That was neat.

I was stunned, however, by the Breakthrough feature, which is as obvious in hindsight as it must have been incredibly challenging to build.

When you’re immersed in an environment or any other full-screen space, if someone gets close to you or starts talking, you see them appear in your field of view. They fade in, smoothly and quickly, with a visual effect that is neither creepy nor disconcerting. It just feels natural. And once we tried it a couple times during the demo, it was clear to me that Breakthrough is one of those classic Apple “ah-ha” features that will make other headsets feel broken without it. Of course somebody should be able to interrupt me and of course I should be able to see them and talk to them without taking my Vision Pro off.

The “content-based” experiences I had during the demo were also impressive. I watched two minutes of Avatar: The Way of Water in 3D, and all I can say is that I’m never going to watch a 3D movie in a theater again once I have a Vision Pro.

Why would I? You have to wear lousy plastic glasses, sit in an uncomfortable chair, and all you get is a grainy image with washed out colors. On the Vision Pro, the image was crystal clear and spatial audio sounded good with the device’s built-in speakers. The 3D effect was believable, and it just made the two-minute clip-watching experience feel more premium than sitting in a theater surrounded by loud-chewing people ever was. I was even able to switch the scene to a virtual movie theater, where I could watch the movie alone from the comfort of a couch. I get the appeal of going to the movies with friends (which I like to do and will continue to do), but for “technical” creations such as Avatar, I’d prefer the high-fidelity experience of a Vision Pro.

I then watched a montage of clips recorded with Apple Immersive Video, which consists of 180-degree footage recorded in 8K. The best way I can describe these is that they were like a video version of the panoramas I mentioned above. Imagine a panoramic video that expands in front and around you, with fantastic display quality and spatial audio. The videos were so large and expansive, I felt like I was “in” them several times, or at least very close to the action.

One moment I was flying over the ocean, the next I was watching a group of scientific researchers (I think?) in a jungle care for a rhino. As one of the women in the group started scratching the rhino’s nose, I could hear the sound of the rhino’s thick skin in my left ear since my head was turned in the opposite direction. Later in the video, another baby rhino came closer to me, and I instinctively went “aww” and reached out with my hand because I wanted to scratch its nose this time. The montage ended with a very intense, front-facing shot of a woman standing on a tightrope between mountains looking straight into my “eyes”. In that moment I looked down, afraid of the void, then back up again at her gaze, and the video cut to black.

Based on the two different video clips I saw, I have no doubt about the potential for entertainment and educational content on the Vision Pro. The latter was also highlighted by another 3D demo I experienced, in which a portal into prehistoric times opened at floor level in front of me and a butterfly came out flying. I was told to hold out my finger, and the butterfly landed on it, which was some nice stagecraft on Apple’s part. Later, a dinosaur (a T-Rex?) approached the portal and half of its body slowly came out of it, looking around the room. At that point, I was told I could stand and get closer to it, so I grabbed the Vision Pro’s battery pack with my left hand and, since I was in a mixed reality environment where I could see my surroundings, naturally walked around the coffee table in front of me, past the Apple people in the room, and stood in front of the dinosaur.

As I was staring into the dinosaur’s eyes, it felt like it was there. The quality of the 3D creature and textures was outstanding. I held out my right hand again, and when it made a sudden movement, I flinched. I can’t even begin to imagine what museums, documentary makers, and apps for historical studies could do with this technology in the future – especially when you consider how, on top of the 3D performance I just mentioned, you still have a computer with spatial audio and multitasking capabilities powering an experience that may or may not isolate you from the real world.

On a similar note, as someone who’s been working at home, remotely, by myself well before the pandemic (I’ve been doing this since 2009), I was fascinated by the brief demo I had of personas, which is Apple’s name for photorealistic 3D characters of other people you have conversations with on FaceTime.

Based on what I’ve heard from other press members last week, my understanding is that the quality of personas has been fairly hit or miss, most likely due to connection issues at Apple Park or the early nature of the product. Regardless of other people’s thoughts, my persona demo was flawless. I was connected in a couple seconds to another Apple employee somewhere in Apple Park, and there they were: a reconstructed 3D head of someone else floating in my field of view as we started collaborating on a Freeform board with 3D objects that were popping out of the board.

This feature is hard to describe in an article because, let’s face it, it sounds kind of creepy when I put it like that. Not knowing the real face of the Apple employee who was chatting with me, I have no frame of reference as to whether their persona was accurate or not. (Of course, I couldn’t see whether I also had a persona on the other side of the call.) But what I can say is that it didn’t feel uncanny to me. Their facial expressions seemed believable, their eyes were looking around the room and looked fine, and I was able to “grab” the FaceTime tile and put it somewhere else next to me. Once Vision Pro ships and an improved version of human-like spatial personas becomes available, would I consider this technology instead of regular 2D FaceTime video calls? Yes. Did I also prefer it to Meta’s Wii-like cartoon characters for remote work sessions? Absolutely.

Lastly, I believe the Vision Pro’s immersive capabilities have a real shot at rejuvenating the market of mindfulness and meditation apps. In a 1-minute experience I had during my Vision Pro demo, I tested a version of Apple’s Mindfulness app for visionOS. As soon as I opened it, a sphere made of colored, translucent leaves appeared in front of me. As the sphere started pulsating and a guided voice told me to focus on my breath, the leaves started spreading around until the whole room grew dark and I was completely surrounded by colors.

I have to be honest: it felt nice. For just a few seconds, it was just me, soothing music, and a relaxing 3D visualization that gently engulfed me until I returned to the real world, ready to continue working my way through the demo. Once developers of mental health-focused apps and services start crafting more personalized and complex experiences for visionOS, I think we’ll be able to appreciate the dualistic nature of Vision Pro – it’s immersive only as long as you want it to be – even more.

Miscellaneous Tidbits and Open Questions

Below, I’ve collected a series of miscellaneous notes and tidbits from my Vision Pro demo.

The setup process took a while; will the final experience be like this? To prepare for my Vision Pro demo, I first had to use an iPhone with a Face ID-like onboarding process that scanned the shape of my face and ears. Then, since I wear prescription glasses, I went into what looked like an optometrist’s office and my glasses were scanned by a machine to see what kind of custom attachment lenses I would need on the Vision Pro. In total, this process took about five minutes, and I had to wait 15 more before my demo began. Then, once I put on the headset and pressed the Digital Crown, I had to calibrate eye tracking by looking at a moving dot in a circle of more colored dots.

Will the final onboarding and setup process be similar to this? What about trying to test a Vision Pro inside Apple Stores? Will you be able to perform some of these initial steps on your own at home, before ordering a Vision Pro online? These questions have been left unanswered for now. All I can say is that, after this entire process, I was able to use Vision Pro without my glasses and I could see everything clearly – a first for me with VR headsets.

I used a top head strap and didn’t find the Vision Pro heavy. This isn’t included in the marketing materials for Vision Pro, likely because the design isn’t final: when I put on the Vision Pro, I adjusted its snugness with a wheel on the right side, but then I also attached a top head strap. From what I saw, this essentially looked and felt like a nylon Sport Loop band for Apple Watch, down to the velcro-like attachment mechanism.

Other people’s opinions on this may differ, but I personally didn’t find the Vision Pro too heavy or unwieldy. It felt right. The light seal (the fabric component that sits in between your face and the unit) was letting a tiny bit of light in at first from under my eyes, but then I pushed the headset more firmly against my face, and that fixed it. Having tried a bunch of VR headsets over the years, I can say that the Vision Pro was, by far, the most comfortable and premium one I’ve ever tried. The curved glass in the front looks exquisitely Apple-y and the whole thing has a very “this is an object from the future” vibe.

I didn’t mind the battery pack. I sort of expected this would be the case and, yes: having an external battery pack was a non-issue in my experience. When I was sitting down, the battery was placed somewhere around my left hip and I completely ignored it. When I walked up to the dinosaur, I grabbed the battery and noticed it was warm to the touch. Apple wouldn’t say whether battery packs for Vision Pro will be hot-swappable as you’re using the device, but I hope that will be the case once the product ships. As someone who frequently works in 3-4 hour focused writing sessions, having to turn off the device to replace the battery pack wouldn’t be an optimal experience.

Apparently, the butterfly didn’t land on everybody’s finger. I heard from a little birdie that there’s a bit of an internal debate inside Apple in terms of who managed to get the butterfly to land on their hand or not. Mine landed on my right index finger and flapped its wings. Looking closer at it, I could see a tiny gap between its legs and the surface area of my finger, which I assume means 3D collisions may still need a bit of work.

Safari supports rubber-banding. In case there was any doubt: scrolling in Safari (and I have to assume everywhere else) supports the smooth, elastic scrolling effect Apple is known for on each of its operating systems. I grabbed a page, scrolled quickly to the bottom with the dedicated pinch gesture, and the page accelerated until it gradually stopped. That felt great.

The speakers were good, but not AirPods Pro quality. I was impressed by the spatial audio performance of the Vision Pro’s built-in speakers, but if I were to watch a full movie or listen to music, I’d still go for the superior sound quality of AirPods Pro. That’s not surprising, but it was good to confirm there will be at least a built-in sound output option on the Vision Pro that will not require a separate purchase of AirPods Pro.

Frontiers

I’ve been writing MacStories since 2009, and over the past 14 years, I’ve been able to attend my fair share of Apple keynotes and product events. If there’s anything I’ve learned from my experience, it’s this: it’s a rare thing to go to an Apple event and witness the future of your job in front of you – tangible, but not quite within your grasp yet.

I’m fully aware of the fact that, in this very moment, many of you reading this are rolling your eyes and scoffing at my excitement. It’s too expensive. It’s just a new toy. It’s creepy. No one will ever use it. A laptop is better. I have a TV already. I understand your skepticism. And I’m here to tell you that, at this point, I think I’ve been around long enough to have a pretty good sense for when things are about to change.

I’m convinced that Vision Pro and the visionOS platform are a watershed moment in the arc of personal computing. After trying it, I came away reflecting that we’ll eventually think of software before spatial computing, and after it. For better or worse – we can’t know if this platform will be successful yet – Apple created a clear demarcation between the era of looking at a computer and looking at the world as the computer. Whether their plan succeeds or not, we’ll remember this moment in the history of the company.

In the business of covering technology for a living, it’s easy – whether for personal taste or financial incentives – to prefer a certain kind of jaded, artificially-objective reporting that constantly aims to find faults in exciting products. In my case, I’m not saying that criticizing Apple is a mistake. To name a recent example, I spent a year calling out the flaws of Stage Manager for iPad. What I’m saying is that I hold Apple to a high standard, and I love my job because every once in a while, the company redefines what a high standard even means.

You see, my philosophy in life is pretty simple. When I dislike something, I loudly – but, I hope, always elegantly and constructively – make my case against it. But when I love something, and more specifically, when a piece of technology – be it software or hardware – captures my imagination and upends my expectations in a way I didn’t think possible, then I really love it. When I see something wonderful, I want to point at it. To each their own style; personally, I don’t think I’d still do what I do after 14 years without this approach.

What’s the point of writing about something for so long if you don’t love some of it?

My Vision Pro demo was the best tech demo I ever experienced. It was a glimpse of a future in which the computer – this machine that can make me productive, entertained, connected to my friends, nostalgic, focused, and everything in between – isn’t limited by a “screen” anymore.

The Vision Pro I tried felt like the distillation of every single OS feature, design change, and platform enhancement I’ve covered in my annual iOS and iPadOS reviews so far. I loved it, and I exited the demo room already longing for it.

Someone asked me last week: would you use a Vision Pro instead of your iPad Pro?

I don’t know if the Vision Pro is meant to replace other computers in my life yet. But I can’t help but feel that it’s all been leading up to this.

1. Because, let’s face it, capturing spatial photos and videos only through a Vision Pro seems a bit silly.

2. Which is bigger than the one seen on Apple Watch and more similar to an AirPods Max one.

3. Huge accessibility-related asterisk here, of course. What you see “with your own eyes” varies wildly from person to person. I, for one, need to wear glasses to see clearly. I’m not well informed enough at the moment to know more about the Accessibility features of Vision Pro, but I want to read more about the topic now that I’m back home.

4. The Quest 2 has a resolution of 1832×1920 pixels per-eye as opposed to the Vision Pro’s 4K per-eye.

5. I have to believe that the chairs the two Apple people in the room with me were sitting on had been carefully placed so they would be exactly in the non-covered area of a newly-opened environment.