DeepSeek released an updated version of their popular R1 reasoning model (version 0528) with – according to the company – increased benchmark performance, reduced hallucinations, and native support for function calling and JSON output. Early tests from Artificial Analysis report a nice bump in performance, putting it behind OpenAI’s o3 and o4-mini-high in their Intelligence Index benchmarks. The model is available in the official DeepSeek API, and open weights have been distributed on Hugging Face. I downloaded different quantized versions of the full model on my M3 Ultra Mac Studio, and here are some notes on how it went.


Testing DeepSeek R1-0528 on the M3 Ultra Mac Studio and Installing Local GGUF Models with Ollama on macOS

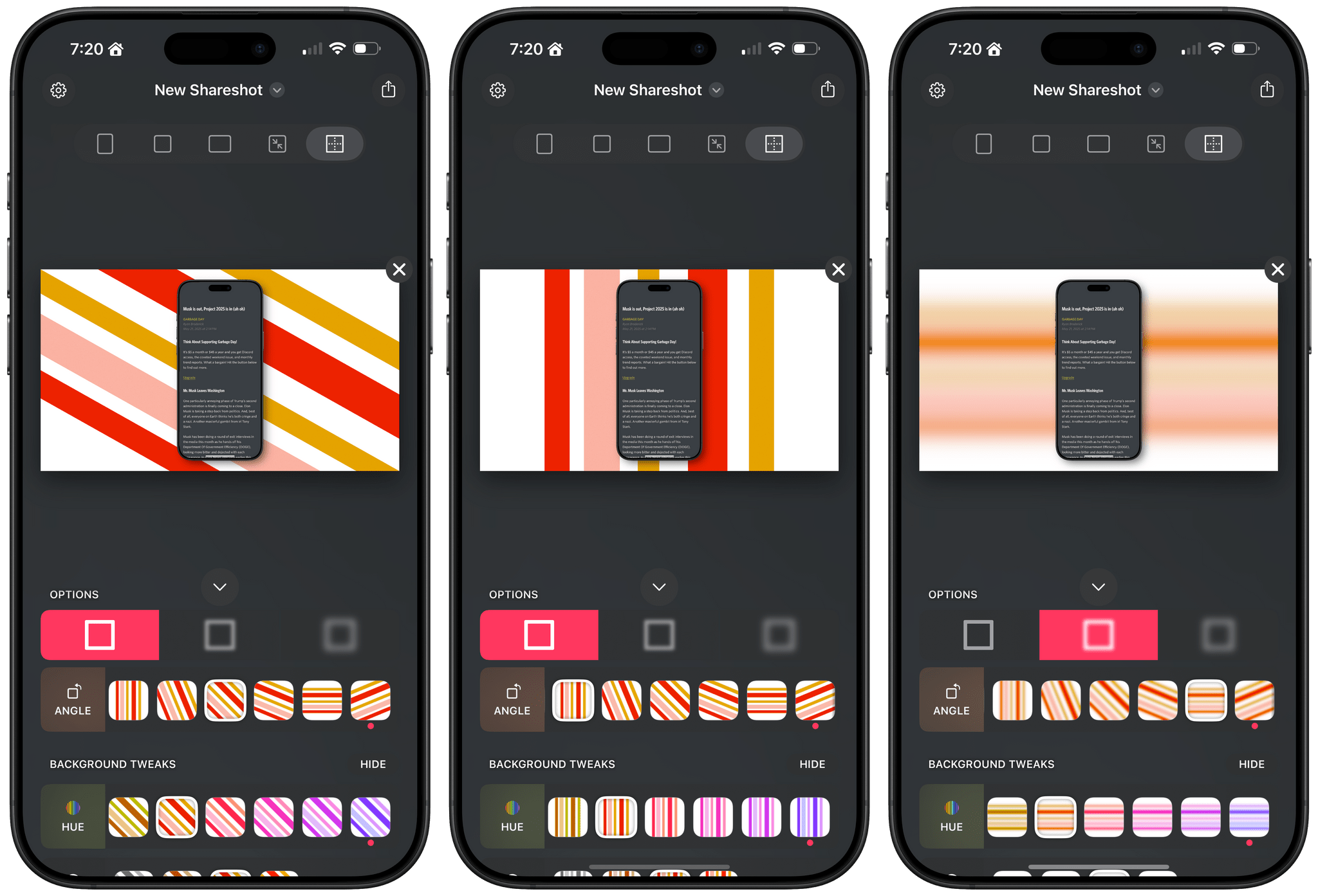

Shareshot 1.3: Greater Image Flexibility, New Backgrounds, and Extended Shortcuts Support

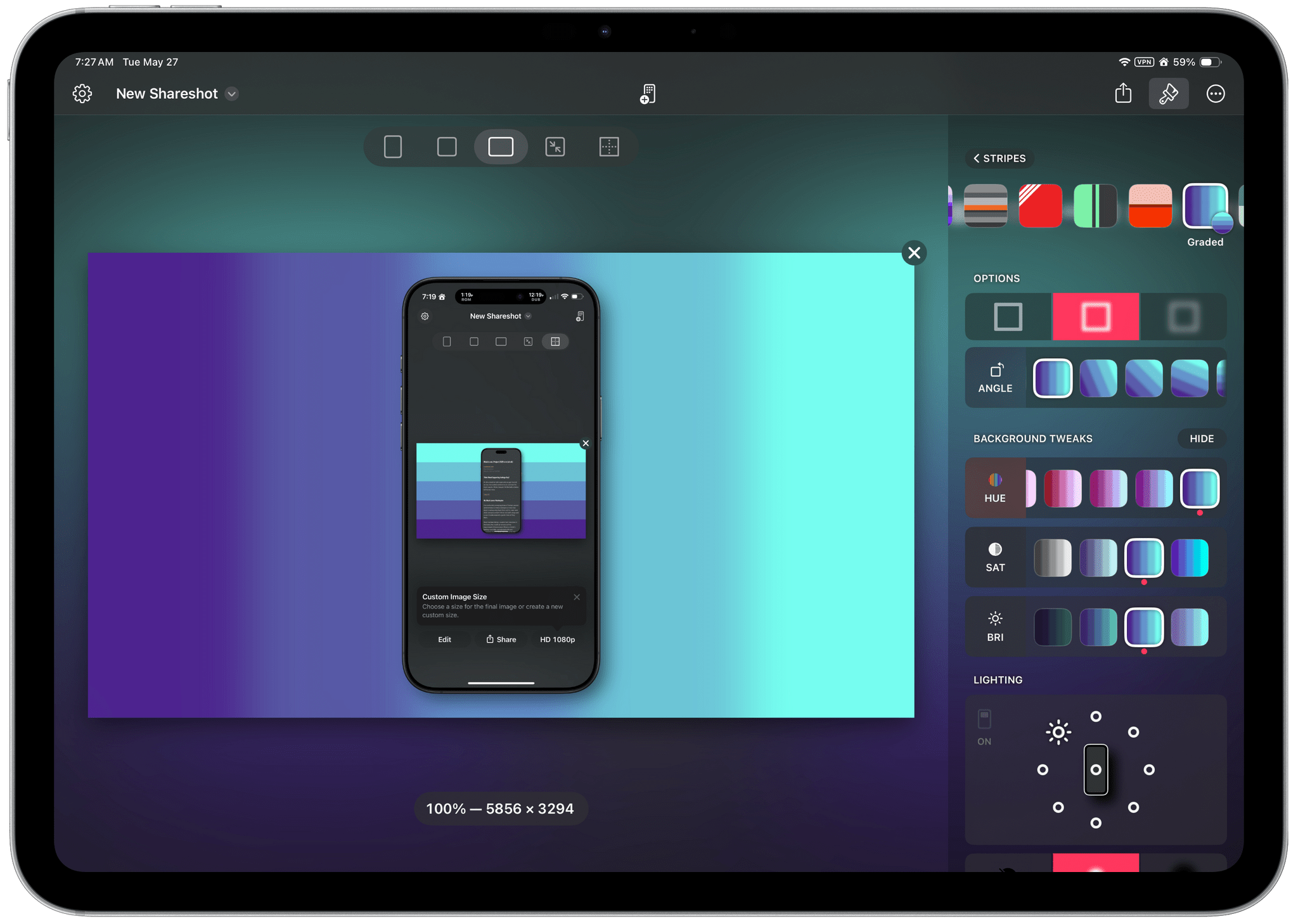

If you have a screenshot you need to frame, Shareshot is one of your best bets. That’s because it makes it so hard to create an image that looks bad. The app, which is available for the iPhone, iPad, Mac, and Vision Pro, has a lot of options for tweaking the appearance of your framed screenshot, so your final image won’t have a cookie-cutter look. However, there are also just enough constraints to prevent you from creating something truly awful.

You can check out my original review and coverage on Club MacStories for the details on version 1.0 and subsequent releases, but today’s focus is on version 1.3, which covers three areas:

- Increased image size flexibility

- New backgrounds

- Updated and extended Shortcuts actions

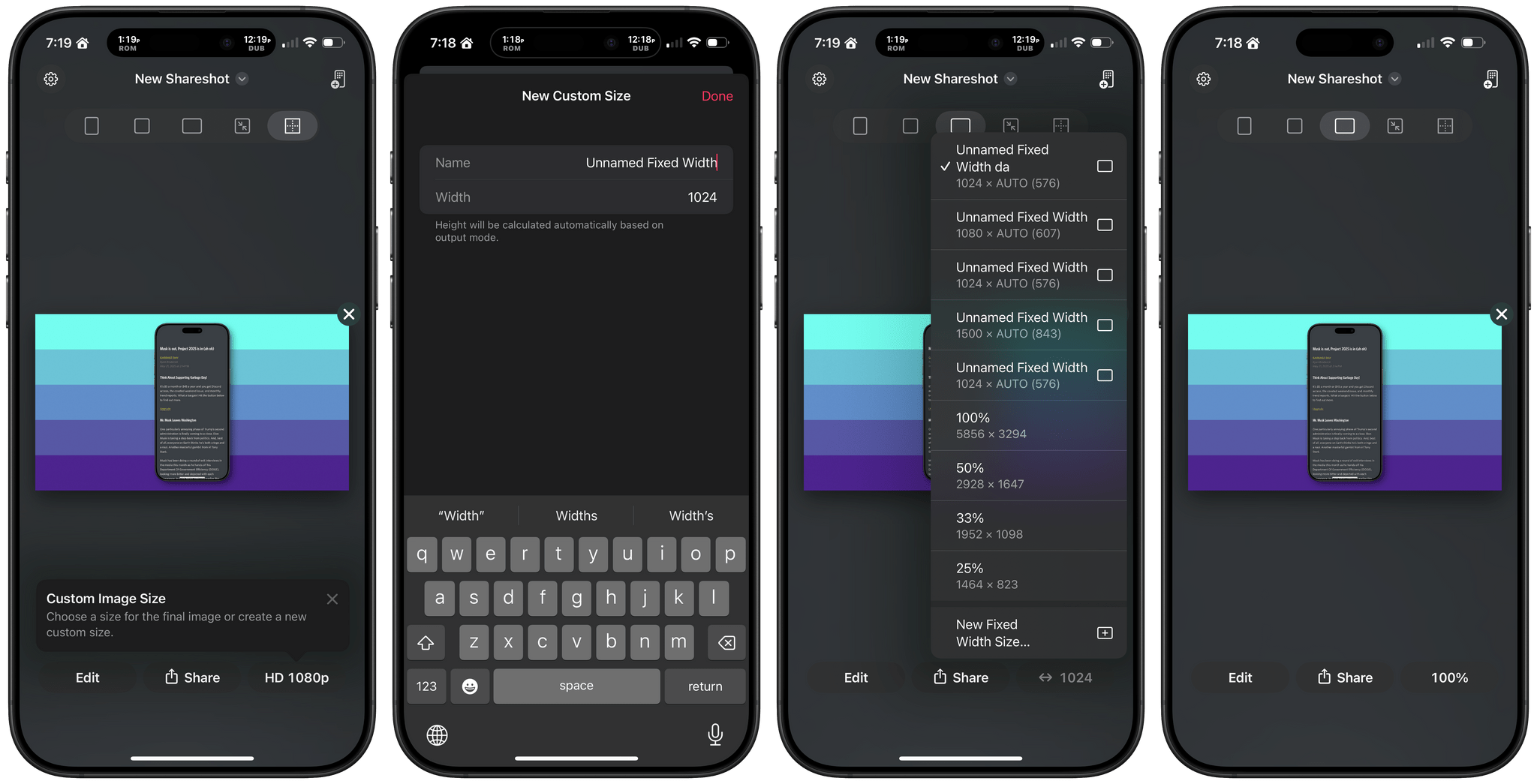

With version 1.3, Shareshot now lets you pick any output size you’d like. The app then frames your screenshot and fits it in the image size you specify. If you’re doing design work, getting the exact-size image you want out of the app is a big win because it means you won’t need to make adjustments later that could impair its fidelity.

A related change is the ability to specify a fixed width for the image that Shareshot outputs. That means you can pick the aspect ratio you want, such as square or 16:9, then specify a fixed width, and Shareshot will take care of automatically adjusting the height of the image to preserve the aspect ratio you chose. This feature is perfect if you publish to the web and the tools you use are optimized for a certain image width. Using anything wider just means you’re hosting a file that’s bigger than necessary, potentially slowing down your website and resulting in unnecessary bandwidth costs.
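The height calculation is a simple proportion: at a fixed width, height = width × (ratio height ÷ ratio width). Here’s a minimal Python illustration of that arithmetic. It isn’t Shareshot’s code, and the function name and the round-half-up rounding are assumptions.

```python
from fractions import Fraction


def height_for_fixed_width(width: int, ratio_w: int, ratio_h: int) -> int:
    """Return the output height that preserves ratio_w:ratio_h at a fixed width.

    Hypothetical helper for illustration; rounding half up is an assumption.
    """
    if width <= 0 or ratio_w <= 0 or ratio_h <= 0:
        raise ValueError("width and ratio components must be positive")
    # height / width == ratio_h / ratio_w, so height = width * ratio_h / ratio_w
    exact = Fraction(width * ratio_h, ratio_w)
    # Round to the nearest whole pixel, with .5 rounding up
    return int(exact + Fraction(1, 2))


print(height_for_fixed_width(1600, 16, 9))  # 900
print(height_for_fixed_width(1600, 1, 1))   # 1600 (square)
print(height_for_fixed_width(1000, 16, 9))  # 563 (562.5 rounded up)
```

Using exact fractions avoids floating-point surprises when the division lands on exactly half a pixel.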

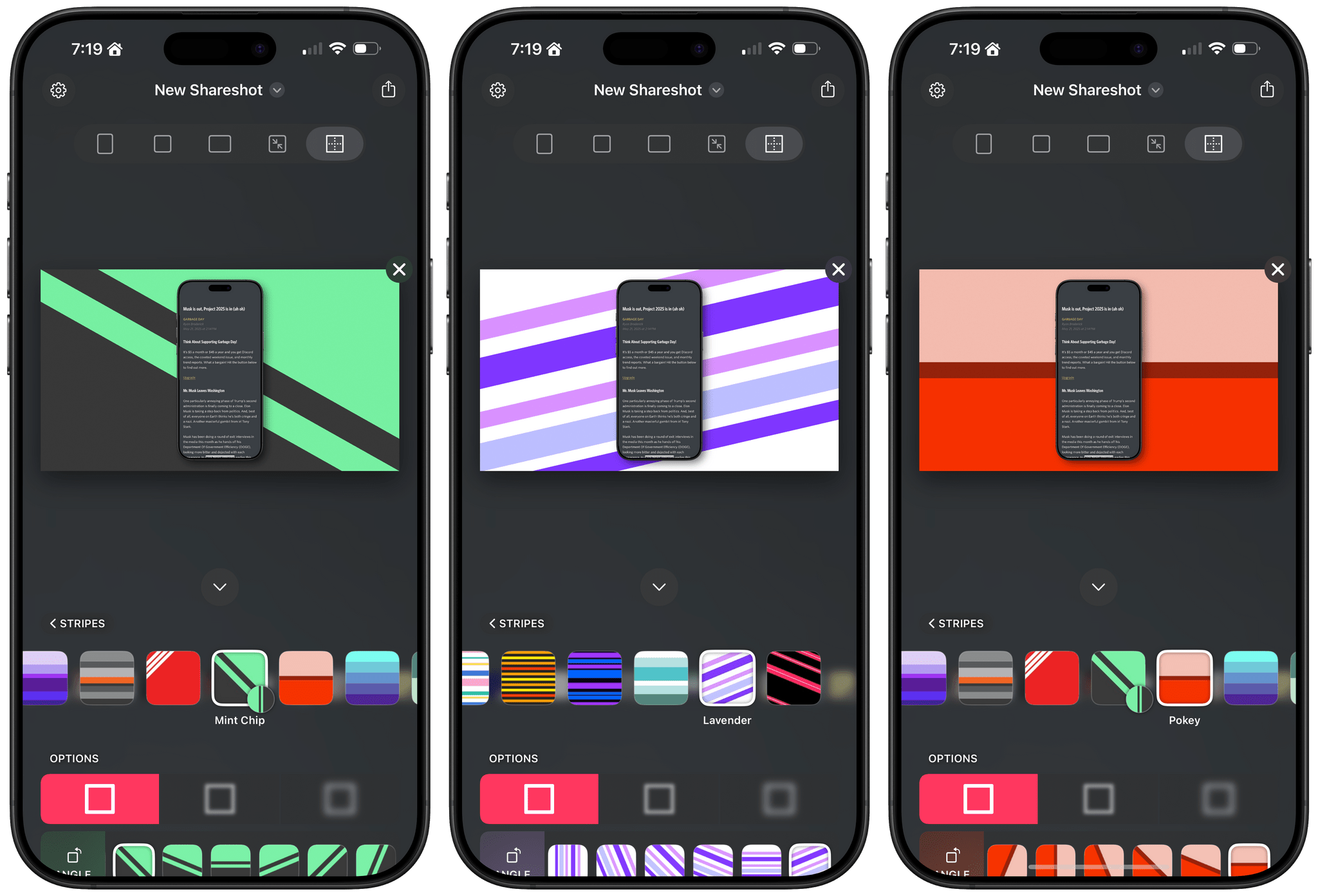

Shareshot has two new categories of backgrounds too: Solidarity and Stripes. Solidarity has two options styled after the Ukrainian and Palestinian flags, and Stripes includes designs based on LGBTQ+ colors and other color combinations in a variety of styles. Both new categories let you adjust several parameters, including the angle, color, saturation, brightness, and blur of the stripes.

Finally, Shareshot has revamped its Shortcuts actions to take advantage of App Intents, giving users control over more parameters of images generated using Shortcuts and preparing the app for Apple’s promised Smart Siri in the future. The changes add:

- Support for outputting custom-sized images,

- A scale option for fixed-width and custom-sized images, and

- New parameters for angling and blurring backgrounds.

The progress Shareshot has made since version 1.0 is impressive. The app has grown substantially to offer a much wider set of backgrounds, options, and flexibility without compromising its excellent design, which garnered it a MacStories Selects Award last year. I’m still eager to see multiple screenshot support added, a feature I know is on the roadmap, but that’s more a wish than a complaint; Shareshot is a fantastic app that just keeps getting better.

Shareshot 1.3 is free to download on the App Store. Some of its features require a $1.99/month or $14.99/year subscription.

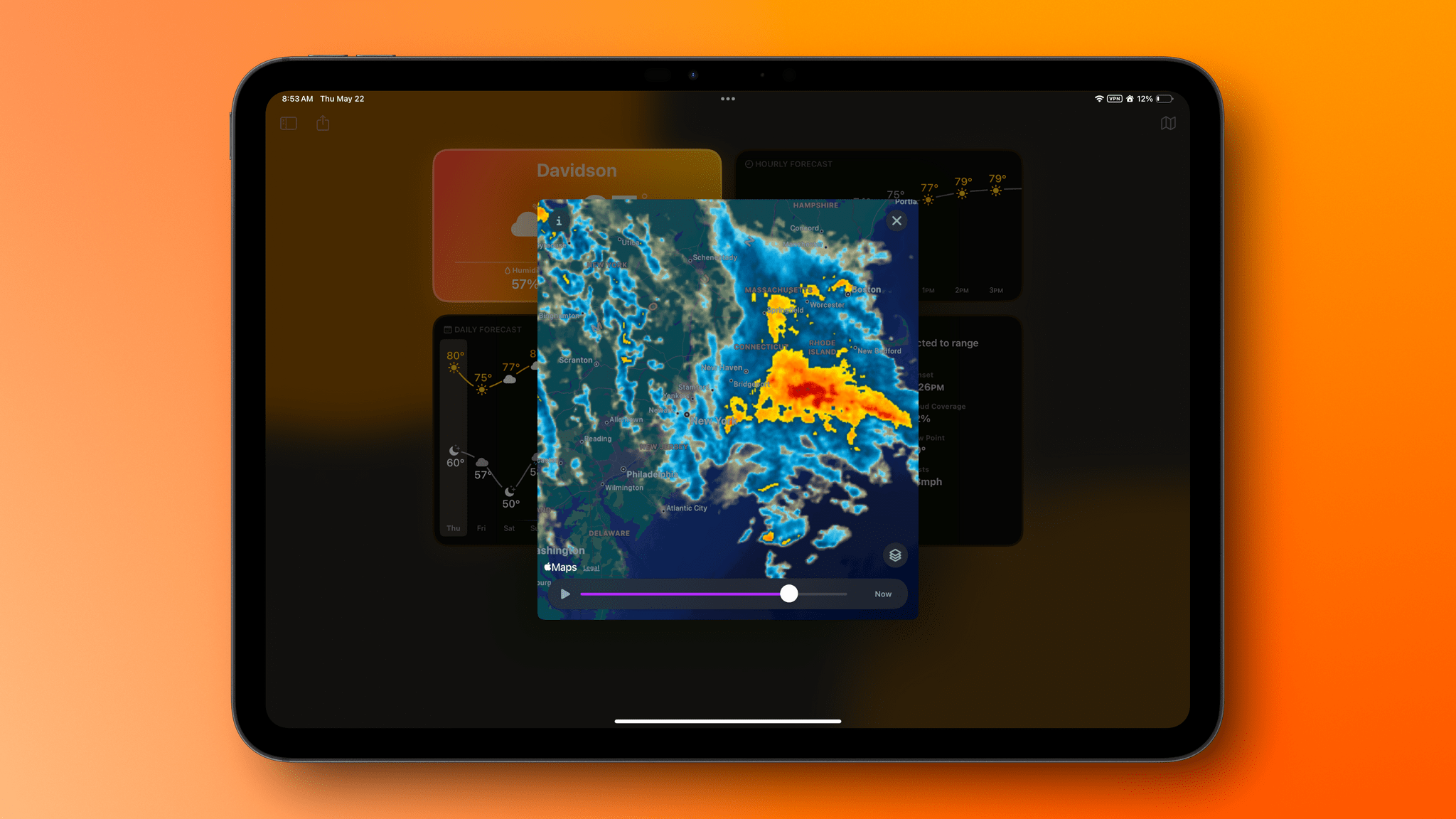

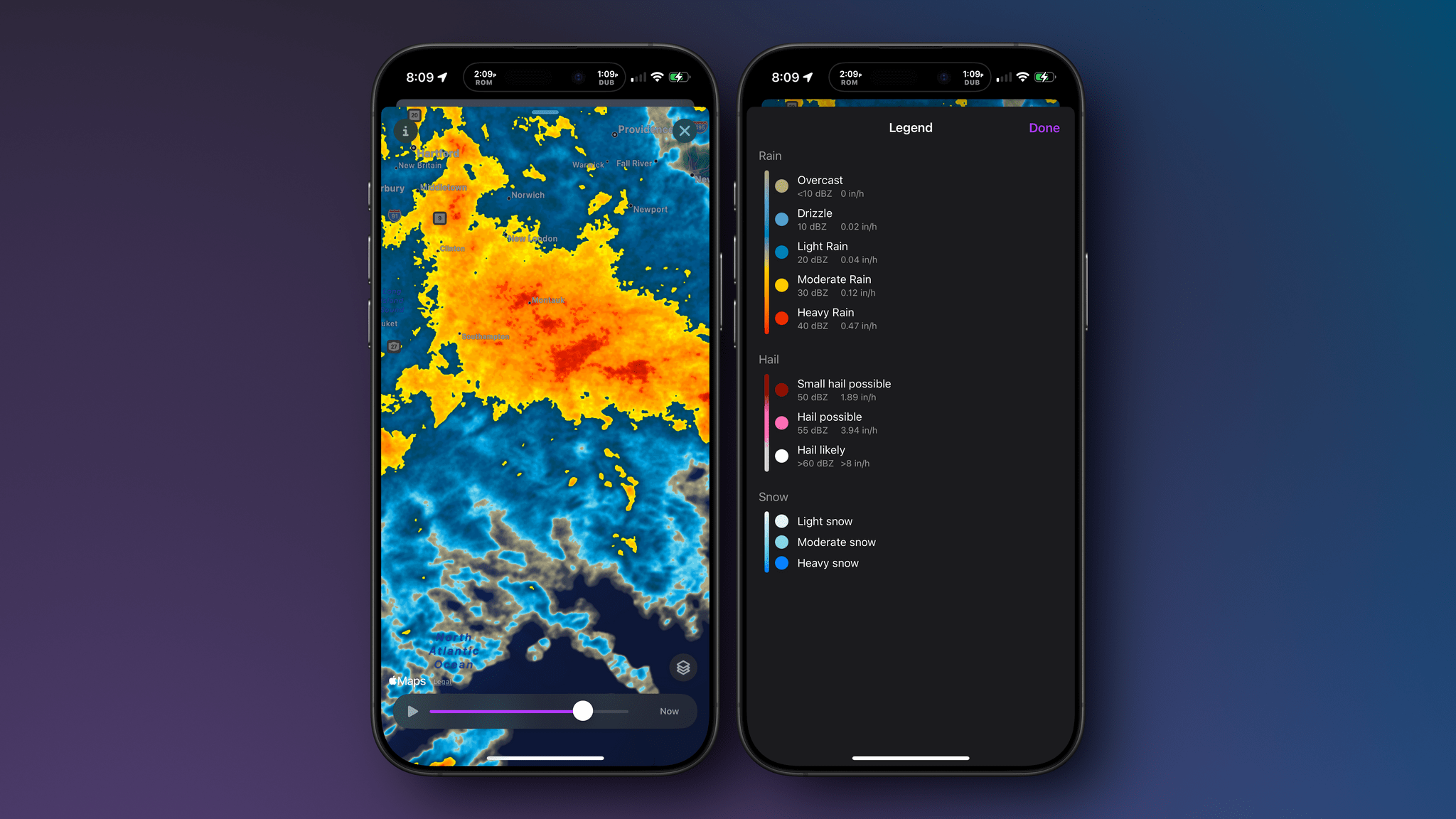

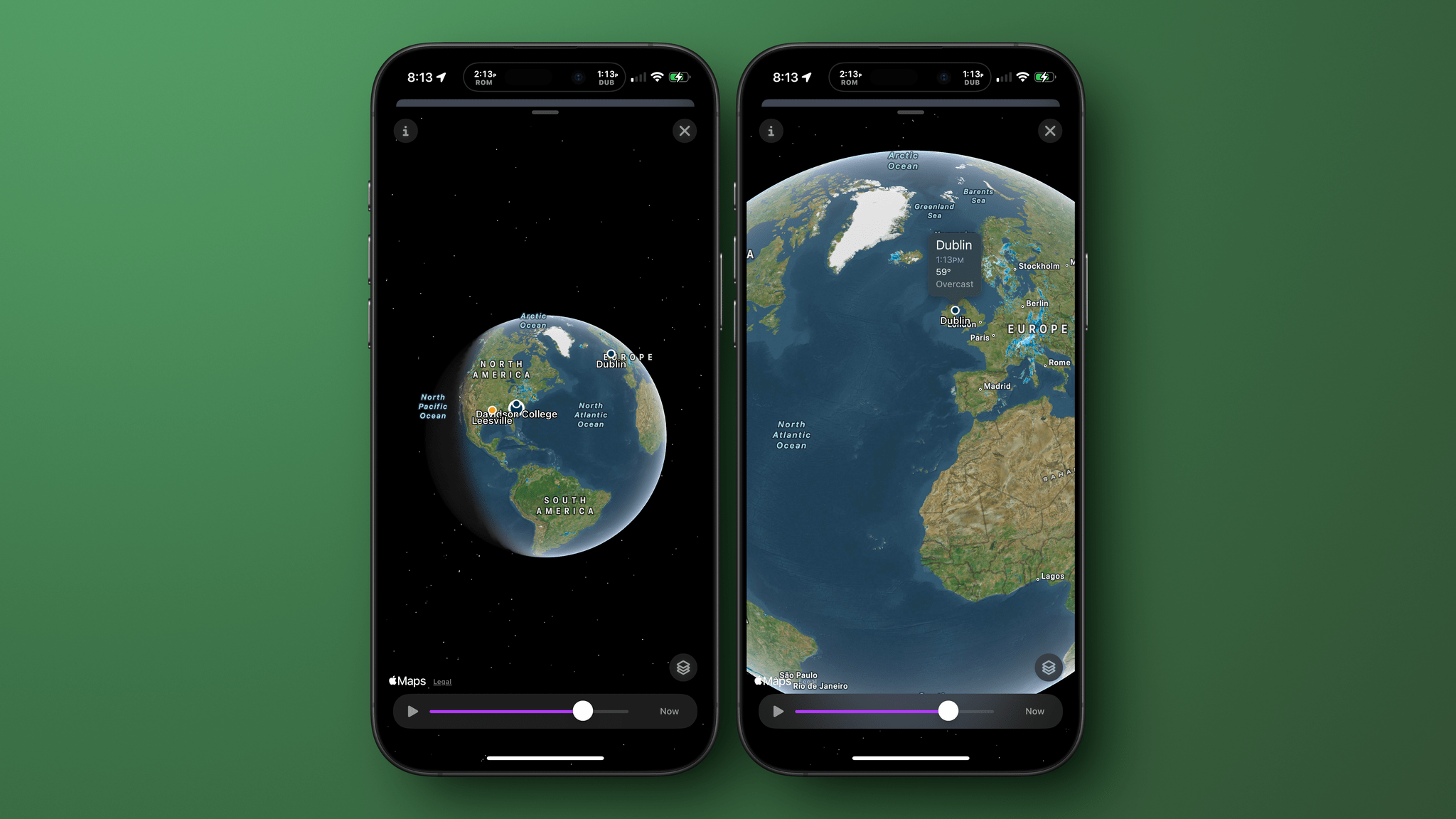

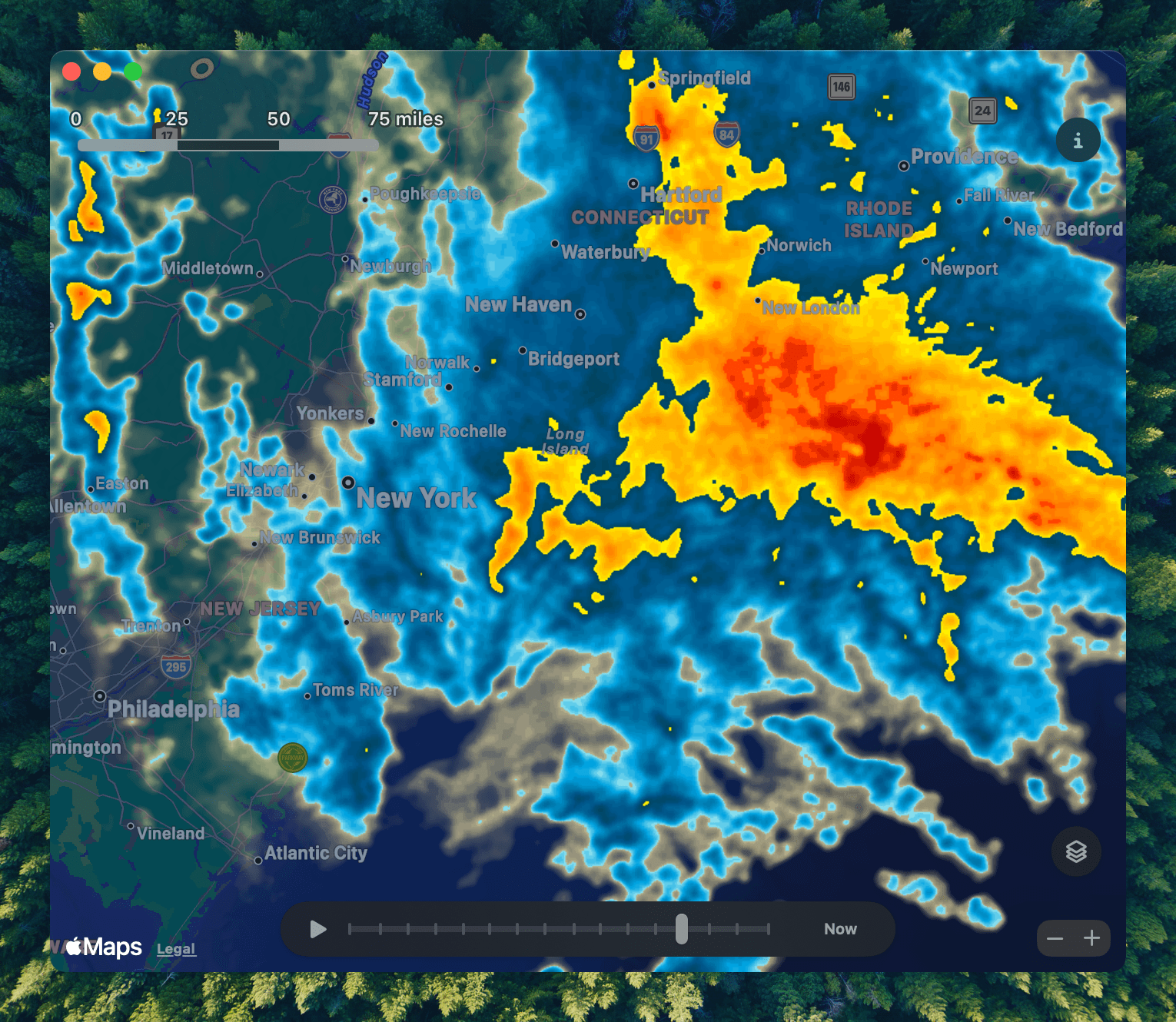

Notes on Mercury Weather’s New Radar Maps Feature

Since covering Mercury Weather 2.0 and its launch on the Vision Pro here on MacStories, I’ve been keeping up with the weather app on Club MacStories. It’s one of my favorite Mac menu bar apps, it has held a spot on my default Apple Watch face since its launch, and last fall, it added severe weather notifications.

I love the app’s design and focus as much today as I did when I wrote about its debut in 2023. Today, though, Mercury Weather is a more well-rounded app than ever before. Through regular updates, the app has filled in a lot of the holes in its feature set that may have turned off some users two years ago.

Today, Mercury Weather adds weather radar maps, which, along with the severe weather notifications added late last year, were among the features I missed most from other weather apps. It’s a welcome addition that means the next time a storm is bearing down on my neighborhood, I won’t have to switch to a different app to see what’s coming my way.

Radar maps are available on the iPhone, iPad, and Mac versions of Mercury Weather; they offer a couple of different map styles and a legend that explains what each color on the map means. If you zoom out, you can get a global view of Earth with your favorite locations noted on the map. Tap one, and you’ll get the current conditions for that spot. Mercury Weather already had an extensive set of widgets for the iPhone, iPad, and Mac, but this update adds small, medium, and large widgets for the radar map, too.

With a long list of updates since launch, Mercury Weather is worth another look if you passed on it before because it was missing features you wanted. The app is available on the App Store as a free download. Certain features require a subscription or a lifetime unlock, both available as in-app purchases.

Notes on Early Mac Studio AI Benchmarks with Qwen3-235B-A22B and Qwen2.5-VL-72B

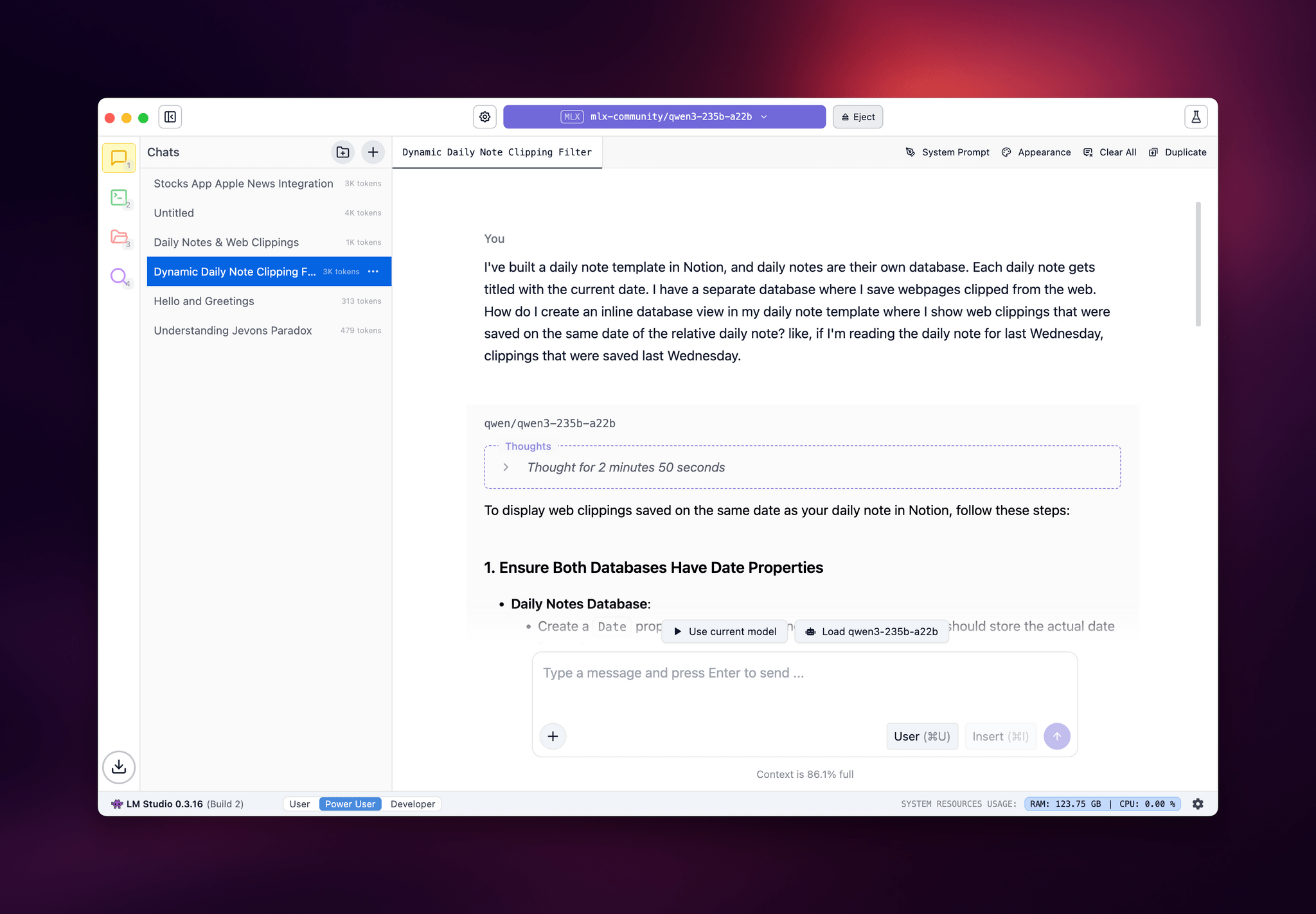

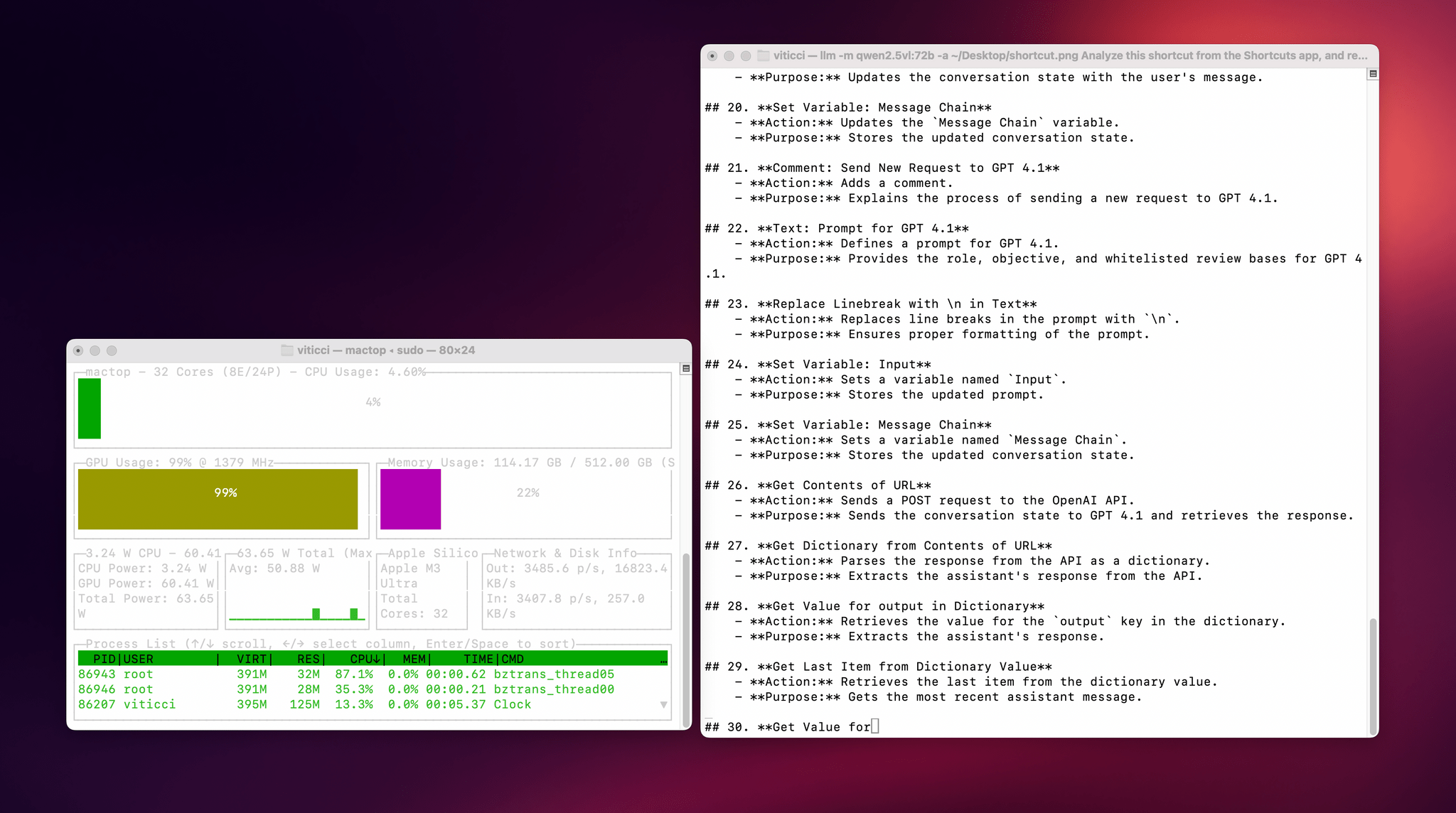

I received a top-of-the-line Mac Studio (M3 Ultra, 512 GB of RAM, 8 TB of storage) on loan from Apple last week, and I thought I’d use this opportunity to revive something I’ve been mulling over for some time: more short-form blogging on MacStories in the form of brief “notes” with a dedicated Notes category on the site. Expect more of these “low-pressure”, quick posts in the future.

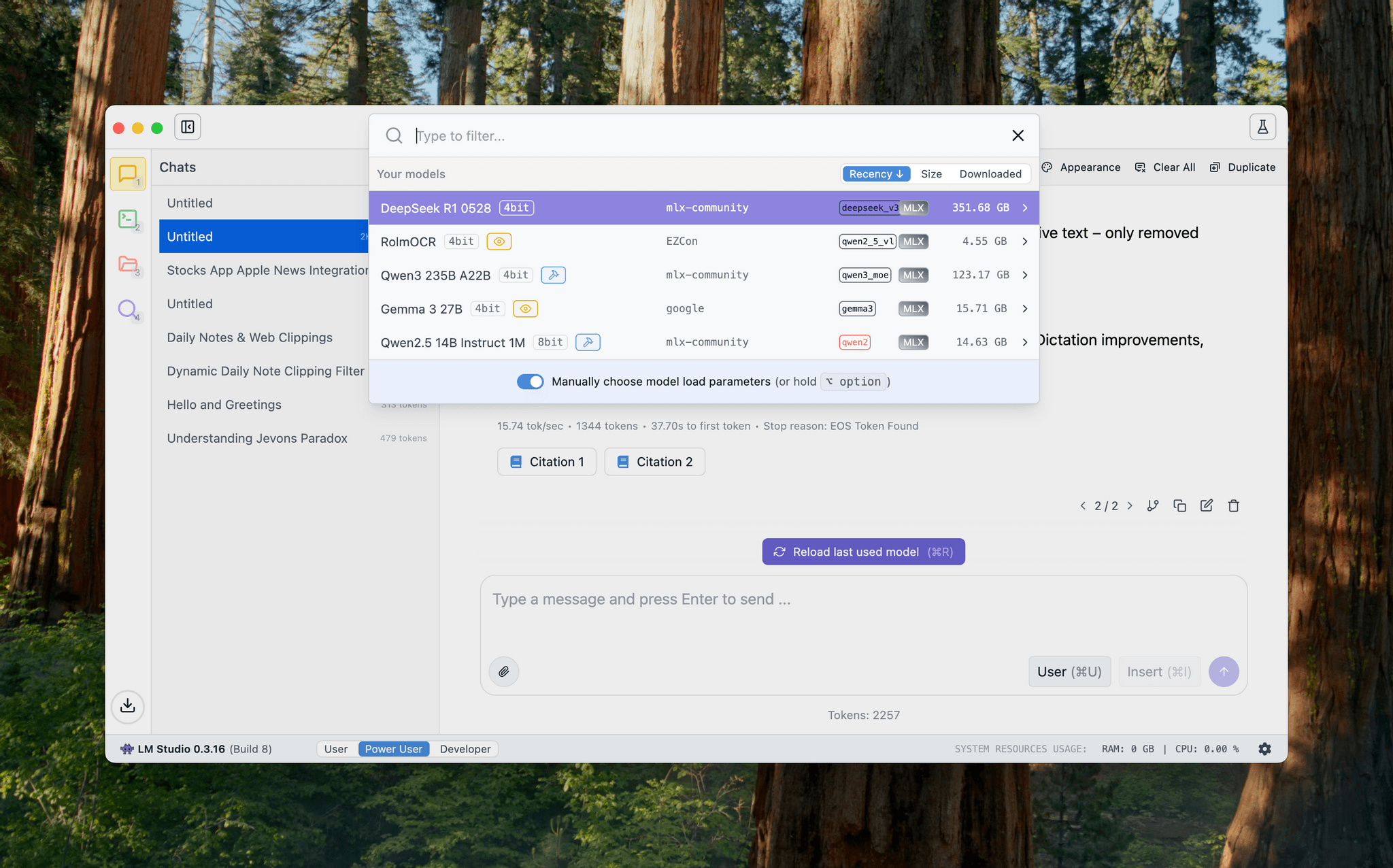

I’ve been sent this Mac Studio as part of my ongoing experiments with assistive AI and automation, and one of the things I plan to do over the coming weeks and months is play around with local LLMs that tap into the power of Apple Silicon and the incredible performance headroom afforded by the M3 Ultra and this computer’s specs. I have a lot to learn when it comes to local AI (my shortcuts and experiments so far have focused on cloud models and the Shortcuts app combined with the LLM CLI), but since I had to start somewhere, I downloaded LM Studio and Ollama, installed the llm-ollama plugin, and began experimenting with open-weights models (served from Hugging Face as well as the Ollama library) in both the GGUF format and the format used by Apple’s own MLX framework.

I posted some of these early tests on Bluesky. I ran the massive Qwen3-235B-A22B model (a Mixture-of-Experts model with 235 billion parameters, 22 billion of which are active for each token) with both GGUF and MLX using the beta version of the LM Studio app, and these were the results:

- GGUF: 16 tokens/second, ~133 GB of RAM used

- MLX: 24 tok/sec, ~124 GB RAM

As you can see from these first benchmarks (both based on the 4-bit quant of Qwen3-235B-A22B), the Apple Silicon-optimized version of the model resulted in better performance both for token generation and memory usage. Regardless of the version, the Mac Studio absolutely didn’t care and I could barely hear the fans going.
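As a quick sanity check, here’s the arithmetic behind these numbers in Python. The speedup and memory difference come straight from the two measurements above; the weight-footprint figures are back-of-the-envelope estimates that assume a nominal 4 bits per weight, not measurements. Real 4-bit quant formats store some extra metadata (such as scaling factors), and the runtime adds buffers like the KV cache, which plausibly accounts for the observed RAM being higher than the nominal estimate. Only the 22 billion active parameters are used for each generated token, which helps explain how a 235B model can still generate at interactive speeds.

```python
def speedup(baseline_tps: float, candidate_tps: float) -> float:
    """How many times faster the candidate generates tokens than the baseline."""
    return candidate_tps / baseline_tps


def weight_footprint_gb(params_billion: float, bits_per_weight: float) -> float:
    """Nominal size of the weights alone, in decimal gigabytes (1 GB = 10**9 bytes)."""
    # billions of parameters * bits per parameter / 8 bits per byte = billions of bytes
    return params_billion * bits_per_weight / 8


# Measured in LM Studio (4-bit quants of Qwen3-235B-A22B)
gguf_tps, mlx_tps = 16, 24
gguf_ram_gb, mlx_ram_gb = 133, 124

print(f"MLX speedup: {speedup(gguf_tps, mlx_tps):.1f}x")                  # 1.5x
print(f"RAM saved with MLX: ~{gguf_ram_gb - mlx_ram_gb} GB")              # ~9 GB
# Assumption: a nominal 4 bits per weight, ignoring quantization metadata
print(f"All weights: ~{weight_footprint_gb(235, 4):.1f} GB")              # ~117.5 GB
print(f"Active weights per token: ~{weight_footprint_gb(22, 4):.1f} GB")  # ~11.0 GB
```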

I also wanted to play around with the new generation of vision models (VLMs) to test their modern OCR capabilities. One of the tasks that has become kind of a personal AI eval for me lately is taking a long screenshot of a shortcut from the Shortcuts app (using CleanShot’s scrolling captures) and feeding it either as a full-res PNG or PDF to an LLM. As I shared before, due to image compression, the vast majority of cloud LLMs either fail to accept the image as input or compress it so much that graphical artifacts lead to severe hallucinations in the text analysis of the image. Only o4-mini-high – thanks to its more agentic capabilities and tool-calling – was able to produce a decent output; even then, that was only possible because o4-mini-high decided to slice the image into multiple parts and iterate through each one with discrete pytesseract calls. The task took almost seven minutes to run in ChatGPT.
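The slicing strategy is easy to picture with a small sketch. This is not what ChatGPT actually ran; it’s a minimal illustration, assuming full-width horizontal tiles with a small overlap so that a line of text cut at one tile boundary still appears whole in the next tile. The tile height and overlap values are arbitrary assumptions.

```python
from typing import List, Tuple

# (left, upper, right, lower): the box format Pillow's Image.crop expects
Box = Tuple[int, int, int, int]


def tile_boxes(width: int, height: int, tile_height: int, overlap: int = 0) -> List[Box]:
    """Split a tall image into full-width horizontal tiles, top to bottom.

    Consecutive tiles share `overlap` pixels, so a line of text cut by one
    tile boundary still appears whole in the neighboring tile, as long as
    the overlap is taller than a line of text.
    """
    if width <= 0 or height <= 0 or tile_height <= 0:
        raise ValueError("dimensions must be positive")
    if not 0 <= overlap < tile_height:
        raise ValueError("overlap must be in [0, tile_height)")

    step = tile_height - overlap
    boxes: List[Box] = []
    top = 0
    while True:
        bottom = min(top + tile_height, height)  # the last tile may be shorter
        boxes.append((0, top, width, bottom))
        if bottom == height:
            break
        top += step
    return boxes


# A 1000x2500 screenshot cut into 1000-pixel tiles with 100 pixels of overlap
for box in tile_boxes(1000, 2500, tile_height=1000, overlap=100):
    print(box)
# (0, 0, 1000, 1000)
# (0, 900, 1000, 1900)
# (0, 1800, 1000, 2500)
```

Each box could then be passed to Pillow’s `Image.crop`, and each cropped tile to `pytesseract.image_to_string`, concatenating the text in order.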

This morning, I installed the 72-billion parameter version of Qwen2.5-VL, gave it a full-resolution screenshot of a 40-action shortcut, and let it run with Ollama and llm-ollama. After 3.5 minutes and around 100 GB RAM usage, I got a really good, Markdown-formatted analysis of my shortcut back from the model.

To make the experience nicer, I even built a small local-scanning utility that lets me pick an image from Shortcuts and runs it through Qwen2.5-VL (72B) using the ‘Run Shell Script’ action on macOS. It worked beautifully on my first try. Amusingly, the smaller version of Qwen2.5-VL (32B) thought my photo of ergonomic mice was a “collection of seashells”. Fair enough: there’s a reason bigger models are heavier and costlier to run.
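The utility itself isn’t included here, so what follows is only a minimal sketch of how such a helper could look. It assumes a recent version of the LLM CLI (with the `-a`/`--attachment` option for images) and the llm-ollama plugin, which exposes local Ollama models to `llm`. The model tag `qwen2.5vl:72b`, the prompt text, and the function names are assumptions for illustration; `llm models` lists the exact model IDs available on a given machine.

```python
import shutil
import subprocess
import sys
from typing import List

# Assumption: the Ollama tag for the 72B model; verify with `llm models`
MODEL = "qwen2.5vl:72b"
# Hypothetical prompt text
PROMPT = (
    "Describe this image in detail. If it is a screenshot of a shortcut, "
    "list every action in order as Markdown."
)


def build_command(image_path: str, model: str = MODEL, prompt: str = PROMPT) -> List[str]:
    """Build an `llm` invocation that sends `prompt` to `model` with the image attached."""
    return ["llm", "-m", model, "-a", image_path, prompt]


def describe_image(image_path: str) -> str:
    """Run the local model on one image and return its text response."""
    if shutil.which("llm") is None:
        raise RuntimeError("the llm CLI is not on PATH")
    result = subprocess.run(
        build_command(image_path), capture_output=True, text=True, check=True
    )
    return result.stdout


if __name__ == "__main__" and len(sys.argv) > 1:
    # The image path arrives as the first command-line argument
    print(describe_image(sys.argv[1]))
```

In Shortcuts, a ‘Run Shell Script’ action set to pass its input as arguments could call a script like this with the chosen image’s file path. Shortcuts runs shell scripts with a minimal environment, so `llm` may need to be referenced by its full path.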

Given my struggles with OCR and document analysis with cloud-hosted models, I’m very excited about the potential of local VLMs that bypass memory constraints thanks to the M3 Ultra and provide accurate results in just a few minutes without having to upload private images or PDFs anywhere. I’ve been writing a lot about this idea of “hybrid automation” that combines traditional Mac scripting tools, Shortcuts, and LLMs to unlock workflows that just weren’t possible before; I feel like the power of this Mac Studio is going to be an amazing accelerator for that.

Next up on my list: understanding how to run MLX models with mlx-lm, investigating long-context models with dual-chunk attention support (looking at you, Qwen 2.5), and experimenting with Gemma 3. Fun times ahead!

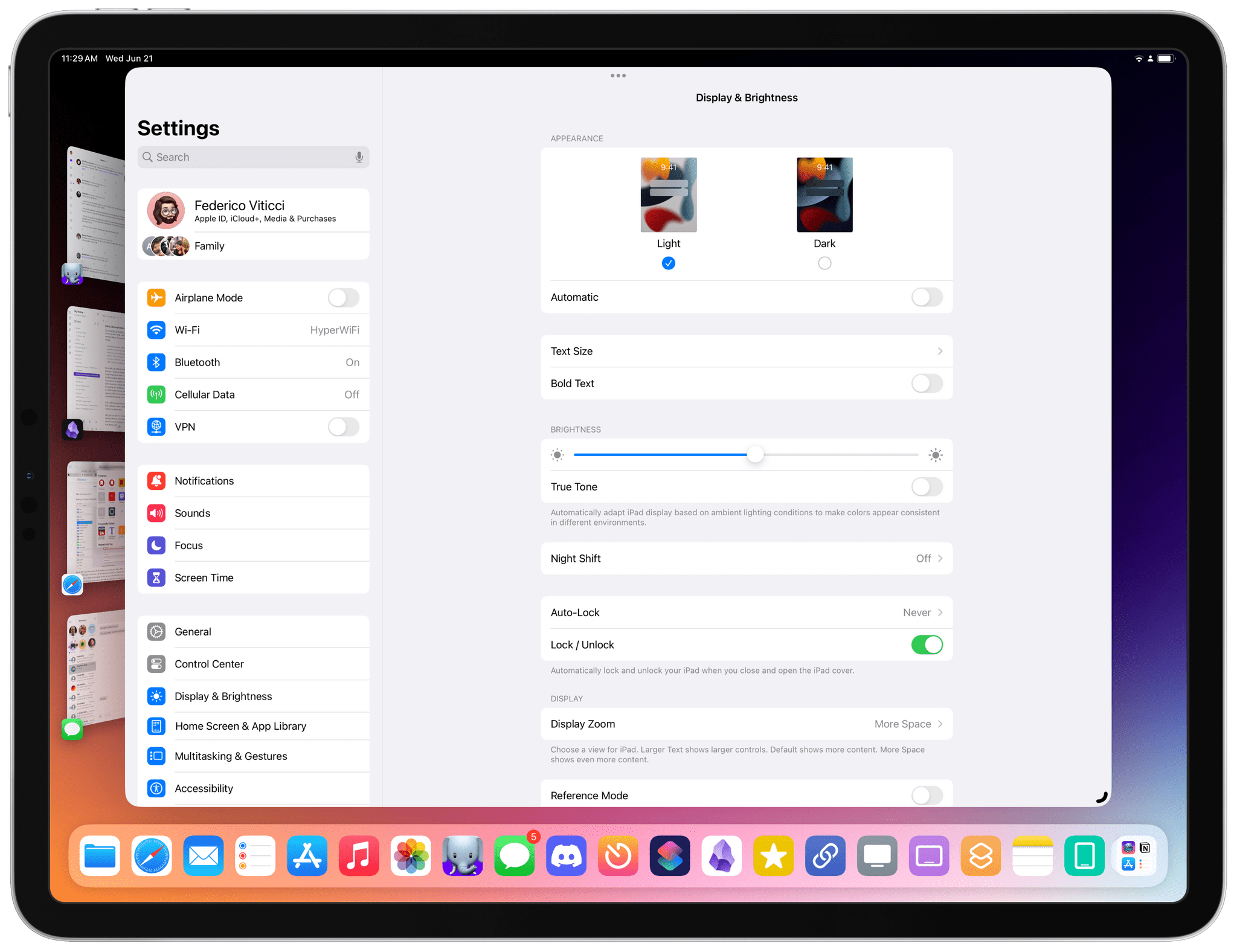

Faking ‘Clamshell Mode’ with External Displays in iPadOS 17

Fernando Silva of 9to5Mac came up with a clever workaround to have ‘clamshell mode’ in iPadOS 17 when an iPad is connected to an external display. The catch: it doesn’t really turn off the iPad’s built-in display.

Now before readers start spamming the comments, this is not true clamshell mode. True clamshell mode kills the screen of the host computer and moves everything from that display to the external monitor. This will not do that. But this workaround will allow you to close your iPad Pro, connect a Bluetooth keyboard and mouse, and still be able to use Stage Manager on an external display.

Essentially, the method involves disabling the ‘Lock / Unlock’ toggle in Settings ⇾ Display & Brightness that controls whether the iPad’s screen should lock when a cover is closed on top of it. This has been the iPad’s default behavior since the iPad 2 and the debut of the Smart Cover, and it still applies to the latest iPad Pro and Magic Keyboard: when the cover is closed, the iPad gets automatically locked. However, this setting can be disabled, and if you do, then sure: you could close an iPad Pro and continue using iPadOS on the external display without seeing the iPad’s built-in display. Except the iPad’s display is always on behind the scenes, which is not ideal.

Still: if we’re supposed to accept this workaround as the only way to fake ‘clamshell mode’ in iPadOS 17, I would suggest some additions to improve the experience.

Last Week, on Club MacStories: Symlinks for Windows and macOS, File Organization Tips, Batch-Converting Saved Timers, and an Upcoming ‘Peek Performance’ Town Hall

Because Club MacStories now encompasses more than just newsletters, we’ve created a guide to the past week’s happenings along with a look at what’s coming up next:

Monthly Log: February 2022

- Federico shared how he’s using symbolic links to sync his Dolphin emulator saves across Windows and macOS using Dropbox.

- John explained his lightweight system for organizing files using folders, tagging, and search.
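The general technique behind symlink-based syncing is to move a folder into Dropbox and leave a symbolic link at the original location, so the app keeps reading and writing the path it expects while Dropbox syncs the real files. The summary above doesn’t spell out Federico’s exact setup, so this is a minimal Python sketch under that assumption; the function name and paths are hypothetical, and Dolphin’s actual save location differs by platform. On Windows, creating symlinks requires administrator rights or Developer Mode.

```python
import os
import shutil
from pathlib import Path


def move_and_link(local_dir: Path, synced_dir: Path) -> None:
    """Move local_dir to synced_dir and replace it with a symlink pointing there.

    Hypothetical helper for illustration.
    """
    if local_dir.is_symlink():
        raise ValueError(f"{local_dir} is already a symlink")
    if synced_dir.exists():
        raise FileExistsError(f"{synced_dir} already exists; link to it instead")
    synced_dir.parent.mkdir(parents=True, exist_ok=True)
    # Move the real files into the synced folder...
    shutil.move(str(local_dir), str(synced_dir))
    # ...then create a link at the old path: os.symlink(target, link_name).
    # target_is_directory only matters on Windows and is ignored elsewhere.
    os.symlink(synced_dir, local_dir, target_is_directory=True)


# Hypothetical paths for illustration only:
# move_and_link(Path.home() / "Emulator" / "Saves",
#               Path.home() / "Dropbox" / "Emulator" / "Saves")
```

On the second computer, the same kind of link would point at the already-synced folder instead of moving anything, after backing up whatever saves exist locally.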

MacStories Weekly: Issue 310

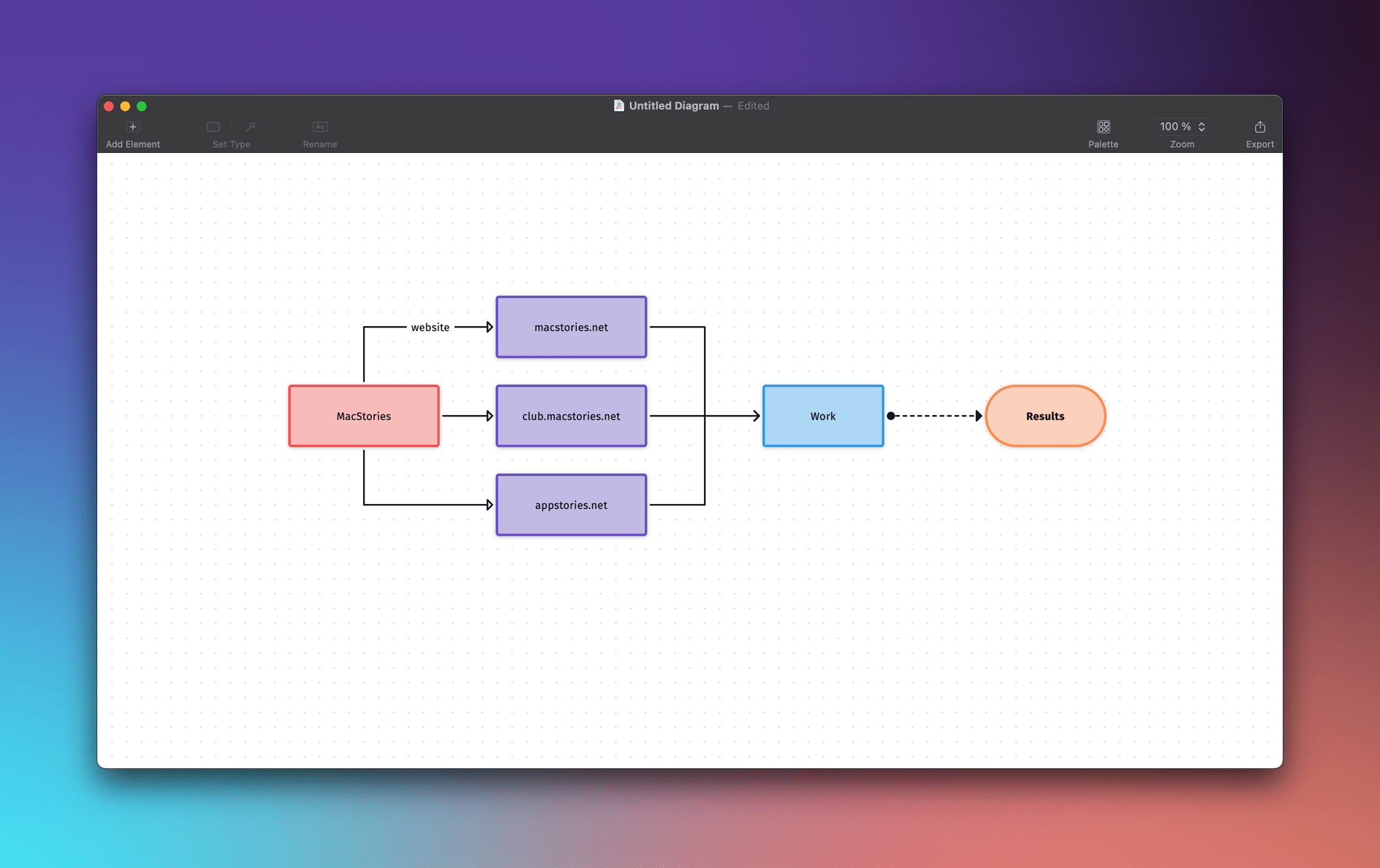

- John recommended Diagrams, an excellent charting tool.

- Federico created a shortcut for batch-converting his saved Timery timers into individual shortcuts.

- John shared a tip on how to mimic the iPhone and iPad’s scroll-to-top gesture on the Mac.

- Plus:

  - App Debuts

  - Highlights from the Club MacStories+ Discord

  - Interesting links from around the web

  - A sneak peek at what’s next on MacStories’ podcasts

  - and more

Up Next

Next week on Club MacStories:

- On March 8th, at 5:00 pm Eastern US time, we’ll be holding a live audio Town Hall in the Club MacStories+ and Club Premier Discord community. Join Federico, John, and Alex for reactions to the day’s events and to ask any questions you may have. More details about the Town Hall are available in the Announcements channel on Discord.

- In MacStories Weekly 311, John will publish a shortcut for tweeting links to web articles via Typefully.

Apple Music, Exclusive Extras, and Merch

Apple and Billie Eilish, whose highly anticipated album WHEN WE ALL FALL ASLEEP, WHERE DO WE GO? (out March 29) has set a new record for pre-adds on Apple Music, have launched an interesting new kind of partnership on the company’s streaming service. At this link (which is not the same as the standard artist page for Billie Eilish on Apple Music), you’ll find a custom page featuring an exclusive music video for “you should see me in a crown”, the upcoming album that you can pre-add to your library, an Essentials playlist for Billie Eilish’s previous hits, two Beats 1 interviews, and, for the first time on Apple Music (that I can recall), a link to buy a limited edition merch collection.

The merch drop is available at this page, which is a Shopify store with Apple Music branding that offers a t-shirt and hoodie designed by streetwear artist Don C, featuring Takashi Murakami’s artwork from the aforementioned music video. The purchase flow features Apple Pay support; both the website and email receipts contain links to watch the video, pre-add the album, and listen to the Essentials playlist on Apple Music.

For a while now, I’ve been arguing that Apple Music should offer the ability to buy exclusive merch and concert tickets to support your favorite artists without leaving the app. The move would fit nicely with Apple’s growing focus on services (you have to assume the company would take a cut from every transaction), it would increase the lock-in aspect of Apple Music (because you can only get those exclusive extras on Apple’s service), and it would provide artists with an integrated, more effective solution to connect with fans directly than yet another attempt at social networking.

This collaboration with Billie Eilish feels like a first step in that direction, with Apple actively promoting the limited edition sale and embedding different types of exclusive content (video, merch, Beats 1 interviews) in a single custom page. I wouldn’t be surprised if Apple continues to test this approach with a handful of other artists who have major releases coming up in 2019.

The Reliable Simplicity of AirPods

Chris Welch, writing for The Verge on AirPods’ advantage over other wireless earbuds:

AirPods are the best truly wireless earbuds available because they nail the essentials like ease of use, reliability, and battery life. There are alternatives that definitely _sound_ better from Bose, B&O Play, and others. But they often cost more and all of them experience occasional audio dropouts. AirPods don’t. I’d argue they’re maybe the best first-gen product Apple has ever made. Unfortunately, I’m one of the sad souls whose ears just aren’t a match for the AirPods — and I’m a nerd who likes having both an iPhone and Android phone around — so I’ve been searching for the best non-Apple option.

But some 14 months after AirPods shipped, there’s still no clear cut competitor that’s truly better at the important stuff. They all lack the magic sauce that is Apple’s W1 chip, which improves pairing, range, and battery life for the AirPods. At this point I think it’s fair to say that Bluetooth alone isn’t enough to make these gadgets work smoothly. Hopefully the connection will be more sturdy once more earbuds with Bluetooth 5 hit the market. And Qualcomm is also putting in work to help improve reliability.

I haven’t tested all the wireless earbuds Welch has, but I have some anecdotal experience here.

A few months ago, I bought the B&O E8 earbuds on Amazon. After getting a 4K HDR TV for Black Friday (the 55-inch LG B7), I realized that I wanted to be able to watch a movie or play videogames while lying in bed without having to put bulky over-ear Bluetooth headphones on. Essentially, I wanted AirPods for my TV, but I didn’t want to use the AirPods that were already paired with my iPhone and iPad. I wanted something that I could take out of the case, put on, and be done with. So instead of getting a second pair of AirPods, I decided to try the E8.

I like the way the E8 sound and I’m a fan of the Comply foam tips. The case is elegant (though not as intuitive as the AirPods’ case), and the gestures can be confusing. My problem is that, despite sitting 3 meters away from the TV, one of the earbuds constantly drops out. I sometimes have to sit perfectly still to ensure the audio doesn’t cut out – quite often, even turning my head causes the audio to drop out in one of the E8 earbuds. I’m still going to use these because I like the freedom granted by a truly wireless experience and because I’ve found the ideal position that doesn’t cause audio issues, but I’m not a happy customer. Also, it’s too late to return them now.

A couple of days ago, I was doing chores around the house. I usually listen to podcasts with my AirPods on if it’s early and my girlfriend is still sleeping, which means I leave my iPhone in the kitchen and move around wearing AirPods. At one point, I needed to check out something outside (we have a very spacious terrace – large enough for the dogs to run around) and I just walked out while listening to a podcast.

A couple of minutes later, the audio started cutting out. My first thought was that something in Overcast was broken. It took me a solid minute to realize that I had walked too far away from the iPhone inside the house. I’m so used to the incredible reliability and simplicity of my AirPods, it didn’t even occur to me that I shouldn’t have left my iPhone 15 meters and two rooms away.

The Cases for (and Against) Apple Adopting USB-C on Future iPhones

Jason Snell, writing for Macworld on the possibility of Apple adopting USB-C on future iPhones:

But the Lightning paragraph–that’s the really puzzling one. At first parsing, it comes across as a flat-out statement that Apple is going to ditch Lightning for the USB-C connector currently found on the MacBook and MacBook Pro. But a second read highlights some of the details–power cord and other peripheral devices?–that make you wonder if this might be a misreading of a decision to replace the USB-A-based cords and power adapters that come in the iPhone box with USB-C models. (I’m also a bit baffled by how the Lightning connector is “original,” unless it means it’s like a Netflix Original.)

Still, the Wall Street Journal would appear to be a more visible and reputable source than an analyst or blog with some sources in Apple’s supply chain. It’s generally considered to be one of the places where Apple has itself tactically leaked information in the past. So let’s take a moment and consider this rumor seriously. What would drive Apple to kill the Lightning connector, and why would it keep it around?

I’ve been going back and forth on this since yesterday’s report in The Wall Street Journal. Like Jason, I see both positive aspects and downsides to replacing Lightning with USB-C on the iPhone, most of which I highlighted on Connected. Jason’s article perfectly encapsulates my thoughts and questions.

USB-C represents the dream of a single, small, reversible connector that works with every device, and it’s being adopted by the entire tech industry. USB-C isn’t as small as Lightning but it’s small enough. More importantly, it’d allow users to use one connector for everything; USB-A, while universal on desktop computers, never achieved ubiquity because it wasn’t suited for mobile devices. USB-C is.

Conversely, Lightning is under Apple’s control, and Apple likes the idea of controlling its stack as much as possible (for many different reasons). A transition to USB-C would be costly for users in the short term, and it would be extremely perplexing the year after the iPhone 7 fully embraced Lightning.

Furthermore, unlike the transition from 30-pin to Lightning in 2012, Apple now has a richer, more lucrative ecosystem of accessories and devices based on Lightning, from AirPods and Apple Pencil to keyboards, mice, EarPods, game controllers, Siri remotes, and more. Moving away from Lightning means transitioning several product lines to a standard that Apple doesn’t own. It means additional inconsistency across the board.

Like I said, I’m not sure where I stand on this yet. These are questions Apple has likely already explored and settled internally. I’m leaning towards USB-C everywhere, but I’m afraid of transition costs and setting a precedent for future standards adopted by other companies (what if mini-USB-C comes out in two years?).

In the meantime, I know this: I’m upgrading to USB-C cables and accessories as much as I can (I just bought this charger and cable; the Nintendo Switch was a good excuse to start early) and I would love to have a USB-C port on the next iPad Pro. If there’s one place where Apple could start adopting peripherals typically used with PCs, that’d be the iPad.