Halide 1.14 is out with a new lens switching UI to accommodate the three-camera system of the iPhone 11 Pro and Pro Max. As soon as the update was out, I went for a walk to give it a try.

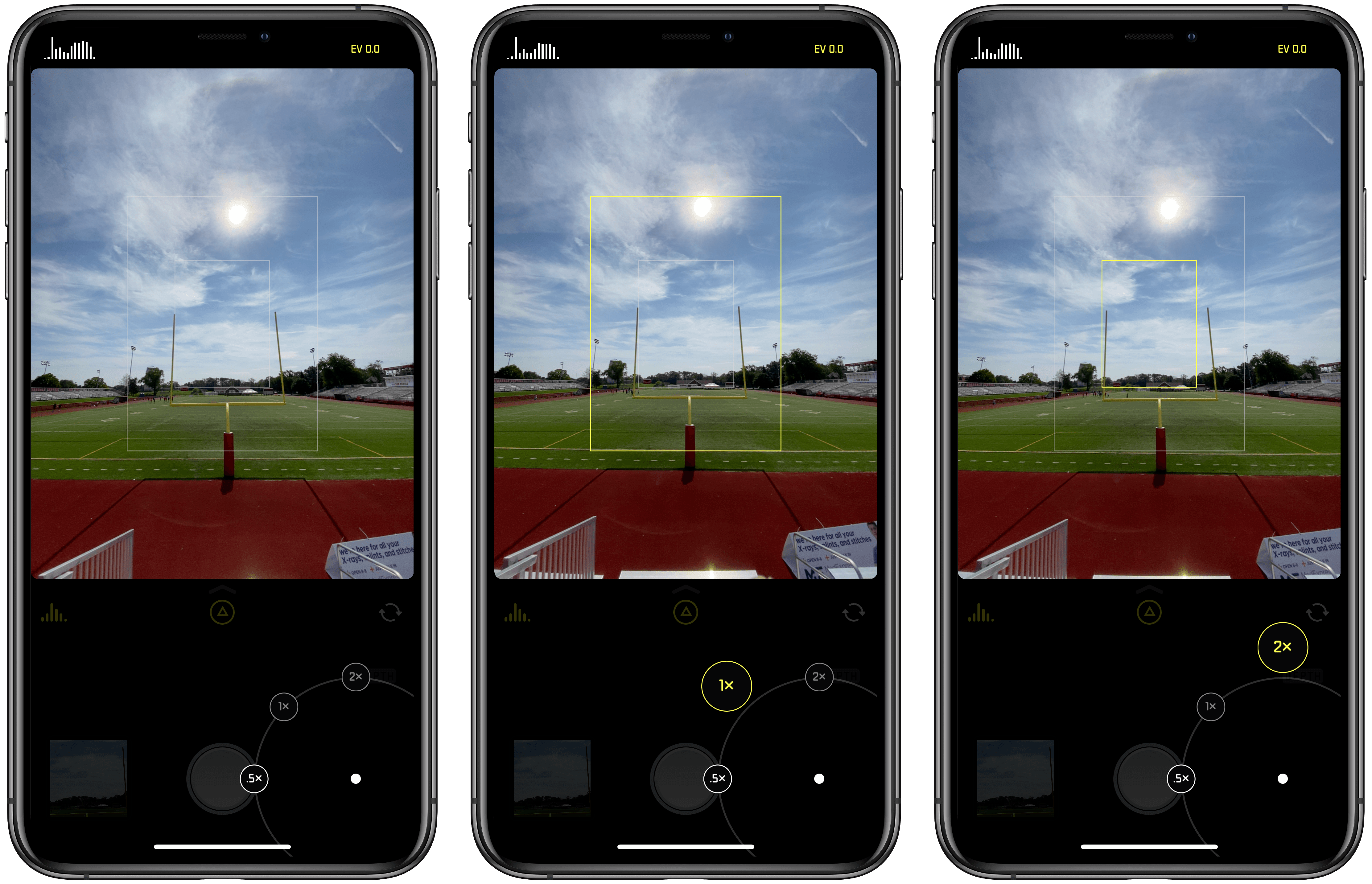

Halide has introduced a new lens-switching button with haptic feedback and a dial-like system for moving among the iPhone’s lenses. When you press down on the lens button, a tap of haptic feedback lets you know, without looking, that the lens picker is engaged.

From there, you can slide your finger among the ultra wide, wide, and telephoto options that radiate out from the button. Each option enlarges as your finger passes over it, and you feel another small haptic tap whenever you pass over a lens other than the one already selected. Once you have the lens you want, just let go, and your iPhone switches to it.

You can also cycle through the lenses in order by tapping the button repeatedly, or jump straight to the ultra wide lens by swiping left or to the telephoto lens by swiping up. In my brief tests, swiping left or up is the best option if you already know which lens you want, but the dial-like lens switcher is perfect for weighing your options first, because Halide has also added lens preview guides.

With the lens button engaged, Halide shows guides for each of your zoom options. That means if you’re using the ultra wide lens, you’ll see light gray guidelines for the wide and telephoto lenses. As you swipe over those lenses, the guides turn yellow to highlight the composition you’ll get if you switch to that lens.

If you’re already using the telephoto lens, though, Halide highlights the outer frame of the image to suggest you’ll get a wider shot, but it doesn’t zoom the viewfinder out to show that composition until you lift your finger. You can see how the lens guides work in the screenshots above, which I took at a local high school football field, and in this video:

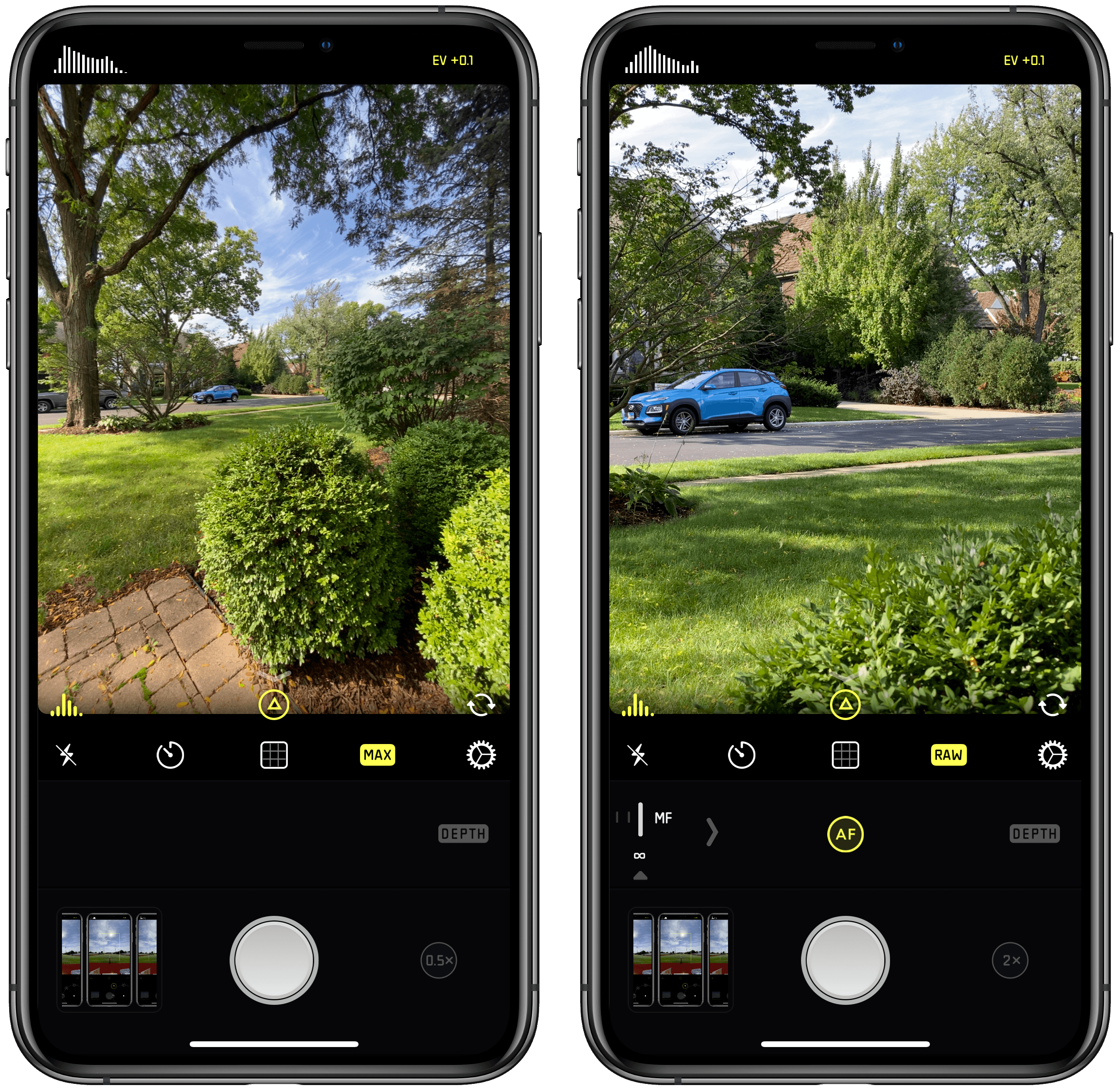

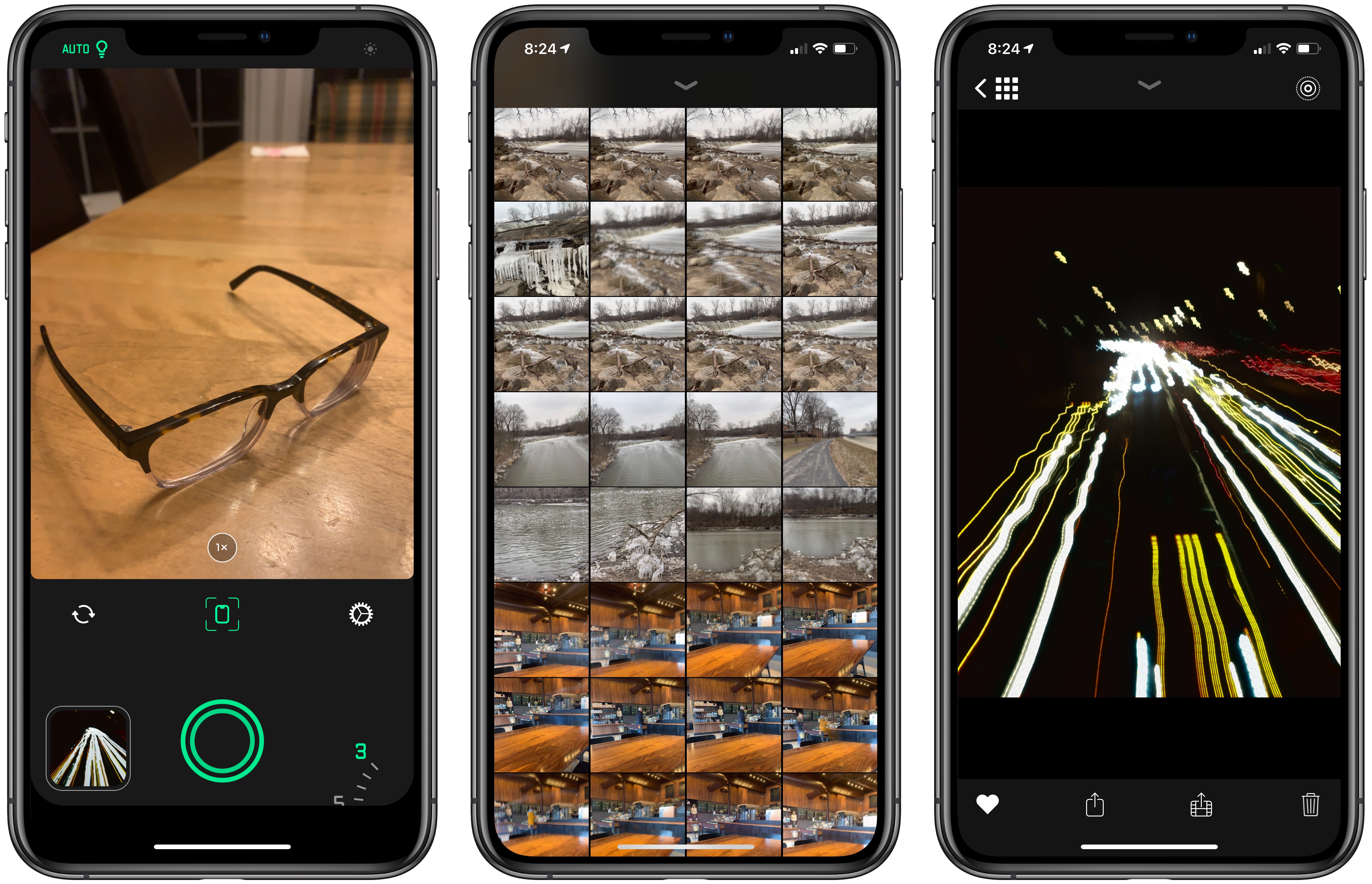

When you switch to the ultra wide lens, you’ll notice that not all of Halide’s usual features are available: manual focus and RAW shooting are both missing. That’s because the new iPhone hardware and iOS and iPadOS 13 don’t support those features on that lens. In place of the ‘RAW’ option, Halide offers a ‘MAX’ option, so you can get the most image data possible from your ultra wide shots, which you can see in the screenshots below.

The Halide team says the latest update also includes noise-reduction adjustments to the RAW images produced by the iPhone 11, but that it is continuing to fine-tune how the app handles RAW photos from the new phones as part of a more significant update coming next.

The latest update is relatively small, but I especially like its use of haptic feedback and lens guides, which make it easy to switch lenses while your attention stays on the camera’s viewfinder instead of Halide’s buttons.

Halide is available on the App Store for $5.99.