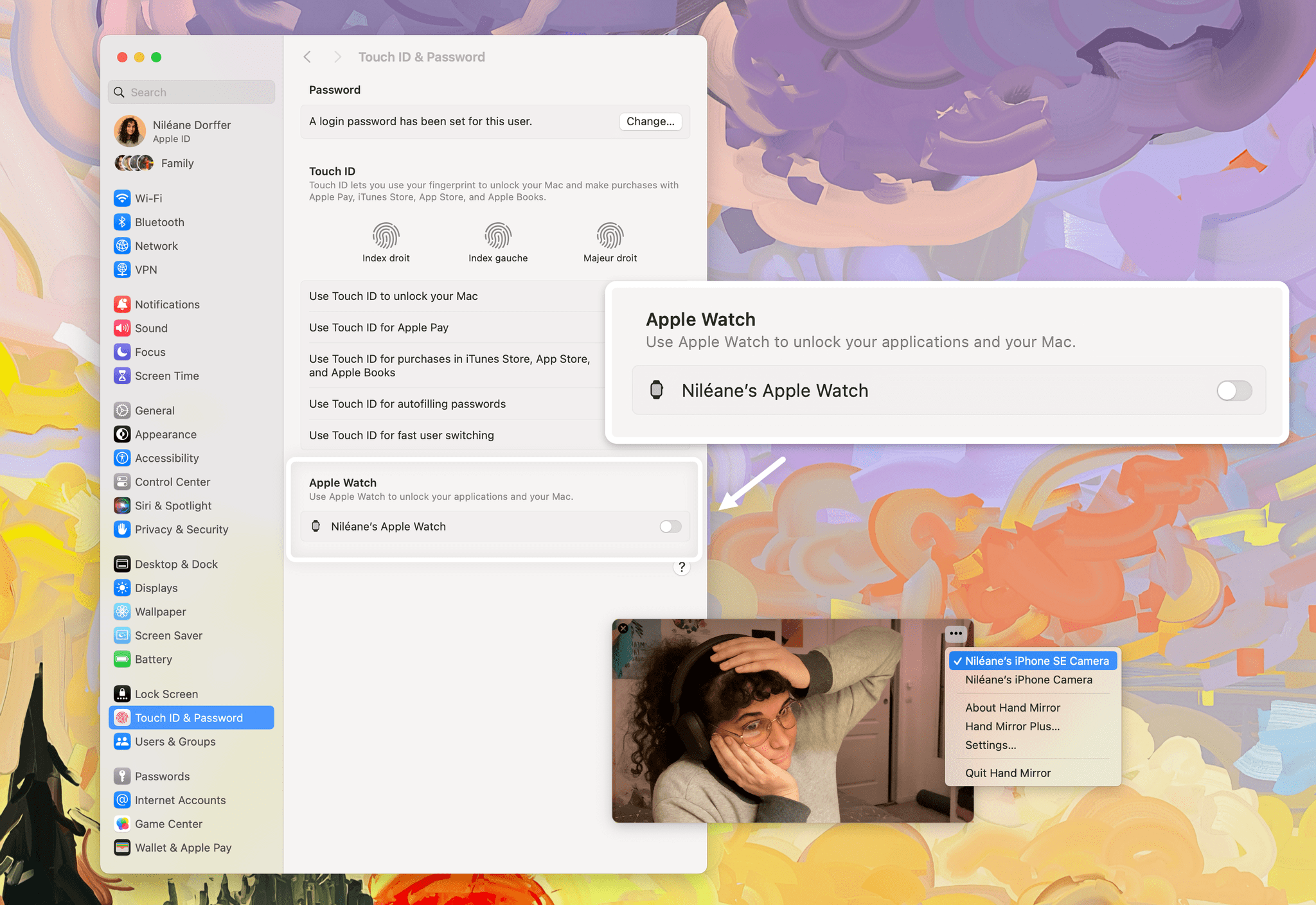

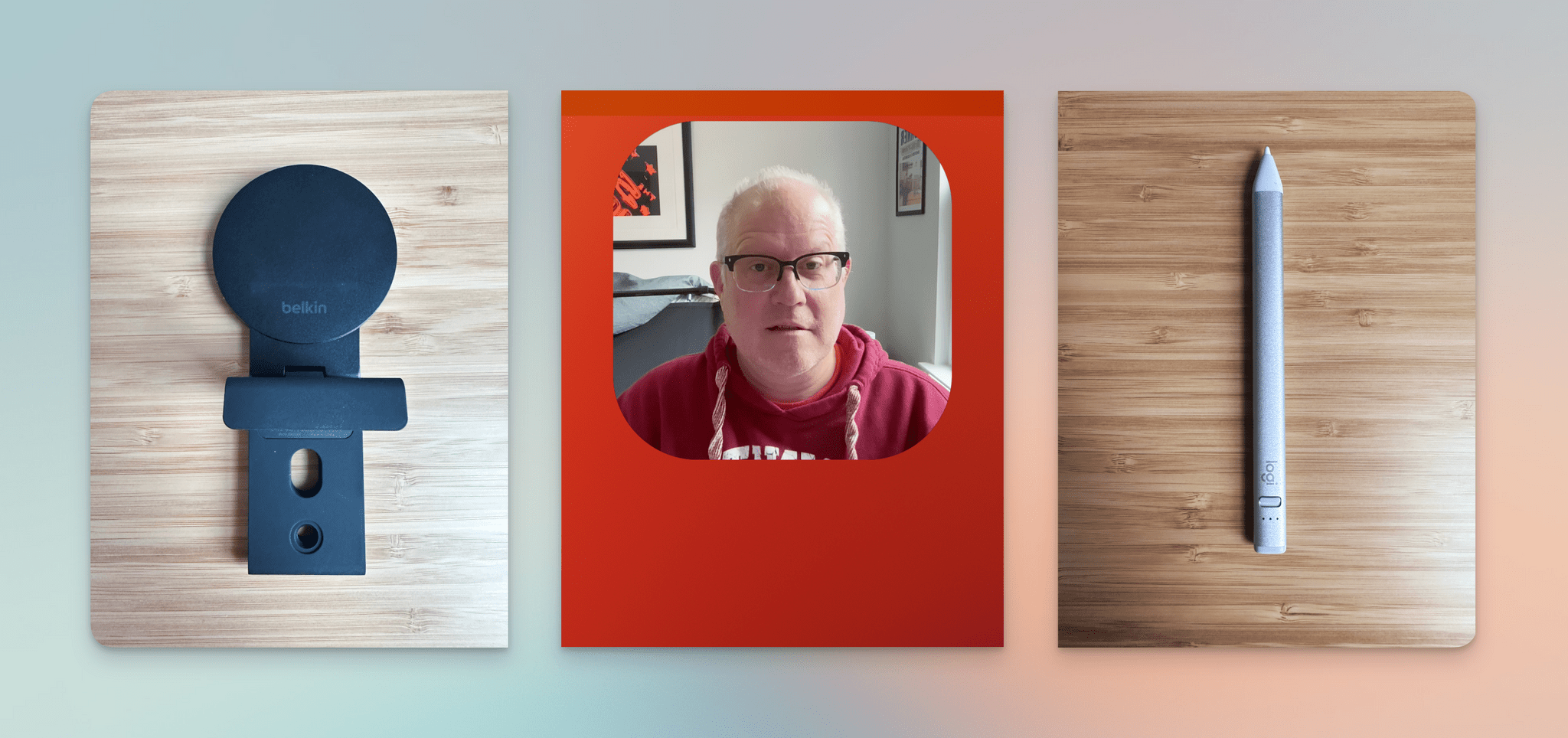

Continuity Camera is amazing. It’s a native macOS feature that lets you use an iPhone as your webcam, and since it was introduced in macOS Ventura, I’ve been using it almost daily. For it to work, you can either connect the iPhone to your Mac with a cable, or use it wirelessly if both devices are signed in with the same Apple ID. Even though I rely so often on video calls for work, I still don’t own a webcam today. Instead, the camera at my desk is an old iPhone SE (2nd generation), which was my partner’s main iPhone until they upgraded last year.

Over the past few months, however, the video calls I take every day have become critical to my work. As an activist, I now also run online training sessions, sometimes with up to a hundred participants at a time. I couldn’t afford to join one of those sessions with a camera that didn’t work. Continuity Camera had become a feature I needed to be reliable. Sadly, it isn’t. Half of the time, apps like Zoom and Discord on macOS couldn’t see the iPhone SE in their list of available cameras. That meant fetching a Lightning cable to connect the iPhone manually. If I was unlucky and that didn’t work, I had to reboot the Mac completely. If I was really unlucky and even that didn’t work, I ended up joining the call without a camera. Although my setup met every requirement listed by Apple Support, the problem kept recurring at random.

I had to find a fix for this bug, or at least a way to work around it.