Anthropic introduced a couple of new features in its Claude Mac app today that lower the friction of working with the chatbot.

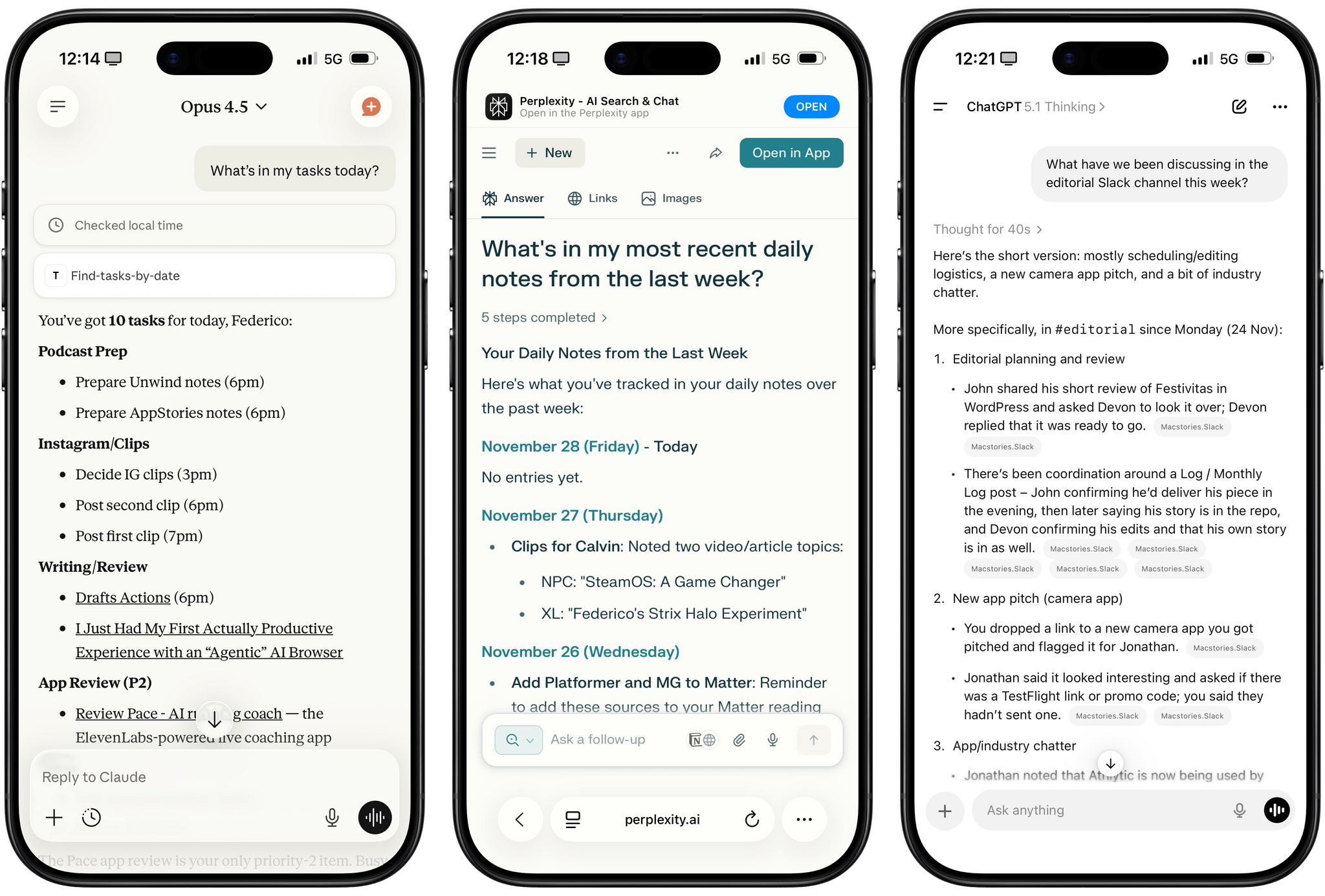

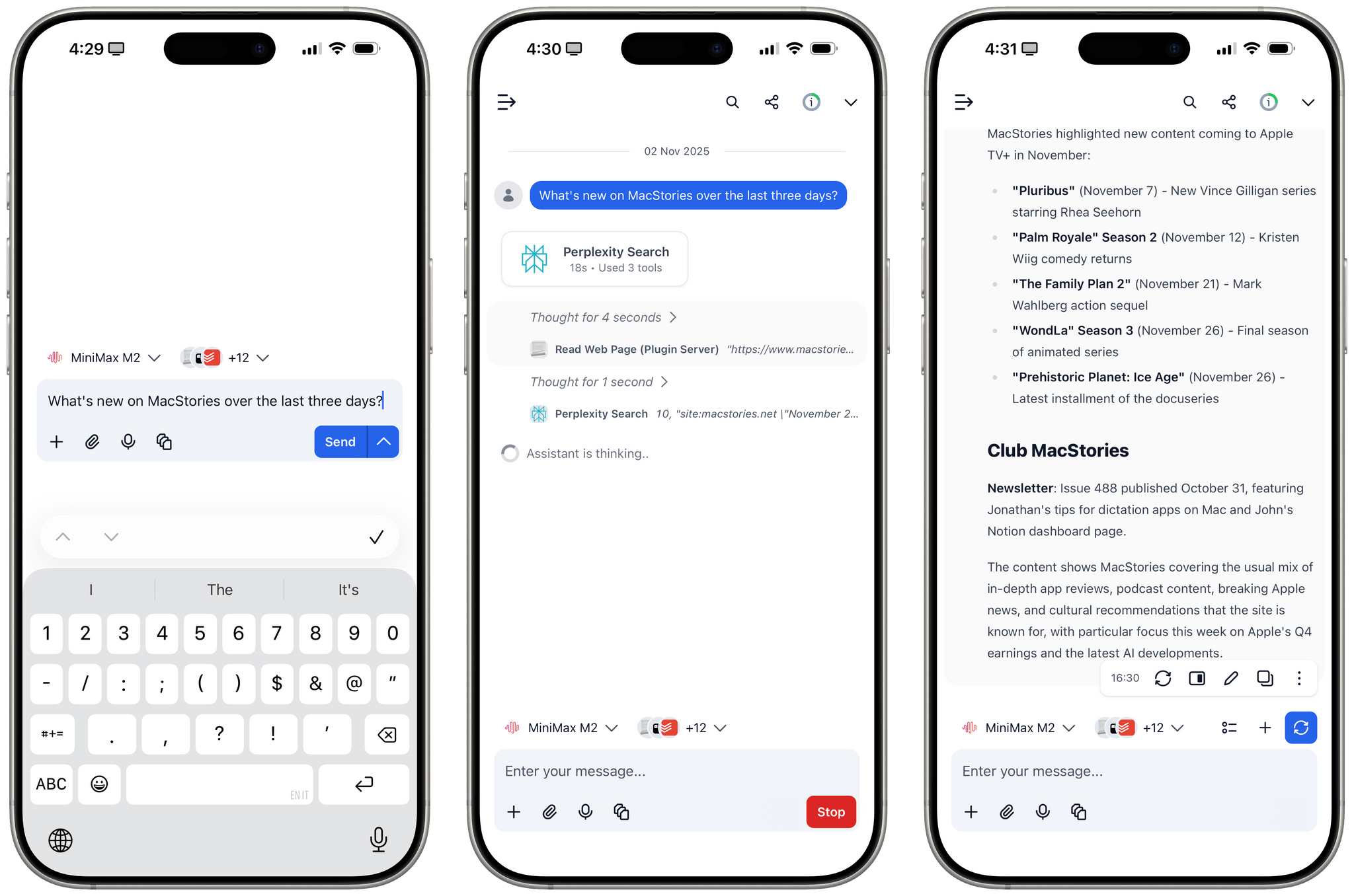

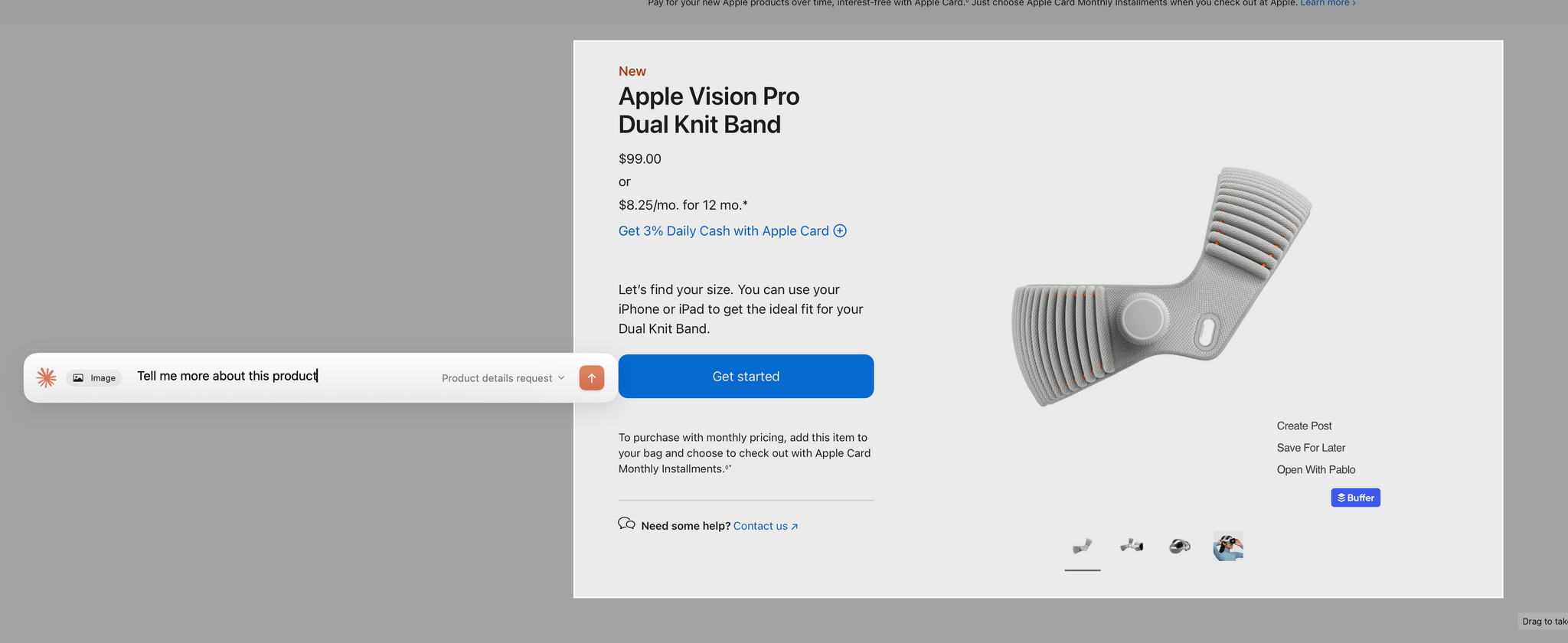

First, after granting Claude screenshot and accessibility permissions, you can double-tap the Option key to activate the app’s chat field as an overlay at the bottom of your screen. The shortcut simultaneously triggers crosshairs for dragging out a rectangle on your Mac’s screen. Once you do, the app takes a screenshot, and the chat field moves to the side of the area you selected with the screenshot attached. Type your query, and it’s sent to Claude along with the screenshot, switching you to the Claude app and kicking off your request automatically.

Instead of double-tapping the Option key, you can also set the keyboard shortcut to Option + Space or a custom key combination. That’s nice because not all automation systems support a double tap of a modifier key as a shortcut. For example, Logitech’s Creative Console cannot record a double tap of the Option key.

I send a lot of screenshots to Claude, especially when I’m debugging scripts. This new shortcut will greatly accelerate that process simply by switching me back to Claude for my answer. It’s a small thing, but I expect it will add up over time.

My only complaint is that the experience has been inconsistent across my Macs. On my M1 Max Mac Studio with 64GB of memory, it takes three to five seconds for Claude to attach the screenshot to its chat field, whereas on the M4 Max MacBook Pro I’ve been testing, the process is almost instant. The MacBook Pro is a much faster Mac than my Mac Studio, but I was surprised by the difference because it occurs at the screenshot phase of the interaction. My guess is that another app or system process is interfering with Claude.

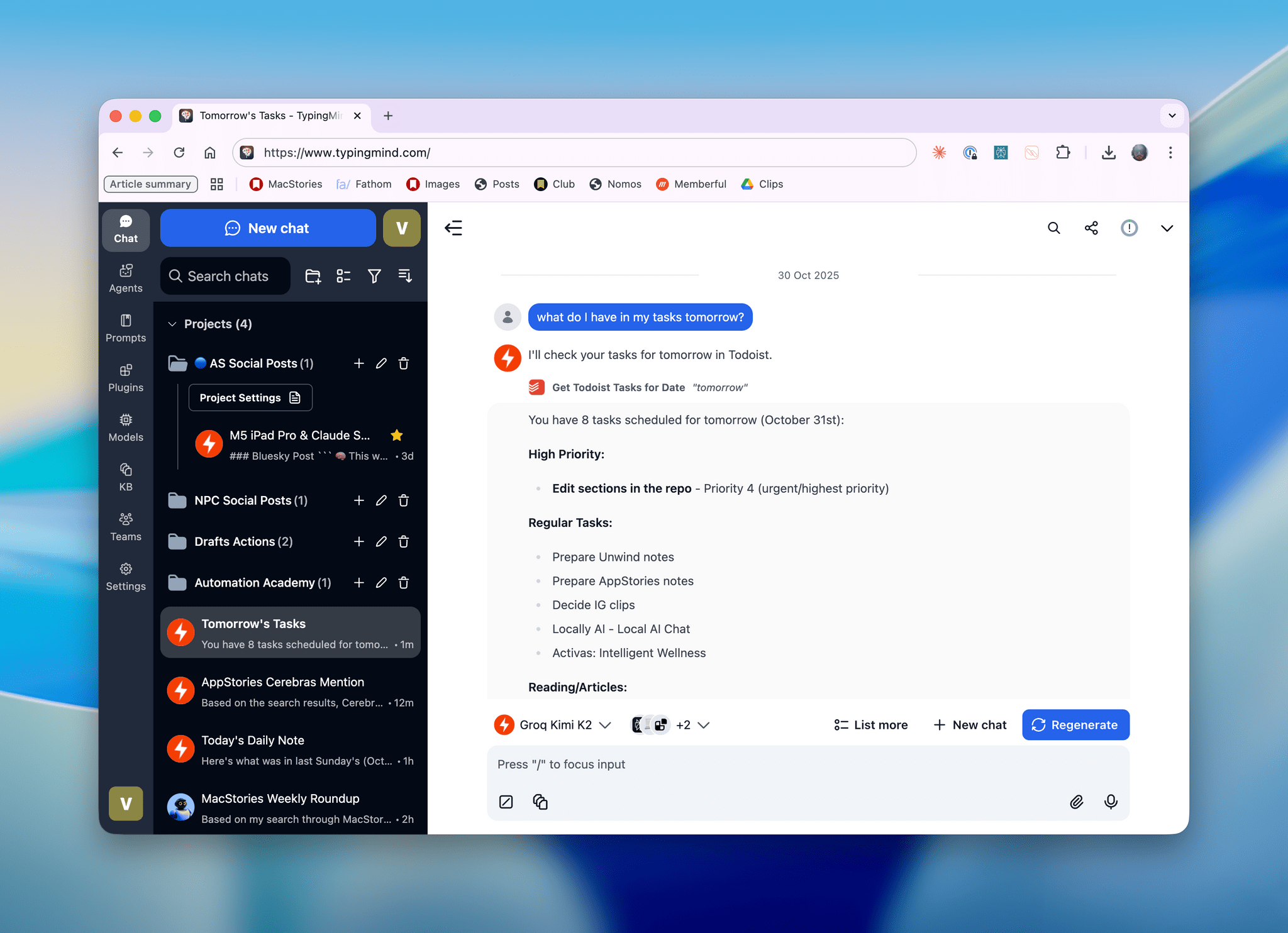

The other new feature is that you can set the Caps Lock key to trigger voice input. Once you trigger voice input, an orange cloud appears at the bottom of your screen, indicating that your microphone is active. The visual is a little over-the-top, but the feature is handy. Tap the Caps Lock key again to finish the recording, which is then transcribed into a Claude chat field at the bottom of your screen. Just press Return to send your query, and you’re switched back to the Claude app for a response.

One of the greatest strengths of modern AI chatbots is their multi-modality. With these new Claude features, Anthropic has made two of those modes, images and audio, a little bit easier to use, which gets you from input to response a little faster. I appreciate that, and I highly recommend giving both features a try.