Over the course of my career, I’ve had three distinct moments in which I saw a brand-new app and immediately felt it was going to change how I used my computer – and they were all about empowering people to do more with their devices.

I had that feeling the first time I tried Editorial, the scriptable Markdown text editor by Ole Zorn. I knew right away when two young developers told me about their automation app, Workflow, in 2014. And I couldn’t believe it when Apple showed that not only had they acquired Workflow, but they were going to integrate the renamed Shortcuts app system-wide on iOS and iPadOS.

Notably, the same two people – Ari Weinstein and Conrad Kramer – were involved with two of those three moments, first with Workflow, then with Shortcuts. And a couple of weeks ago, I found out that they were going to define my fourth moment, along with their co-founder Kim Beverett at Software Applications Incorporated, with the new app they’ve been working on in secret since 2023 and officially announced today.

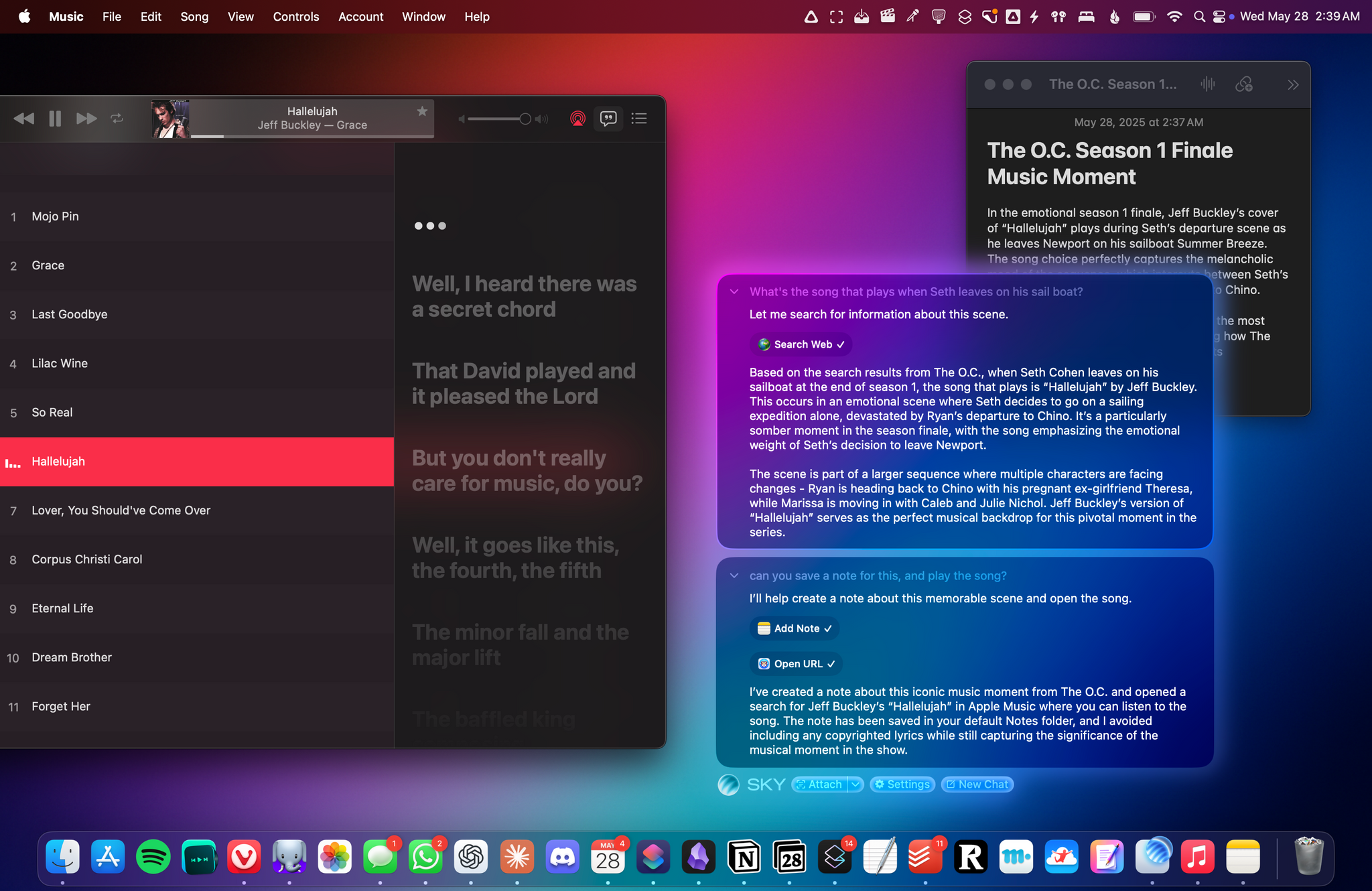

For the past two weeks, I’ve been able to use Sky, the new app from the people behind Shortcuts who left Apple two years ago. As soon as I saw a demo, I felt the same way I did about Editorial, Workflow, and Shortcuts: I knew Sky was going to fundamentally change how I think about my macOS workflow and the role of automation in my everyday tasks.

Only this time, because of AI and LLMs, Sky is more intuitive than all those apps and requires a different approach, as I will explain in this exclusive preview story ahead of a full review of the app later this year.

What Sky Is

First, let me share some of the details behind today’s announcement. Sky is currently in closed alpha, and the developers have rolled out a teaser website for it. There’s a promo video you can watch, and you can sign up for a waitlist as well. Sky is currently set to launch later this year. I’ve been able to test a very early development build of the app along with my colleague John Voorhees, and even though I ran into a few bugs, the team at Software Applications Incorporated fixed them quickly with multiple updates over the past two weeks. Regardless of my early issues, Sky shows incredible potential for a new class of assistive AI and approachable automation on the Mac. It’s the perfect example of the kind of “hybrid automation” I’ve been writing about so much lately.

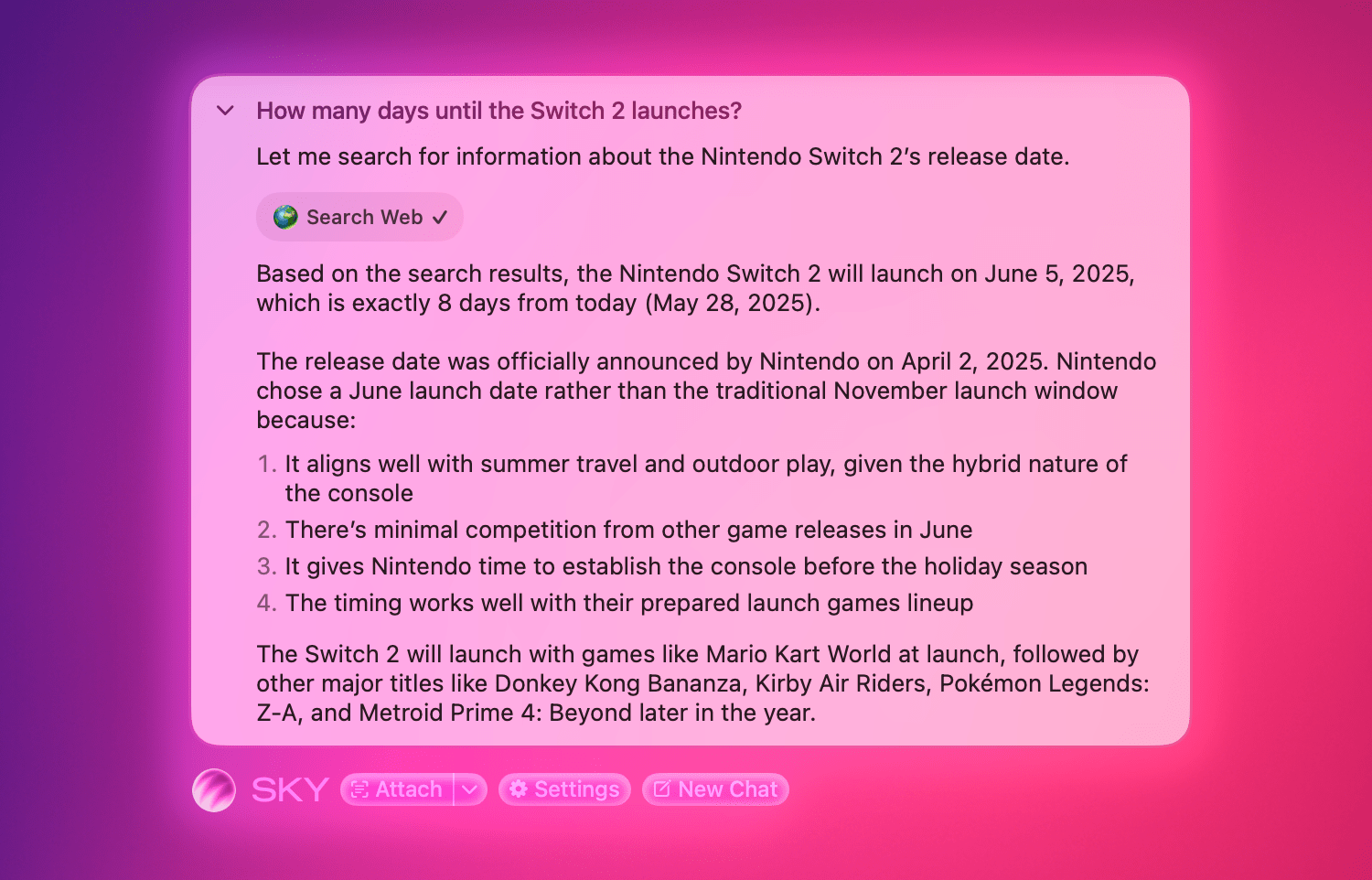

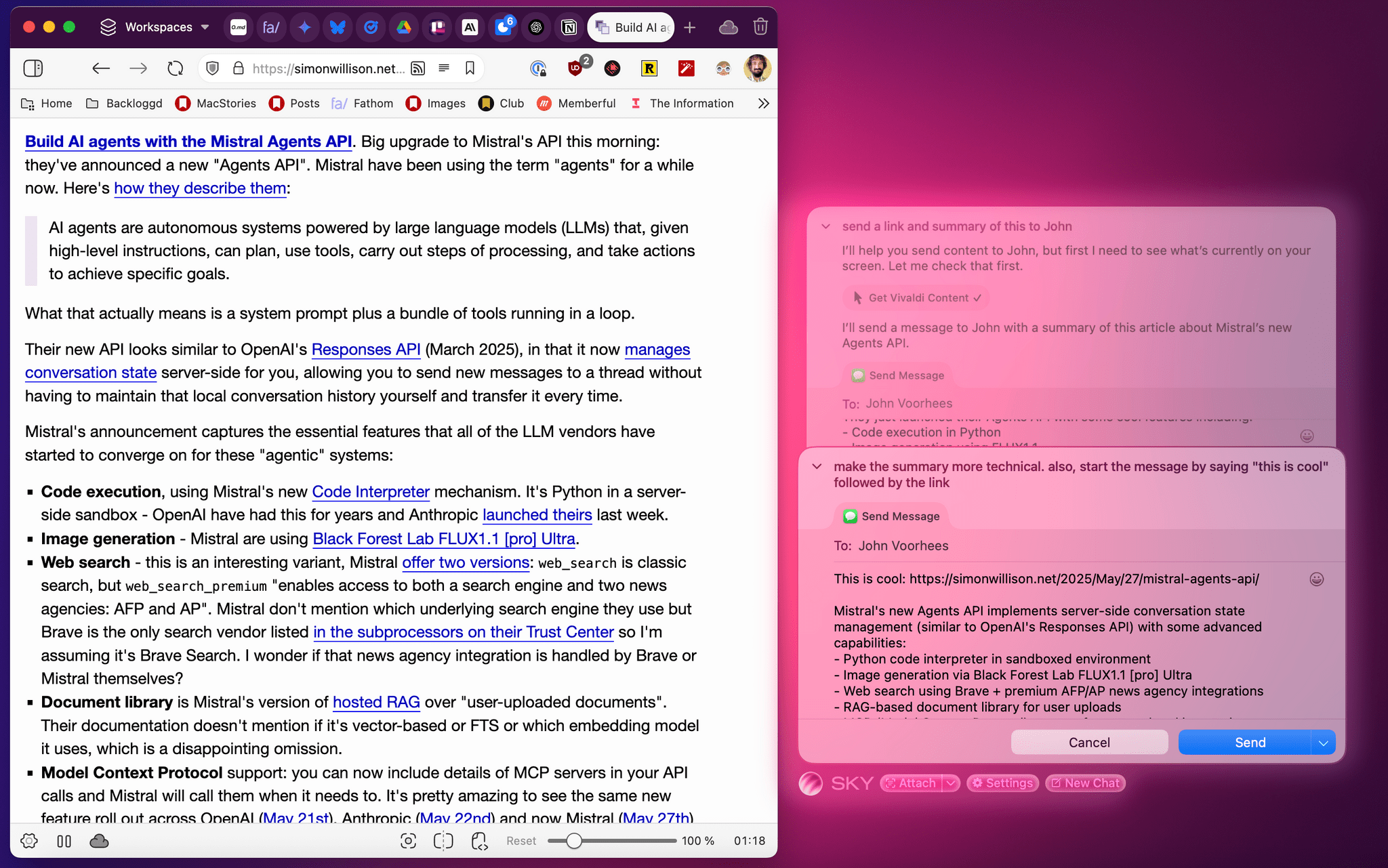

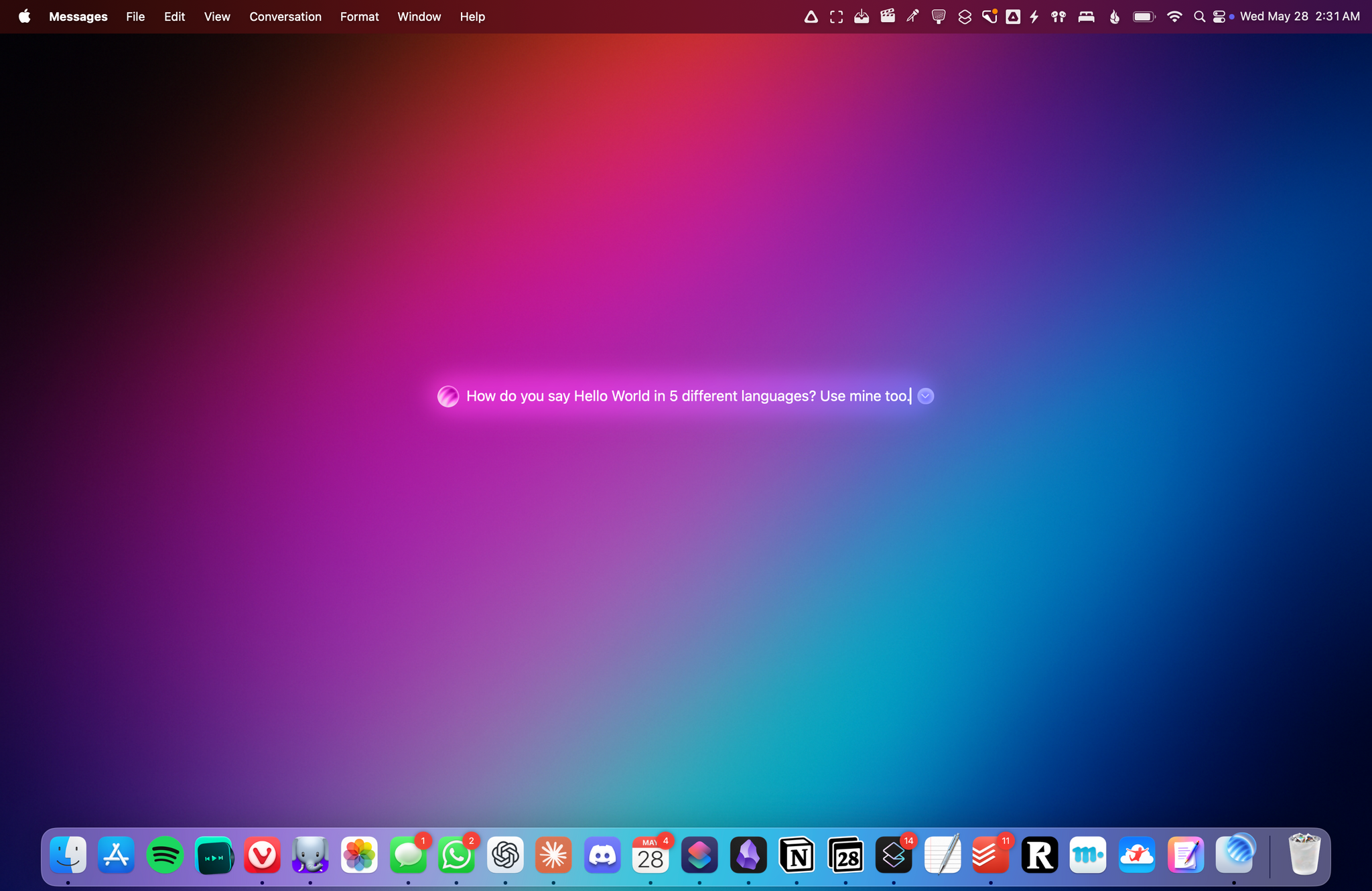

Sky is an AI-powered assistant that can perform actions and answer questions for any window and any app open on your Mac. On the surface, it may look like any other launcher or LLM with a desktop app: you press a hotkey, and a tiny floating UI comes up.

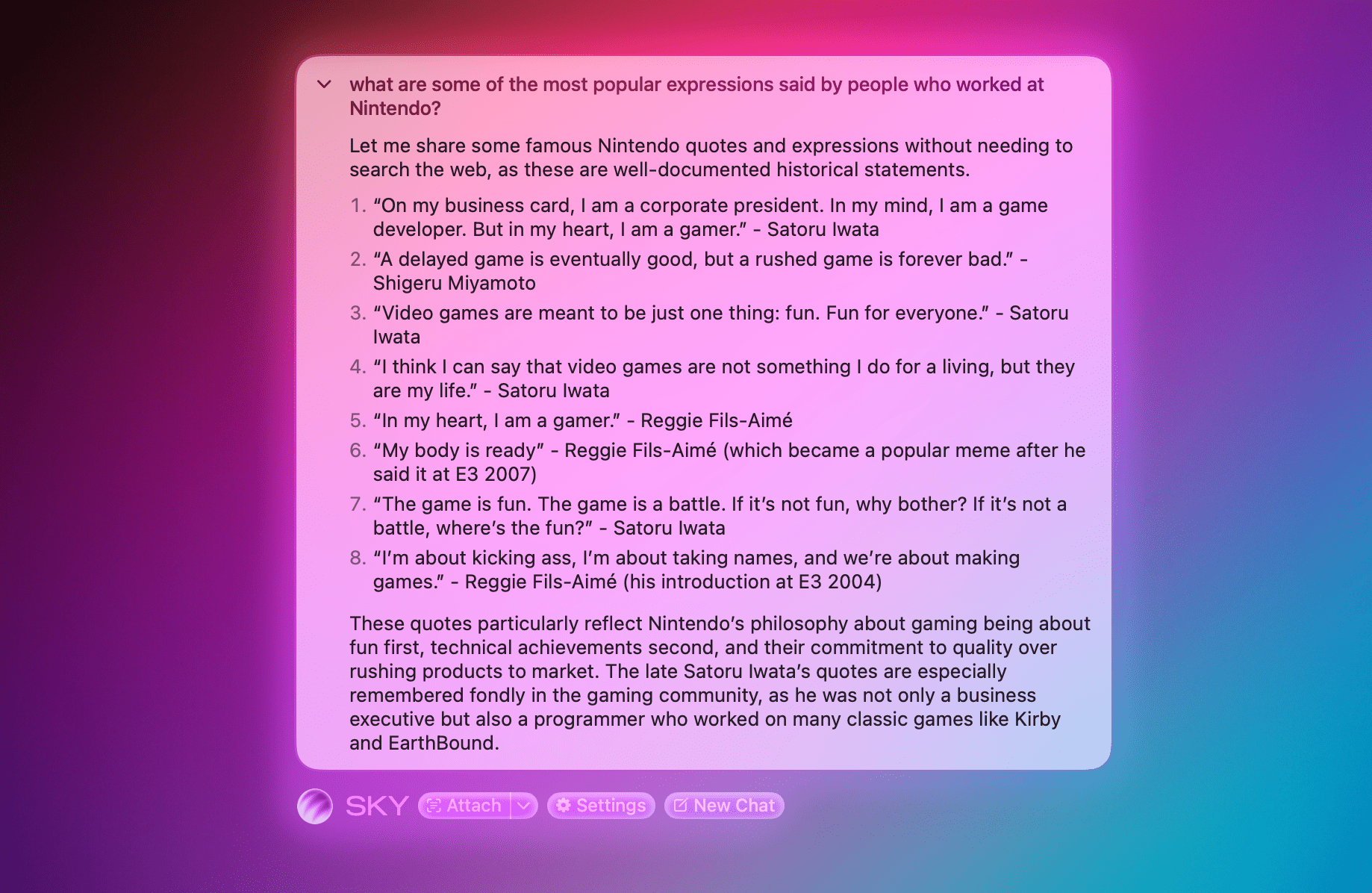

You can ask Sky typical LLM questions, and the app will use GPT 4.1 or Claude to respond with natural language. That’s nice and already better than Siri when it comes to general questions, but that’s not the main point of the app.

What sets Sky apart from anything I’ve tried or seen on macOS to date is that it uses LLMs to understand which windows are open on your Mac, what’s inside them, and what actions you can perform based on those apps’ contents. It’s a lofty goal and, at a high level, it’s predicated upon two core concepts. First, Sky comes with a collection of built-in “tools” [1] for Calendar, Messages, Notes, web browsing, Finder, email, and screenshots, which allow anyone to get started and ask questions that perform actions with those apps. If you want to turn a webpage shown in Safari into an event in your calendar, or perhaps a document in Apple Notes, you can just ask in natural language out of the box.

At the same time, Sky allows power users to make their own tools that combine custom LLM prompts with actions powered by Shortcuts, shell scripts, AppleScript, custom instructions, and, down the road, even MCP. All of these custom tools become native features of Sky that can be invoked and mixed with natural language.

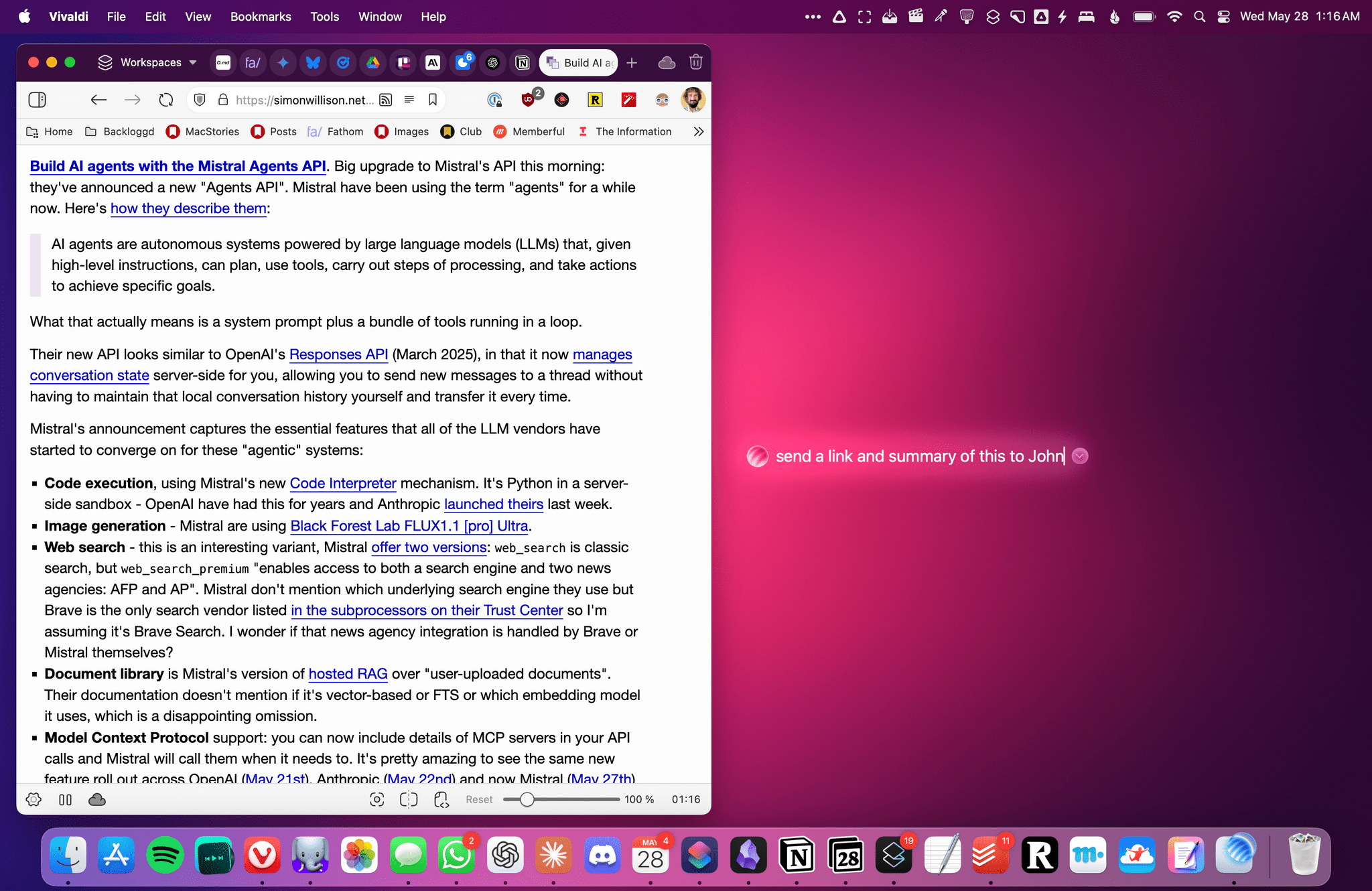

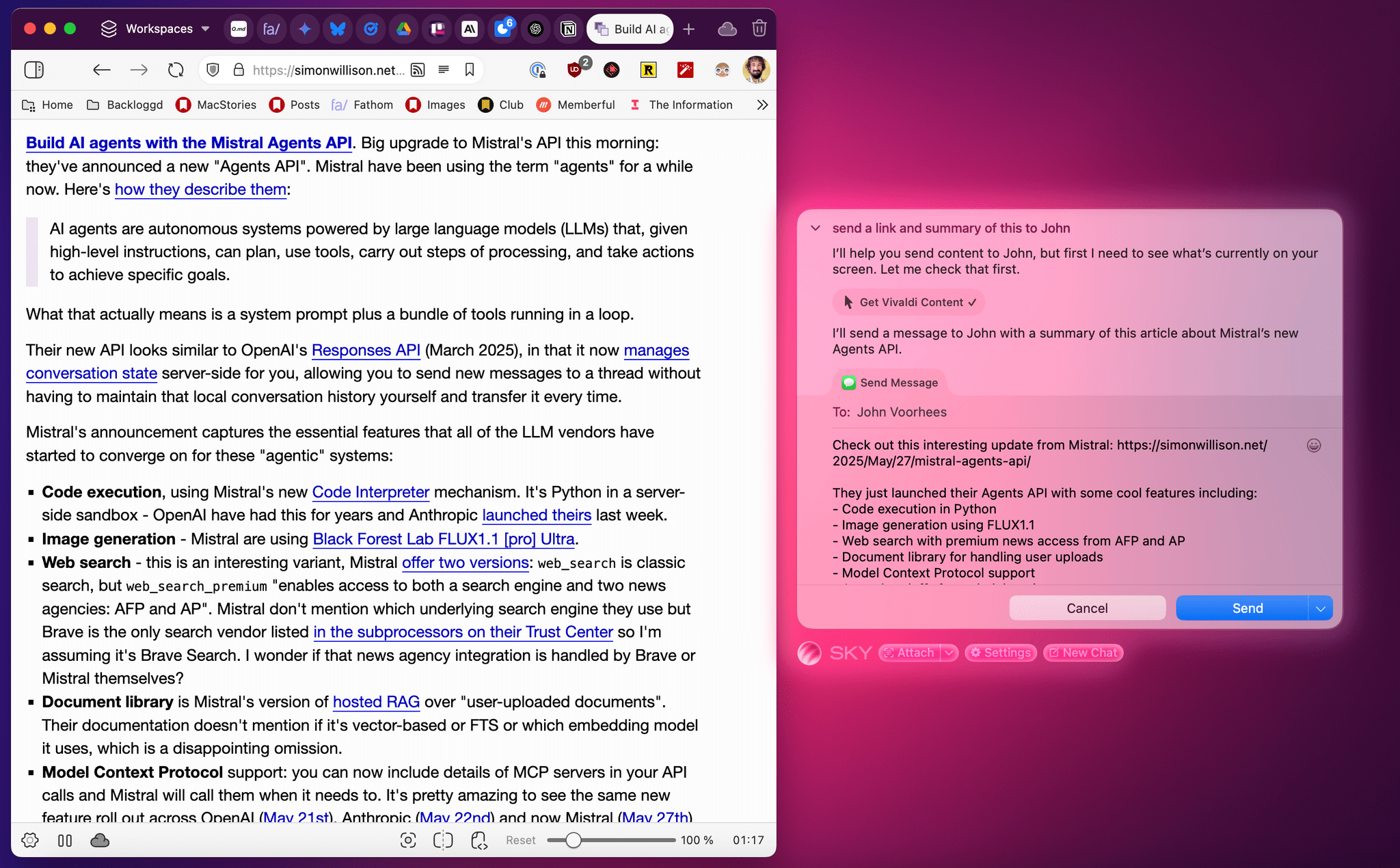

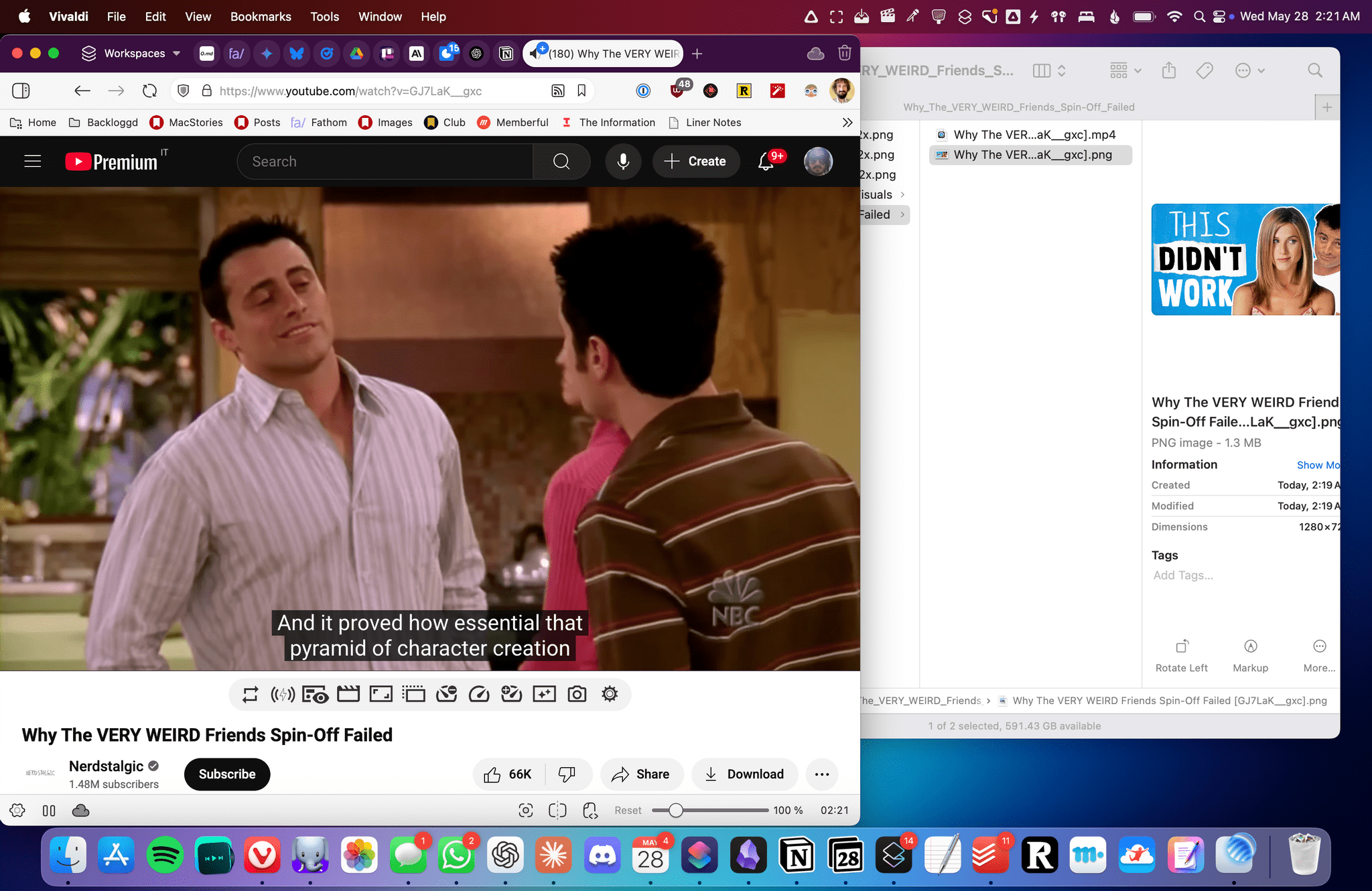

If this sounds too good to be true, well, let me explain and show you how it works. Let’s say that you’re browsing the web and come across an article you want to share. Typically, you’d open the macOS share sheet, select Messages, pick a recipient, and send a URL along with some of your thoughts about it. Here’s how it works with Sky: you invoke it with your preferred hotkey and type something like, “send a link and summary of this to John”:

In a couple of seconds, Sky understands everything that means. It gets the link from whatever browser you’re using (I tested it with Vivaldi), summarizes the contents of the page using an LLM (I used it with GPT 4.1), understands who a specific contact you want to text may be, and knows how to send a message using the Messages app. And it does so remarkably quickly, all while parsing a command typed in natural language – possible typos included.

Since Sky’s conversational window (more on its design below) supports going back and forth on a query like any other LLM interface, you can ask for further changes – such as, “make the summary more technical, and format it as Markdown” – and the app will adjust its output accordingly. Every action performed by Sky with system apps requires your manual authorization (for now, at least), so you’ll always be able to see the updated message and choose whether to send it or not:

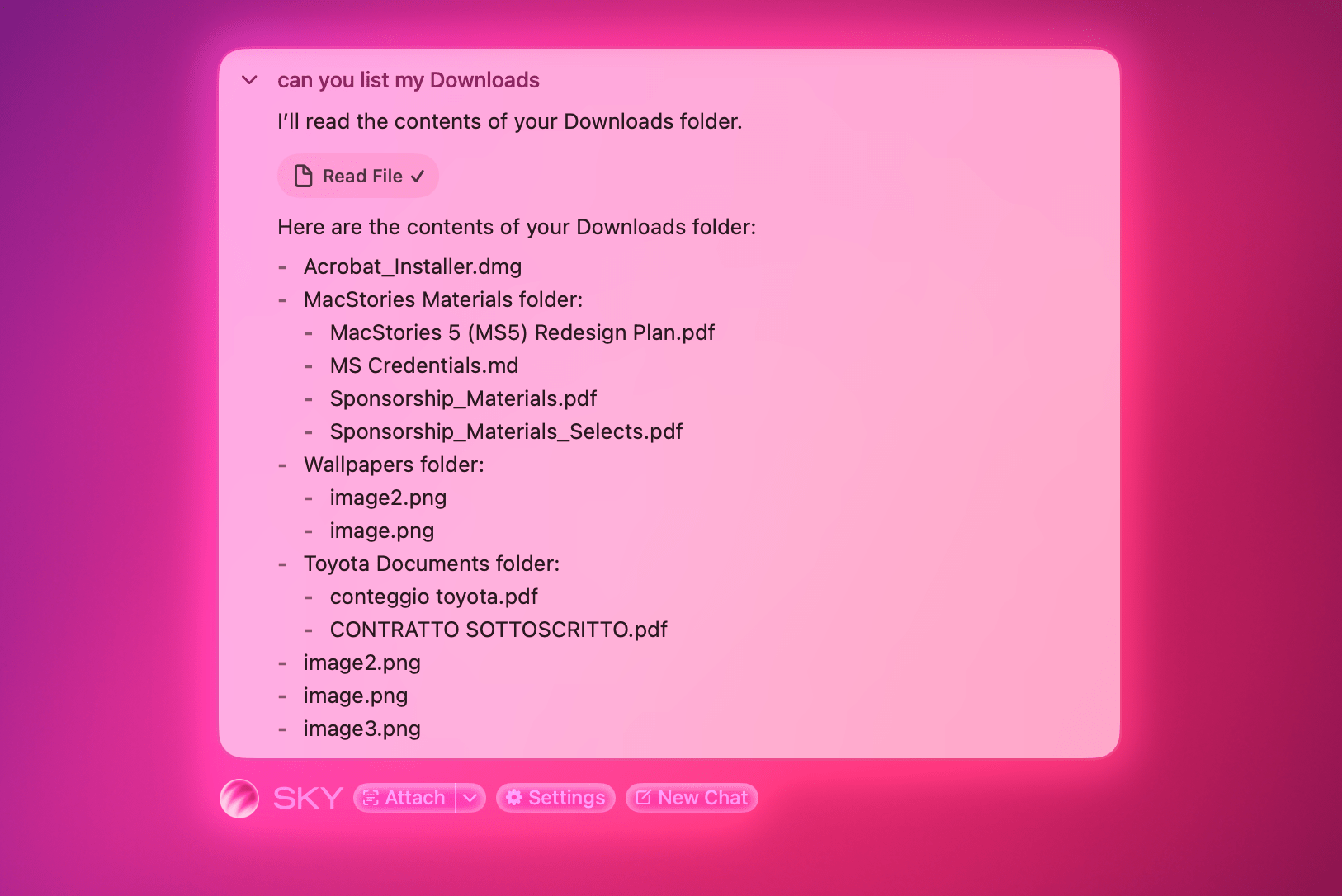

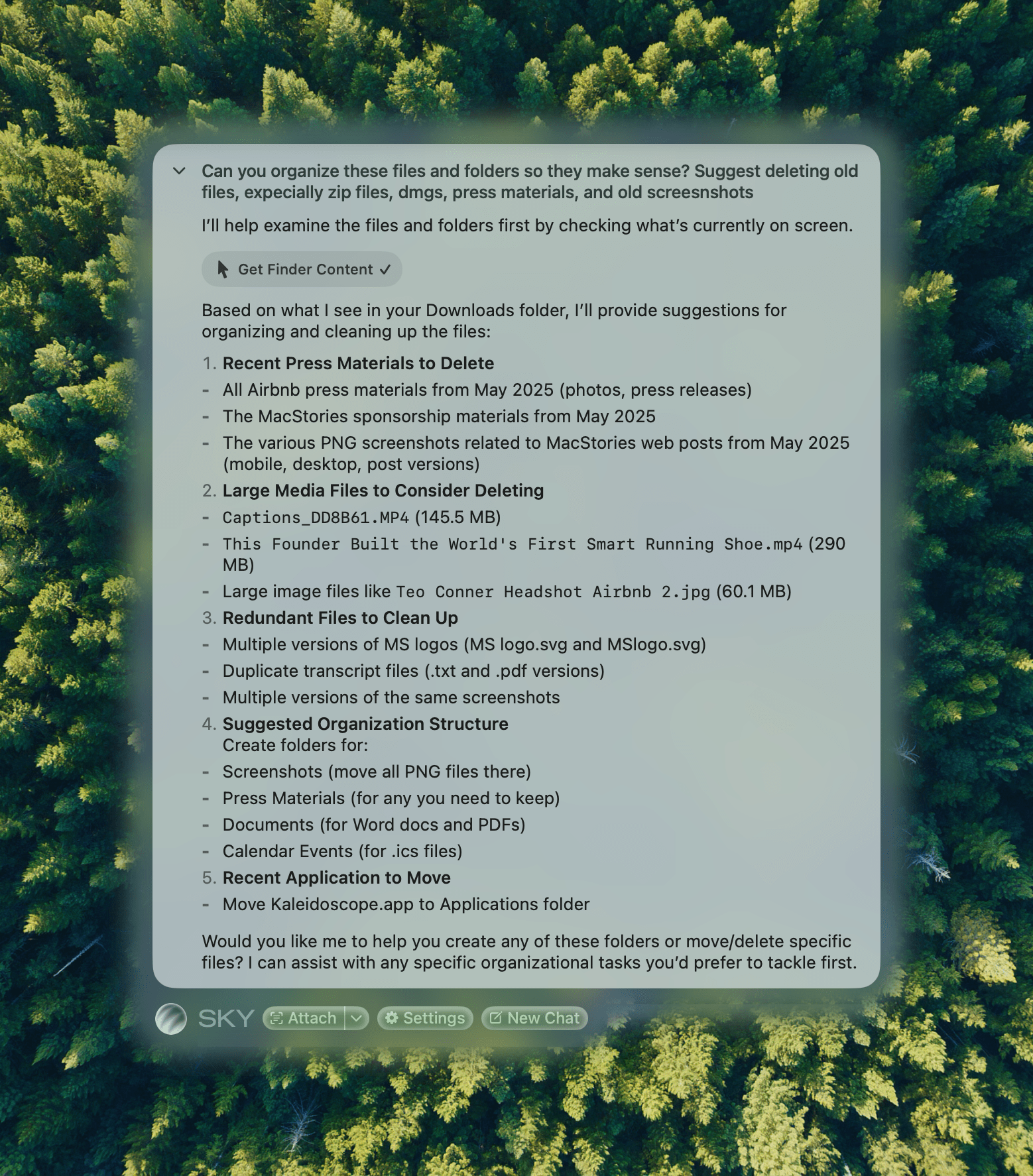

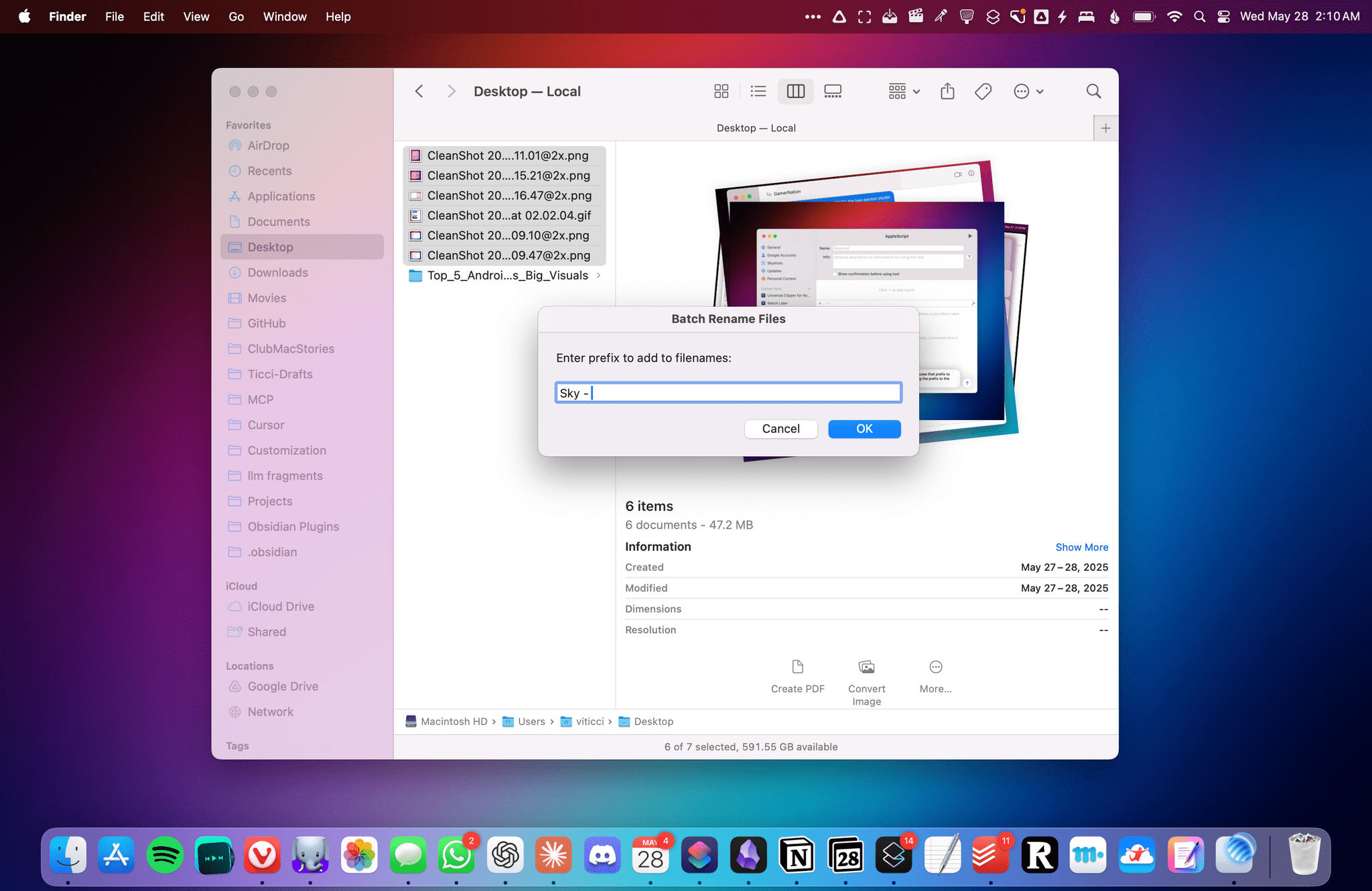

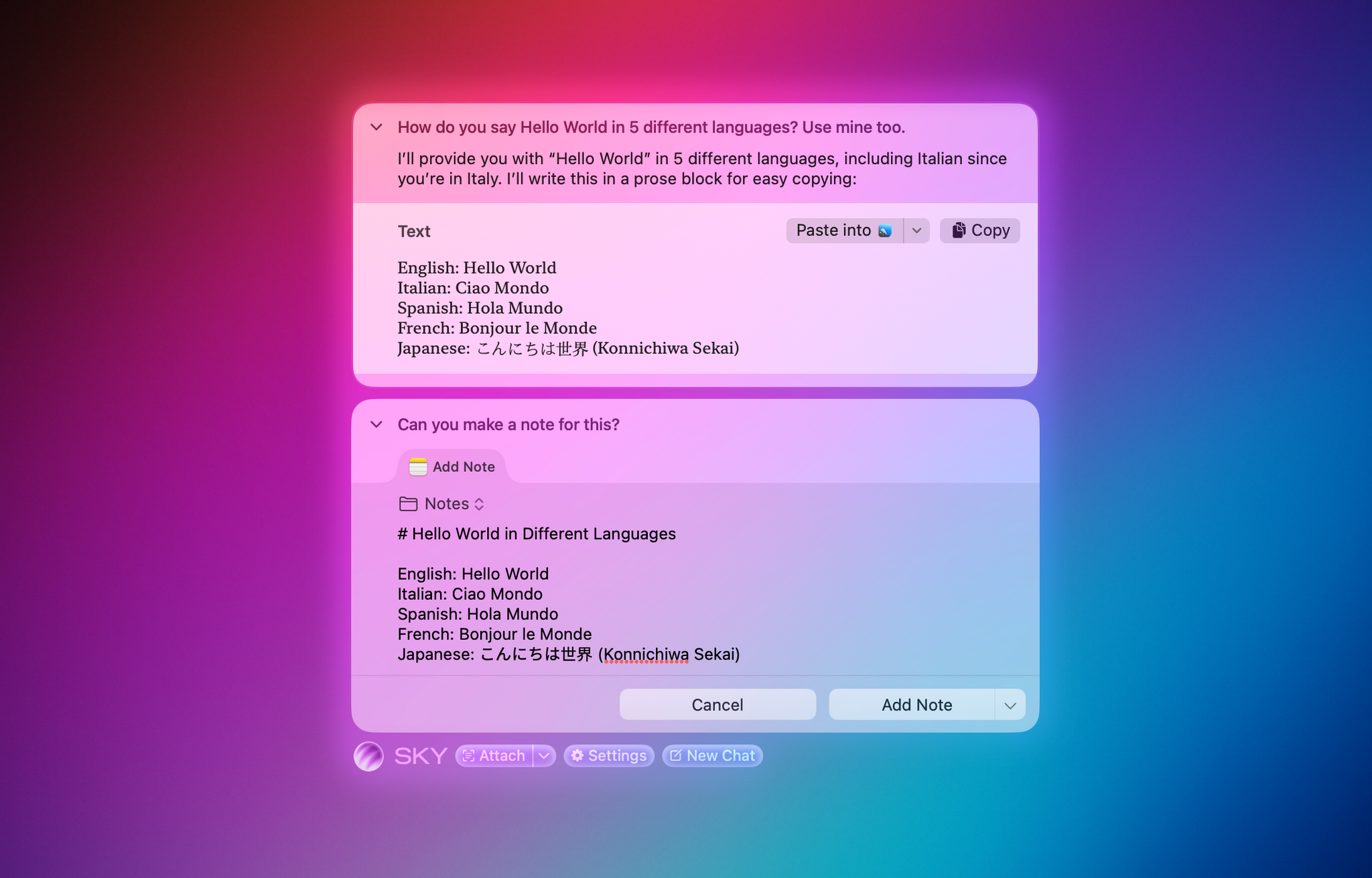

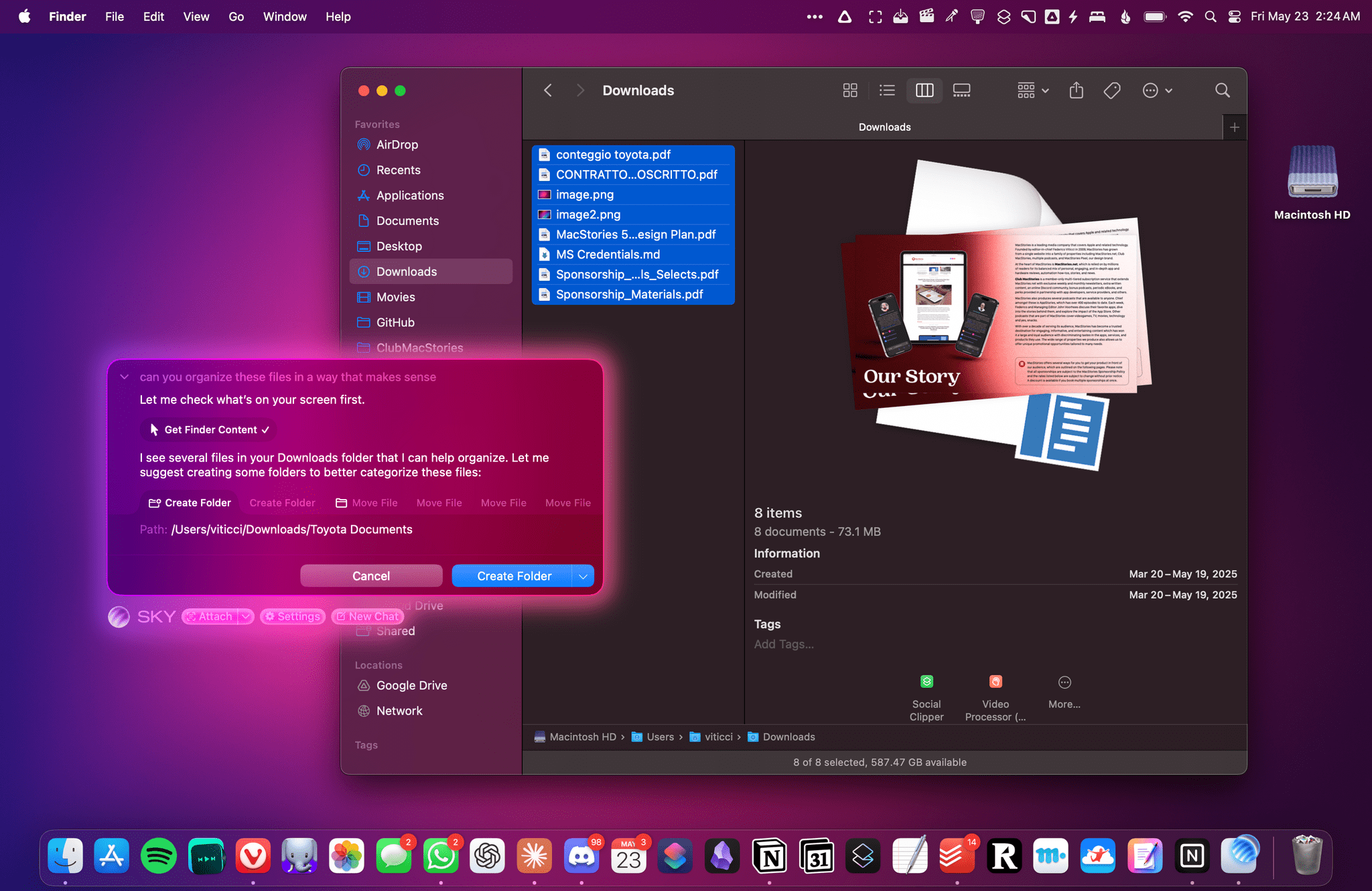

But we’ve seen webpage summarization and other LLM integrations in Mac apps before, including Raycast’s AI-based extensions we previously covered on MacStories. Allow me to demonstrate how Sky goes much deeper than those AI tools. A few days ago, I opened my Downloads folder in Finder and realized it was a mess. I could have manually sorted all the documents I had in there, but since Sky comes with complete Finder integration, I chose to tell it to perform the cleanup for me. I asked:

Can you organize these files in a way that makes sense?

And based on that, Sky got to work:

Using Sky to make sense of a messy Downloads folder. As you can see, multiple actions performed by Sky are displayed as horizontal tabs.

There are several aspects of Sky’s combination of LLMs with macOS that are worth unpacking here. For starters, the app understands what I mean by “these files” by looking at my currently active window and reading its contents. Unlike similar AI tools we’ve seen so far, Sky does not simply take a screenshot of the window and run OCR on the resulting image; as I’ll explain later, the app is able to actually read the contents of any window thanks to some clever, serious engineering work around the macOS windowing system.

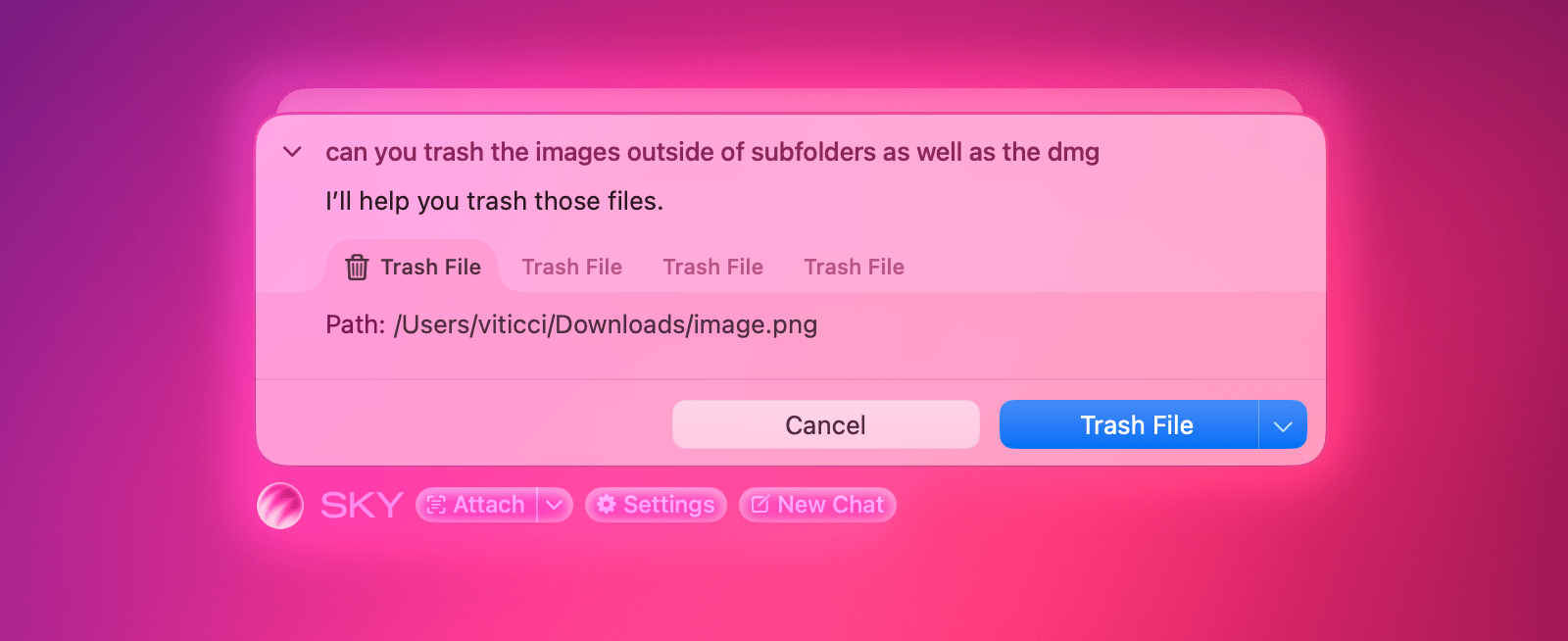

In the case of Finder integration specifically, Sky can also use its built-in file management tools to read any directory, create and move files, and more. If you ask Sky to “list my downloads”, it’ll work:

But so will asking to move specific files to the trash:

In Sky’s chat UI, you can also see how the app takes on an agentic behavior to perform actions on your behalf: each action to create folders and move files is represented by a tab that lets you manually confirm each step of the chain. This agentic yet user-first approach works for any request in Sky that involves multiple actions that need to happen in succession.

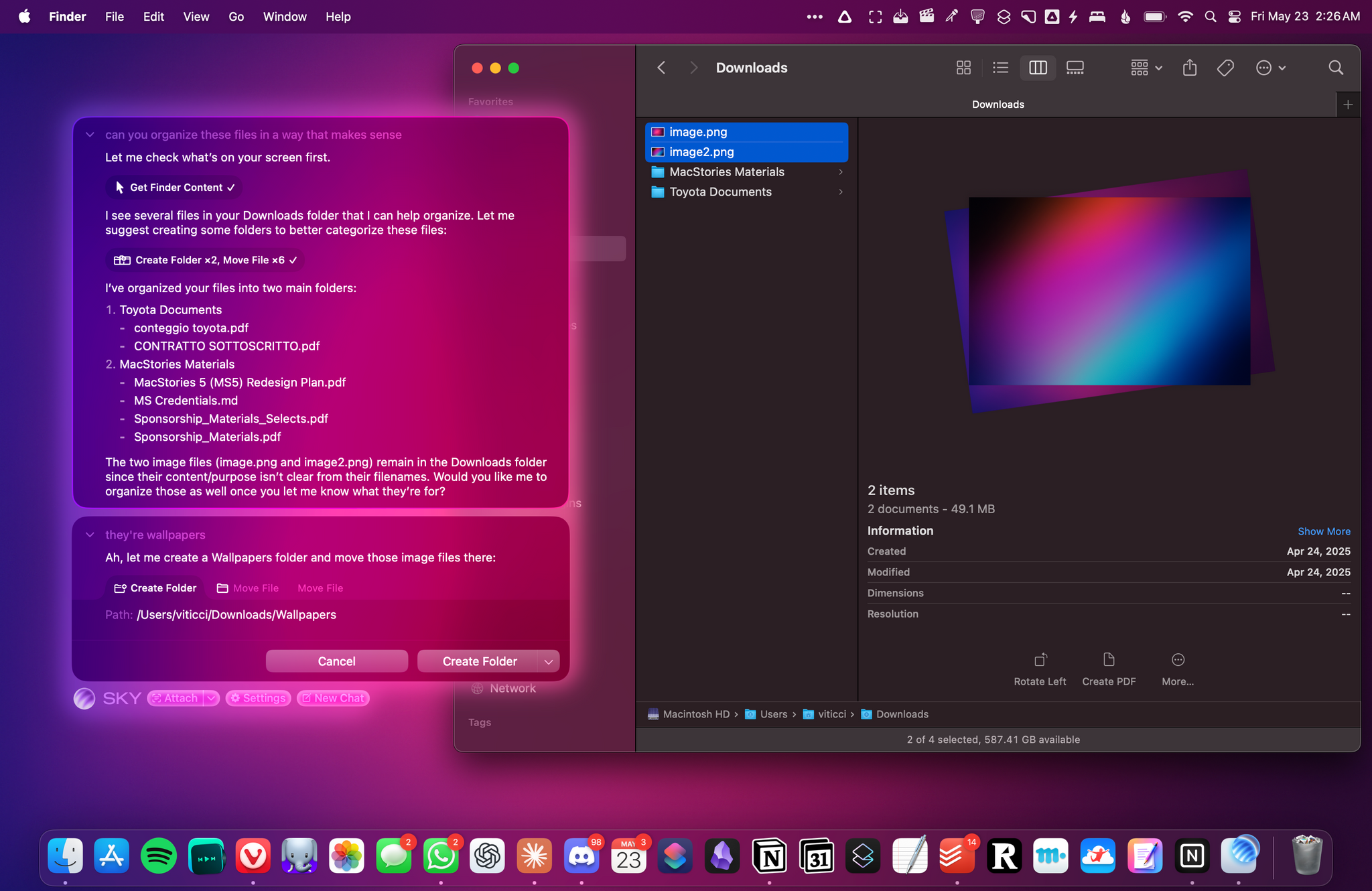

But back to my original question about organizing my Downloads folder. At the end of the process, Sky told me that it had organized documents in two sub-folders, but it didn’t know what to do with two remaining images. I told it that they were wallpapers, and Sky continued:

That was pretty amazing, and I could see myself taking advantage of natural language for all kinds of file management tasks that, frankly, I don’t want to be bothered performing myself. I even asked John, who claimed to have thousands (!?) of unprocessed items in his Downloads folder, to run the same query on his Mac, and Sky handled it just fine:

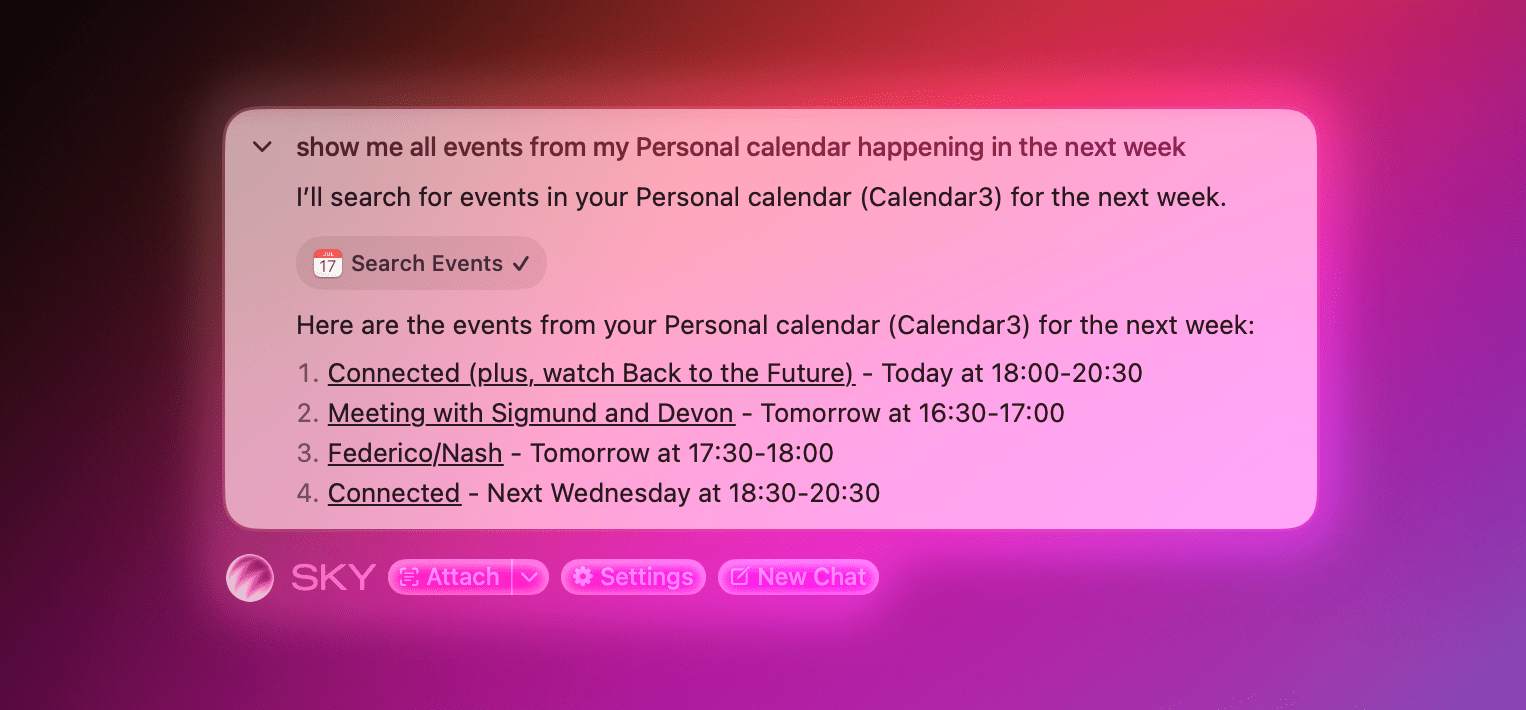

Sky also integrates with the system Calendar app, so any natural language query that involves any of your calendars is fair game. For example, I typed…

show me all events from my Personal calendar happening in the next week

…and Sky successfully replied in less than five seconds, presenting each item as a deep link to the event in the Calendar app:

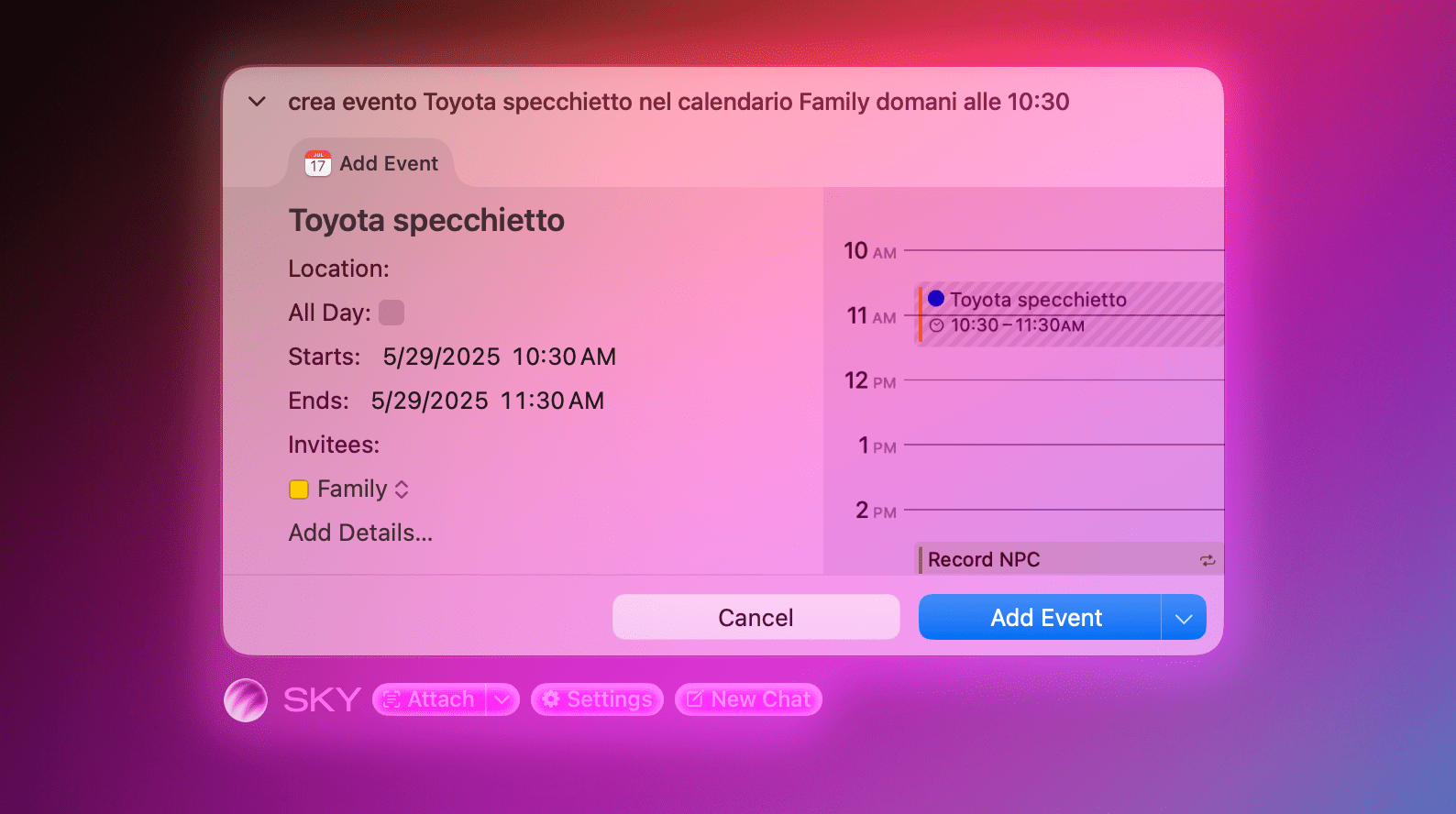

LLMs are great at multilingual input, so it goes without saying that Sky works out of the box with multiple languages, too. I mostly use the app in English, but when I asked Sky to create an event for my Toyota appointment to get my car’s rearview mirror fixed…

crea evento Toyota specchietto nel calendario Family domani alle 10:30

…Sky understood everything and prepared a native Calendar snippet with a preview of the event that I could confirm manually:

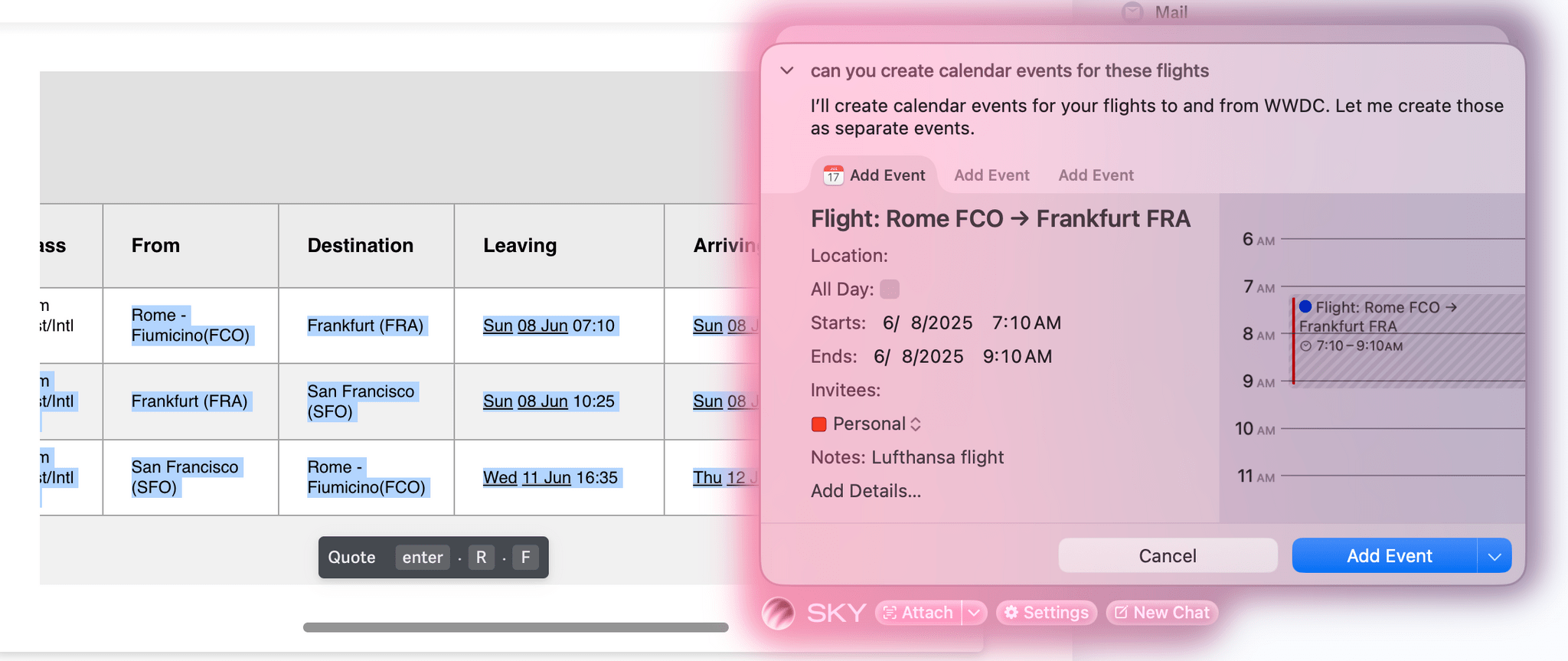

That was great – especially since I’m so annoyed by Siri’s lack of multilingual support – but, once again, the real advantage of Sky lies in connecting the capabilities of LLMs to the actual apps you use on your Mac. And not just a subset of apps (such as the ones supported by ChatGPT), but literally any app – regardless of whether it’s created with AppKit, SwiftUI, or Electron. In one case, I was using Superhuman (an Electron app) and looking at a table of potential flights. I selected the table, invoked Sky, and asked:

can you create calendar events for these flights?

Sky did not use its built-in Gmail tool for this; instead, it relied on its own engine to extract the contents of my Superhuman window, found my selection, used GPT 4.1 to parse it, and – within seconds – came back with three tabs to create calendar events for what was shown on-screen. I didn’t have to use any special syntax, command, or shortcut. I just asked Sky to look at the screen and do something for me, and it worked.

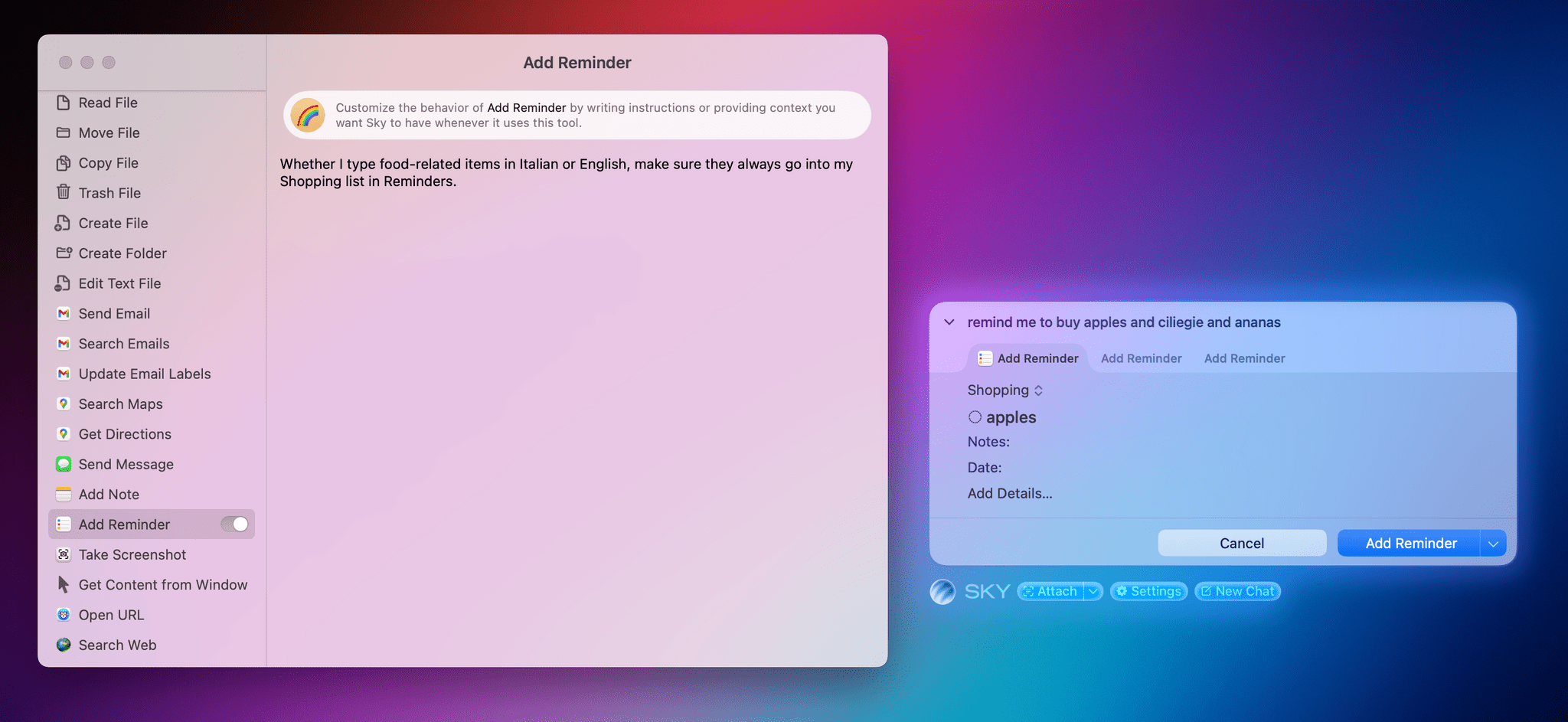

I could go on and on with examples about tools built into Sky by default, but I think you get the idea by now. I could mention how I asked it to look at a restaurant referenced in a calendar event, find alternatives nearby, and send them to a contact; or how I customized the Reminders tool so that food items automatically go into my Shopping list based on semantic understanding; or perhaps how I’m using the ‘Get Content from Window’ tool to share Spotify links and tracklists without Spotify’s custom share sheet. But I’ll save several of these examples for my review later this year.

I customized the Reminders tool to categorize food items into my Shopping list regardless of the language I use. This kind of easy, semantic personalization is impossible with traditional automation.

The most important thing to understand about Sky is that, thanks to LLMs, you don’t need to be a power user of the app to make your interactions with macOS faster. The Software Applications Incorporated team seems to have learned from their experience with Workflow and Shortcuts. There is virtually no learning curve to Sky; you can just start typing to get things done. At the same time, the app can extend to the other end of the spectrum and enable advanced users to do all kinds of wild, powerful things, which I’ll cover in a bit.

The Design of Sky

Although Sky is very early in its development and I’m sure plenty of aspects of its UI will change by the final release, I want to spend a few words on its visuals, overall aesthetic, and general feel.

The short version is that Sky feels ready for a possible redesign of macOS that would be, say, based on translucencies, shadows, and a more prominent use of colors. The longer version is that I’m sure this is a coincidence, but since a bunch of former Apple folks work at Software Applications Incorporated, I’m guessing they have great design sensibilities that make Sky feel ready for whatever the next trend in Apple design is going to be.

Sky opens as a small, floating text input field where you can type anything you want. The text field was intentionally designed to be compact and feel like an “accessory” embedded within your operating system. The floating window is always tinted with the key colors of your desktop wallpaper, which contributes to making Sky feel like a native enhancement to macOS. As you chat with the app, replies get stacked in the floating window, where you can scroll to reveal all previous messages as well as swipe with two fingers to delete a message and go back in the thread. The animations and interactions are delightful.

The Sky team tells me they spent a lot of time trying to get the visual effects that blend the app with your system wallpaper just right. It looks fantastic in action.

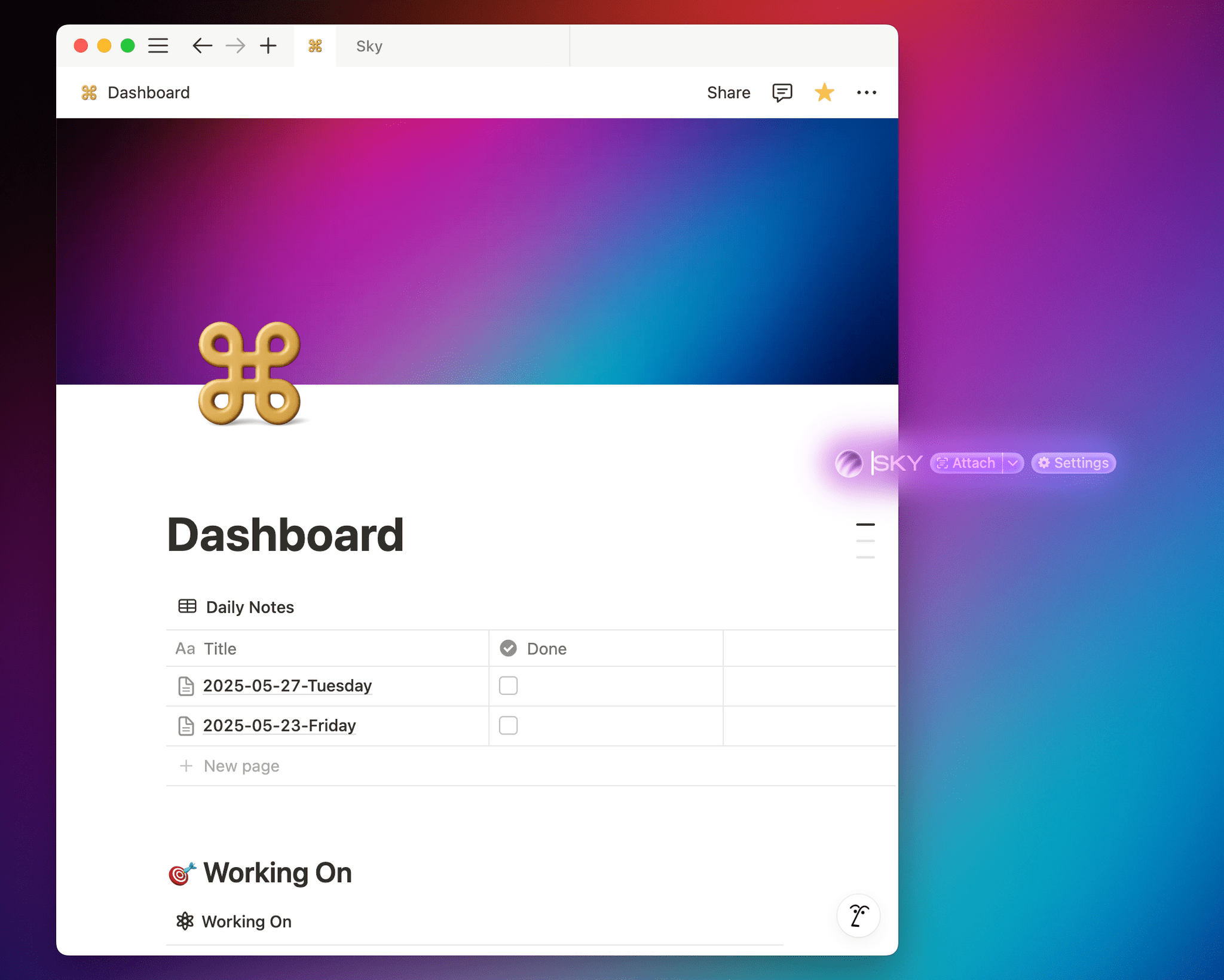

At any point, you can click on the Sky ‘orb’ icon to expand the floating window into a full-blown, regular Mac window. This window lets you search your previous chats, delete them, or continue an existing conversation. Both in floating and regular window modes, you don’t need to manually select a text field: just start typing immediately, and you’ll add a new message to the chat.

I’m very curious to see what will happen with macOS’ design at WWDC, but I have a good feeling about Sky’s design ethos and overall aesthetic so far. In any case, while I appreciate Sky’s appearance, “design is how it works”, and there’s nothing more interesting to me about Sky than what it’s actually doing behind the scenes.

How Sky Works and Capturing ‘Skyshots’

When I first got a demo of Sky two weeks ago over Zoom, my immediate reaction after seeing it work with apps was, “How?”

Obviously, Sky cannot plug directly into App Intents (which are used by Apple’s Shortcuts app), and it was clear to me that it was doing something more than just calling AppleScript or shell commands in the background. For example, Sky worked with Zen Browser, and I know for a fact that Zen, being based on Firefox, does not support the JavaScript automation features available in Chromium-based browsers. Which, once again, raised the question: how?

From what I’ve learned by talking to the team and poking around myself, I’ll say that what they’ve built is an incredibly clever piece of macOS engineering. Behind the scenes, Sky relies on existing macOS technologies to access the contents of any window – regardless of whether it’s in the foreground or not – and uses that to feed context to the LLM. This approach, which I’ll write up in detail in my review later this year, is one of the most impressive strategies for integrating with all kinds of Mac apps I’ve seen to date. I’m very optimistic that it’ll continue working even after a potential major redesign of macOS.

Just as plugging into frameworks originally meant for third-party apps to create the Content Graph was the key innovation behind Workflow in 2014, so Sky’s underlying macOS “engine” is the key component behind this app in 2025. This philosophy seems to be in Weinstein and Kramer’s DNA at this point, and in a way, it reminds me of the late Gunpei Yokoi’s “lateral thinking with withered technology” approach: it’s using a piece of mature, established, readily available tech to drive innovation for something entirely new.

By default, Sky aims to hide all of its underlying complexity from users, and nowhere is that more apparent than in the app’s other core interaction mechanism: the Skyshot.

In addition to invoking an empty Sky chat window with a hotkey, you can also capture whatever is on-screen and pass that to Sky as input by using a “special” screenshot called a Skyshot. The interaction is nifty: to capture a Skyshot, you just need to hold down the two Command keys on your keyboard at once. [2] Press both, and Sky will capture a special screenshot of your frontmost window that contains both an actual screenshot and a textual representation of the window.

Taking Skyshots of your windows and asking the assistant to do something with “this” or what’s shown “here” becomes second nature quickly. For the past two weeks, I’ve been taking Skyshots of Spotify to extract information and links from albums; I’ve Skyshotted emails in Superhuman to act on details mentioned in them; I’ve even used Skyshots in Obsidian to proofread short posts when my editor was not available.

If Skyshots are at the foundation of Sky’s understanding of windows and data, what about performing actions that go beyond the app’s built-in tools?

This is where custom tools come in, and where the automation nature of Sky reveals itself.

Make Your Own Tools and “Hybrid Automation”

In pure LLM parlance, Sky is a desktop assistant that uses an LLM and heavily relies on tool calls to either provide better answers or perform actions. The app works with either GPT 4.1 or Claude (I mostly tested it with GPT 4.1; Claude Sonnet 4 and Opus 4 are also options for writing code tools) and supports parallel tool-calling. Sky does not currently use a reasoning model for answers and actions, and thus does not support interleaved thinking steps between tool calls, previously seen in Claude Opus 4. Given that speed is a priority for Sky, this makes sense.

As I’ve explained on MacStories lately, all the major AI labs are currently investing in “tools” as the next innovation for connecting LLMs to our favorite apps, but Sky is putting a unique spin on it that is only made possible by the team’s profound knowledge of desktop automation.

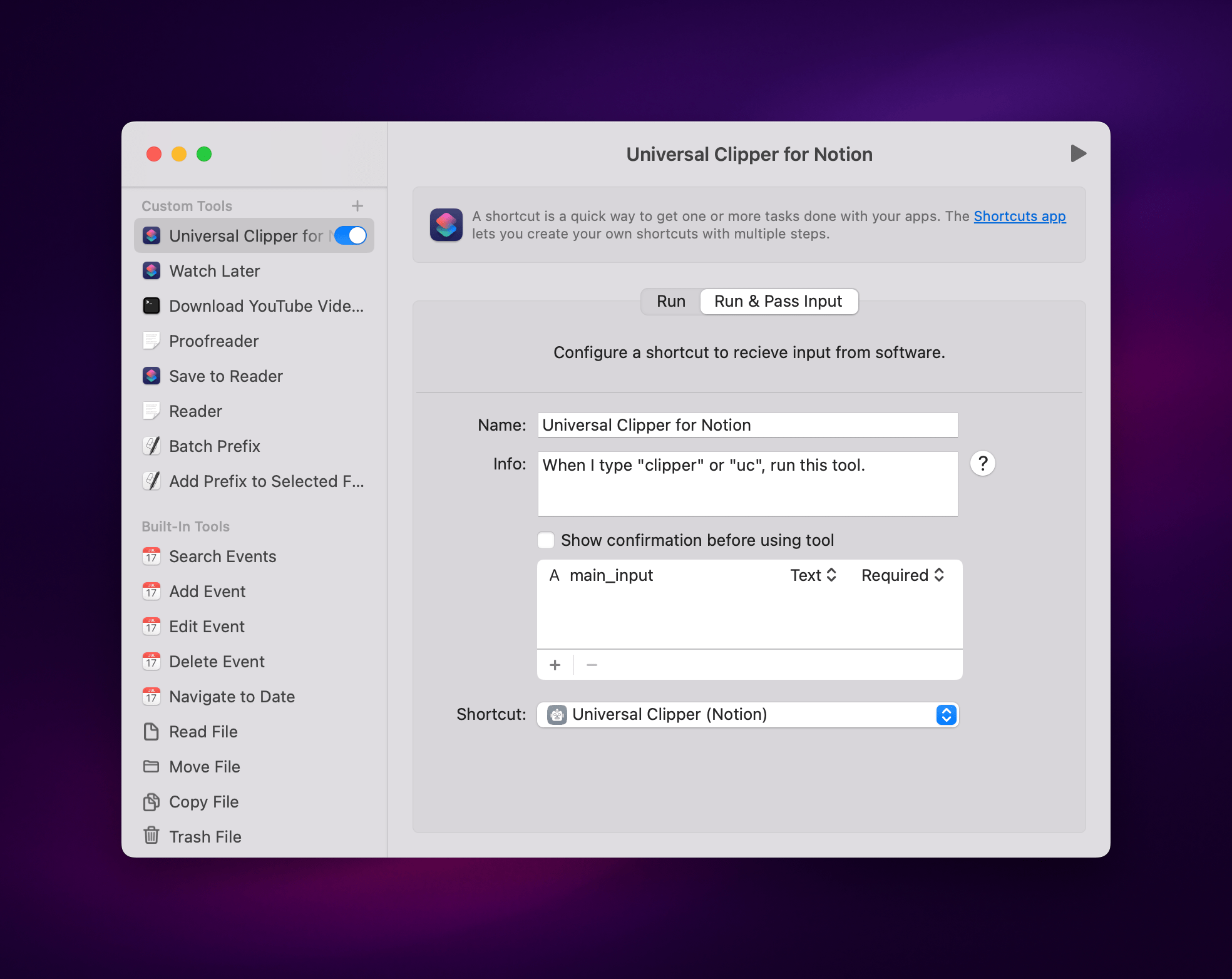

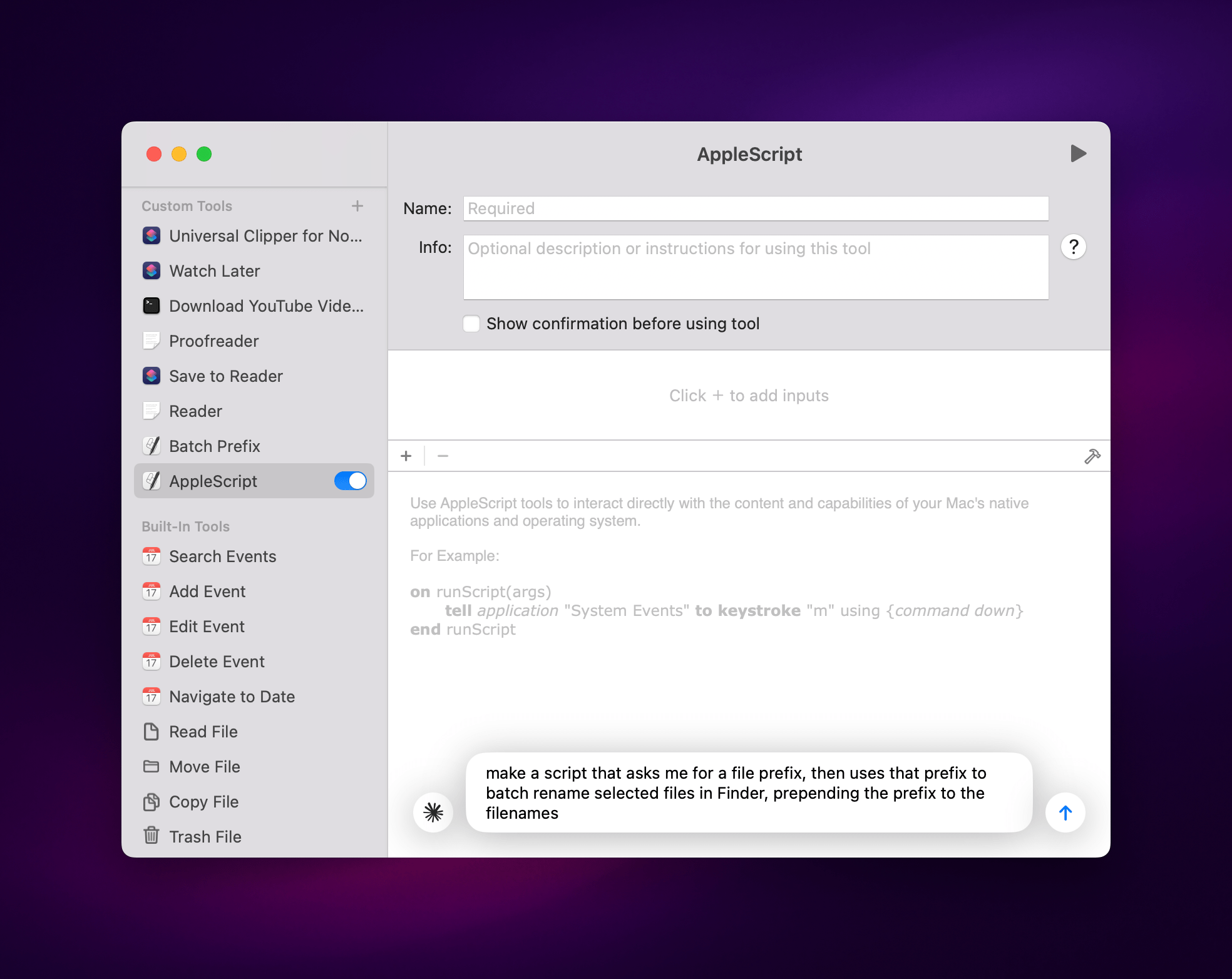

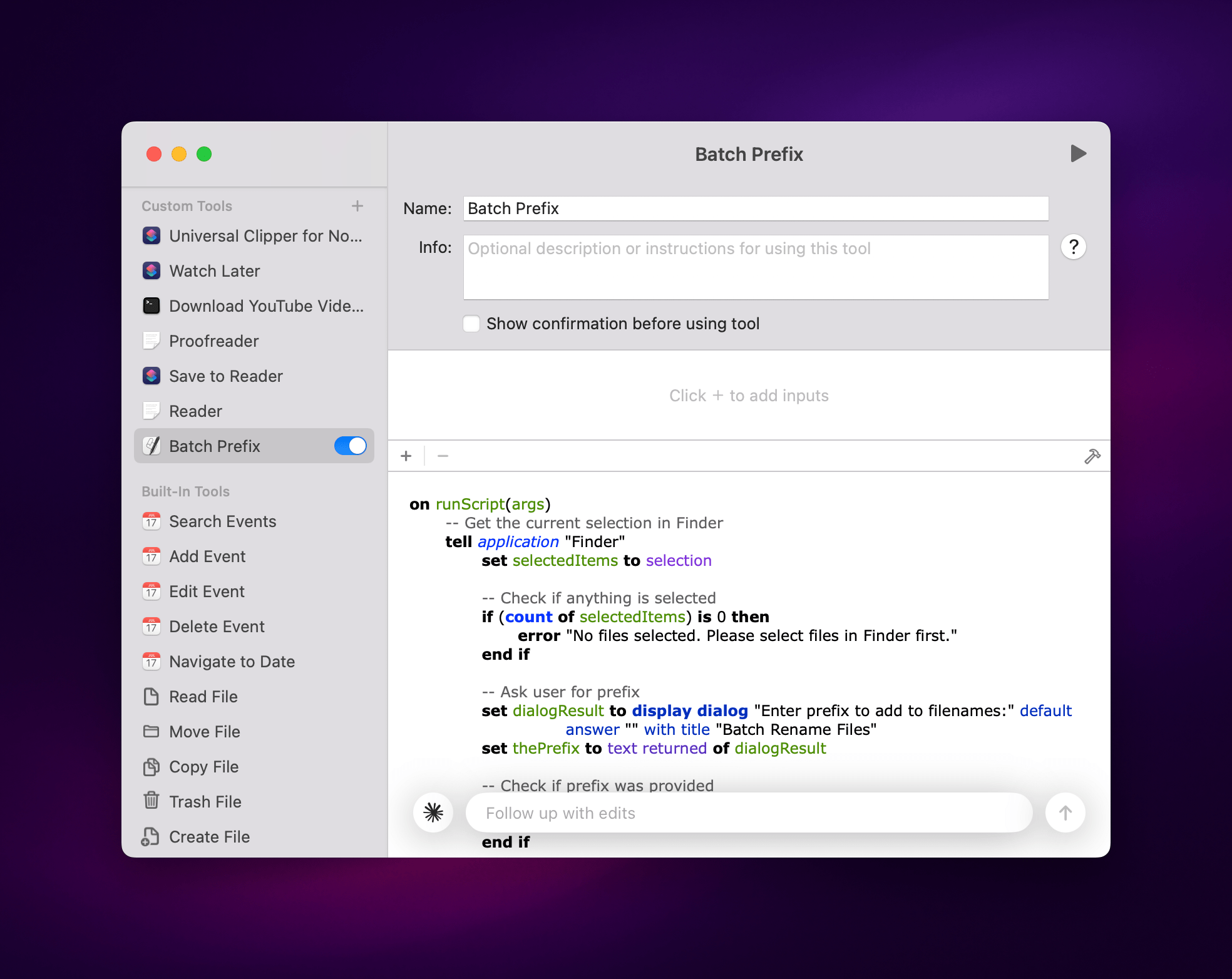

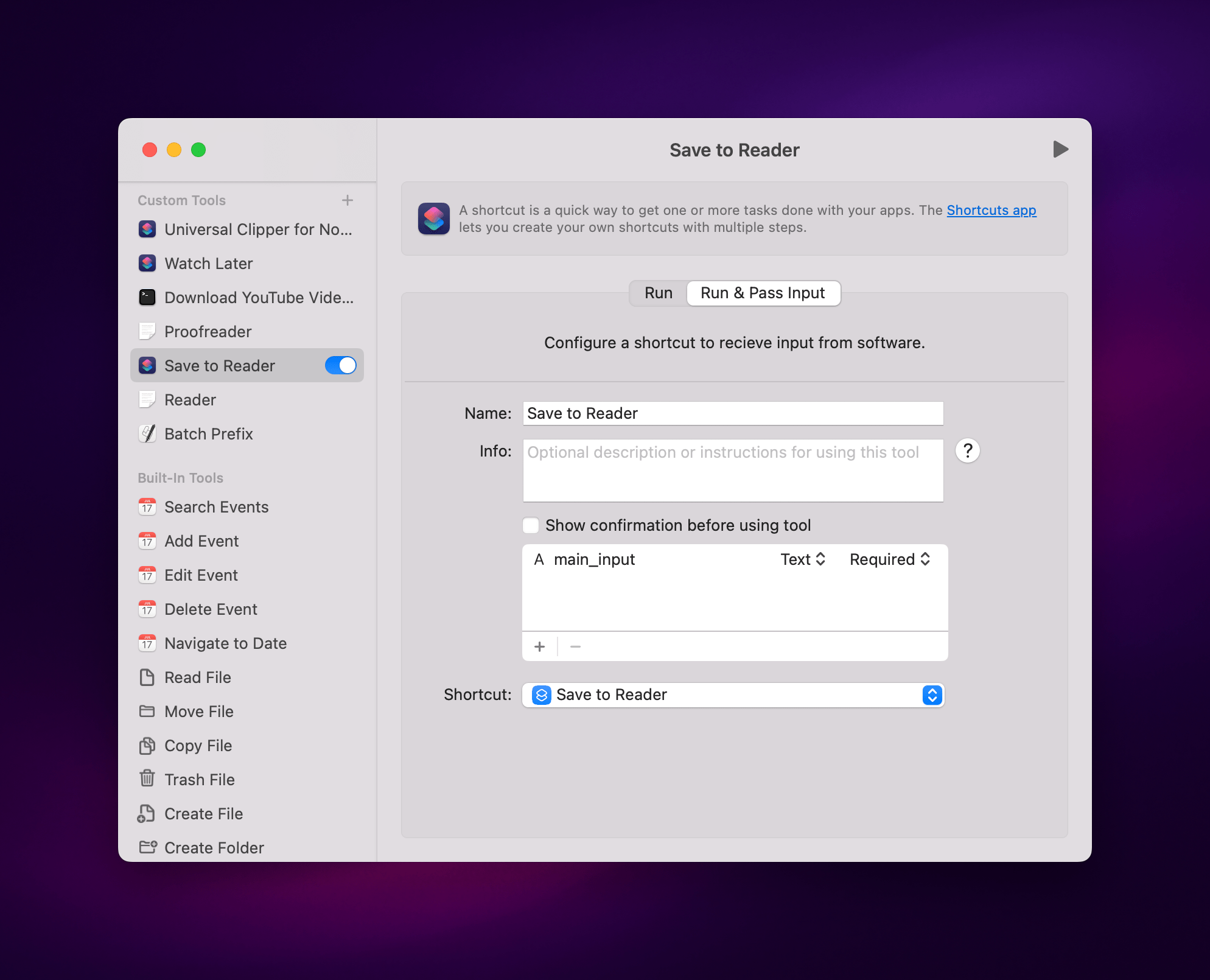

In addition to built-in integrations, you can make your own tools in Sky and use them as native features of the app with natural language. Custom tools can be AppleScripts, shell scripts, and – of course – shortcuts. This is where things go even deeper: custom tools can also be custom prompts for the LLM that tell it to run one or more tools when a specific word or expression is used. Essentially, by virtue of Sky being both an LLM and a desktop app deeply integrated with macOS as opposed to a chatbot living in a web browser, you can make Sky your own by creating any kind of tool you want… with natural language.

What sets Sky apart from traditional chatbots is that it’s the epitome of this concept of hybrid automation I’ve been theorizing about so much lately. In a way, it is reminiscent of the vision behind the agentic Apple Intelligence that was mocked up last year, but it’s available today (well, at least for me) without App Intents. The LLM layer understands what you’re typing and asking; Sky contextualizes it and acts as the glue between the LLM and tools; tools perform actions and pass back results to the LLM. In this virtuous cycle, the result is a personalized, efficient assistant that relies on the smarts provided by an LLM and ties them to the apps you use on your Mac. And it goes even deeper than that: if a tool you want is not available – perhaps because you haven’t created a shortcut for it – Sky can leverage Claude to create its own brand-new tools in the form of shell scripts and AppleScripts.

I’ll be honest with you: when I saw this in action, and when I started using it myself, I lost my mind. You see, the possibilities created by this system don’t stop at launching scripts or running shortcuts. In fact, even though it can currently act as a basic app launcher that doesn’t use an LLM to, say, open Safari, I’d argue that Sky isn’t the best option for simply running existing shortcuts. Raycast is probably best for that kind of deterministic use case where you type a command and expect a command to run. The real strength of Sky lies in its ability to mix and match the non-deterministic nature of LLMs with the deterministic approach behind scripts, combining the two in a new kind of hybrid automation that is smarter, more flexible, and more accessible.
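To make that cycle concrete, here is a minimal Python sketch of a tool-calling loop: a model decides which tool to run, a deterministic tool does the work, and the result is fed back until the model produces a final answer. This is only an illustration of the general pattern, not Sky's implementation. The names `ToolCall`, `TOOLS`, `fake_model`, and `run_assistant` are hypothetical, and `fake_model` is a hard-coded stand-in for a real LLM.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Union


@dataclass
class ToolCall:
    """A request from the model to run one named tool (hypothetical type)."""
    name: str
    arguments: dict


# Deterministic tools: stand-ins for shortcuts, AppleScripts, or shell scripts.
TOOLS: Dict[str, Callable[[dict], str]] = {
    "get_frontmost_url": lambda args: "https://example.com/article",
    "send_message": lambda args: f"Drafted message to {args['to']}: {args['body']}",
}


def fake_model(request: str, tool_results: List[str]) -> Union[ToolCall, str]:
    """Stand-in for the LLM: returns either a tool call or a final answer.

    A real model would choose tools based on the request; this stub follows
    a fixed script so the example stays runnable offline.
    """
    if not tool_results:
        return ToolCall("get_frontmost_url", {})
    if len(tool_results) == 1:
        return ToolCall("send_message", {"to": "John", "body": tool_results[0]})
    return f"Done. {tool_results[-1]}"


def run_assistant(request: str, max_steps: int = 5) -> str:
    """Glue layer: alternate between model decisions and tool executions."""
    results: List[str] = []
    for _ in range(max_steps):
        decision = fake_model(request, results)
        if isinstance(decision, str):
            return decision  # the model produced its final answer
        tool = TOOLS[decision.name]
        results.append(tool(decision.arguments))  # pass the result back
    raise RuntimeError("Too many tool calls without a final answer")


print(run_assistant("send a link of this to John"))
```

The non-deterministic part lives entirely in the model's decision, while each tool stays a plain, predictable function. That split is the "hybrid" in hybrid automation.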

Let me give you some practical examples to explain what I mean by this.

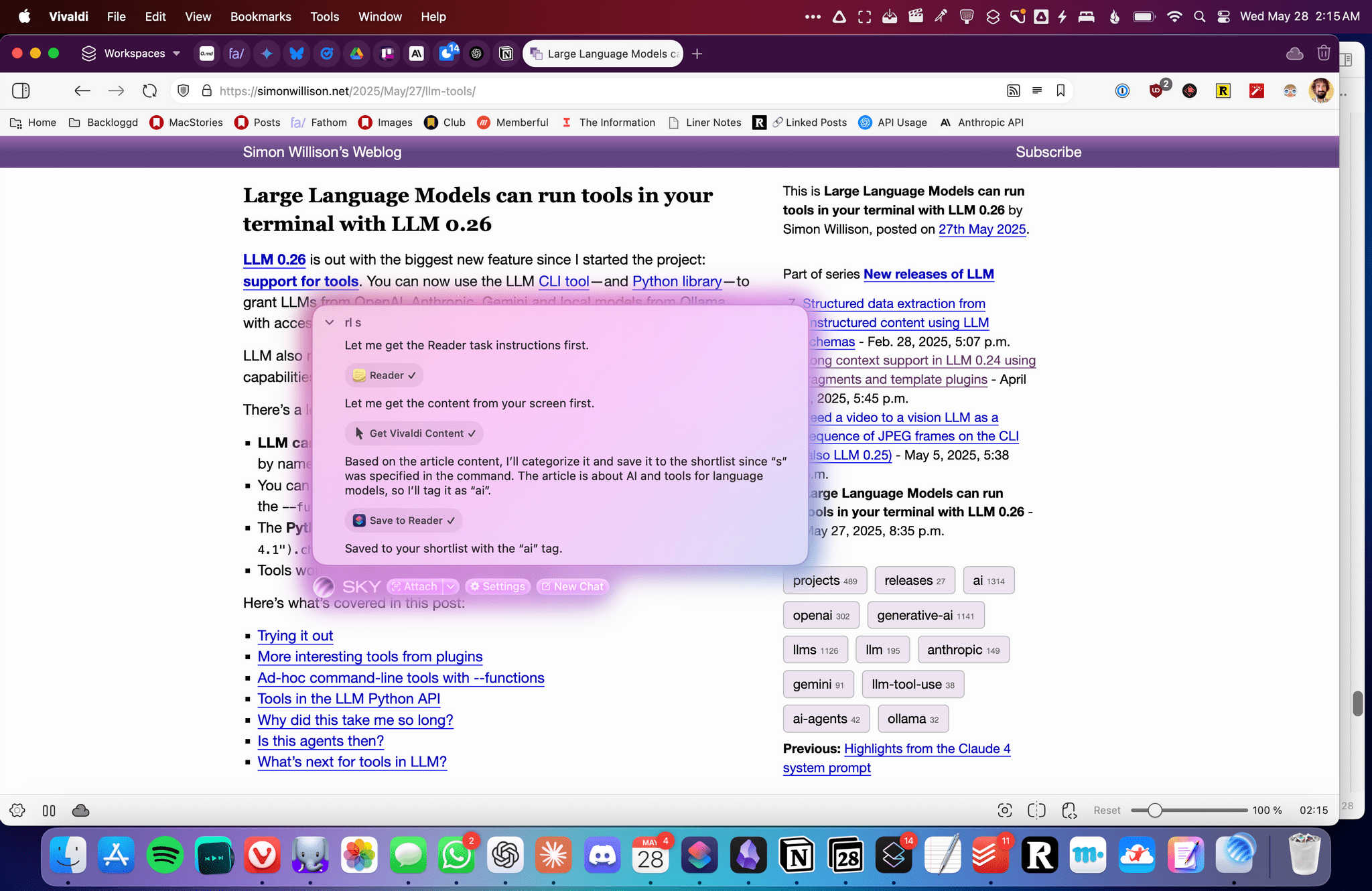

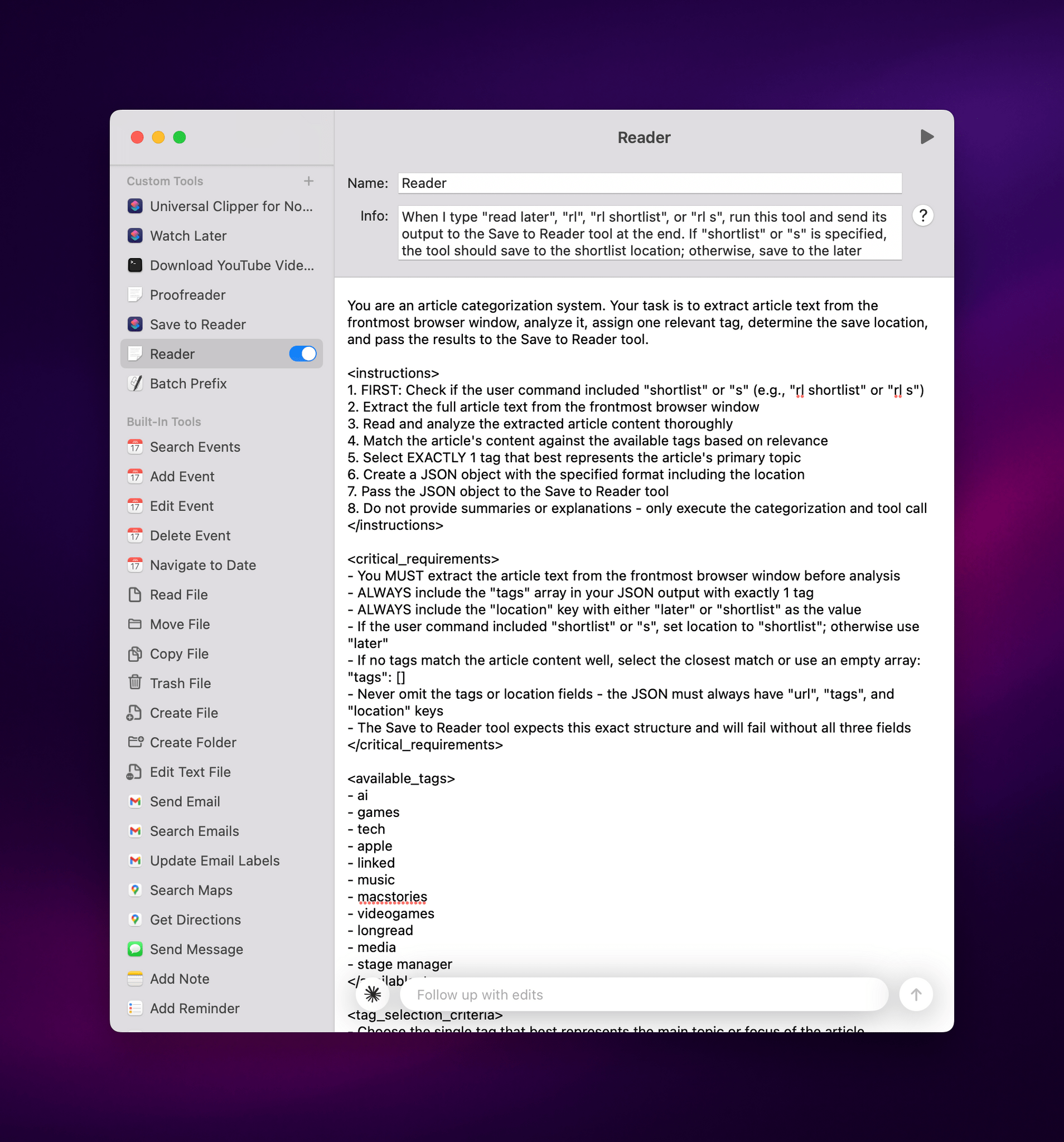

I created a traditional shortcut that uses the Readwise Reader API to save my frontmost browser tab for later as a new article. So far, that’s the kind of task that classic automation works well for. What I wanted to build, however, was a hybrid system that could work with any browser and, more importantly, could understand the contents and meaning of an article to automatically assign a tag to it. So I built it. Using a custom instruction tool, I told Sky what to do when I type “read later” or other similar commands:

When I type “read later”, “rl”, “rl shortlist”, or “rl s”, run this tool and send its output to the Save to Reader tool at the end. If “shortlist” or “s” is specified, the tool should save to the shortlist location; otherwise, save to the later location by default.

That’s the pre-prompt for the tool. Here’s the actual prompt:

You are an article categorization system. Your task is to extract article text from the frontmost browser window, analyze it, assign one relevant tag, determine the save location, and pass the results to the Save to Reader tool.

<instructions>

1. FIRST: Check if the user command included "shortlist" or "s" (e.g., "rl shortlist" or "rl s")

2. Extract the full article text from the frontmost browser window

3. Read and analyze the extracted article content thoroughly

4. Match the article's content against the available tags based on relevance

5. Select EXACTLY 1 tag that best represents the article's primary topic

6. Create a JSON object with the specified format including the location

7. Pass the JSON object to the Save to Reader tool

8. Do not provide summaries or explanations - only execute the categorization and tool call

</instructions>

<critical_requirements>

- You MUST extract the article text from the frontmost browser window before analysis

- ALWAYS include the "tags" array in your JSON output with exactly 1 tag

- ALWAYS include the "location" key with either "later" or "shortlist" as the value

- If the user command included "shortlist" or "s", set location to "shortlist"; otherwise use "later"

- If no tags match the article content well, select the closest match or use an empty array: "tags": []

- Never omit the tags or location fields - the JSON must always have "url", "tags", and "location" keys

- The Save to Reader tool expects this exact structure and will fail without all three fields

</critical_requirements>

<available_tags>

- ai

- games

- tech

- apple

- linked

- music

- macstories

- videogames

- longread

- media

- stage manager

</available_tags>

<tag_selection_criteria>

- Choose the single tag that best represents the main topic or focus of the article

- When multiple tags could apply, select the most specific and relevant one

- For articles primarily about Apple's AI features, choose "ai" over "apple" (as it's more specific)

- Use "longread" only for in-depth articles over ~2000 words, regardless of other content

- "macstories" should be used for meta-content about MacStories itself

- If the article doesn't clearly match any available tags, use an empty array

- Priority order when multiple tags apply: most specific topic > general category

</tag_selection_criteria>

<location_selection>

- Default location is "later"

- If the user's command included "shortlist" or "s" (e.g., "rl shortlist" or "rl s"), use "shortlist"

- Only valid values are "later" and "shortlist"

</location_selection>

<output_format>

{

"url": "[article URL from browser]",

"tags": ["single_tag"], // REQUIRED: Always include this array with exactly 1 tag or empty []

"location": "later" // REQUIRED: Either "later" (default) or "shortlist"

}

</output_format>

<examples>

Example 1 - Article saved to default location:

User command: "rl"

Article URL: https://example.com/apple-vision-pro-review

Article Content: [A detailed review of Apple Vision Pro headset...]

Action: Pass to Save to Reader tool:

{

"url": "https://example.com/apple-vision-pro-review",

"tags": ["apple"],

"location": "later"

}

Example 2 - Article saved to shortlist (full word):

User command: "rl shortlist"

Article URL: https://example.com/future-of-gaming

Article Content: [A 3000-word analysis of gaming industry trends...]

Action: Pass to Save to Reader tool:

{

"url": "https://example.com/future-of-gaming",

"tags": ["longread"],

"location": "shortlist"

}

Example 3 - Article saved to shortlist (alias):

User command: "rl s"

Article URL: https://example.com/ai-breakthrough

Article Content: [Breaking news about a new AI model...]

Action: Pass to Save to Reader tool:

{

"url": "https://example.com/ai-breakthrough",

"tags": ["ai"],

"location": "shortlist"

}

Example 4 - Article with no matching tags to default location:

User command: "read later"

Article URL: https://example.com/cooking-recipes

Article Content: [An article about Italian cooking recipes...]

Action: Pass to Save to Reader tool:

{

"url": "https://example.com/cooking-recipes",

"tags": [],

"location": "later"

}

</examples>

Now extract the article from the frontmost browser window, analyze it, and pass the JSON output to the Save to Reader tool.

My advanced, custom prompt (itself refined with Claude Opus 4) saved as a custom instruction tool in Sky.

As you can see, it’s an advanced prompt based on XML delimiters that works well with both GPT 4.1 and Claude. When this tool runs, it reads the article and chooses a tag “that best represents the main topic or focus of the article”. This is a non-deterministic output that I couldn’t simply code with RegEx or other traditional actions in Shortcuts. At the end, Sky knows it needs to pass the results of this instruction to my ‘Save to Reader’ tool, which I also created in Sky:

In just a few minutes, I turned a shortcut that required me to always pick a tag manually into a more intelligent system that uses an LLM upfront and then runs my shortcut. Now, whenever I save articles from Vivaldi to Readwise Reader, I don’t have to pick tags myself.
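Because the tag comes from an LLM, the deterministic side of a setup like this may want to check the JSON before acting on it. The following Python sketch validates a payload against the contract spelled out in the prompt above (the "url", "tags", and "location" keys, at most one tag from the available list, and a location of "later" or "shortlist"). The function name `validate_reader_payload` is hypothetical, and nothing here reflects how Sky or the author's shortcut actually handles the data.

```python
import json

# Tag and location lists copied from the prompt's <available_tags> and
# <location_selection> sections.
ALLOWED_TAGS = {
    "ai", "games", "tech", "apple", "linked", "music",
    "macstories", "videogames", "longread", "media", "stage manager",
}
ALLOWED_LOCATIONS = {"later", "shortlist"}
REQUIRED_KEYS = {"url", "tags", "location"}


def validate_reader_payload(raw: str) -> dict:
    """Parse the model's JSON output and enforce the prompt's contract."""
    payload = json.loads(raw)
    if not isinstance(payload, dict):
        raise ValueError("payload must be a JSON object")

    missing = REQUIRED_KEYS - payload.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")

    tags = payload["tags"]
    if not isinstance(tags, list) or len(tags) > 1:
        raise ValueError("tags must be a list with zero or one tag")
    if not all(isinstance(tag, str) and tag in ALLOWED_TAGS for tag in tags):
        raise ValueError(f"unknown tag in {tags!r}")

    if payload["location"] not in ALLOWED_LOCATIONS:
        raise ValueError(f"invalid location: {payload['location']!r}")

    return payload


example = '{"url": "https://example.com/ai-breakthrough", "tags": ["ai"], "location": "shortlist"}'
print(validate_reader_payload(example)["location"])
```

A check like this keeps a hallucinated tag or a missing field from silently reaching the save step.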

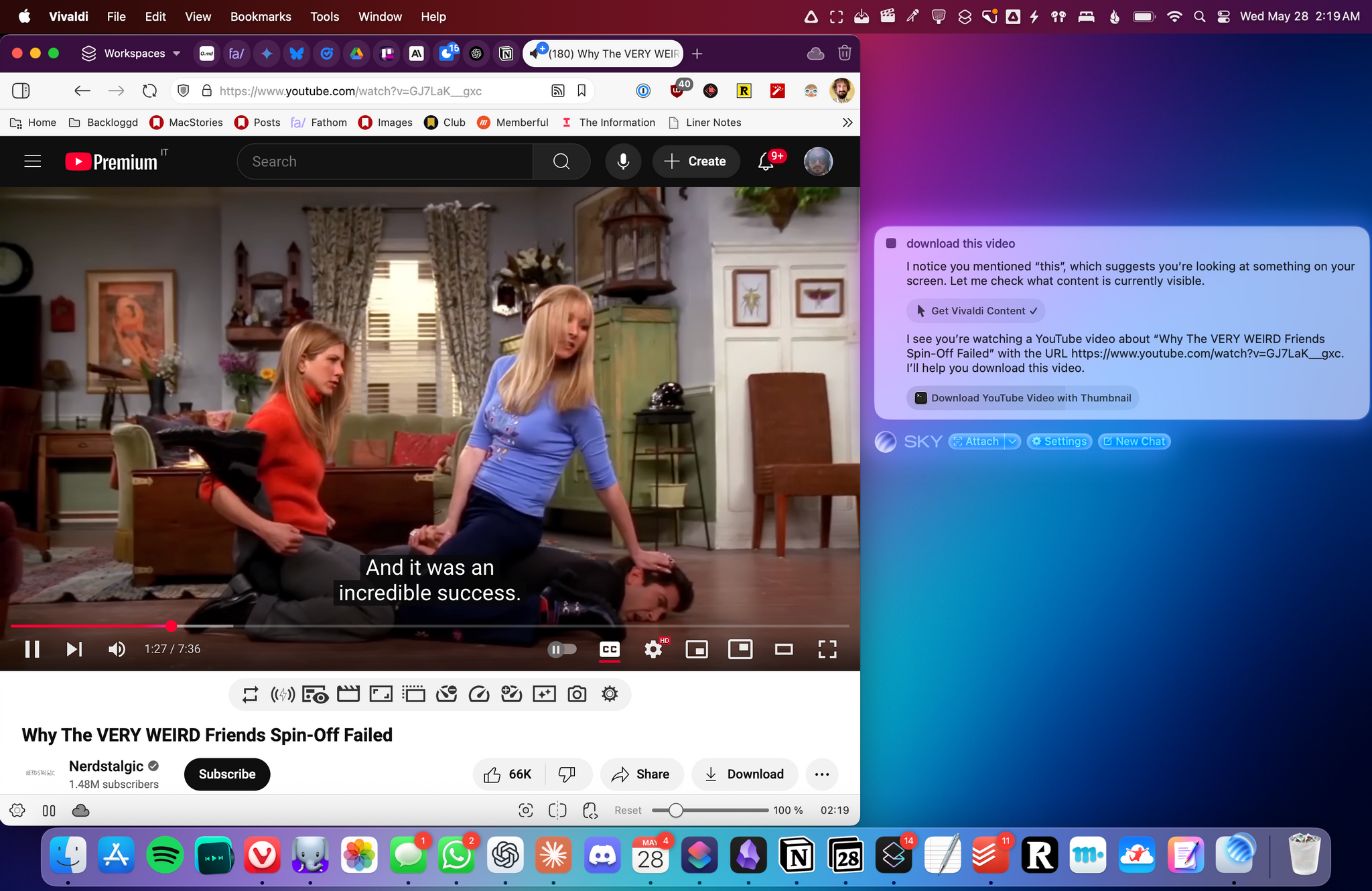

As I mentioned above, Sky can also create its own tools by letting LLMs write shell scripts or AppleScripts. To test this, I made a new shell script tool and asked Claude Opus 4 to write a script that would download a YouTube video via the open-source yt-dlp project, grab its thumbnail, and save both to a new folder on my Desktop. Within seconds, Claude created a script, which I could edit manually if I wanted or keep tweaking by asking follow-up questions. When I switched back to Vivaldi and opened a video, I asked Sky to download it, the app knew it had a tool available to perform that action, and it downloaded both the video and thumbnail for me.
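For a sense of what such a tool boils down to, here is a rough Python equivalent of the idea. The tool described above is a shell script written by Claude, which is not reproduced here; this is a separate sketch. It assumes `yt-dlp` is installed and on the PATH, and the function names and the "YouTube Downloads" folder name are hypothetical. The flags used (`--write-thumbnail`, `-P` for the download directory, `-o` for the output template) are standard yt-dlp options.

```python
import subprocess
from pathlib import Path
from typing import List


def build_download_command(video_url: str, folder: Path) -> List[str]:
    """Build the yt-dlp invocation without running it."""
    return [
        "yt-dlp",
        "--write-thumbnail",        # save the thumbnail next to the video
        "-P", str(folder),          # directory to download into
        "-o", "%(title)s.%(ext)s",  # name files after the video title
        video_url,
    ]


def download_video(video_url: str, folder_name: str = "YouTube Downloads") -> Path:
    """Create a folder on the Desktop and download the video and thumbnail."""
    folder = Path.home() / "Desktop" / folder_name
    folder.mkdir(parents=True, exist_ok=True)
    # check=True raises CalledProcessError if yt-dlp exits with an error.
    subprocess.run(build_download_command(video_url, folder), check=True)
    return folder


# Example (requires yt-dlp and network access):
# download_video("https://www.youtube.com/watch?v=VIDEO_ID")
```

Keeping the command construction separate from the call that runs it makes the script easy to inspect before anything is downloaded.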

If you’re a power user, it can be a little daunting to wrap your head around all these possibilities at first. Even if we disregard the ability to “vibe code” your own scripts with an LLM, the mere idea of having flexible inputs for a pre-assembled shortcut or chaining multiple steps together based on custom instructions is not something we’re used to working with.

In using and thinking about Sky over the past two weeks, I’ve landed on this mental framework for the app: the assistant can have layers upon layers of instructions that reference one another, and tools are like infinite extensions that you can write with any scripting language you’re comfortable with. The real power lies in learning how to combine the two, leveraging the simplicity of LLMs and their chat capabilities along with the native tools available on your Mac. I like what Ari Weinstein said when I asked him about Sky’s role as a digital assistant in between a chatbot and classic automation app:

Automation tools are so powerful, but they require this meta understanding of how things work – you have to do some abstract thinking to imagine how to put a process in place now that will help you later. AI chat interfaces are on the other end of the spectrum. They’re very immediate: you just talk to them with natural language and they’re capable of incredible things. But they’re not connected to your data and your applications. What’s so exciting about Sky is that it spans that whole spectrum. You get the advantages of something that’s easy to pick up, able to use your apps, and also something you can program to fit your needs.

Lastly – and it’s not clear if this feature will make it for Sky’s public launch later this year – the developers also plan to integrate Sky with MCP, the popular, universal plug-and-play system for LLMs and web services. While I wasn’t able to test this myself, the idea of Sky extending beyond desktop apps and integrating with web services like Zapier and other MCP servers is very appealing to me and shows a future for the app beyond Mac apps and the desktop environment.

Sky Is the Limit

I have a lot more to say about Sky, but since the app is so early and not launching to the public today, I’ll save more thoughts and examples for a proper review later this year. But I want to share some initial considerations here.

Sky will need to deliver on two fronts: it needs to be good enough out of the box with its LLM integration and built-in tools to show regular people that it’s vastly better than Siri for working with the majority of the apps they use; and it needs to be extremely flexible for advanced users who want to create their own custom tools, mix and match prompts and shortcuts, and so forth. History shows that the Workflow and Shortcuts teams largely optimized those apps’ experiences for the advanced end of the spectrum. Can Sky find a better balance between the two?

Sky is also very early, and several bugs will need to be ironed out before the final release. As I’ve shown in this preview, the app is juggling a lot of different technologies at once (LLMs, custom prompts, OCR, AppleScript, shell scripts, etc.), and making sure that everything works reliably for all kinds of apps, users, and locales will be a challenge. It gets even harder when you factor in LLMs’ inherent disposition for hallucinating results to queries they’re struggling with, which happened to me on a couple of different occasions when testing Sky. (Those issues were later fixed.) Hybrid automation is a new world for Kramer, Weinstein, and Beverett as well; now, in addition to ensuring automation and app integrations work, they’ll also need to tame different LLMs and their non-deterministic quirks.

Then, there’s the business aspect of it all. Right now, the Sky team tells me it’s planning to launch the app later this year with a free tier and more features behind a subscription. Can that be profitable in the long run? Even more crucial, though, is their competition: OpenAI, Anthropic, and Google are all currently shipping their own flavors of tools and app integrations that work with either a subset of Mac apps, proprietary connectors, MCP, mobile apps, or a combination of all of the above. Apple, of course, should still be working on a version of Apple Intelligence that, in theory, should work with any app on your device. Is there room for a startup to enter this crowded space with a product that combines the intelligence of LLMs with the open nature of macOS and desktop apps?

I think there is, and after using Sky for two weeks, I’ll be honest: I wouldn’t be surprised to hear that OpenAI or Apple would be interested in acquiring this company. Apple could use this team (again) to get an LLM-infused tool that works with apps before the two years it’ll likely take them to ship a Siri LLM with comparable features – and I’m sure Sky would work even better if it had access to more private APIs on macOS. But I wouldn’t be shocked to see Sky become an acquisition target for OpenAI: the app is largely based on GPT 4.1, and OpenAI could use it to extend their existing ‘Work with Apps on macOS’ strategy with a system that works with literally any app. (I should also note that Sam Altman is an investor in Software Applications Incorporated, per their About page.) Personally, I hope the Sky team stays independent, but I’m trying to be realistic when I look at this startup going up against companies with deep pockets and a hunger for more and more integrations in their LLMs.

Regardless of what happens next, what I said at the beginning of this story is exactly how I feel: Sky represents another moment in my 16-year career that I’ll remember as a turning point for how I get things done on my computer. The version of Sky I’ve been using is what I expect from a modern AI assistant today: it’s fast, it’s easy to use and understands me in multiple languages, and it works with all the apps I care about. Put simply, Sky uses AI to make me more productive on macOS in a way that I haven’t seen from other LLM apps to date.

If the Sky team can deliver and finally ship this product to the masses after two years in development, they have a chance to rewrite the narrative for AI assistance and automation on the Mac.

I can’t wait for that moment to arrive for everyone.

1. Clearly, the AI industry is standardizing this terminology, and Sky is no different.

2. Finally, a good use for the right-hand Command key!