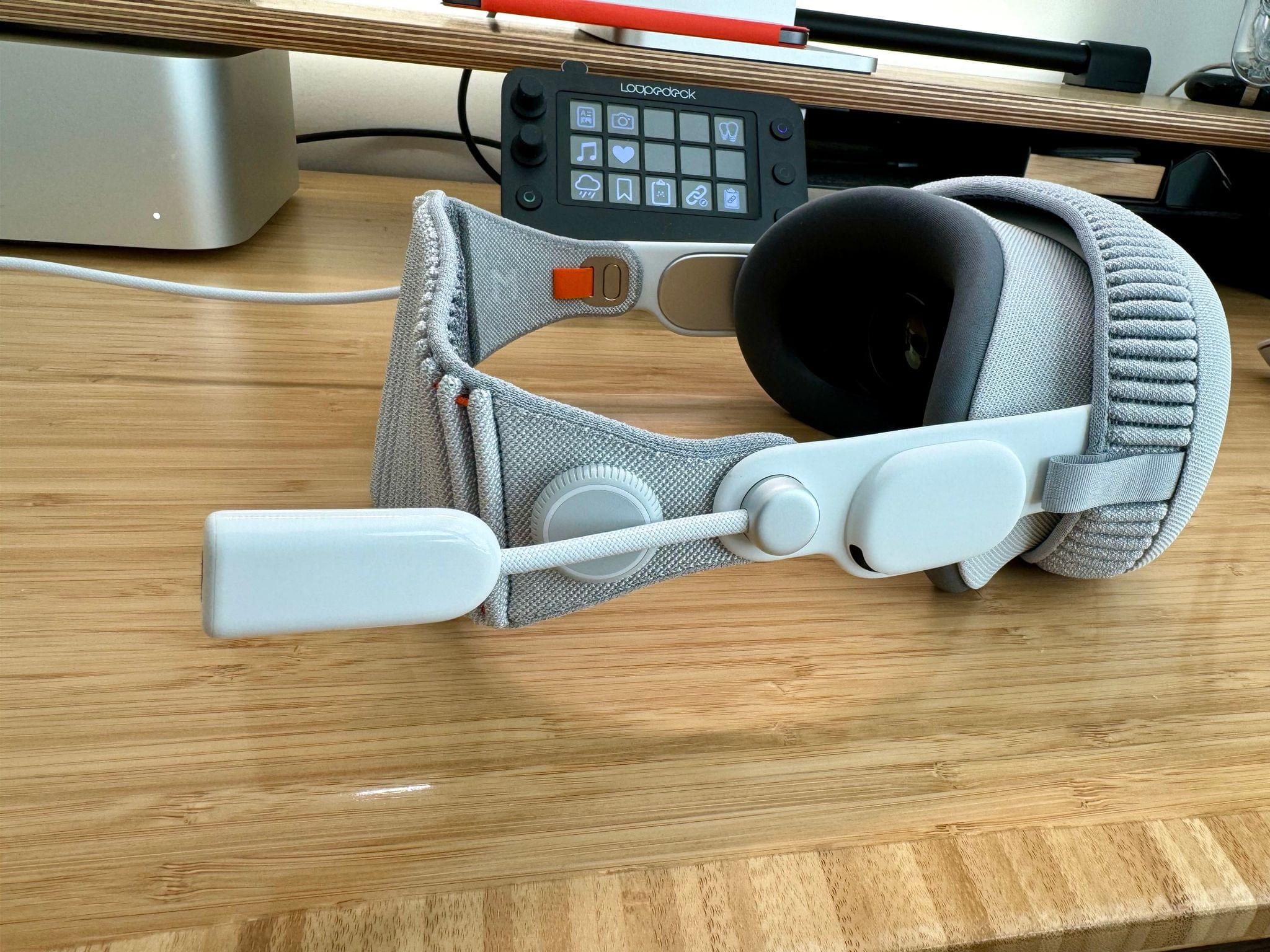

Taking good-looking screenshots on the Apple Vision Pro isn’t easy, but it’s not impossible either. I’ve already spent many hours taking screenshots on the device, and I thought I’d share my experience and some practical tips for getting the best screenshots possible.

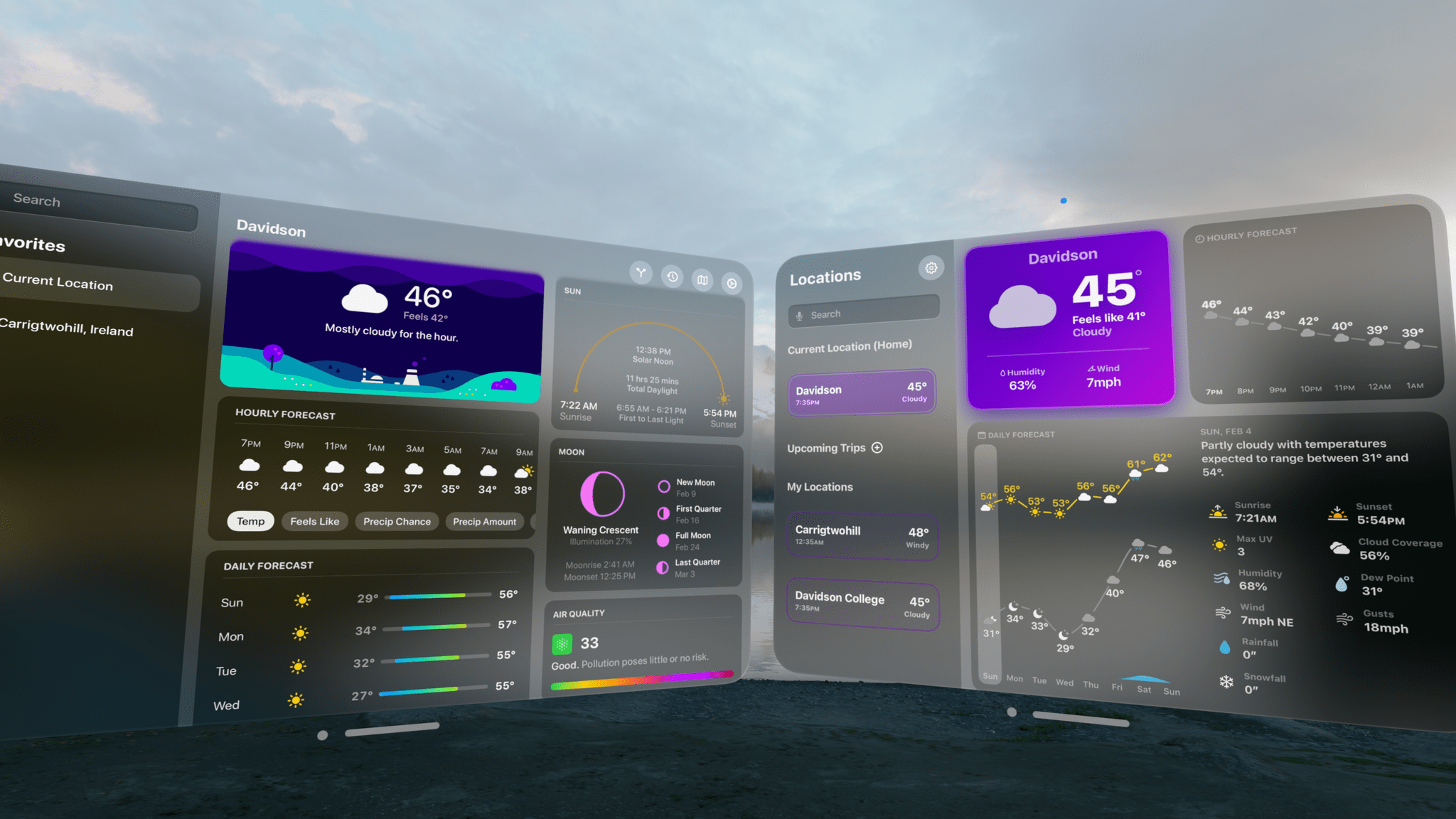

Although I’ve only had the Apple Vision Pro for a week, I’ve already spent a lot of time thinking about and refining my screenshot workflow out of necessity. After spending around three hours writing my first visionOS app review of CARROT Weather and Mercury Weather, I spent at least as much time trying to get the screenshots I wanted. If that had been a review of the iOS versions of those apps, the same number of screenshots would have taken less than half an hour. That’s a problem because I simply don’t have that much time to devote to screenshots.

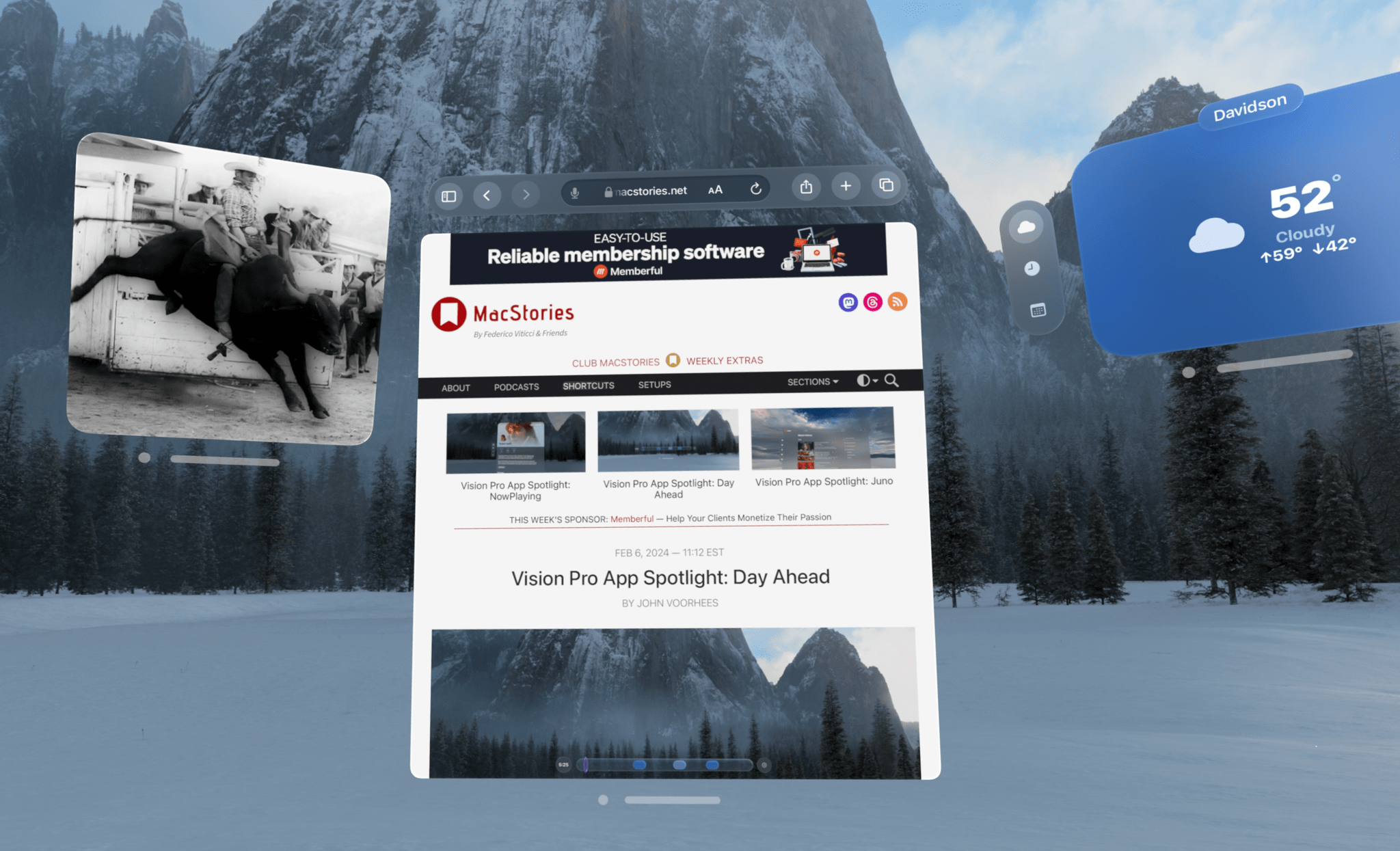

Taking screenshots with the Apple Vision Pro is difficult because of the way the device works. Like other headsets, the Apple Vision Pro uses something called foveated rendering, a technique that reduces the computing power needed to render the headset’s images. In practical terms, the technique means that the only part of the view rendered in full detail is where you’re looking. That sharp region follows your eyes as they move, so you don’t notice that the rest of the view is blurry. In fact, this mirrors how the human eye works, which sees the most detail at the center of your gaze, so as long as the eye tracking is good, which it is on the Apple Vision Pro, the experience is good too.
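
To make the idea concrete, here is a minimal Python sketch of how detail might fall off with distance from the gaze point. It is a toy model, not Apple’s implementation: the function name `detail_scale`, the linear falloff, and every number in it (`sharp_radius`, `blur_radius`, `min_scale`) are assumptions chosen purely for illustration.

```python
import math


def detail_scale(point, gaze, sharp_radius=0.1, blur_radius=0.5, min_scale=0.25):
    """Return the fraction of full detail used at `point` (hypothetical model).

    `point` and `gaze` are (x, y) pairs normalized to the 0..1 range of the view.
    All default values are illustrative assumptions, not real device parameters.
    """
    distance = math.dist(point, gaze)
    if distance <= sharp_radius:
        # Inside the region you're looking at: full detail.
        return 1.0
    if distance >= blur_radius:
        # Far periphery: lowest detail.
        return min_scale
    # Linear falloff between the sharp region and the periphery.
    t = (distance - sharp_radius) / (blur_radius - sharp_radius)
    return 1.0 - t * (1.0 - min_scale)


# Suppose you are looking at the center of the view when the frame is captured.
gaze = (0.5, 0.5)
print(detail_scale((0.5, 0.5), gaze))  # where you're looking
print(detail_scale((0.8, 0.5), gaze))  # partway toward the edge
print(detail_scale((0.0, 0.0), gaze))  # a far corner
```

In this model, whatever sits away from the gaze point at the moment of capture stays at reduced detail in the saved image, which is the effect you see in a quick screenshot.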

However, as well as foveated rendering works when you’re using the Apple Vision Pro, it’s terrible for screenshots. You can take a quick screenshot by pressing the top button and Digital Crown, but you’ll immediately see that everything except where you were looking when you took the screenshot is out of focus. That’s fine for sharing a quick image with a friend, but if you want something suitable for publishing, it’s not a good option.

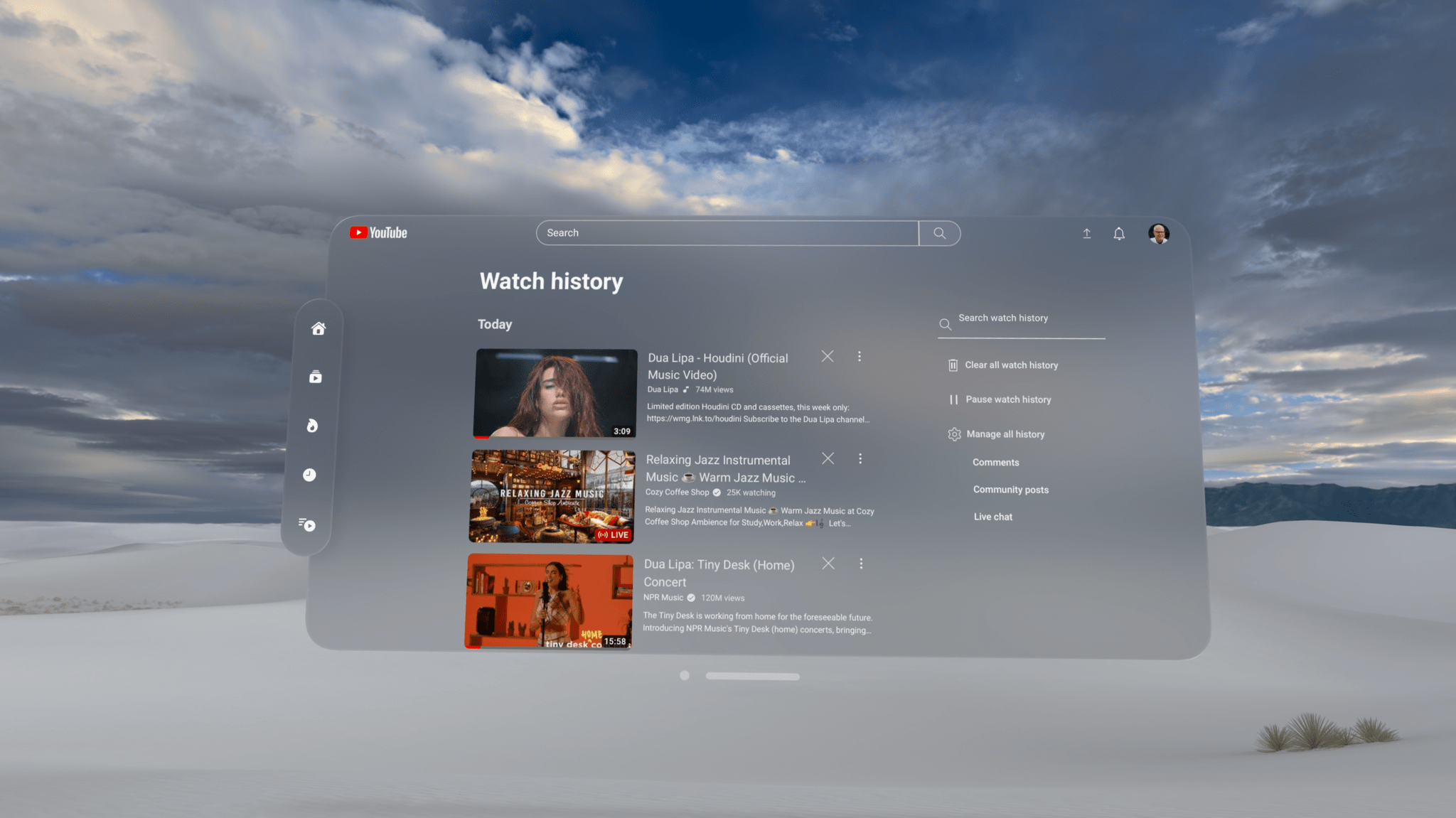

Fortunately, Apple thought of this, and there’s a solution, but it involves using Xcode and another developer tool. Of course, using Xcode to take screenshots is a little like using Logic Pro to record voice memos, except there are plenty of simple apps for recording voice memos, whereas Xcode is currently your only choice for taking crisp screenshots on the Vision Pro. So until there’s another option, it pays to learn your way around these developer tools to get the highest-quality screenshots as efficiently as possible.

![](https://cdn.macstories.net/avp-layout-1-1707052612338.jpg)