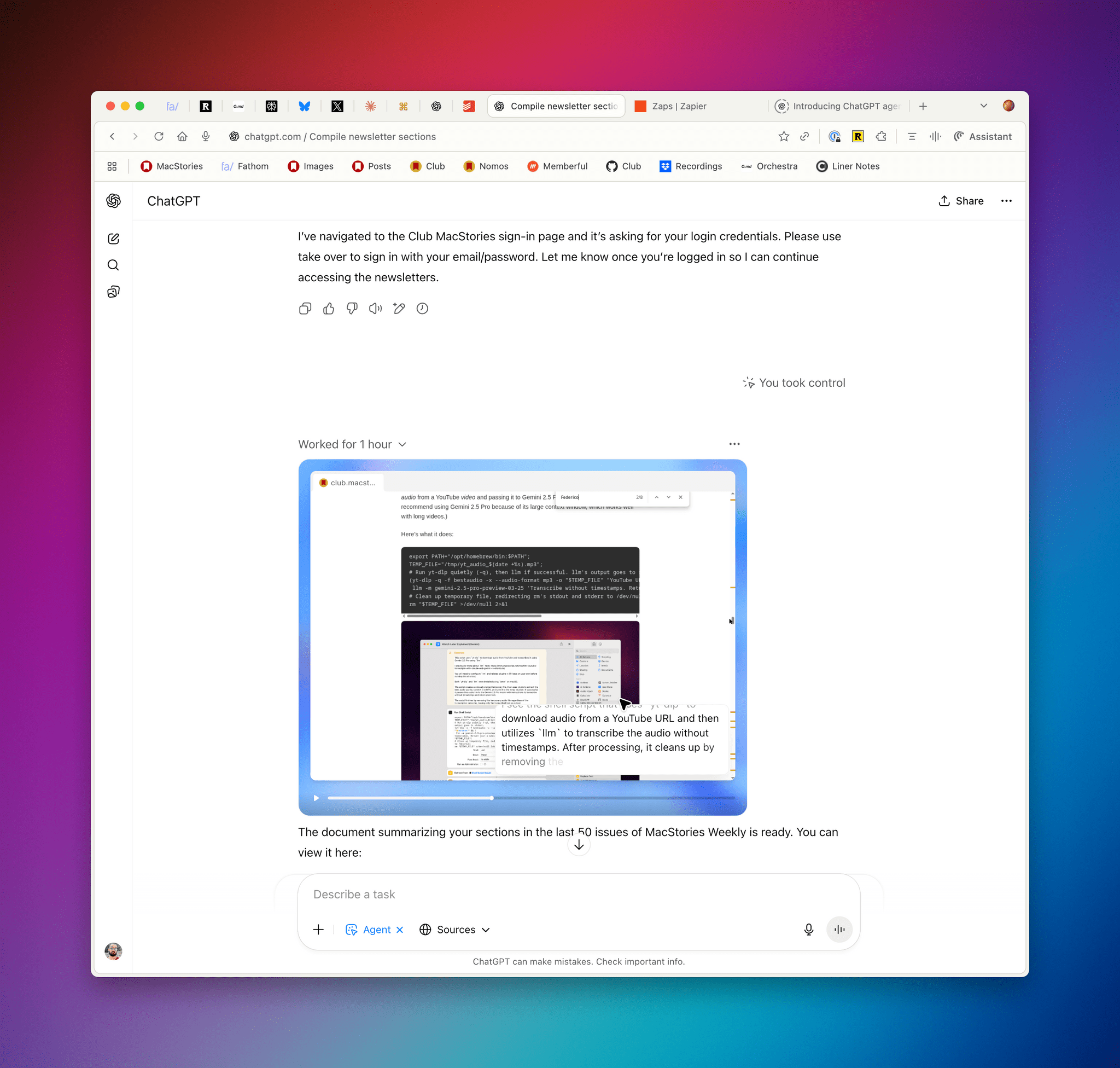

Earlier this week, OpenAI released ChatGPT agent, a new agentic model that combines the text-focused capabilities of Deep Research with the browser-based automation of Operator into a single, well, agent that can autonomously browse the web, read webpages, and interact with web apps. OpenAI describes the (lowercase) agent as ChatGPT having its own computer.

Posts tagged with "AI"

Some Early Tests and Notes on ChatGPT Agent

Cloudflare Introduces a Pay-to-Scrape Beta Program for Web Publishers

Governments have largely been ineffective in regulating the unfettered scraping of the web by AI companies. Now, Cloudflare is taking a different approach, tackling the problem from a commercial angle with a beta program that charges AI bots each time they scrape a website. Cloudflare’s CEO, Matthew Prince, told Ars Technica:

Original content is what makes the Internet one of the greatest inventions in the last century, and it’s essential that creators continue making it. AI crawlers have been scraping content without limits. Our goal is to put the power back in the hands of creators, while still helping AI companies innovate. This is about safeguarding the future of a free and vibrant Internet with a new model that works for everyone.

Under the program, websites set what can be scraped and what scraping costs. In addition, for new customers, Cloudflare is now blocking AI bots from scraping sites by default, a change from its previous opt-in blocking system.

There are a lot of questions surrounding the viability of Cloudflare’s pay-to-scrape beta, and many details still need to be worked out, not the least of which is convincing AI companies to cooperate. However, I’m glad to see Cloudflare taking the lead on an approach that attempts to compensate publishers for the value of what AI companies are scraping and put agency back in the hands of creators.

The Curious Case of Apple and Perplexity→

Good post by Parker Ortolani, analyzing the pros and cons of a potential Perplexity acquisition by Apple:

According to Mark Gurman, Apple executives are in the early stages of mulling an acquisition of Perplexity. My initial reaction was “that wouldn’t work.” But I’ve taken some time to think through what it could look like if it were to come to fruition.

He gets to the core of the issue with this acquisition:

At the end of the day, Apple needs a technology company, not another product company. Perplexity is really good at, for lack of a better word, forking models. But their true speciality is in making great products, they’re amazing at packaging this technology. The reality is though, that Apple already knows how to do that. Of course, only if they can get out of their own way. That very issue is why I’m unsure the two companies would fit together. A company like Anthropic, a foundational AI lab that develops models from scratch is what Apple could stand to benefit from. That’s something that doesn’t just put them on more equal footing with Google, it’s something that also puts them on equal footing with OpenAI which is arguably the real threat.

While I’m not the biggest fan of Perplexity’s web scraping policies and its CEO’s remarks, it’s undeniable that the company has built a series of good consumer products, they’re fast at integrating the latest models from major AI vendors, and they’ve even dipped their toes in the custom model waters (with Sonar, an in-house model based on Llama). At first sight, I would agree with Ortolani and say that Apple would need Perplexity’s search engine and LLM integration talent more than the Perplexity app itself. So far, Apple has only integrated ChatGPT into its operating systems; Perplexity supports all the major LLMs currently in existence. If Apple wants to make the best computers for AI rather than being a bleeding-edge AI provider itself…well, that’s pretty much aligned with Perplexity’s software-focused goals.

However, I wonder if Perplexity’s work on its iOS voice assistant may have also played a role in these rumors. As I wrote a few months ago, Perplexity shipped a solid demo of what a deep LLM integration with core iOS services and frameworks could look like. What could Perplexity’s tech do when integrated with Siri, Spotlight, Safari, Music, or even third-party app entities in Shortcuts?

Or, look at it this way: if you’re Apple, would you spend $14 billion to buy an app and rebrand it as “Siri That Works” next year?

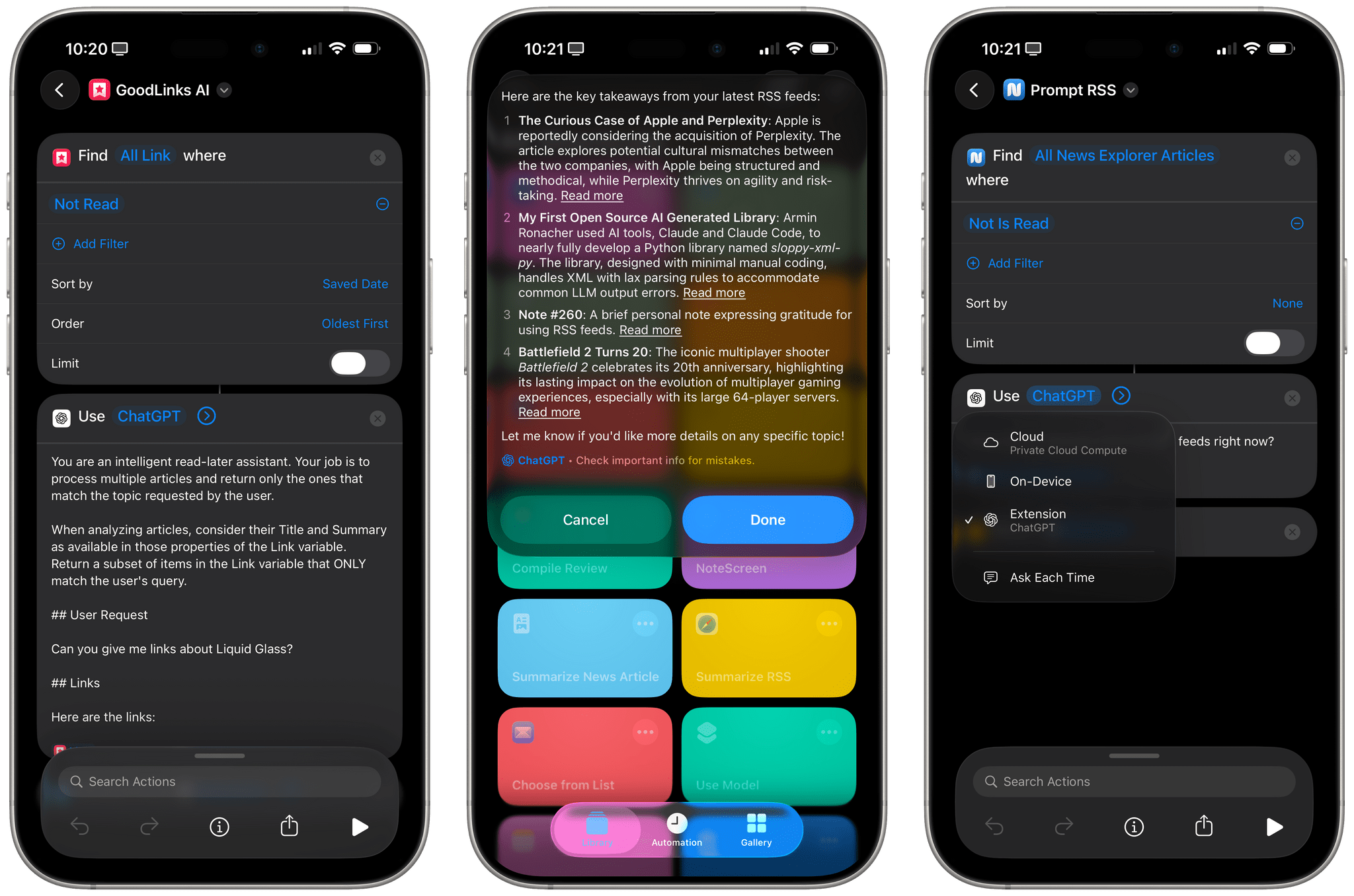

I Have Many Questions About Apple’s Updated Foundation Models and the (Great) ‘Use Model’ Action in Shortcuts

I mentioned this on AppStories during the week of WWDC: I think Apple’s new ‘Use Model’ action in Shortcuts for iOS/iPadOS/macOS 26, which lets you prompt either the local or cloud-based Apple Foundation models, is Apple Intelligence’s best and most exciting new feature for power users this year. This blog post is a way for me to better explain why as well as publicly investigate some aspects of the updated Foundation models that I don’t fully understand yet.

Hands-On: How Apple’s New Speech APIs Outpace Whisper for Lightning-Fast Transcription

Late last Tuesday night, after watching F1: The Movie at the Steve Jobs Theater, I was driving back from dropping Federico off at his hotel when I got a text:

Can you pick me up?

It was from my son Finn, who had spent the evening nearby and was stalking me in Find My. Of course, I swung by and picked him up, and we headed back to our hotel in Cupertino.

On the way, Finn filled me in on a new class in Apple’s Speech framework called SpeechAnalyzer and its SpeechTranscriber module. Both the class and module are part of Apple’s OS betas that were released to developers last week at WWDC. My ears perked up immediately when he told me that he’d tested SpeechAnalyzer and SpeechTranscriber and was impressed with how fast and accurate they were.

It’s still early days for these technologies, but I’m here to tell you that their speed alone is a game changer for anyone who uses voice transcription to create text from lectures, podcasts, YouTube videos, and more. That’s something I do multiple times every week for AppStories, NPC, and Unwind, generating transcripts that I upload to YouTube because the site’s built-in transcription isn’t very good.

What’s frustrated me with other tools is how slow they are. Most are built on Whisper, OpenAI’s open source speech-to-text model, which was released in 2022. It’s cheap at under a penny per one million tokens, but isn’t fast, which is frustrating when you’re in the final steps of a YouTube workflow.

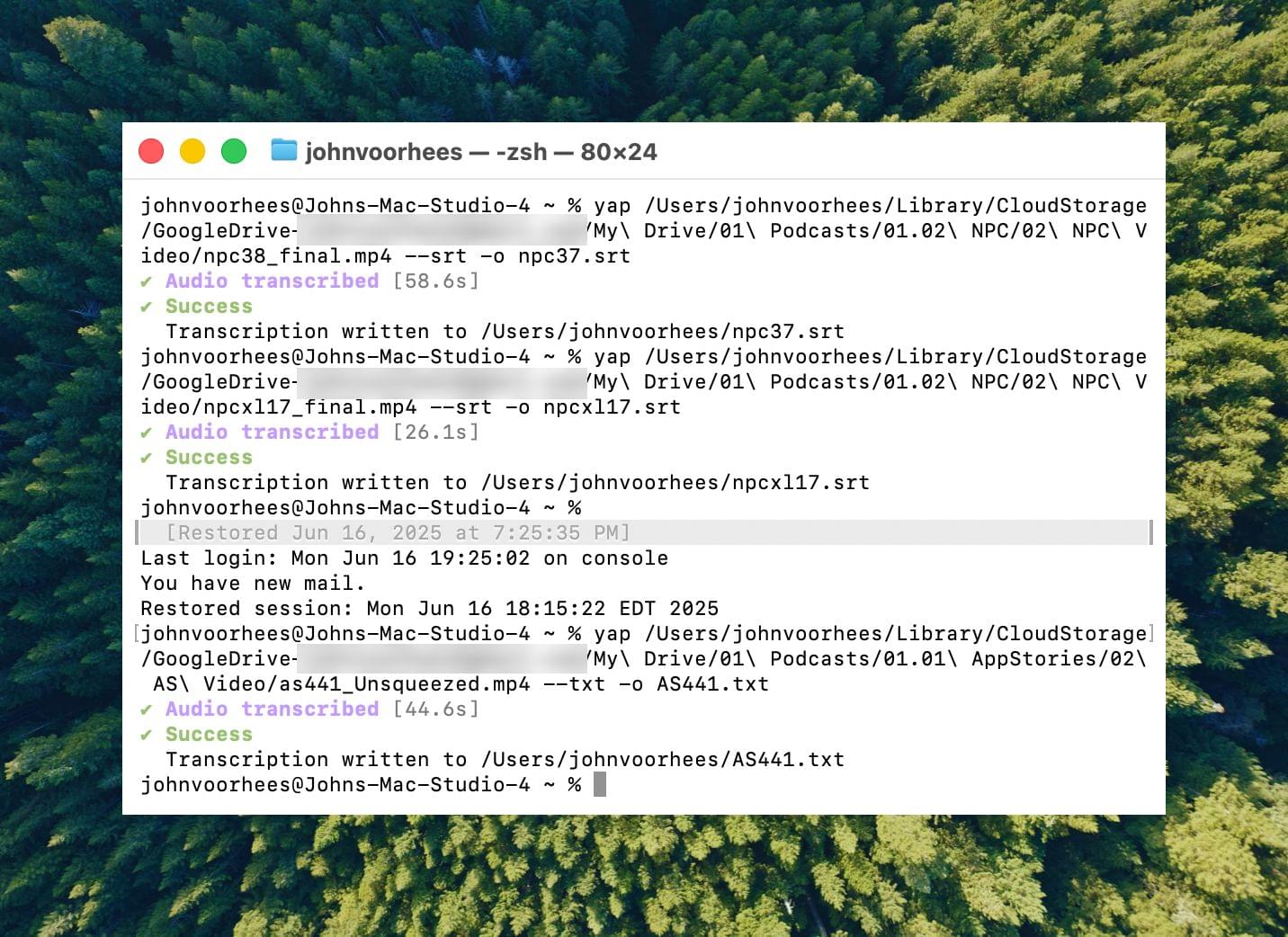

I asked Finn what it would take to build a command line tool to transcribe video and audio files with SpeechAnalyzer and SpeechTranscriber. He figured it would only take about 10 minutes, and he wasn’t far off. In the end, it took me longer to get around to installing macOS Tahoe after WWDC than it took Finn to build Yap, a simple command line utility that takes audio and video files as input and outputs SRT- and TXT-formatted transcripts.

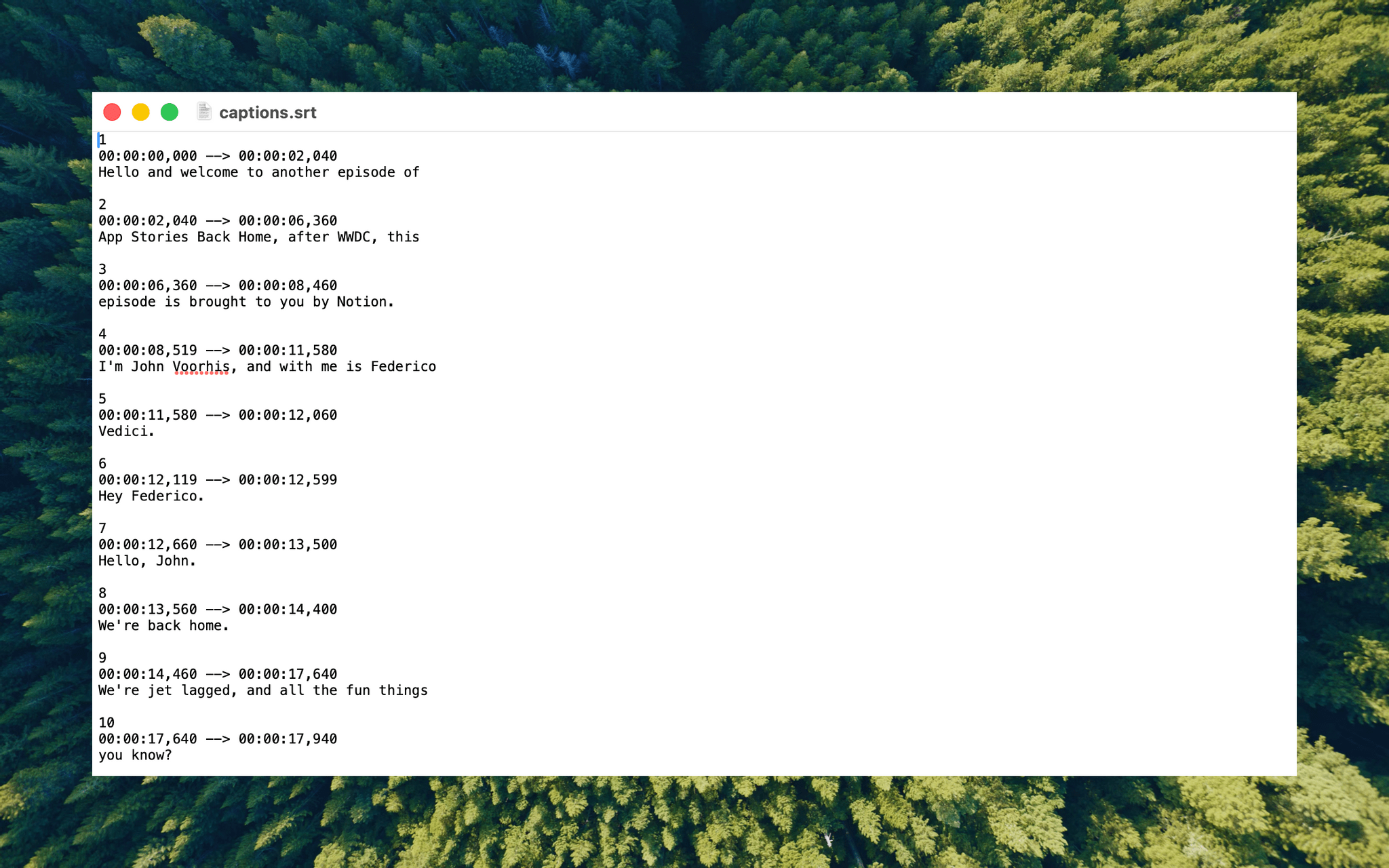

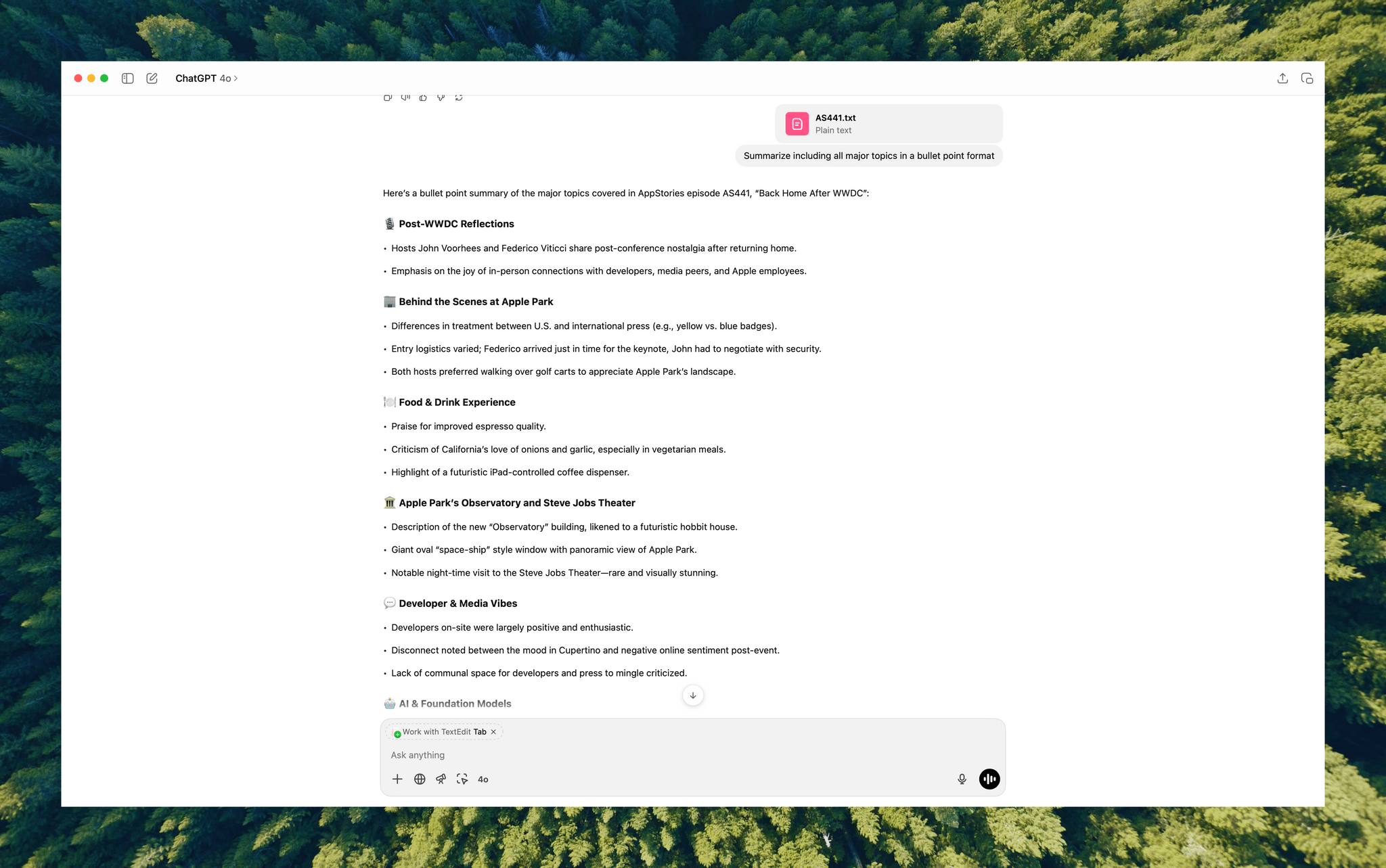

Yesterday, I finally took the Tahoe plunge and immediately installed Yap. I grabbed the 7GB 4K video version of AppStories episode 441, which is about 34 minutes long, and ran it through Yap. It took just 45 seconds to generate an SRT file. Here’s Yap ripping through nearly 20% of an episode of NPC in 10 seconds:

Next, I ran the same file through VidCap and MacWhisper, using MacWhisper’s Large V2 and Large V3 Turbo models. Here’s how each app and model did:

| App | Transcription Time (min:sec) |
|---|---|
| Yap | 0:45 |
| MacWhisper (Large V3 Turbo) | 1:41 |
| VidCap | 1:55 |
| MacWhisper (Large V2) | 3:55 |

All three transcription workflows had similar trouble with last names and words like “AppStories,” which LLMs tend to separate into two words instead of camel casing. That’s easily fixed by running a set of find and replace rules, although I’d love to feed those corrections back into the model itself for future transcriptions.
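For anyone curious what a set of find and replace rules might look like in practice, here is a minimal Python sketch. It is not part of Yap or any of the apps mentioned; the `CORRECTIONS` table and the `apply_corrections` function are hypothetical names made up for illustration, and the rules themselves are only examples of the kind of fix described above.

```python
import re

# Hypothetical correction rules: the form a transcript tends to
# produce, mapped to the preferred spelling.
CORRECTIONS = {
    "App Stories": "AppStories",
    "Mac Whisper": "MacWhisper",
}


def apply_corrections(text: str, rules: dict[str, str]) -> str:
    """Replace each misheard phrase with its corrected form, ignoring case."""
    for wrong, right in rules.items():
        # \b keeps a rule from firing in the middle of a longer word.
        pattern = re.compile(r"\b" + re.escape(wrong) + r"\b", re.IGNORECASE)
        # A function replacement inserts the corrected text literally.
        text = pattern.sub(lambda _match, fixed=right: fixed, text)
    return text


sample = "Welcome to app stories, transcribed with Mac Whisper."
print(apply_corrections(sample, CORRECTIONS))
```

Because the rules only match whole phrases, the same function can be run over the text of an SRT or TXT transcript without disturbing the timestamps around it.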

What stood out above all else was Yap’s speed. By harnessing SpeechAnalyzer and SpeechTranscriber on-device, the command line tool tore through the 7GB video file a full 2.2× faster than MacWhisper’s Large V3 Turbo model, with no noticeable difference in transcription quality.

At first blush, the difference between 0:45 and 1:41 may seem insignificant, and it arguably is, but those are the results for just one 34-minute video. Extrapolate that to running Yap against the hours of Apple Developer videos released on YouTube with the help of yt-dlp, and suddenly, you’re talking about a significant amount of time. Like all automation, picking up a 2.2× speed gain one video or audio clip at a time, multiple times each week, adds up quickly.
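To make the arithmetic behind that 2.2× figure concrete, here is a small Python sketch that converts the times from the table above into seconds and compares each one to Yap. The `to_seconds` helper and the `hypothetical_videos` count are my own illustrative assumptions; only the four timings come from the test described here.

```python
def to_seconds(clock: str) -> int:
    """Convert a 'minutes:seconds' string such as '1:41' into seconds."""
    minutes, seconds = clock.split(":")
    return int(minutes) * 60 + int(seconds)


# Transcription times for the same 34-minute video, from the table above.
TIMES = {
    "Yap": "0:45",
    "MacWhisper (Large V3 Turbo)": "1:41",
    "VidCap": "1:55",
    "MacWhisper (Large V2)": "3:55",
}

baseline = to_seconds(TIMES["Yap"])
for app, clock in TIMES.items():
    elapsed = to_seconds(clock)
    # A ratio above 1.0 means the app took that many times longer than Yap.
    print(f"{app}: {elapsed}s ({elapsed / baseline:.1f}x Yap's time)")

# Hypothetical extrapolation: time saved versus Large V3 Turbo over a
# batch of similar videos. The batch size is an assumption, not a measurement.
hypothetical_videos = 100
saved_per_video = to_seconds(TIMES["MacWhisper (Large V3 Turbo)"]) - baseline
print(f"Saved over {hypothetical_videos} videos: "
      f"{saved_per_video * hypothetical_videos / 60:.1f} minutes")
```

The per-video saving is under a minute, which is why the gain only becomes meaningful across a large batch of files.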

Whether you’re producing video for YouTube and need subtitles, generating transcripts to summarize lectures at school, or doing something else, SpeechAnalyzer and SpeechTranscriber – available across the iPhone, iPad, Mac, and Vision Pro – mark a significant leap forward in transcription speed without compromising on quality. I fully expect this combination to replace Whisper as the default model for transcription apps on Apple platforms.

To test Apple’s new model, install the macOS Tahoe beta, which currently requires an Apple developer account, and then install Yap from its GitHub page.

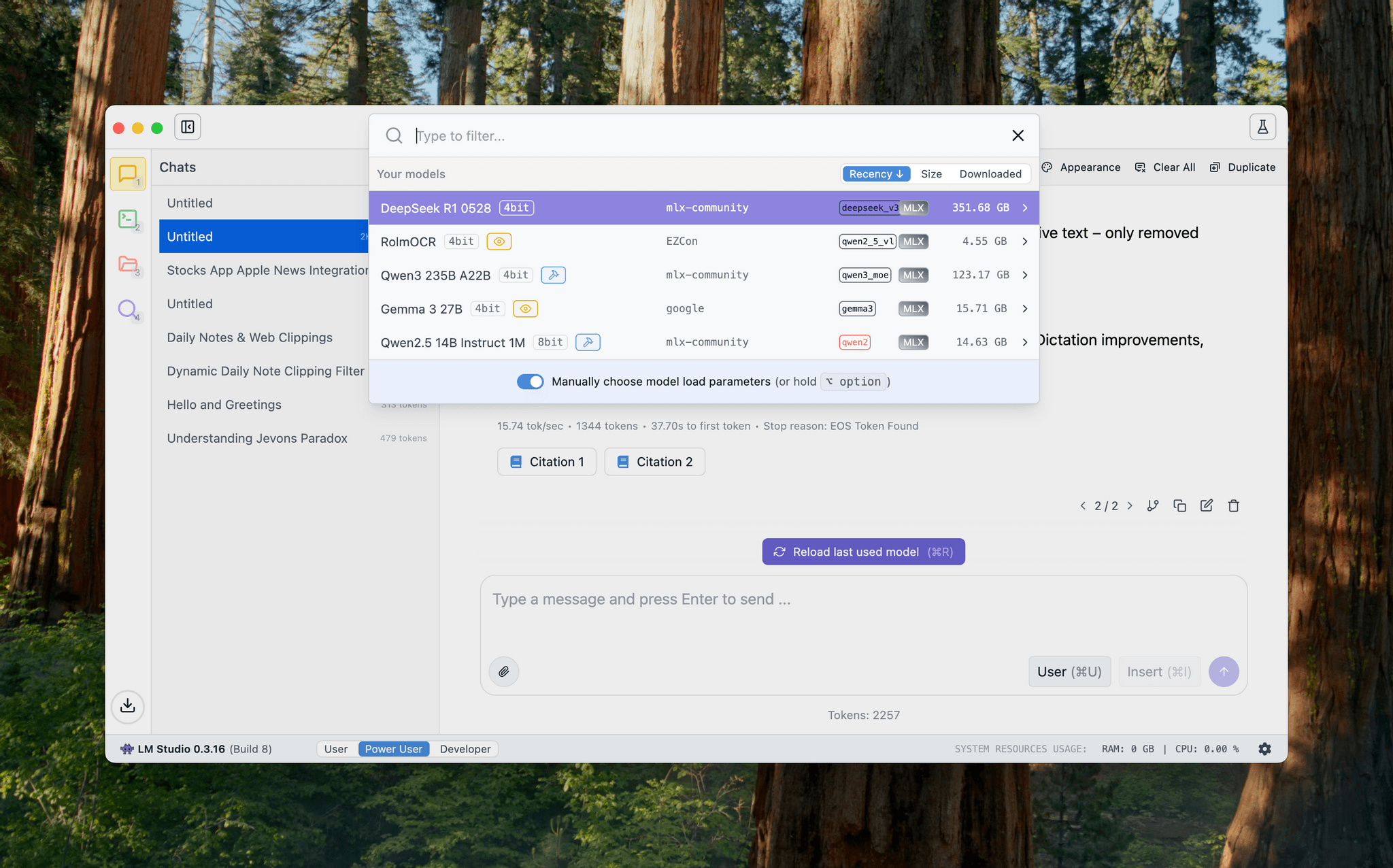

Testing DeepSeek R1-0528 on the M3 Ultra Mac Studio and Installing Local GGUF Models with Ollama on macOS

DeepSeek released an updated version of their popular R1 reasoning model (version 0528) with – according to the company – increased benchmark performance, reduced hallucinations, and native support for function calling and JSON output. Early tests from Artificial Analysis report a nice bump in performance, putting it behind OpenAI’s o3 and o4-mini-high in their Intelligence Index benchmarks. The model is available in the official DeepSeek API, and open weights have been distributed on Hugging Face. I downloaded different quantized versions of the full model on my M3 Ultra Mac Studio, and here are some notes on how it went.

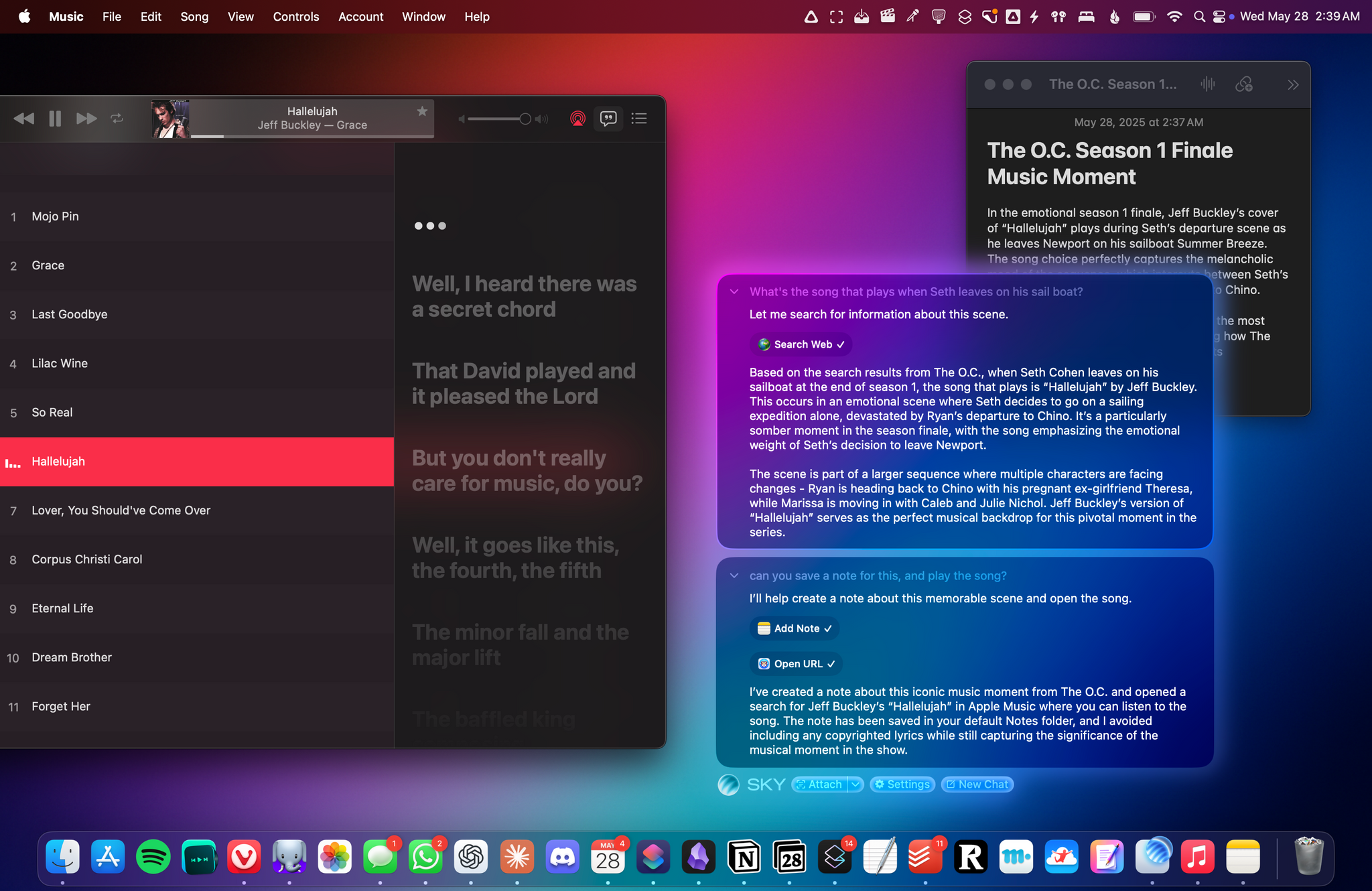

From the Creators of Shortcuts, Sky Extends AI Integration and Automation to Your Entire Mac

Over the course of my career, I’ve had three distinct moments in which I saw a brand-new app and immediately felt it was going to change how I used my computer – and they were all about empowering people to do more with their devices.

I had that feeling the first time I tried Editorial, the scriptable Markdown text editor by Ole Zorn. I knew right away when two young developers told me about their automation app, Workflow, in 2014. And I couldn’t believe it when Apple showed that not only had they acquired Workflow, but they were going to integrate the renamed Shortcuts app system-wide on iOS and iPadOS.

Notably, the same two people – Ari Weinstein and Conrad Kramer – were involved with two of those three moments, first with Workflow, then with Shortcuts. And a couple of weeks ago, I found out that they were going to define my fourth moment, along with their co-founder Kim Beverett at Software Applications Incorporated, with the new app they’ve been working on in secret since 2023 and officially announced today.

For the past two weeks, I’ve been able to use Sky, the new app from the people behind Shortcuts who left Apple two years ago. As soon as I saw a demo, I felt the same way I did about Editorial, Workflow, and Shortcuts: I knew Sky was going to fundamentally change how I think about my macOS workflow and the role of automation in my everyday tasks.

Only this time, because of AI and LLMs, Sky is more intuitive than all those apps and requires a different approach, as I will explain in this exclusive preview story ahead of a full review of the app later this year.

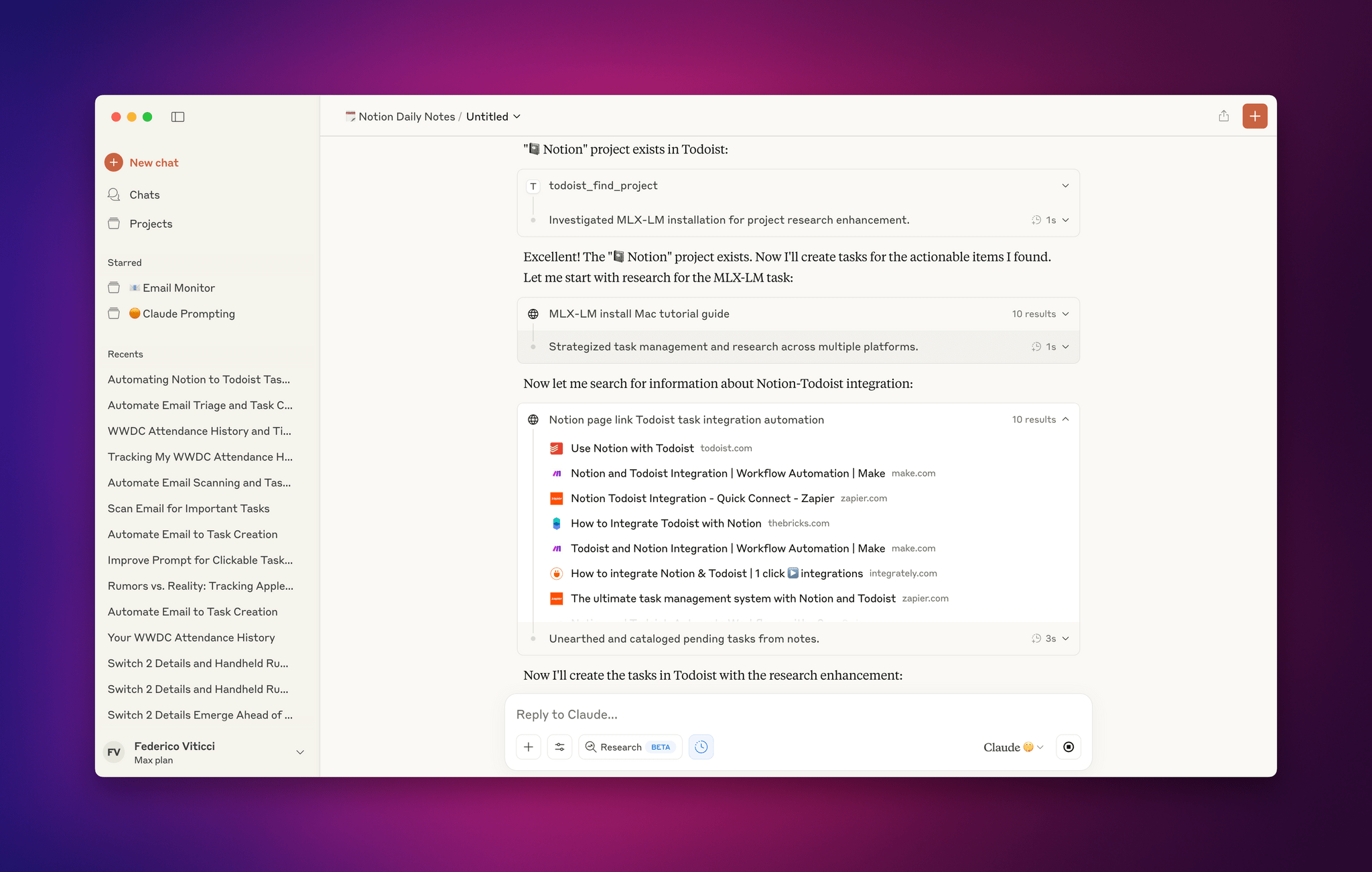

Early Impressions of Claude Opus 4 and Using Tools with Extended Thinking

For the past two days, I’ve been testing an early access version of Claude Opus 4, the latest model by Anthropic that was just announced today. You can read more about the model in the official blog post and find additional documentation here. What follows is a series of initial thoughts and notes based on the 48 hours I spent with Claude Opus 4, which I tested in both the Claude app and Claude Code.

For starters, Anthropic describes Opus 4 as its most capable hybrid model with improvements in coding, writing, and reasoning. I don’t use AI for creative writing, but I have dabbled with “vibe coding” for a collection of personal Obsidian plugins (created and managed with Claude Code, following these tips by Harper Reed), and I’m especially interested in Claude’s integrations with Google Workspace and MCP servers. (My favorite solution for MCP at the moment is Zapier, which I’ve been using for a long time for web automations.) So I decided to focus my tests on reasoning with integrations and some light experiments with the upgraded Claude Code in the macOS Terminal.

OpenAI to Buy Jony Ive’s Stealth Startup for $6.5 Billion→

Jony Ive’s stealth AI company known as io is being acquired by OpenAI for $6.5 billion in a deal that is expected to close this summer subject to regulatory approvals. According to reporting by Mark Gurman and Shirin Ghaffary of Bloomberg:

The purchase — the largest in OpenAI’s history — will provide the company with a dedicated unit for developing AI-powered devices. Acquiring the secretive startup, named io, also will secure the services of Ive and other former Apple designers who were behind iconic products such as the iPhone.

The partnership builds on a 23% stake in io that OpenAI purchased at the end of last year and comes with what Bloomberg describes as 55 hardware engineers, software developers, and manufacturing experts, plus a cast of accomplished designers.

Ive had this to say about the purportedly novel products he and OpenAI CEO Sam Altman are planning:

“People have an appetite for something new, which is a reflection on a sort of an unease with where we currently are,” Ive said, referring to products available today. Ive and Altman’s first devices are slated to debut in 2026.

Bloomberg also notes that Ive and his team of designers will be taking over all design at OpenAI, including software design like ChatGPT.

For now, the products OpenAI is working on remain a mystery, but given the purchase price and io’s willingness to take its first steps into the spotlight, I expect we’ll be hearing more about this historic collaboration in the months to come.