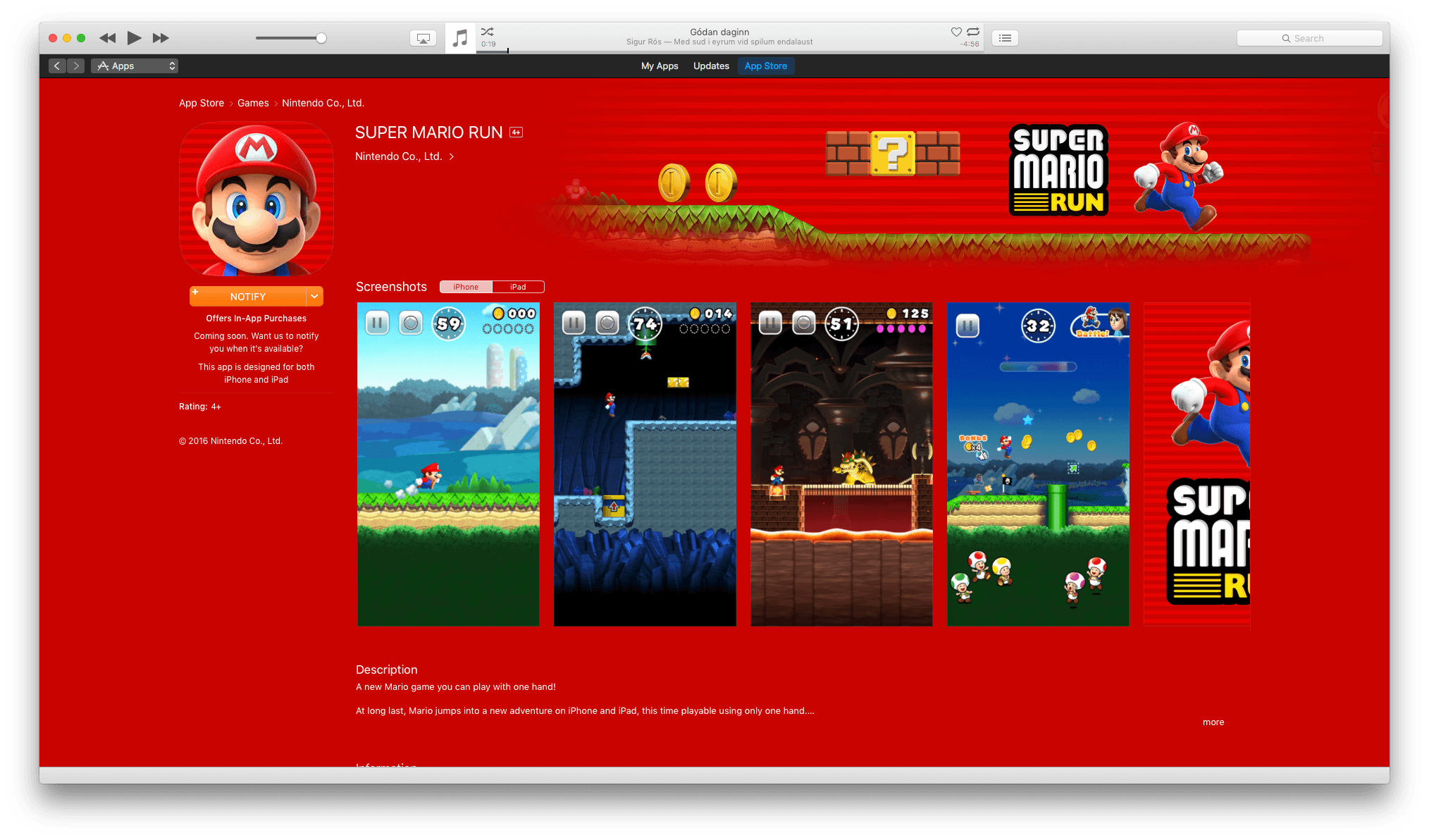

The Super Mario Run marketing blitz has begun. With the launch of Super Mario Run on iOS just one week away, Shigeru Miyamoto was interviewed by The Verge and BuzzFeed News. The creator of Mario spoke at length with both publications about the game, Nintendo’s goals for it, and how it was made.

Miyamoto discussed the thought process behind Super Mario Run’s gameplay with The Verge:

“We felt that by having this simple tap interaction to make Mario jump, we’d be able to make a game that the broadest audience of people could play.”

Nintendo’s strategy to expand its audience extends beyond gameplay, though, as Miyamoto explained to BuzzFeed News:

“Kids are playing on devices that they’re getting from their parents when their parents are upgrading,” Miyamoto said. “We wanted to take an approach of how can we bring Nintendo IP to smart devices and give kids the opportunity to interact with our characters and our games.”

It’s a strategy that makes a lot of sense given the dominance of smartphones and the rise of casual gaming.

Nintendo’s goal of making Super Mario Run a one-handed game necessitated designing it for portrait mode, which opened up new opportunities for Nintendo’s creative team. Miyamoto told The Verge:

“Once we did start to focus on the vertical gameplay and one-handed play, we were surprised at how much having that vertical space in a Mario game could add to the verticality of the game itself and how that added a new element of fun to Mario,” Miyamoto explains. “They’re all brand new levels that we created for this game, but because of the vertical orientation, it gave us a lot of new ideas for how to stretch the game vertically. I think it’s been maybe since the Ice Climbers days that we’ve had a game where you’re trying to climb a tower.”

Miyamoto also told BuzzFeed News that the idea of creating an “endless runner” style Mario game was inspired by fans who do speed runs through Mario and other games. You may have seen videos of speed runs; there is no shortage of them on YouTube. BuzzFeed explains:

Watching online videos of these gamers’ astounding speed runs and other feats of gaming skill, Nintendo employees noticed that the gamers never let up on the D-Pad. Mario always kept running, and all of the skill came down to the incredible precision of the jumping. What if, the Nintendo braintrust reasoned, all players could have that experience?

In addition, Nintendo executive Reggie Fils-Aimé and Shigeru Miyamoto paid a visit to The Tonight Show Starring Jimmy Fallon last night, demoing Super Mario Run and the Nintendo Switch console that is slated for release in March. If anyone wasn’t sure before, there is no doubt that Jimmy Fallon is a huge Nintendo fan and geek. This video is wonderful: