Brian Chen, Nico Grant, and Karen Weise of The New York Times set out to explain why voice assistants like Siri, Alexa, and Google Assistant seem primitive compared with ChatGPT. According to former Apple, Amazon, and Google engineers and employees, the difference comes down to the approach the companies took with their assistants:

The assistants and the chatbots are based on different flavors of A.I. Chatbots are powered by what are known as large language models, which are systems trained to recognize and generate text based on enormous data sets scraped off the web. They can then suggest words to complete a sentence.

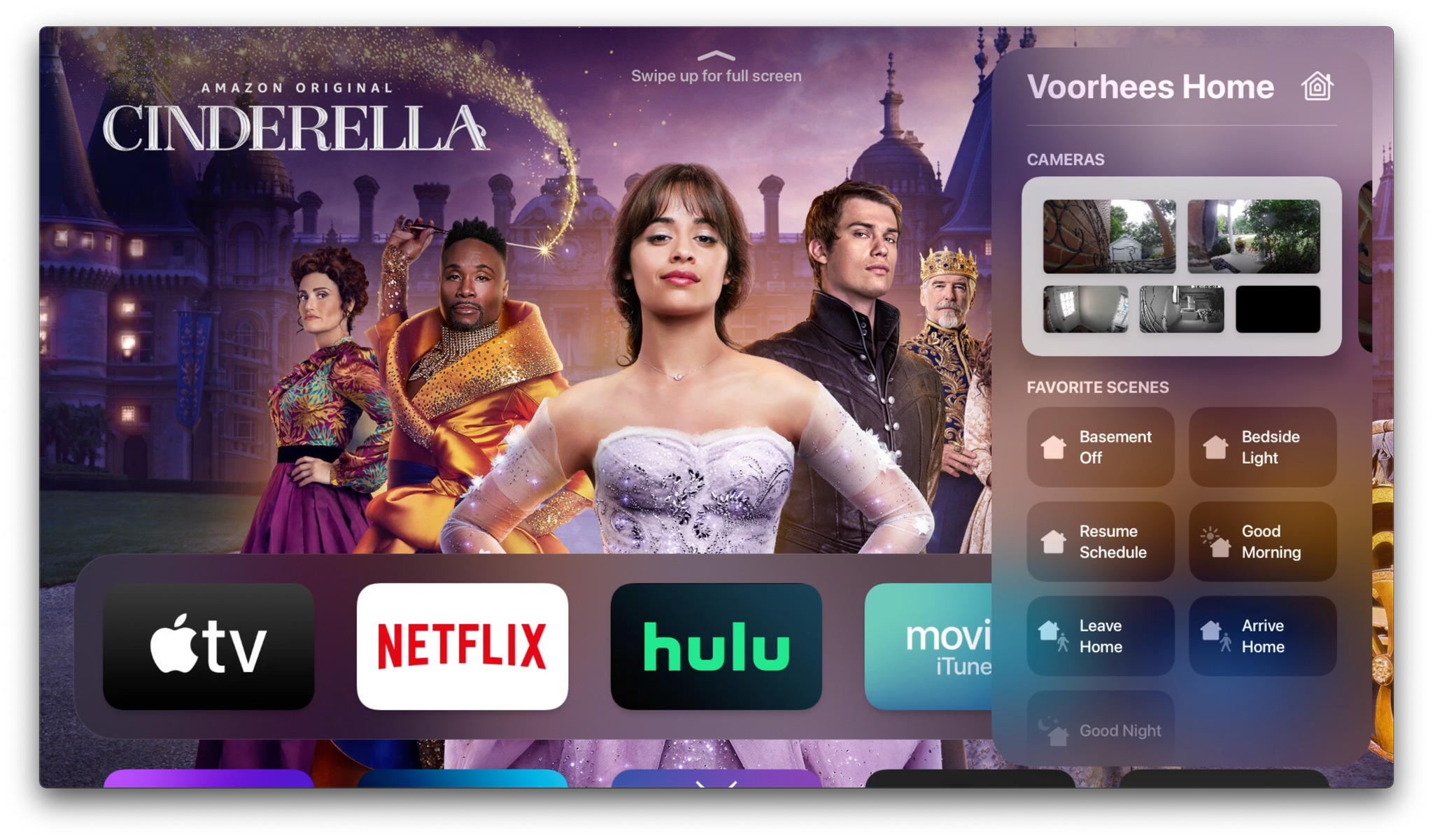

In contrast, Siri, Alexa and Google Assistant are essentially what are known as command-and-control systems. These can understand a finite list of questions and requests like “What’s the weather in New York City?” or “Turn on the bedroom lights.” If a user asks the virtual assistant to do something that is not in its code, the bot simply says it can’t help.

In the case of Siri, former Apple engineer John Burkey said the company’s assistant was designed as a monolithic database that took weeks to update with new capabilities. Burkey left Apple in 2016 after less than two years at the company, according to his LinkedIn profile. According to other, unnamed sources, Apple has been testing AI based on large language models in the years since Burkey’s departure:

> At Apple’s headquarters last month, the company held its annual A.I. summit, an internal event for employees to learn about its large language model and other A.I. tools, two people who were briefed on the program said. Many engineers, including members of the Siri team, have been testing language-generating concepts every week, the people said.

It’s not surprising that sources have told The New York Times that Apple is researching the latest advances in artificial intelligence. All you have to do is visit the company’s Machine Learning Research website to see that. But declaring a winner in ‘the AI race’ based on the architecture voice assistants started with, compared with today’s chatbots, is a bit facile. Voice assistants may be primitive next to chatbots, but it’s far too early to count Apple, Google, or Amazon out, or, for that matter, to declare the race over.