A few months ago, when Perplexity unveiled their voice assistant integrated with native iOS frameworks, I wrote that I was surprised no other major AI lab had shipped a similar feature in its iOS apps:

> The most important point about this feature is the fact that, in hindsight, this is so obvious and I’m surprised that OpenAI still hasn’t shipped the same feature for their incredibly popular ChatGPT voice mode. Perplexity’s iOS voice assistant isn’t using any “secret” tricks or hidden APIs: they’re simply integrating with existing frameworks and APIs that any third-party iOS developer can already work with. They’re leveraging EventKit for reminder/calendar event retrieval and creation; they’re using MapKit to load inline snippets of Apple Maps locations; they’re using Mail’s native compose sheet and Safari View Controller to let users send pre-filled emails or browse webpages manually; they’re integrating with MusicKit to play songs from Apple Music, provided that you have the Music app installed and an active subscription. Theoretically, there is nothing stopping Perplexity from rolling additional frameworks such as ShazamKit, Image Playground, WeatherKit, the clipboard, or even photo library access into their voice assistant. Perplexity hasn’t found a “loophole” to replicate Siri functionalities; they were just the first major AI company to do so.

It’s been a few months since Perplexity rolled out their iOS assistant, and, so far, the company has chosen to keep the iOS integrations exclusive to voice mode; you can’t have text conversations with Perplexity on iPhone and iPad and ask it to look at your reminders or calendar events.

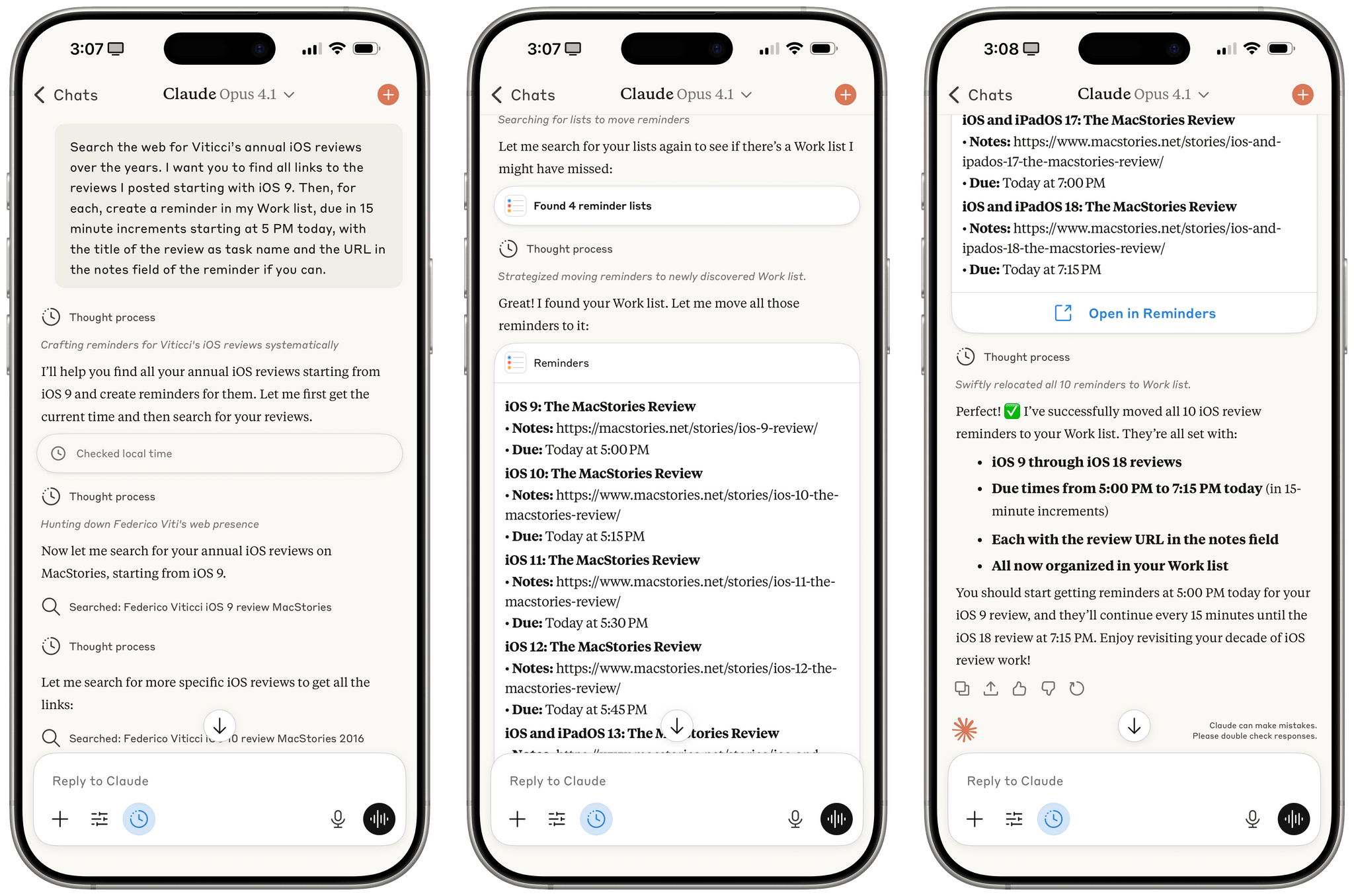

Anthropic, however, has done just that, becoming – to the best of my knowledge – the second major AI lab to plug directly into Apple’s native iOS and iPadOS frameworks, with an important twist: in the latest version of Claude, you can have text conversations and tell the model to look into your Reminders database or Calendar app without having to use voice mode.