Earlier this week, Apple engineers visited the Texas School for the Blind and Visually Impaired, where they led a programming course from the company’s Everyone Can Code curriculum. According to the Austin American-Statesman’s technology blog, Open Source, the class was the first such session led by Apple for blind and low-vision students.

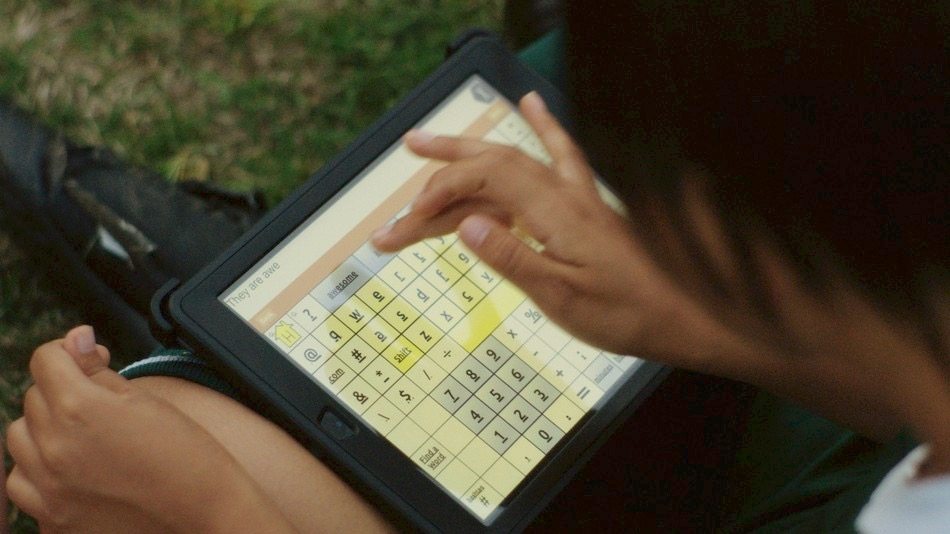

With the assistance of VoiceOver, the students completed assignments in Apple’s Swift Playgrounds iPad app. They also got a chance to go outside and fly Parrot drones using Swift Playgrounds. Vicki Davidson, a technology teacher at the school, told Open Source:

“We see this as a way to get them interested in coding and realize this could open job opportunities. Apple has opened up a whole new world for kids by giving them instant access to information and research, and now coding.”

Apple’s director of accessibility, Sarah Herrlinger, who will participate in a session on Innovations in Accessibility at South by Southwest on March 15, said:

“When we said everyone should be able to code, we really meant everyone. Hopefully these kids will leave this session and continue coding for a long time. Maybe it can inspire where their careers can go.”

Swift Playgrounds and Apple’s Everyone Can Code curriculum have grown at a remarkable rate and are fantastic resources for students, teachers, and parents. However, it’s Apple’s long-standing commitment to accessibility across all of its products that helps ensure that those resources are available to as many students as possible.