Good post by Parker Ortolani, analyzing the pros and cons of a potential Perplexity acquisition by Apple:

> According to Mark Gurman, Apple executives are in the early stages of mulling an acquisition of Perplexity. My initial reaction was “that wouldn’t work.” But I’ve taken some time to think through what it could look like if it were to come to fruition.

He gets to the core of the issue with this acquisition:

> At the end of the day, Apple needs a technology company, not another product company. Perplexity is really good at, for lack of a better word, forking models. But their true speciality is in making great products, they’re amazing at packaging this technology. The reality is though, that Apple already knows how to do that. Of course, only if they can get out of their own way. That very issue is why I’m unsure the two companies would fit together. A company like Anthropic, a foundational AI lab that develops models from scratch is what Apple could stand to benefit from. That’s something that doesn’t just put them on more equal footing with Google, it’s something that also puts them on equal footing with OpenAI which is arguably the real threat.

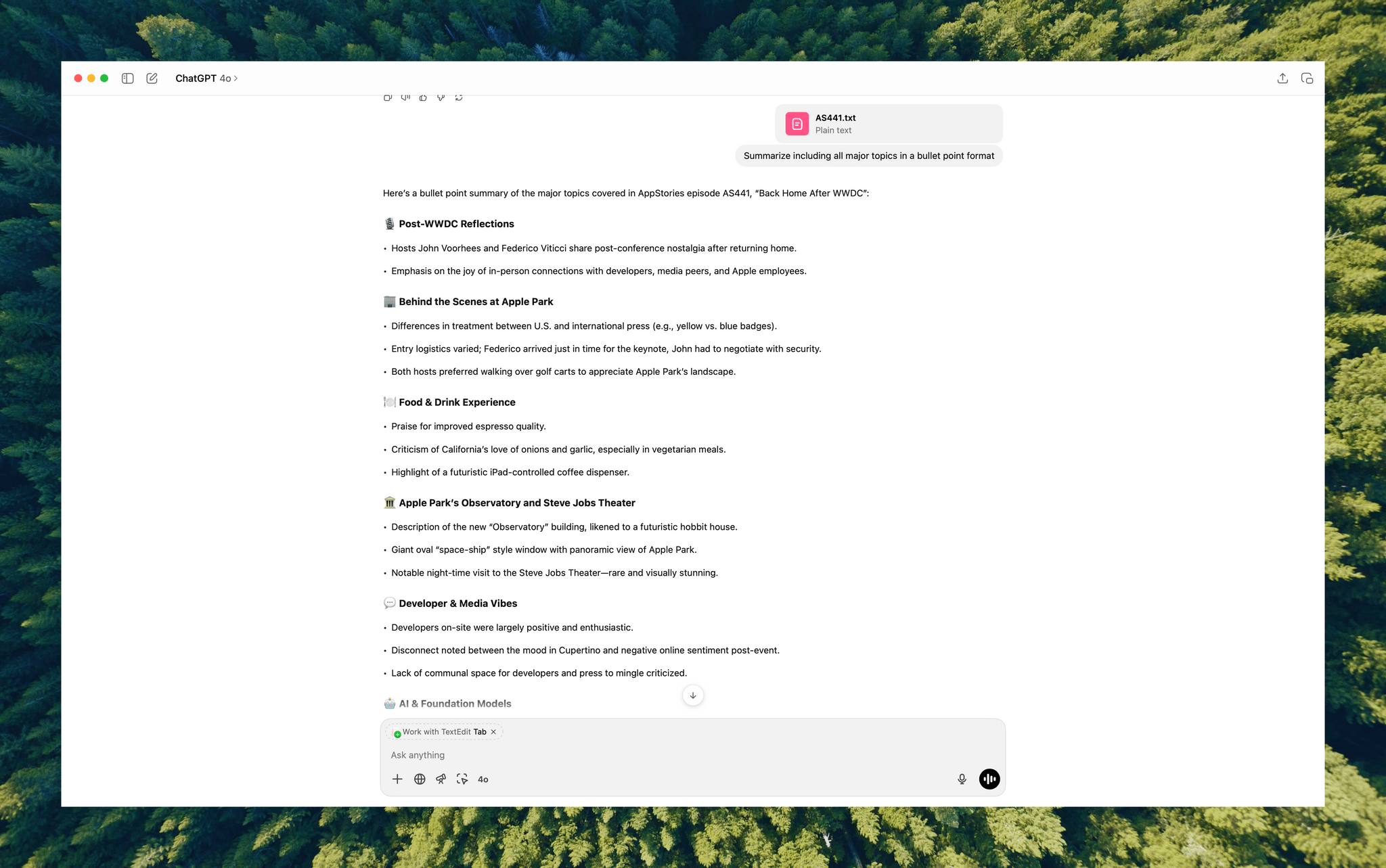

While I’m not the biggest fan of Perplexity’s web scraping policies and its CEO’s remarks, it’s undeniable that the company has built a series of good consumer products, is fast at integrating the latest models from major AI vendors, and has even dipped its toes in the custom model waters (with Sonar, an in-house model based on Llama). At first glance, I would agree with Ortolani and say that Apple would need Perplexity’s search engine and LLM integration talent more than the Perplexity app itself. So far, Apple has only integrated ChatGPT into its operating systems, whereas Perplexity supports all the major LLMs currently in existence. If Apple wants to make the best computers for AI rather than being a bleeding-edge AI provider itself…well, that’s pretty much aligned with Perplexity’s software-focused goals.

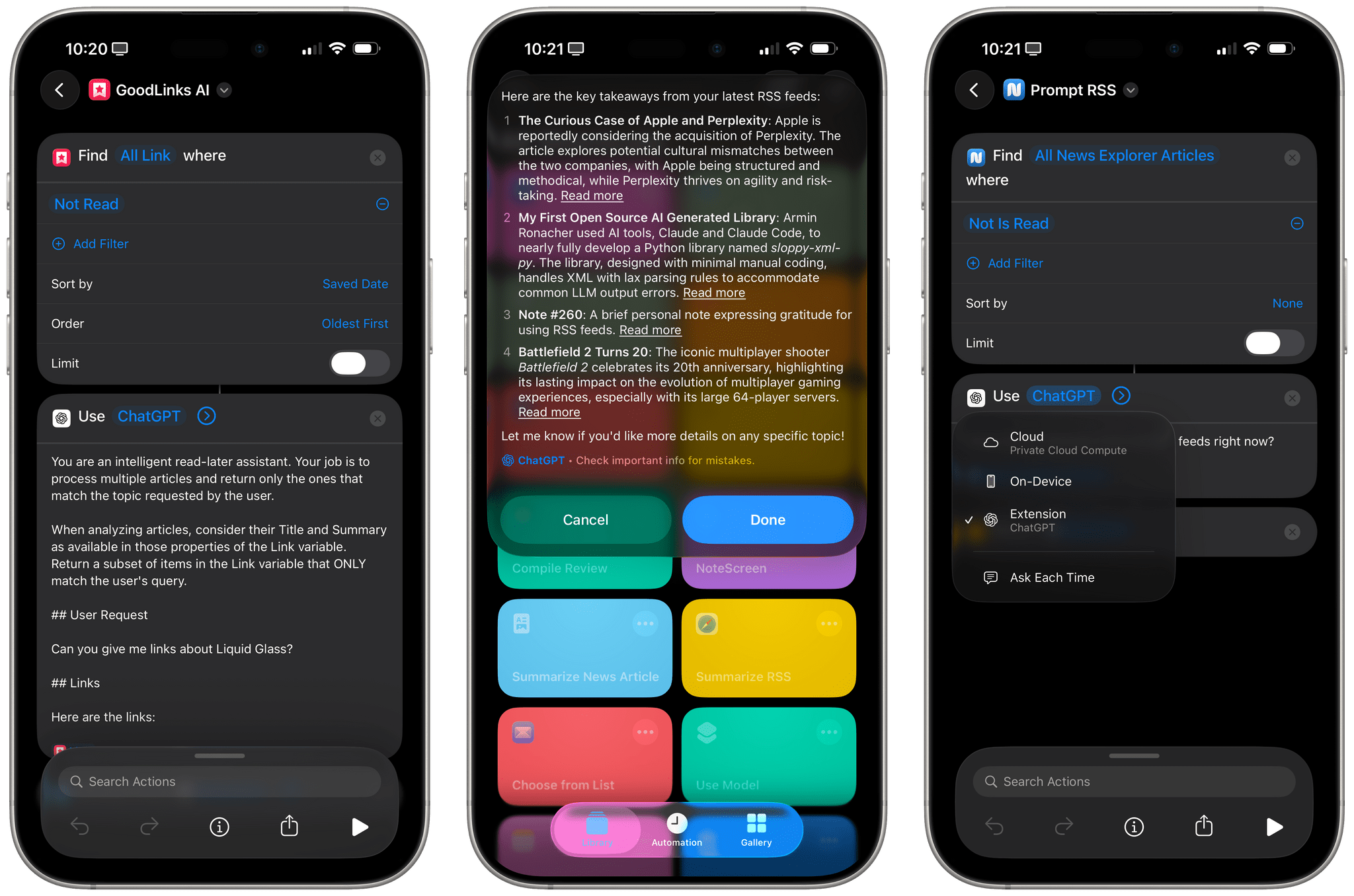

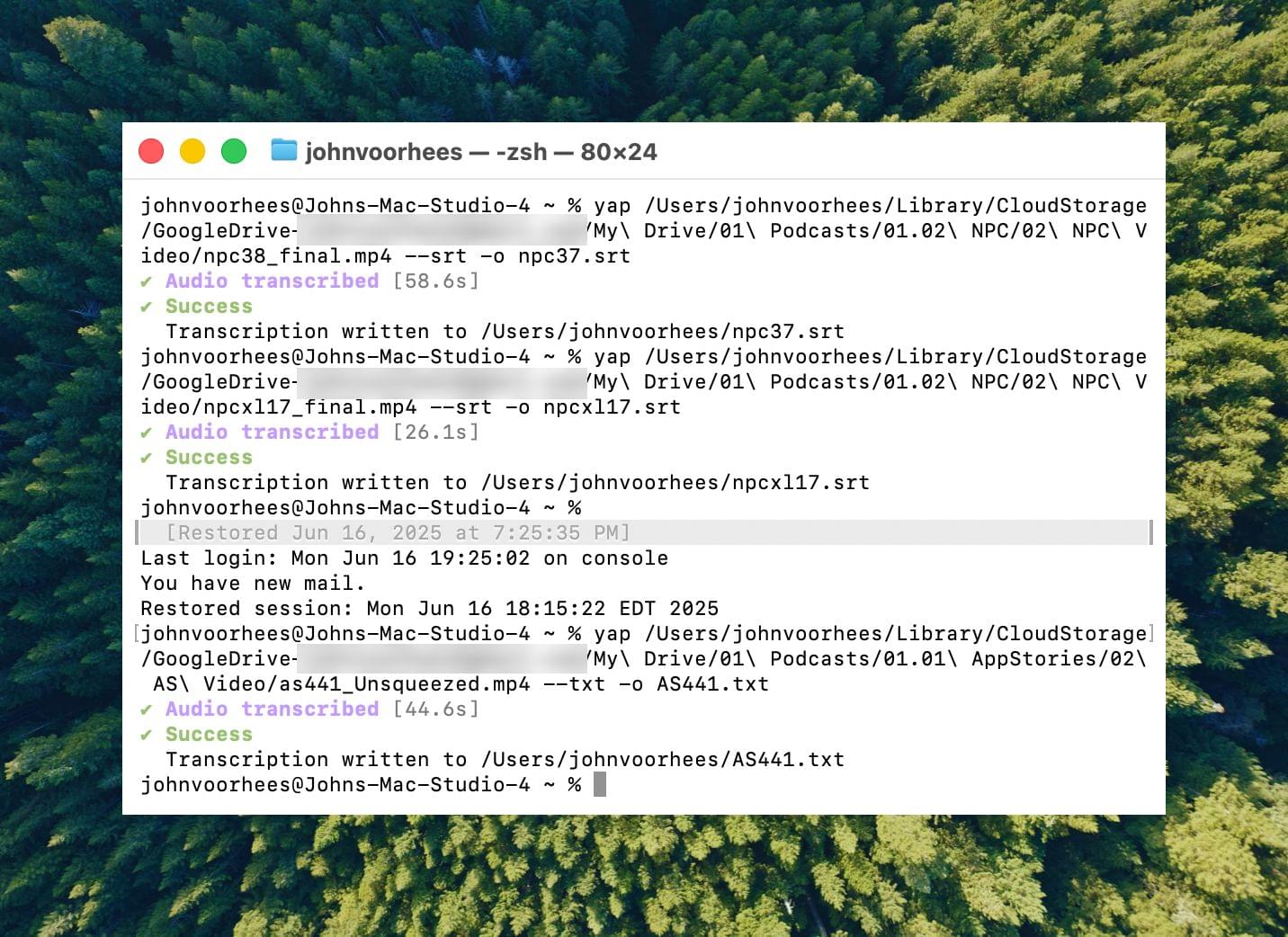

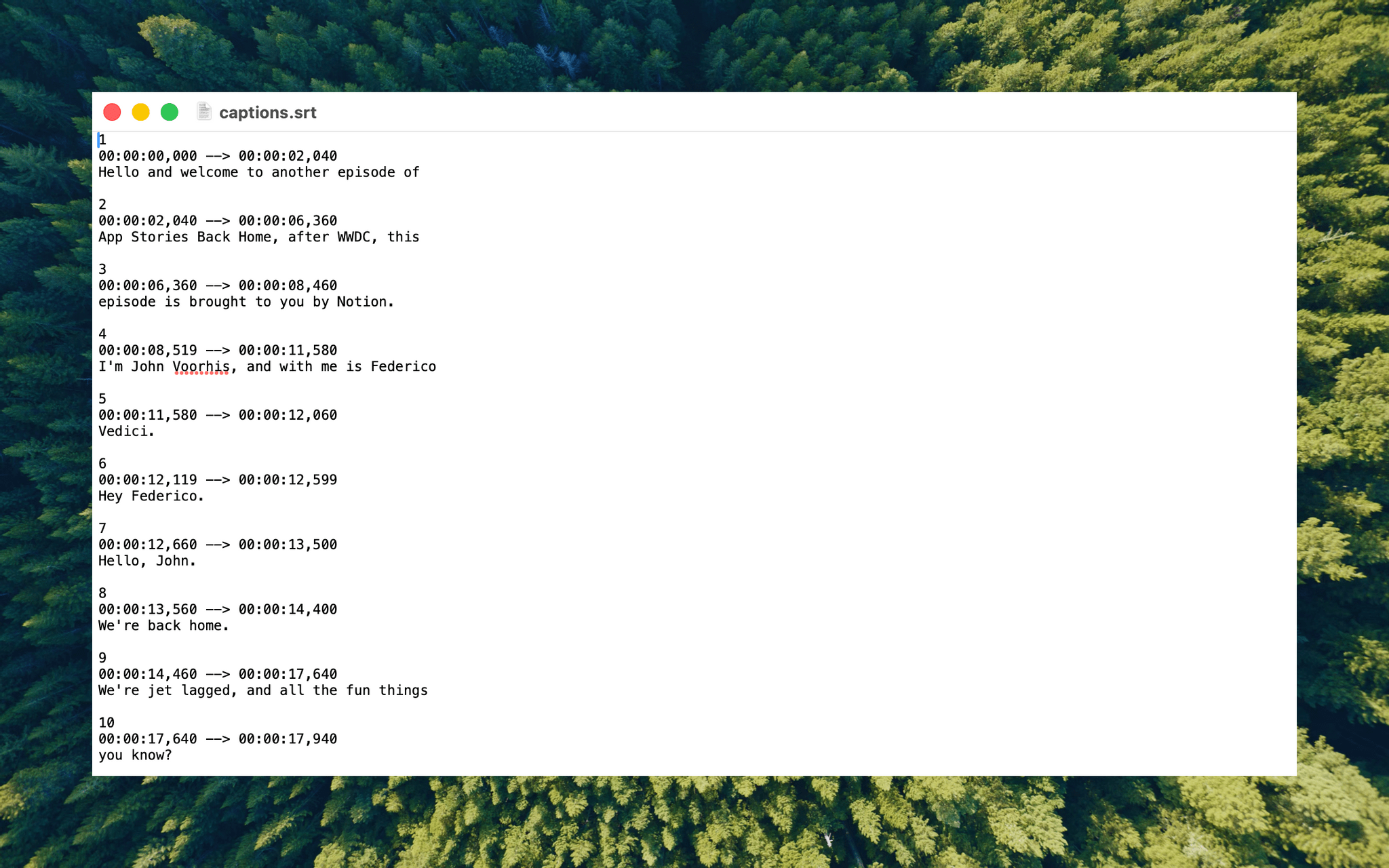

However, I wonder if Perplexity’s work on its iOS voice assistant may have also played a role in these rumors. As I wrote a few months ago, Perplexity shipped a solid demo of what a deep LLM integration with core iOS services and frameworks could look like. What could Perplexity’s tech do when integrated with Siri, Spotlight, Safari, Music, or even third-party app entities in Shortcuts?

Or, look at it this way: if you’re Apple, would you spend $14 billion to buy an app and rebrand it as “Siri That Works” next year?