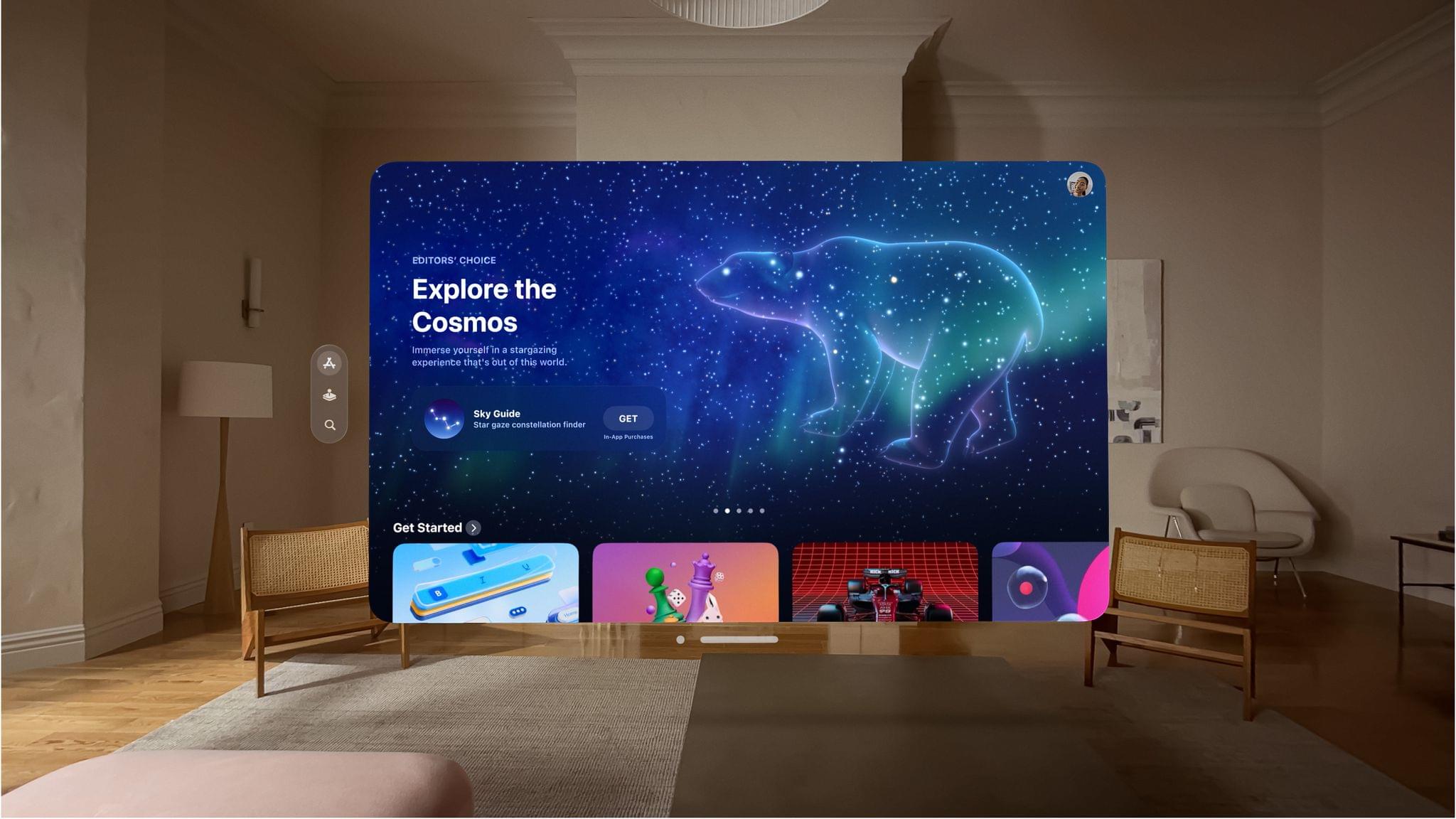

NVIDIA is in the midst of its 2024 GTC AI conference, and among the many posts the company published yesterday was a bit of news about the Apple Vision Pro:

> Announced today at NVIDIA GTC, a new software framework built on Omniverse Cloud APIs, or application programming interfaces, lets developers easily send their Universal Scene Description (OpenUSD) industrial scenes from their content creation applications to the NVIDIA Graphics Delivery Network (GDN), a global network of graphics-ready data centers that can stream advanced 3D experiences to Apple Vision Pro.

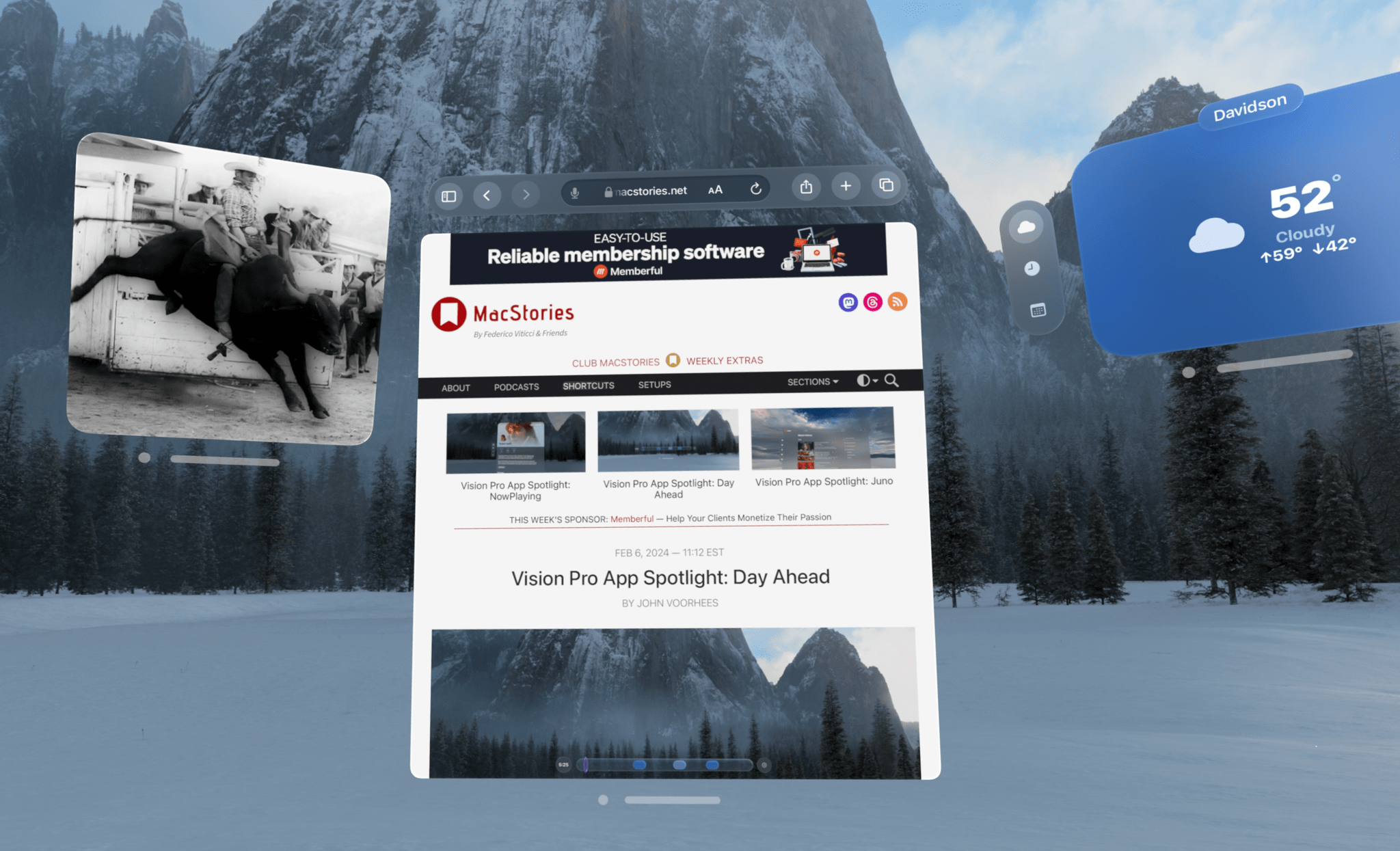

That’s a bit of an NVIDIA word salad, but what they’re saying is that developers will be able to take immersive scenes built using OpenUSD, an open standard for creating 3D scenes, render them remotely, and deliver them to the Apple Vision Pro over Wi-Fi.

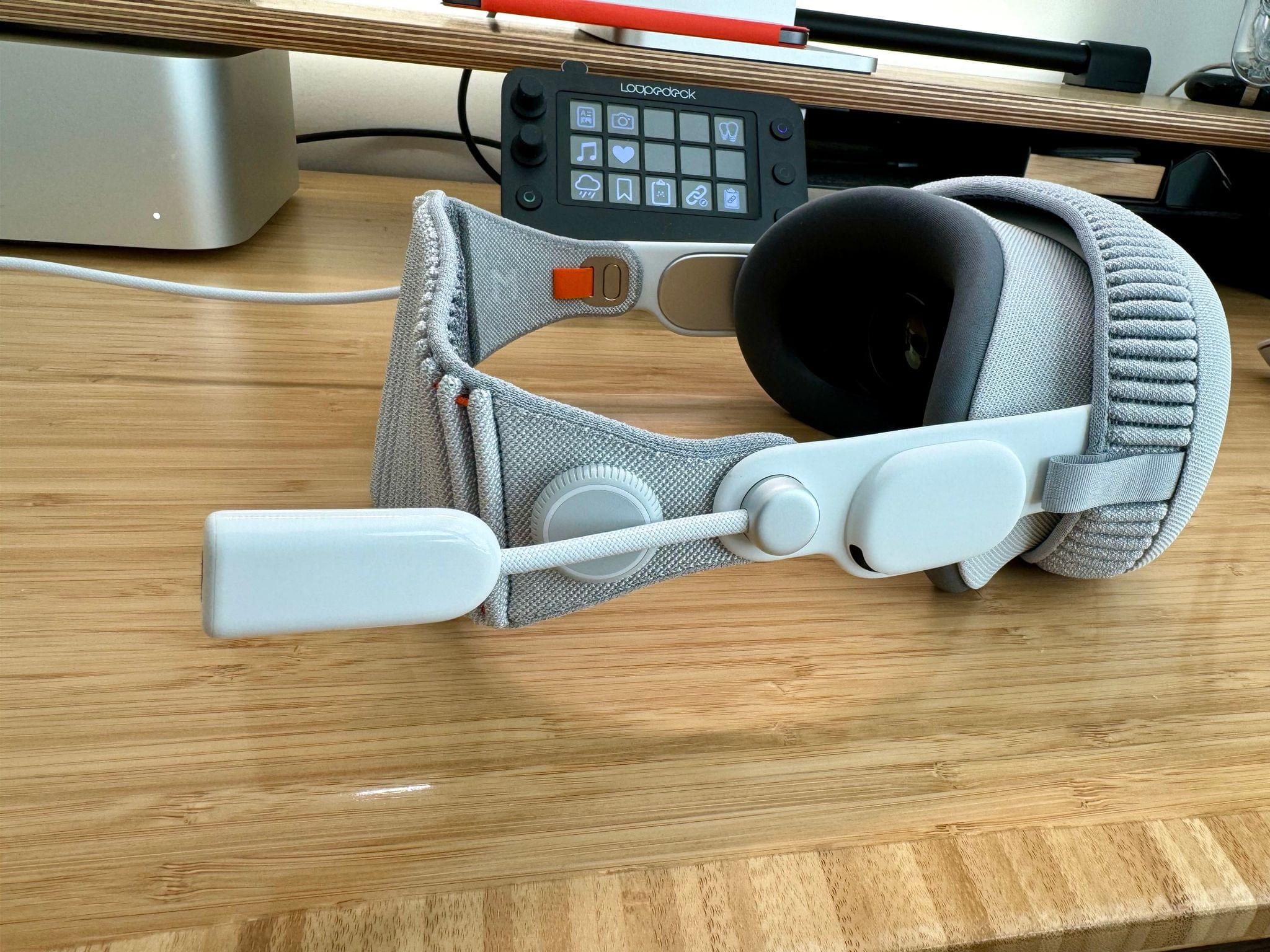

What caught my eye about this announcement is the remote rendering and Wi-Fi delivery part. NVIDIA already uses its data centers to stream high-resolution games via its GeForce NOW service. I’ve tried it with the Vision Pro, and it works really well.

> The workflow also introduces hybrid rendering, a groundbreaking technique that combines local and remote rendering on the device. Users can render fully interactive experiences in a single application from Apple’s native SwiftUI and Reality Kit with the Omniverse RTX Renderer streaming from GDN.

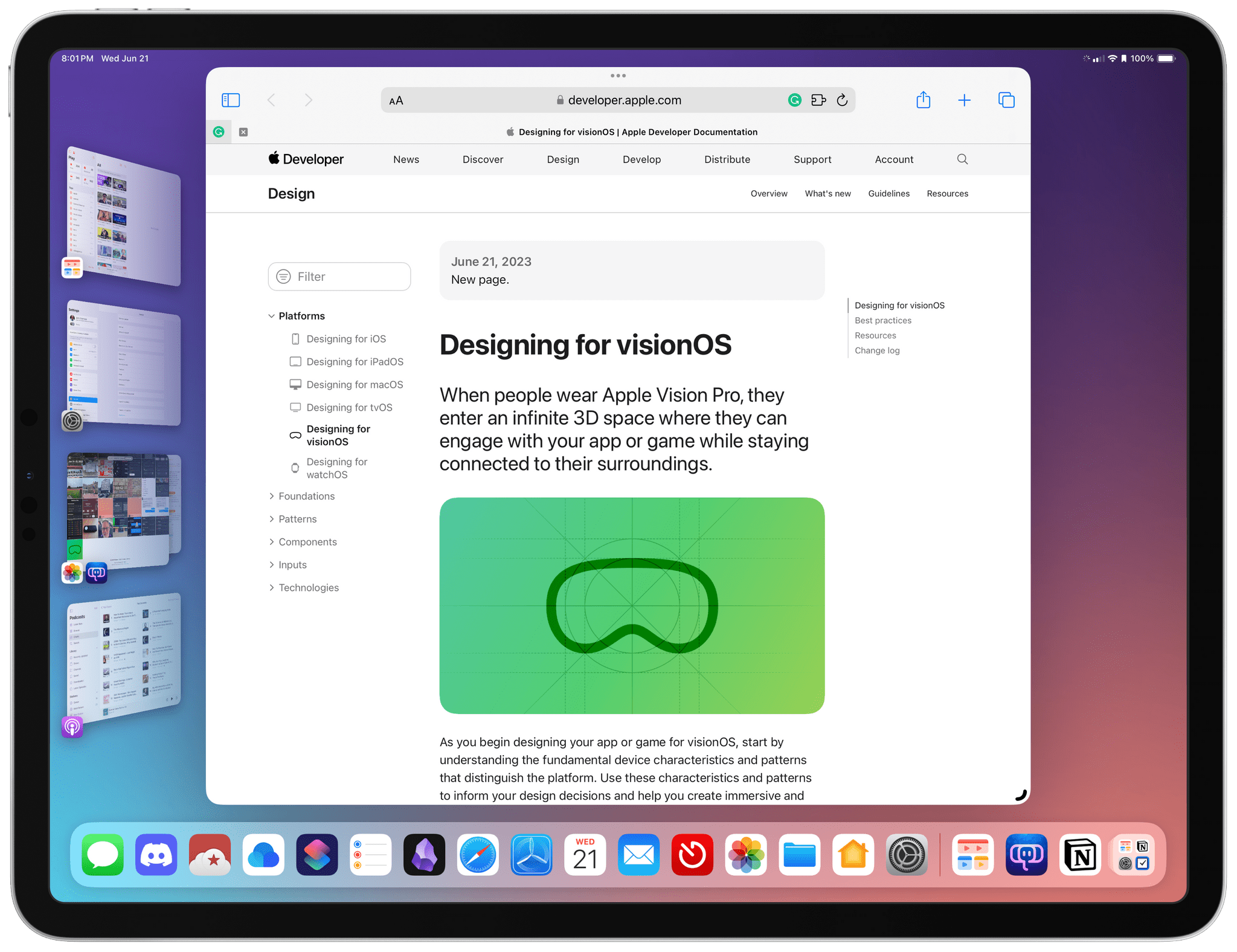

That means visionOS developers will be able to offload the rendering of an immersive environment to NVIDIA’s servers while adding to the scene locally with Apple’s SwiftUI and RealityKit frameworks, which Apple and NVIDIA expect will create new opportunities for customers:

> “The breakthrough ultra-high-resolution displays of Apple Vision Pro, combined with photorealistic rendering of OpenUSD content streamed from NVIDIA accelerated computing, unlocks an incredible opportunity for the advancement of immersive experiences,” said Mike Rockwell, vice president of the Vision Products Group at Apple. “Spatial computing will redefine how designers and developers build captivating digital content, driving a new era of creativity and engagement.”

> “Apple Vision Pro is the first untethered device which allows for enterprise customers to realize their work without compromise,” said Rev Lebaredian, vice president of simulation at NVIDIA. “We look forward to our customers having access to these amazing tools.”

The press release frames this as a technology for enterprise users, but given NVIDIA’s importance to the gaming industry, I wouldn’t be surprised to see the new frameworks employed there too. Also notable is the quote from Apple’s Mike Rockwell, given the two companies’ historically chilly relationship.