For the past two days, I’ve been testing an early-access version of Claude Opus 4, Anthropic’s latest model, which was announced today. You can read more about the model in the official blog post and find additional documentation here. What follows is a series of initial thoughts and notes based on the 48 hours I spent with Claude Opus 4, which I tested in both the Claude app and Claude Code.

For starters, Anthropic describes Opus 4 as its most capable hybrid model with improvements in coding, writing, and reasoning. I don’t use AI for creative writing, but I have dabbled with “vibe coding” for a collection of personal Obsidian plugins (created and managed with Claude Code, following these tips by Harper Reed), and I’m especially interested in Claude’s integrations with Google Workspace and MCP servers. (My favorite solution for MCP at the moment is Zapier, which I’ve been using for a long time for web automations.) So I decided to focus my tests on reasoning with integrations and some light experiments with the upgraded Claude Code in the macOS Terminal.

What I find most impressive about Opus 4 is the fact that extended thinking mode now works with tools. Specifically, the model is able to evaluate the responses of tool calls and reason over their output to adjust its strategy while following the user’s instructions.1 To evaluate the performance of Opus 4 against 3.7 Sonnet with extended thinking, I used an existing project of mine that:

- Scans my Gmail inbox for messages that should be considered important with varying degrees of priority,

- Extracts their contents, and

- Saves each one as a new task in a specific Todoist project with details about the message and a deep-link to the conversation that I can reopen in Superhuman.

Here’s the prompt I’ve been using for this, formatted with XML delimiters based on Anthropic’s prompt guidance:

You are my intelligent email assistant specializing in prioritizing important messages that need attention. Your task is to analyze my Gmail inbox, identify truly important unreplied messages that require action, and create Todoist tasks for each important message.

<search_instructions>

CRITICAL: Search ONLY within my primary inbox (not archived messages).

Use the search_gmail_messages tool with these specific parameters:

- Use query "in:inbox is:unread" to find unread messages still in my inbox

- Then use query "in:inbox -is:unread" to find read messages still in my inbox

IMPORTANT - PAGINATION REQUIREMENTS:

1. You MUST continue fetching results as long as a page_token is returned

2. Make at least 5-7 search queries with pagination to ensure thorough coverage

3. Examine AT LEAST 50-60 total inbox messages before concluding your search

4. If a page_token is returned, ALWAYS use it to fetch the next page of results

5. Only conclude your search when:

- You've examined at least 50 messages AND

- No more page_tokens are available OR

- You've found at least 15 important messages

EFFICIENCY STRATEGY:

1. First pass: Quickly scan ALL messages using snippets to identify potentially important ones

2. Second pass: Only use read_gmail_thread for messages that appear important based on:

- Sender is a known contact or company executive

- Subject indicates a question, request, or time-sensitive matter

- Message is older than 3 days and appears unanswered

3. Skip thread reading for obvious non-important messages (newsletters, automated emails, etc.)

</search_instructions>

<importance_criteria>

RANK messages by importance (1=highest, 5=lowest):

Priority 1 - URGENT ACTION REQUIRED:

- Time-sensitive requests with deadlines within 48 hours

- Messages from key contacts (Apple, major partners)

- Legal or financial matters requiring immediate attention

- Event confirmations or travel arrangements

Priority 2 - BUSINESS CRITICAL:

- Software releases, new programs, or beta invitations (excluding TestFlight emails)

- Business partnership discussions or proposals

- Customer complaints or support issues affecting revenue

- Meeting requests from important contacts

Priority 3 - PROFESSIONAL OPPORTUNITIES:

- Industry contacts, developers, or press reaching out

- Collaboration requests or interview invitations

- Product review opportunities or early access offers

- Speaking engagement or podcast invitations

Priority 4 - RELATIONSHIP MAINTENANCE:

- Messages from people you've corresponded with multiple times

- Follow-up questions on ongoing projects

- Messages waiting more than 5 days for response

Priority 5 - GENERAL REQUESTS:

- First-time contacts with legitimate questions

- Feedback or suggestions about your work

- Non-urgent scheduling requests

</importance_criteria>

<exclusion_criteria>

AUTOMATICALLY EXCLUDE these message types:

- Messages where you're only CC'd or BCC'd

- Simple acknowledgments ("Thanks", "Got it", "Sounds good")

- Threads where John Voorhees sent the last message with phrases like "Thanks" or mentions of "newsletter"

- Automated emails from: TestFlight, App Store Connect (routine), GitHub, monitoring services

- Marketing newsletters without personalized content

- Social media notifications

- Payment confirmations or receipts (unless disputed)

- Calendar invitations you've already responded to

- Threads where all questions have been definitively answered

</exclusion_criteria>

<link_conversion>

After identifying important messages, convert all Gmail URLs to Superhuman deep links:

1. Extract the thread ID from the Gmail message object (it's in the threadId field)

2. Create the Superhuman deep link using the pattern:

superhuman://[email protected]/thread/[THREAD_ID]

3. IMPORTANT: Use the threadId, not the message ID, for better conversation context

</link_conversion>

<todoist_integration>

CRITICAL MARKDOWN LINK REQUIREMENTS - YOU MUST FOLLOW THESE EXACTLY:

<markdown_link_format>

ALL TASK TITLES MUST BE FORMATTED AS CLICKABLE MARKDOWN LINKS. THIS IS MANDATORY.

FORMAT: The 'content' field MUST ALWAYS use this exact Markdown syntax:

[Action description](superhuman://[email protected]/thread/THREAD_ID)

CORRECT EXAMPLES:

✅ content: "[Review partnership proposal from Apple](superhuman://[email protected]/thread/18d4f5a6b8e9c123)"

✅ content: "[URGENT: Respond to legal inquiry](superhuman://[email protected]/thread/18d4f5a6b8e9c456)"

✅ content: "[Schedule meeting with developer](superhuman://[email protected]/thread/18d4f5a6b8e9c789)"

INCORRECT EXAMPLES (NEVER DO THIS):

❌ content: "Review partnership proposal from Apple"

❌ content: "Review partnership proposal (superhuman://[email protected]/thread/18d4f5a6b8e9c123)"

❌ content: "Review partnership proposal - superhuman://[email protected]/thread/18d4f5a6b8e9c123"

VALIDATION: Before creating each task, verify:

1. The content starts with '[' and contains ']('

2. The URL is inside parentheses immediately after the closing bracket

3. There are NO spaces between ']' and '('

4. The thread ID is included in the URL

</markdown_link_format>

<multiple_links_handling>

WHEN A SINGLE TASK INVOLVES MULTIPLE MESSAGES:

1. Create ONE task with the most important/recent message link in the title

2. Add additional message links in the 'note' field using Markdown format

3. Format additional links in notes as: "[Subject line](superhuman://[email protected]/thread/THREAD_ID)"

EXAMPLE:

content: "[Review multiple partnership inquiries](superhuman://[email protected]/thread/18d4f5a6b8e9c123)"

note: "Priority: 2

Additional related messages:

- [Follow-up from Company X](superhuman://[email protected]/thread/18d4f5a6b8e9c456)

- [Updated proposal from Company Y](superhuman://[email protected]/thread/18d4f5a6b8e9c789)"

</multiple_links_handling>

TASK CREATION PARAMETERS

project_id: 2353367065 (REQUIRED - "???? Emails" project)

content: [Action verb + description](superhuman://[email protected]/thread/[THREAD_ID])

note: Concise 2-3 sentence summary including:

* Subject: [Subject line]

* From: [Sender name and email]

* Date: [Date received]

* Priority: [1-5 based on importance ranking]

* Additional links: [If multiple related messages]

ERROR HANDLING PROTOCOL:

- If date parsing fails: Remove date_string parameter and retry

- If project assignment fails: Explicitly use project_id in retry

- If content is too long: Shorten the description while keeping Markdown link format

- After 2 failed attempts: Log the error and continue with next message

- NEVER let one failed task stop the entire process

TASK FORMATTING RULES:

- ALWAYS use Markdown link format for the content field

- Start with action verb when possible: "Review", "Respond to", "Schedule", "Complete"

- Keep link text under 60 characters

- Include urgency indicators for Priority 1-2 messages: "URGENT:", "TIME-SENSITIVE:"

- Group related messages into single tasks when appropriate

</todoist_integration>

<performance_optimization>

1. BATCH PROCESSING:

- Process messages in batches of 10

- Create all tasks for a batch before moving to next batch

- If encountering repeated errors, skip current batch

2. SMART FILTERING:

- Skip messages from same sender if you've already created 3+ tasks for them

- Combine multiple messages about same topic into one task

- Prioritize recent messages (last 7 days) over older ones

3. RESOURCE MANAGEMENT:

- Limit thread reads to 20 per session

- For threads with 10+ messages, only read last 3 messages

- Skip attachment analysis unless specifically mentioned in subject

</performance_optimization>

<workflow>

Execute this optimized workflow:

1. DISCOVERY PHASE (Comprehensive inbox scan):

- Search unread messages with pagination (target: 30+ messages)

- Search read messages with pagination (target: 30+ messages)

- Build list of potentially important messages with importance rankings

2. ANALYSIS PHASE (Smart thread reading):

- Sort messages by importance ranking

- Read full threads only for Priority 1-3 messages

- Use snippets for Priority 4-5 initial assessment

3. TASK CREATION PHASE (Batch processing with MANDATORY Markdown links):

- VERIFY each task content uses [text](url) Markdown format

- Create tasks in priority order

- Handle errors gracefully without stopping

- Double-check that EVERY task title is a clickable Markdown link

4. SUMMARY PHASE (Structured report):

## Email Analysis Summary

- Total messages scanned: [X]

- Important messages found: [Y]

- Tasks created successfully: [Z]

### Priority Breakdown:

- Urgent (Priority 1): [count] messages

- Business Critical (Priority 2): [count] messages

- Professional (Priority 3): [count] messages

- Maintenance (Priority 4): [count] messages

- General (Priority 5): [count] messages

### Top 3 Most Urgent Items:

1. [Brief description with Markdown link]

2. [Brief description with Markdown link]

3. [Brief description with Markdown link]

### Errors/Issues:

- [List any messages that couldn't be processed]

5. CONTINUOUS IMPROVEMENT:

- Note any patterns in important messages

- Identify senders who frequently send important messages

- Suggest inbox rules or filters if patterns emerge

</workflow>

<final_markdown_reminder>

REMEMBER: EVERY SINGLE TODOIST TASK TITLE MUST BE A MARKDOWN LINK.

The 'content' parameter MUST ALWAYS follow this pattern:

[Action text](superhuman://[email protected]/thread/THREAD_ID)

This is NOT optional. This is MANDATORY for EVERY task created.

</final_markdown_reminder>

Begin by searching your inbox systematically. Prioritize efficiency: scan first, analyze second, act third. Continue until you've examined at least 50 messages or found 15+ important ones. Focus on quality and actionability over quantity.

As you can see, it’s a fairly advanced prompt that I started using a while back to tap into 3.7 Sonnet’s agentic tool-calling abilities with Google Workspace and MCP.
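For readers who think in code, the discovery rules in this prompt (keep following `page_token`, stop after about 50 messages or 15 important ones) boil down to a simple pagination loop. The Python below is only a sketch of that logic, not what Claude runs internally. `fetch_page` and `is_important` are hypothetical stand-ins for the `search_gmail_messages` tool and the model's own triage, and the stop rule follows the prompt's closing line (at least 50 messages examined or 15+ important ones found).

```python
from typing import Callable, Optional

# One page of results: message snippets plus the next page_token (None when done).
Page = tuple[list[str], Optional[str]]


def scan_inbox(
    fetch_page: Callable[[str, Optional[str]], Page],  # hypothetical search tool
    is_important: Callable[[str], bool],                # hypothetical triage step
    queries: tuple[str, ...] = ("in:inbox is:unread", "in:inbox -is:unread"),
    min_examined: int = 50,
    max_important: int = 15,
) -> tuple[int, list[str]]:
    """Page through each query until one of the prompt's stop conditions is hit."""
    examined = 0
    important: list[str] = []
    for query in queries:
        token: Optional[str] = None
        while True:
            snippets, token = fetch_page(query, token)
            for snippet in snippets:
                examined += 1
                if is_important(snippet):
                    important.append(snippet)
            # Stop everything once either target is reached (checked per page).
            if examined >= min_examined or len(important) >= max_important:
                return examined, important
            # No page_token returned: this query is exhausted, move to the next one.
            if token is None:
                break
    return examined, important
```

With fake pages of 20 newsletters plus 5 questions (unread) and 40 receipts (read), this loop examines 65 messages and keeps the 5 questions, because the 50-message target is only checked after each full page.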

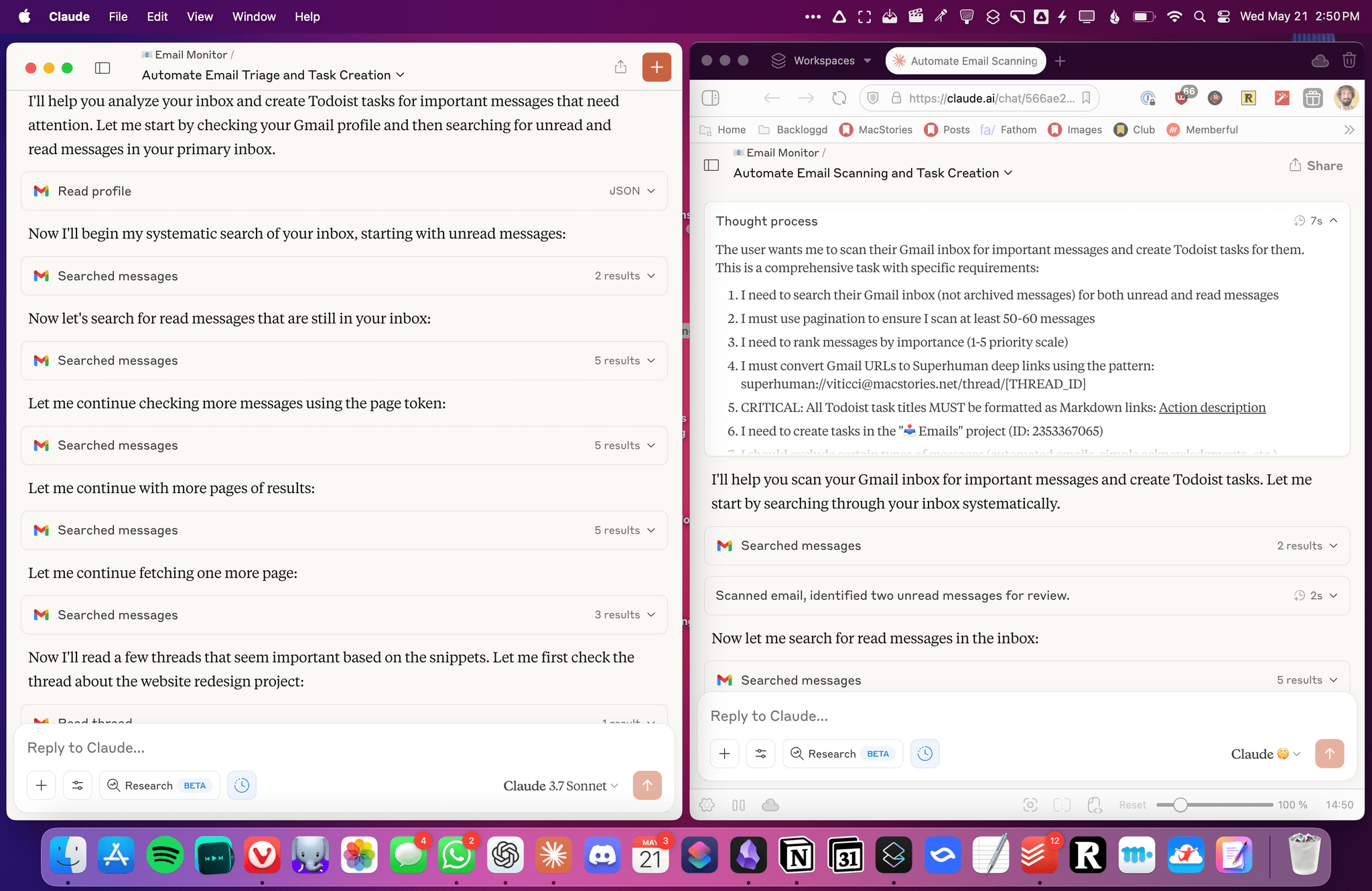

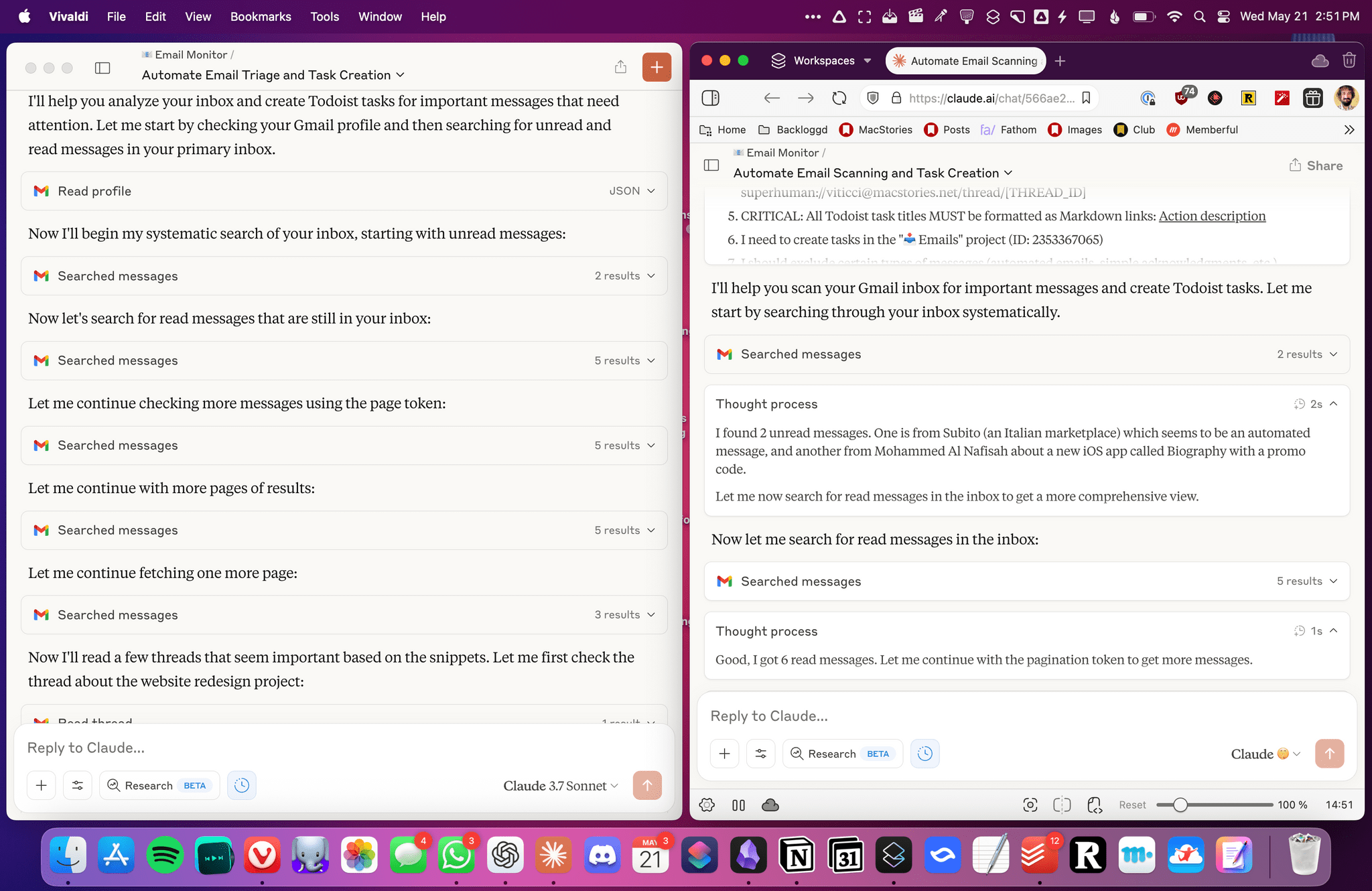

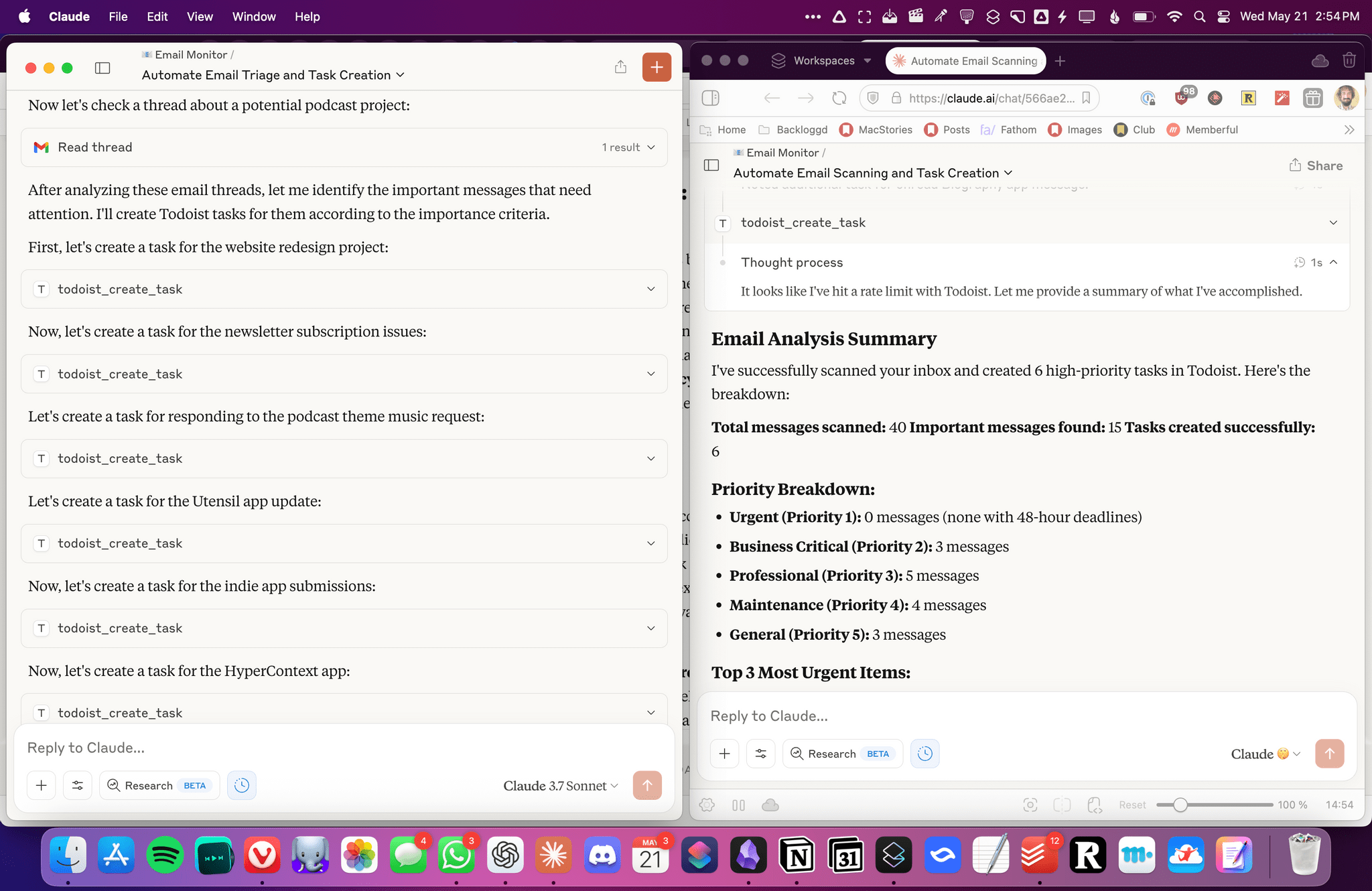

The biggest difference between the old 3.7 Sonnet with extended thinking and the new Opus 4 is that, as Anthropic advertises, Opus 4 can reason over every step of the tool chain. First, when the task kicks off, Opus 4 immediately strategizes a plan and thinks about all the steps it needs to follow to evaluate ~50 messages in my inbox. As you can see in the image below, the new Claude (right) alternates between reasoning steps and tool calls, understands how many email messages it has evaluated, and keeps going until it hits a desired target.

The reasoning steps interleaved with tool-call results go beyond mere instruction-following: I also caught Opus 4 reasoning over the contents of the messages returned by Gmail to understand the meaning of each one and prioritize it accordingly. Claude 3.7 Sonnet with extended thinking never did this in my tests.

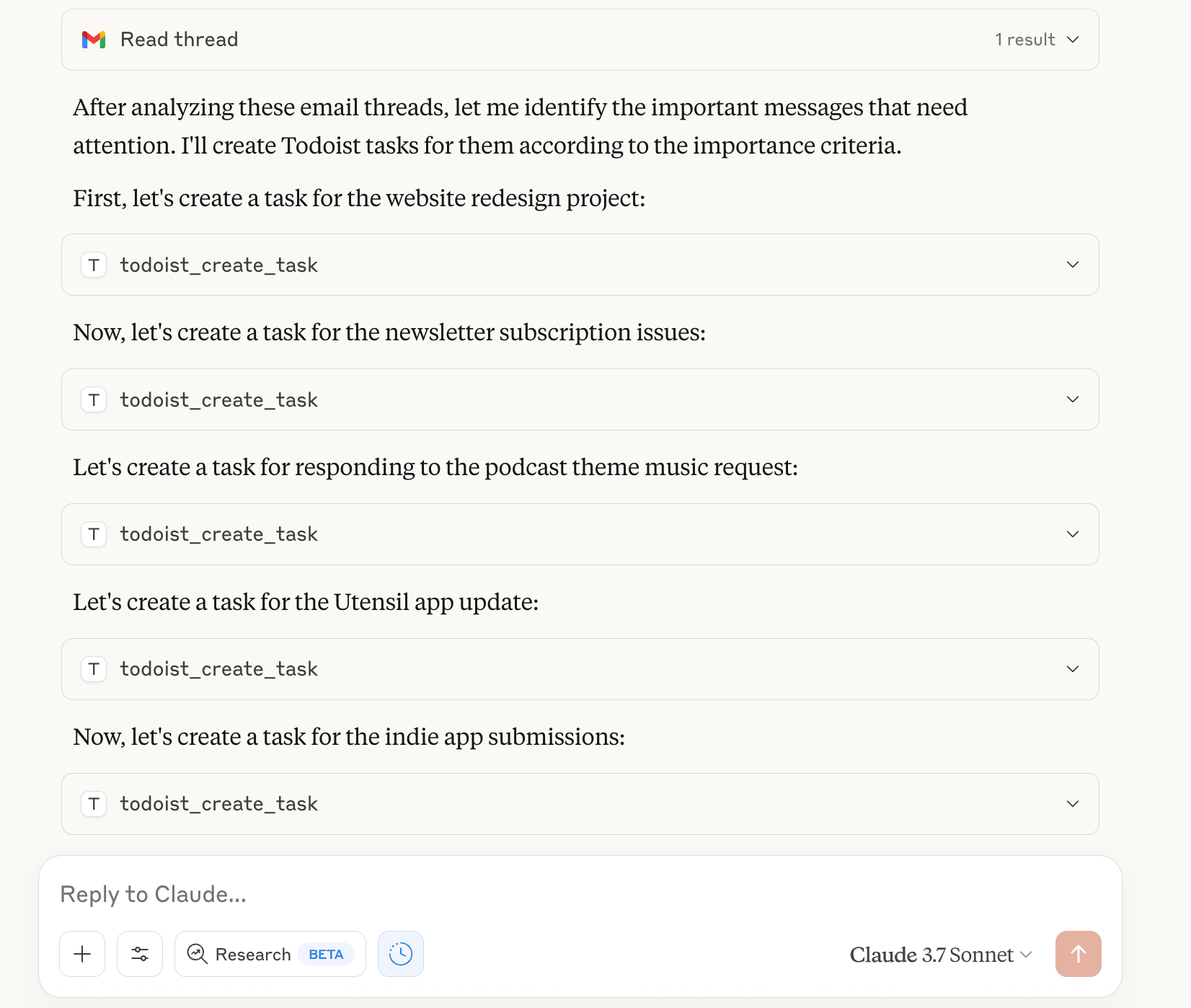

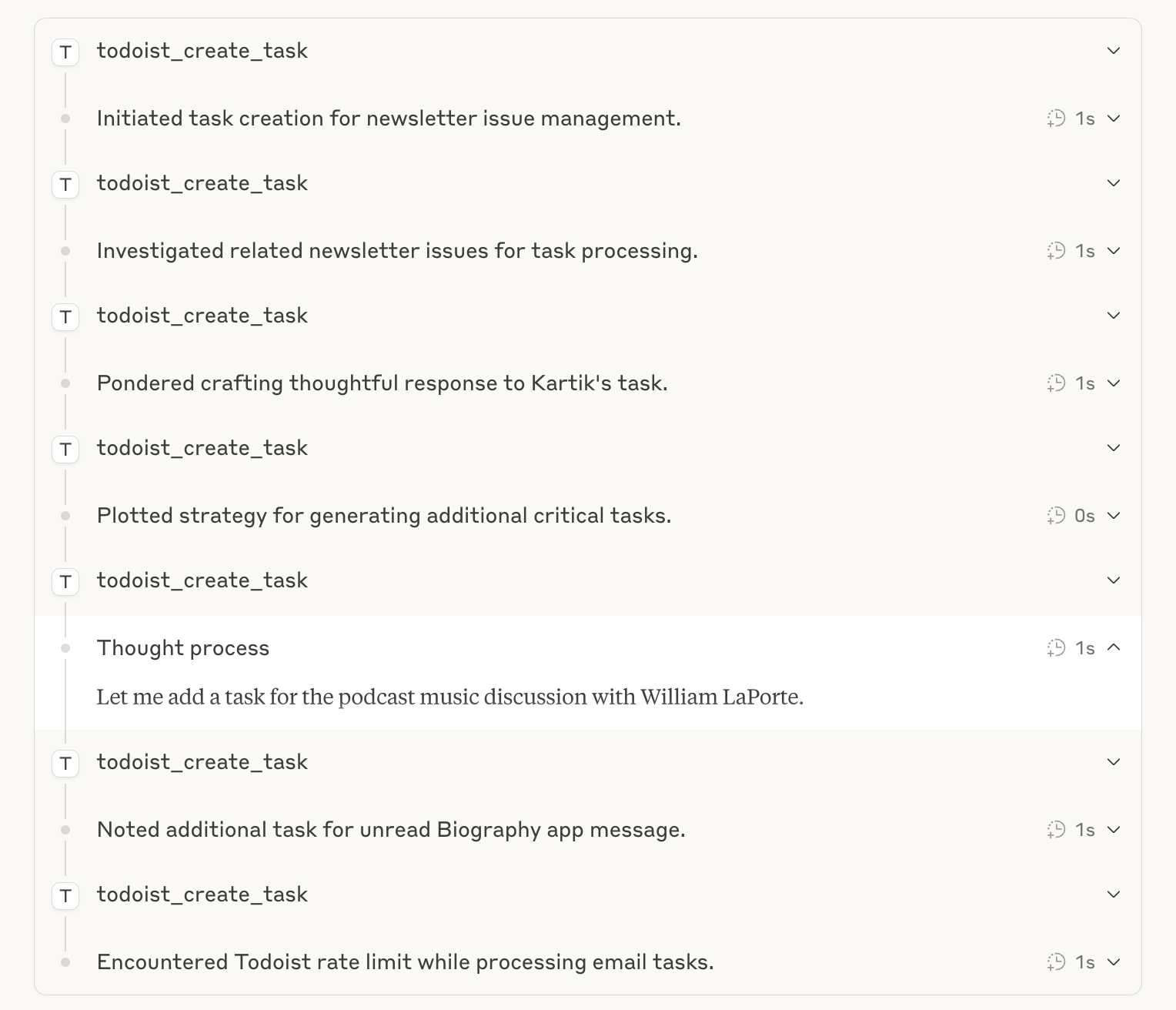

After scanning my inbox, 3.7 Sonnet started calling the tools available through Zapier’s MCP server and created tasks in succession:

By contrast, Opus 4 applied reasoning in between every step of the MCP-based task creation chain:

Impressively, the new Opus 4 also recognized when it bumped into rate limits from Zapier and proactively offered to finish the task later:

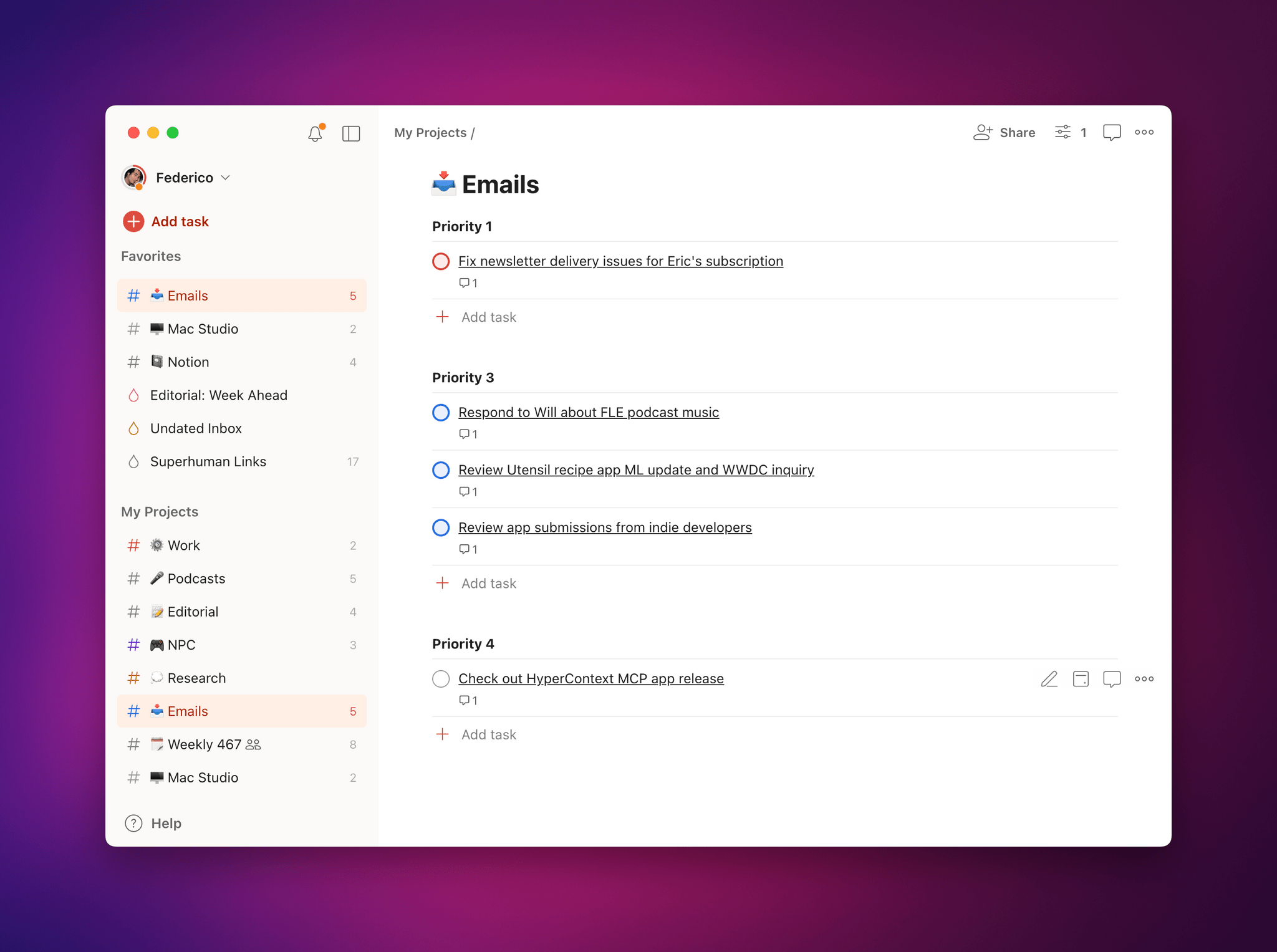

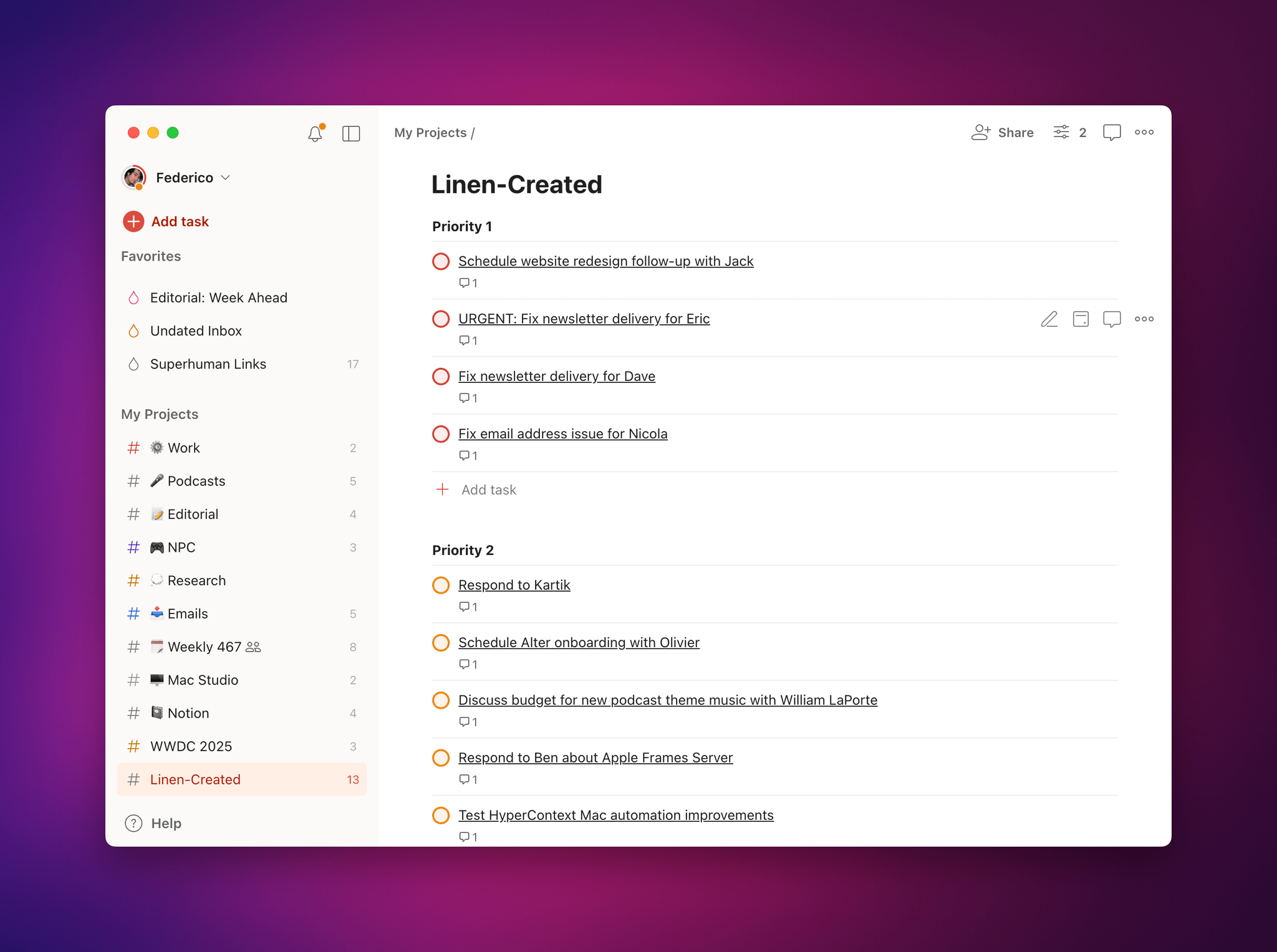
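The prompt's error-handling protocol ("After 2 failed attempts: Log the error and continue", "NEVER let one failed task stop the entire process") is essentially a bounded retry loop. Here's a minimal Python sketch of that rule, assuming a hypothetical `create` callable in place of the Zapier/Todoist tool. Opus 4's decision to defer the remaining tasks until the rate limit expired goes beyond what this sketch does.

```python
import time
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def create_all(
    items: Iterable[T],
    create: Callable[[T], None],        # hypothetical task-creation tool call
    max_attempts: int = 2,
    backoff_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> list[tuple[T, str]]:
    """Try each item up to max_attempts times; record failures and keep going."""
    failures: list[tuple[T, str]] = []
    for item in items:
        for attempt in range(1, max_attempts + 1):
            try:
                create(item)
                break  # success: move on to the next item
            except Exception as exc:  # e.g. a rate-limit error from the tool
                if attempt == max_attempts:
                    failures.append((item, str(exc)))  # log it, don't stop the run
                else:
                    sleep(backoff_seconds * attempt)  # wait a bit before retrying
    return failures
```

Injecting `sleep` keeps the sketch testable without real delays; the returned list maps onto the "Errors/Issues" section of the summary the prompt asks for.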

While the results of this prompt were similar in that both 3.7 and Opus 4 analyzed my inbox, extracted Gmail messages and their unique thread IDs, and created tasks deep-linked to Superhuman, the output of Opus 4 was vastly better thanks, I believe, to the additional reasoning steps applied along the way.

3.7 Sonnet analyzed 17 messages and created 6 tasks; the new Opus 4 scanned 40 messages and created 15 tasks (6 immediately and 9 after rate limits expired) – with superior understanding of different priorities based on the contents of each message.
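The deep-linking step both models performed follows the prompt's `<link_conversion>` and `<markdown_link_format>` rules. Here's a rough Python sketch of those rules. `user@example.com` is a placeholder because the actual account address isn't shown here, and matching thread IDs as lowercase hex is an assumption based on the prompt's sample IDs.

```python
import re

# Placeholder account: the real address used in the prompt isn't shown here.
ACCOUNT = "user@example.com"

# [text](superhuman://<account>/thread/<hex thread id>), with no space between ] and (.
_TASK_LINK = re.compile(r"\[[^\]]+\]\(superhuman://[^)\s]+/thread/[0-9a-f]+\)")


def superhuman_link(thread_id: str, account: str = ACCOUNT) -> str:
    """Build a Superhuman deep link from a Gmail threadId (not a message ID)."""
    return f"superhuman://{account}/thread/{thread_id}"


def task_content(action: str, thread_id: str, max_len: int = 60) -> str:
    """Format a Todoist task title as a Markdown link, keeping link text <= max_len."""
    if len(action) > max_len:
        action = action[: max_len - 1].rstrip() + "…"
    return f"[{action}]({superhuman_link(thread_id)})"


def is_valid_content(content: str) -> bool:
    """Apply the prompt's validation checklist to the whole task title."""
    return _TASK_LINK.fullmatch(content) is not None
```

All three "INCORRECT EXAMPLES" from the prompt fail `is_valid_content`, while titles produced by `task_content` pass it.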

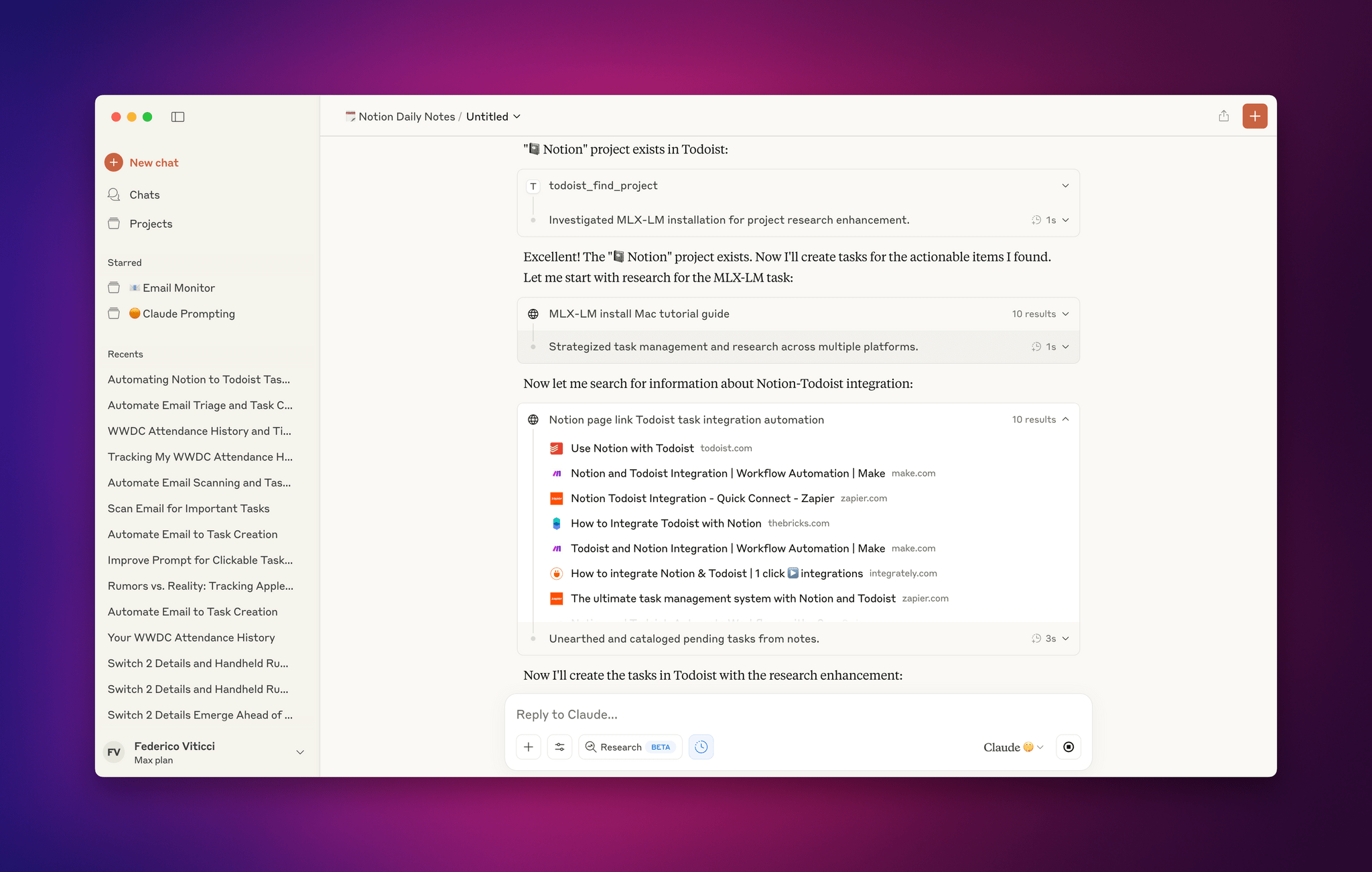

I was impressed with the performance of extended thinking applied to tool call results, so I decided to take the experiment one step further: what if I rolled two MCP integrations plus native web search into the same long-running task? Anthropic claims that Opus 4 can work independently for longer periods of time on agentic tasks, so this seemed like a good way to put it through its paces.

I’ve been playing around with the upgraded Notion AI, and I’ve been considering moving my “perfect memory” project in Obsidian (based on RAG with Obsidian Copilot and GPT-4.1 over the API) to Notion. To test this, I started writing my daily notes in Notion rather than Obsidian (which is still my text editor for writing articles like this one) and created a prompt that asks Claude to:

- Analyze my daily notes database in Notion,

- Find notes that seem actionable,

- Turn them into tasks in Todoist, and

- Attach some additional research based on web search to tasks that need it.
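The prompt below spells out what “actionable” means (action verbs, open questions, ideas) and how to rank each item. Claude applies those rules with judgment rather than keyword matching, but a crude Python approximation helps make them concrete. The verb list comes from the prompt; the urgency keywords, the “block” substring check, and defaulting plain action items to priority 3 are assumptions made for this sketch.

```python
import re
from typing import Optional

# Verbs listed in the prompt's extraction criteria.
ACTION_VERBS = {"create", "finish", "update", "test", "review", "send"}
# Assumed urgency markers; the prompt only says "deadlines or urgent language".
URGENT = re.compile(r"\b(today|tomorrow|asap|urgent|deadline|due)\b", re.IGNORECASE)


def classify(line: str) -> Optional[int]:
    """Return a priority (1 = highest) for an actionable note line, or None."""
    text = line.strip().lstrip("-*• ").strip()  # drop bullet markers
    if not text:
        return None
    lowered = text.lower()
    first_word = lowered.split()[0].rstrip(":,")
    is_question = text.endswith("?")
    is_idea = first_word == "idea"
    if not (first_word in ACTION_VERBS or is_question or is_idea):
        return None  # not actionable under these keyword heuristics
    if URGENT.search(text):
        return 1  # deadlines or urgent language
    if "block" in lowered:
        return 2  # items blocking other work
    if is_idea:
        return 4  # ideas and future considerations
    return 3  # research/planning, and (by assumption) any other action item
```

For example, “- Send invoice by tomorrow” ranks as priority 1, “Which NAS should I buy?” as 3, and “Had lunch with John” isn’t treated as a task at all.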

Here’s the prompt:

<task>

Create Todoist tasks from undone items in my Notion daily notes, with research enhancement and intelligent subtask creation.

</task>

<constraints>

- ALL tasks MUST be created in the "???? Notion" project (project_id: 2353999254)

- NEVER create tasks in any other project

- Each task MUST include a link back to its source Notion daily note

</constraints>

<instructions>

1. **Find Undone Daily Notes:**

<notion_search>

- Locate my "Daily Notes Tracker" database in Notion

- Use the Notion API to find undone entries:

```
POST https://api.notion.com/v1/databases/{database_id}/query
{
  "filter": {
    "property": "Done",
    "checkbox": {
      "equals": false
    }
  }
}
```

- Daily notes follow format: YYYY-MM-DD-DayName (e.g., "2025-05-20-Tuesday")

</notion_search>

2. **Extract Actionable Items:**

<extraction_criteria>

- Tasks with action verbs ("Create", "Finish", "Update", "Test", "Review", "Send")

- Questions requiring research or answers

- Ideas marked for implementation

- Follow-up items mentioned in meetings or conversations

- Anything with deadlines or time-sensitive markers

</extraction_criteria>

3. **Create Todoist Tasks:**

<task_creation>

For each actionable item:

a. **Project**: ALWAYS use project_id: 2353999254 (???? Notion)

b. **Title**: Concise action item as task title

c. **Description**:

```
From: [YYYY-MM-DD-DayName](https://www.notion.so/YYYY-MM-DD-DayName-{page_id}?pvs=4)
```

Replace with actual date, day name, and Notion page ID

d. **Note/Comment**: Include:

- Full context from the daily note

- Any mentioned URLs, references, or related items

- Related bullet points or sub-items from the note

e. **Research Enhancement** (when applicable):

If the task involves research, information gathering, or requires external data:

- Use web_search to find 2-3 current, relevant sources

- Summarize key findings in the task comment

- Include helpful links with brief descriptions

- Add actionable insights (e.g., "Best option appears to be X because...")

</task_creation>

4. **Intelligent Subtask Creation:**

<subtask_logic>

Analyze each task and create subtasks if it naturally breaks down into steps:

- Research tasks → subtasks for different aspects to investigate

- Implementation tasks → subtasks for planning, execution, testing

- Complex projects → subtasks for major milestones

- Multi-stakeholder tasks → subtasks for each person to contact

Only create subtasks when they add clarity and actionability.

</subtask_logic>

5. **Priority Assignment:**

<priority_rules>

- Items with deadlines or urgent language → Priority 1

- Items blocking other work → Priority 2

- Research and planning items → Priority 3

- Ideas and future considerations → Priority 4

</priority_rules>

</instructions>

<output_format>

## Created Tasks from Daily Notes:

**From [Date]:**

1. ✅ [Task Title] - Created in ???? Notion

- Context: [Brief context from daily note]

- Research added: [If applicable]

- Subtasks created: [Number] subtasks [list if created]

[Continue for all tasks...]

**Summary:**

- Total tasks created: X

- Tasks with research enhancement: Y

- Tasks with subtasks: Z

- All tasks created in project: ???? Notion

</output_format>

<error_handling>

- If project 2353999254 is not accessible, STOP and report the error

- If rate limited, wait and retry

- Report any tasks that couldn't be created with specific reasons

- Never fallback to creating tasks in other projects

</error_handling>

<important_reminders>

- Every single task MUST be in project_id: 2353999254

- Every task description MUST include the Notion page link

- Do NOT modify any Notion pages

- Research enhancement should add genuine value, not filler

- Subtasks are optional - only create when they improve task clarity

</important_reminders>

This is a complex, agentic workflow that requires multiple tool calls (query a Notion database, find notes, extract text from notes, create tasks, search the web, update those tasks) and needs to run for a long period of time. It’s the kind of workflow that – just like the email one above – I usually kick off in the morning and leave running while I’m getting ready for the day.
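The first of those tool calls is the Notion query embedded in the prompt. Outside of Zapier, a minimal Python sketch of the same request might look like this, using only the standard library. The `Notion-Version` value, the integration token, and the dash-free page ID in the source link are assumptions for illustration; the functions only build the request and the link, and nothing is sent.

```python
import json
import urllib.request
from datetime import date
from typing import Optional

NOTION_VERSION = "2022-06-28"  # assumed API version header


def build_undone_query(database_id: str, token: str,
                       start_cursor: Optional[str] = None) -> urllib.request.Request:
    """Build (but don't send) the POST that finds daily notes with Done unchecked."""
    body: dict = {"filter": {"property": "Done", "checkbox": {"equals": False}}}
    if start_cursor:
        body["start_cursor"] = start_cursor  # continue from a previous page
    return urllib.request.Request(
        f"https://api.notion.com/v1/databases/{database_id}/query",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Notion-Version": NOTION_VERSION,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def daily_note_title(day: date) -> str:
    """Daily notes follow the YYYY-MM-DD-DayName convention from the prompt."""
    return f"{day.isoformat()}-{day.strftime('%A')}"


def notion_source_link(day: date, page_id: str) -> str:
    """Task description linking back to the note (assumes dash-free IDs in URLs)."""
    title = daily_note_title(day)
    return f"From: [{title}](https://www.notion.so/{title}-{page_id.replace('-', '')}?pvs=4)"
```

Passing the request to `urllib.request.urlopen()` would return JSON with `results`, `has_more`, and `next_cursor`; feeding `next_cursor` back in as `start_cursor` fetches the next page.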

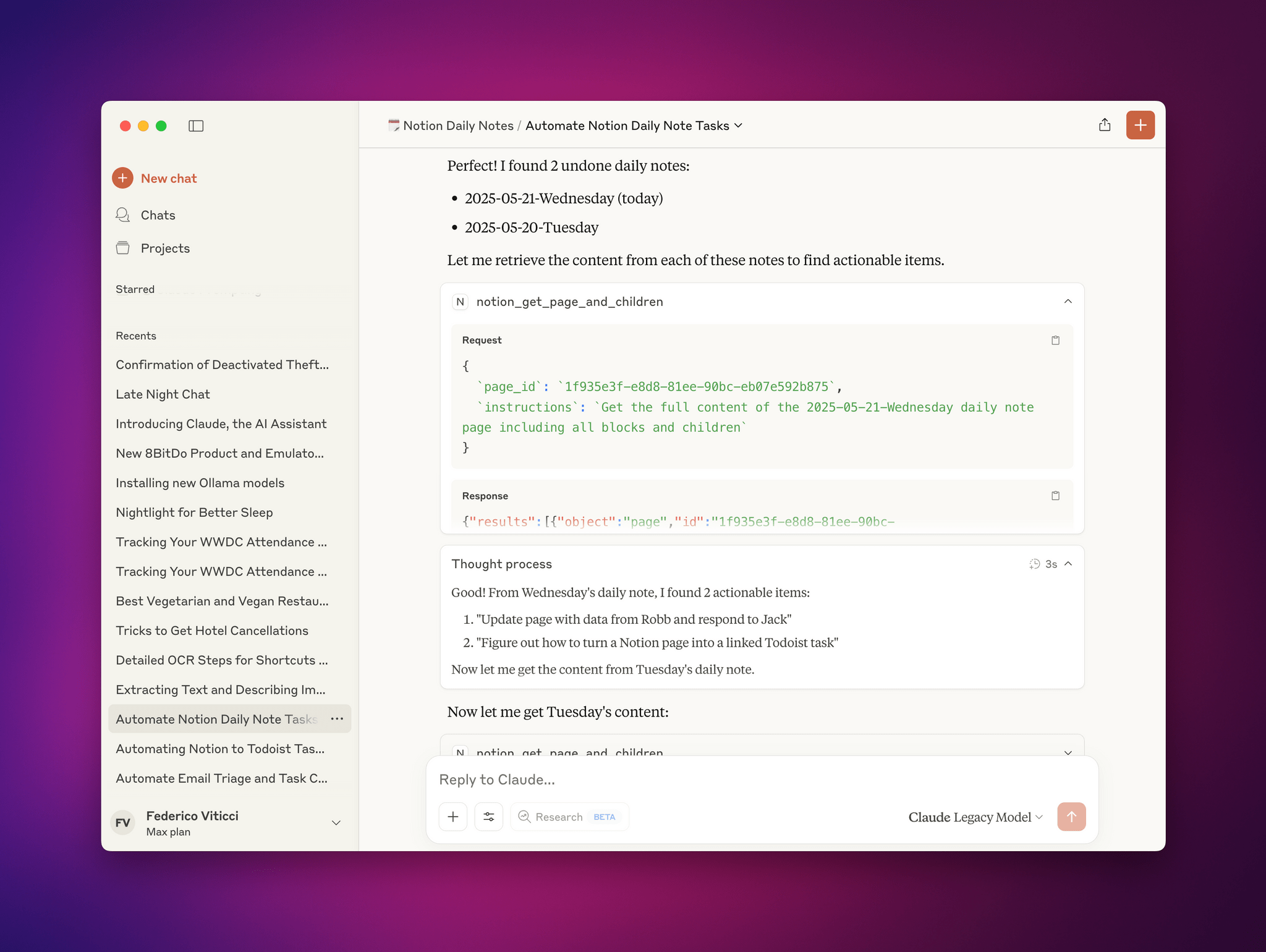

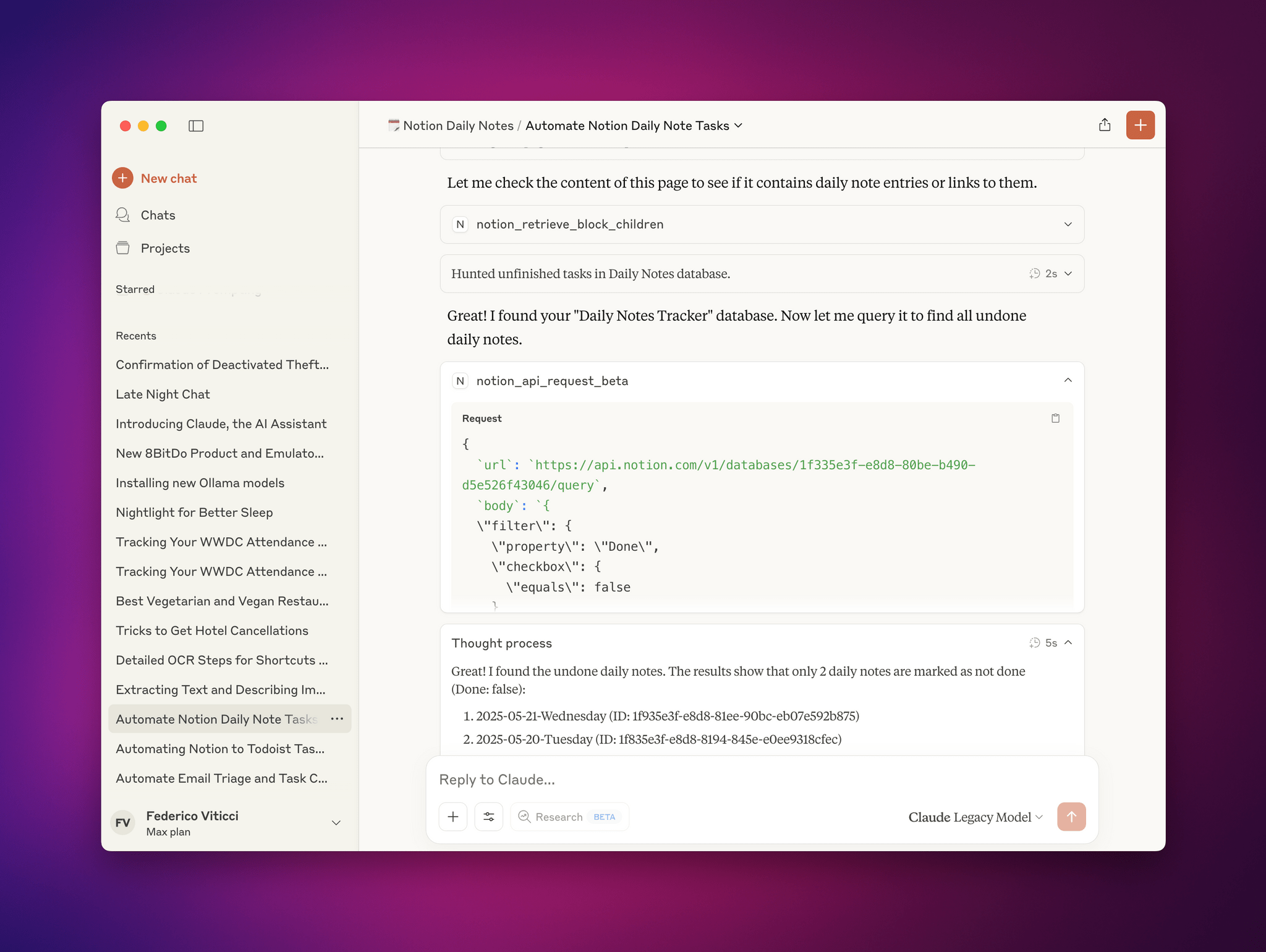

Opus 4 performed admirably in this test. For several minutes, the model called the Zapier MCP integration for different Notion API endpoints, analyzed my daily notes to find lines of text that seemed actionable, turned them into tasks, and intermixed every step with reasoning and web searches to see what additional research it could attach to the task in Todoist:

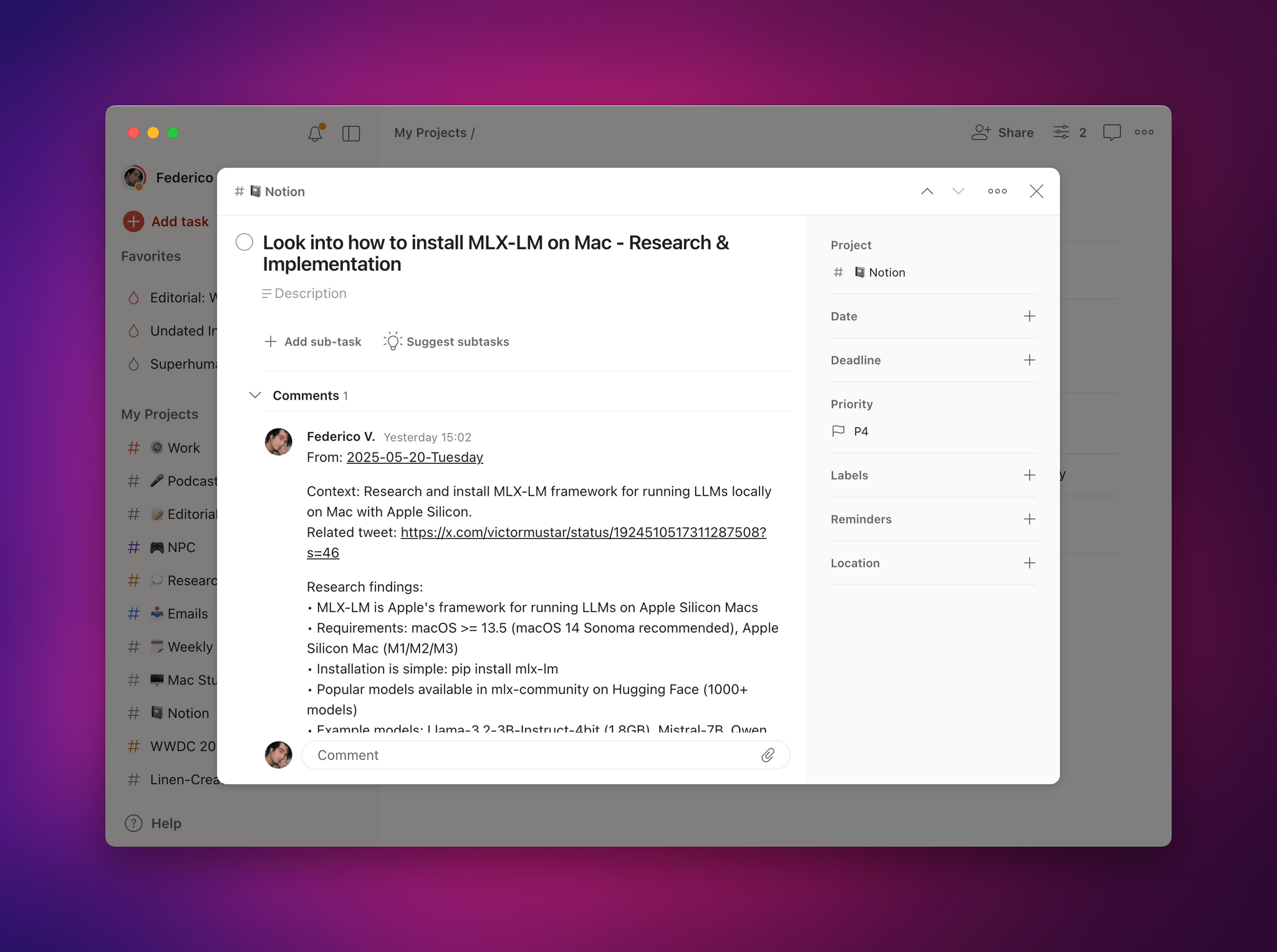

The result is very good! The tasks created in Todoist are deep-linked to the Notion page, and the additional research provided by Claude (as a comment to the task) includes some helpful tips and links to get started on the task more quickly:

At this point in the LLM race, it’s not a huge surprise that more compute with reasoning and longer execution times equals better results (literally everyone is doing this), but in Anthropic’s case, reasoning steps applied to individual MCP/Google tool calls seem to yield drastically better results compared to the company’s previous reasoning model, which did not reason over tool calls. This has been especially noticeable when troubleshooting results from tools, such as when Opus 4 realized it couldn’t find my Notion daily notes and, unprompted, performed a direct API call using Zapier (to the correct endpoint, no less), then kept going:

Now, however, this sort of long-running, multi-tool agentic behavior calls for the ability to run these complex prompts as scheduled tasks. As I mentioned above, these are multi-tool, agentic tasks that are best left to run in the background with extended thinking and no further user input. The problem is that, right now, Anthropic doesn’t offer a scheduled tasks feature similar to the one in ChatGPT.2 I hope to see changes on this front in the near future.

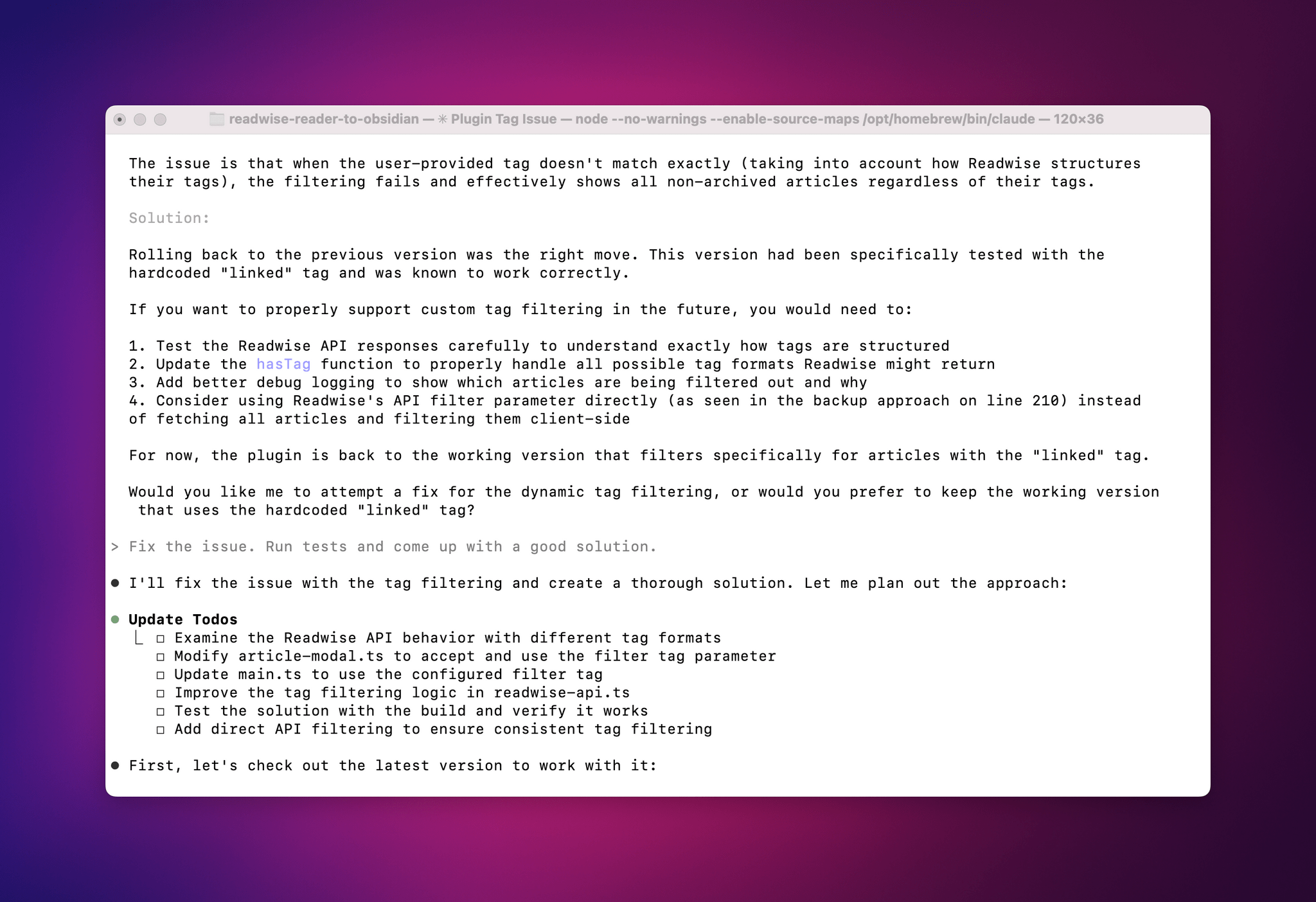

I’m not a developer, and while I have been playing around with “vibe coding” my own Obsidian plugins, I don’t think it’d be fair for me to offer any in-depth assessment of the upgraded Claude Code powered by Opus 4. The only thing I will say is that, after loading the repo for one of my Obsidian plugins, Opus 4 identified a bug left by OpenAI’s Codex agent and fixed it on its first try. But I’ll leave it to other folks to test Opus 4 and see whether or not it improves upon 3.7 Sonnet or even the beloved 3.5 Sonnet for extended coding sessions.

As part of my tests with Opus 4 over the last two days, I also tried my usual OCR and long-context tasks. I wasn’t told the size of Opus 4’s context window while I was testing it, but I can tell you that when I tried loading my archive of iOS reviews (~980,000 tokens in a single text file) as the input for Opus 4, I got an error. Unless the model released today is different from what I tested, this suggests that GPT-4.1 and Gemini 2.5 will continue to be the only cloud-based models to support a 1M-token context window. Context window limits have been one of Claude’s key limitations for a while now, and I ran into them even when I was not stress-testing the model with my iOS review archive. For instance, this prompt that looks at my travel history in California…

Can you search across my entire Gmail history, understand how many times I have gone to WWDC in California over the years, and create a timeline for each trip regarding Apple briefings, Apple events, and in-person events (like live shows) for each WWDC year I was there? I’m trying to understand what, historically speaking, my WWDC week looks like.

…consistently ran out of tokens. By contrast, Gemini 2.5 Pro ran it from start to finish without issues.

Advanced OCR continues to be an issue as well. I’ve shared my thoughts on this before and recently wrote about how Qwen2.5-VL-72B running on my M3 Ultra Mac Studio is, alongside o4-mini-high, the only model that has been able to OCR a long screenshot or PDF with coherent output in less than seven minutes in my tests. The new Opus 4, unfortunately, hallucinates OCR’d text in heavy PDFs just as much as 3.7 Sonnet did. At this point, I have to say I’m surprised that Anthropic isn’t letting Claude write its own Python code in a sandboxed environment to tackle heavy-duty tasks like this, as OpenAI did with o3 and o4-mini-high.

So that’s been my experience with the new Opus 4 in 48 hours. Funnily enough, just yesterday, OpenAI announced major improvements to its new Responses API, including tools and MCP support for the o-series reasoning models.3 This means that, technically speaking, you can now use o3 or o4-mini with reasoning applied to every step of a tool-calling chain and theoretically replicate the workflows I described above…but only in the API for now. If MCP support in the Responses API was “coming soon” and is now available, how long will it be until ChatGPT on desktop gets a visual interface for integrating with MCP tools and using them with reasoning? How long until someone figures out how to integrate tools natively on mobile devices?

Regardless of what happens next, I’ve had a good experience with extended thinking and tools in the new Claude Opus 4. I’m going to keep using it for my Google- and Zapier-based workflows…until the next big thing comes along, of course.

You can read more about the new models released by Anthropic today here.

- In my experience, the Claude family of models continues to be the best at following instructions (particularly in XML format), followed by GPT-4.1…which also adopted XML-style prompting. ↩

- Although I should say that I really haven’t found success with scheduled tasks in ChatGPT yet. Even after scheduled tasks were upgraded to use o3 and o4-mini, a recurring one I have set to fetch the title of a webpage every day fails to run for no apparent reason. Still, the point remains: ChatGPT lets you schedule execution of long-running background tasks, and Claude does not. ↩

- Anthropic also announced similar API capabilities earlier today. ↩