One of the big questions heading into today’s WWDC keynote was how Apple would address its AI efforts. After a splashy introduction last year followed by a staggered rollout and the eventual delay of the more personalized Siri, it was unclear how much focus the company would put on Apple Intelligence during its big announcement video.

Surprisingly, Apple came right out of the gate with a segment on Apple Intelligence, even acknowledging that the more personalized Siri needed more time; it’s now slated for release “in the coming year.” Craig Federighi, Apple’s SVP of Software Engineering, also said that Apple Intelligence has progressed with more capable and efficient models, and he teased that more Apple Intelligence features would be revealed throughout the presentation. Rather than dedicating a large block of the keynote to AI features, the company returned to a platform-centered structure for the rest of the video and covered Apple Intelligence as it related to each OS.

In its second year, Apple Intelligence is set to expand in more ways than one. Perhaps most excitingly, third-party developers will soon have access to Apple Intelligence’s on-device foundation model, letting them build AI features into their apps that work offline and respect user privacy. And because the model runs locally, developers can use it free of charge, with no API fees.

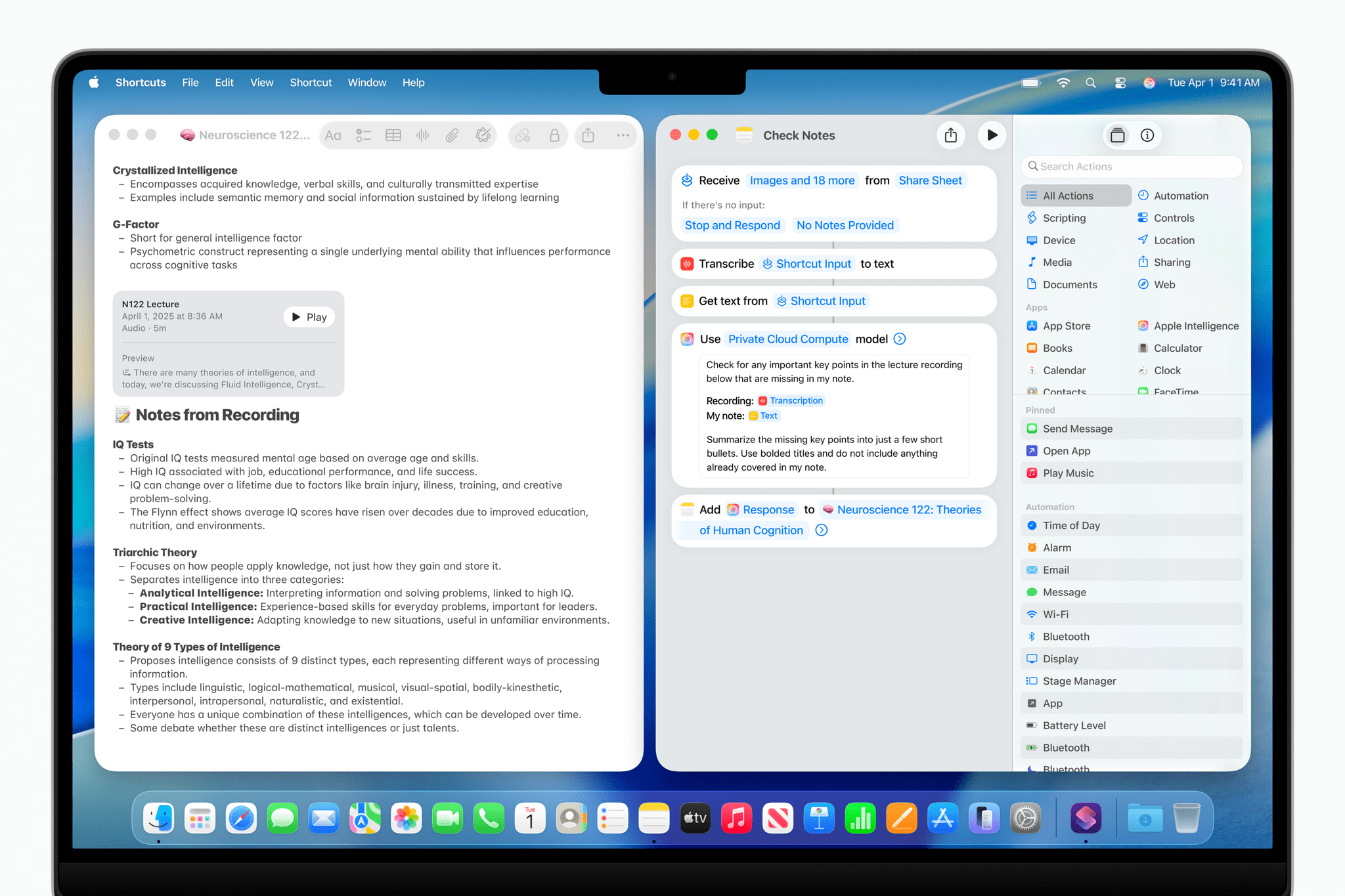

Users will have deeper access to Apple Intelligence, too, in the form of new Shortcuts actions. In addition to AI-powered actions like summarization and image generation, Shortcuts will offer direct access to Apple Intelligence models (both on-device and via Private Cloud Compute) through an action that lets you freely prompt the model and receive a response. This makes it possible to place Apple Intelligence at the center of any automated workflow. The action will also offer the option to use ChatGPT instead.

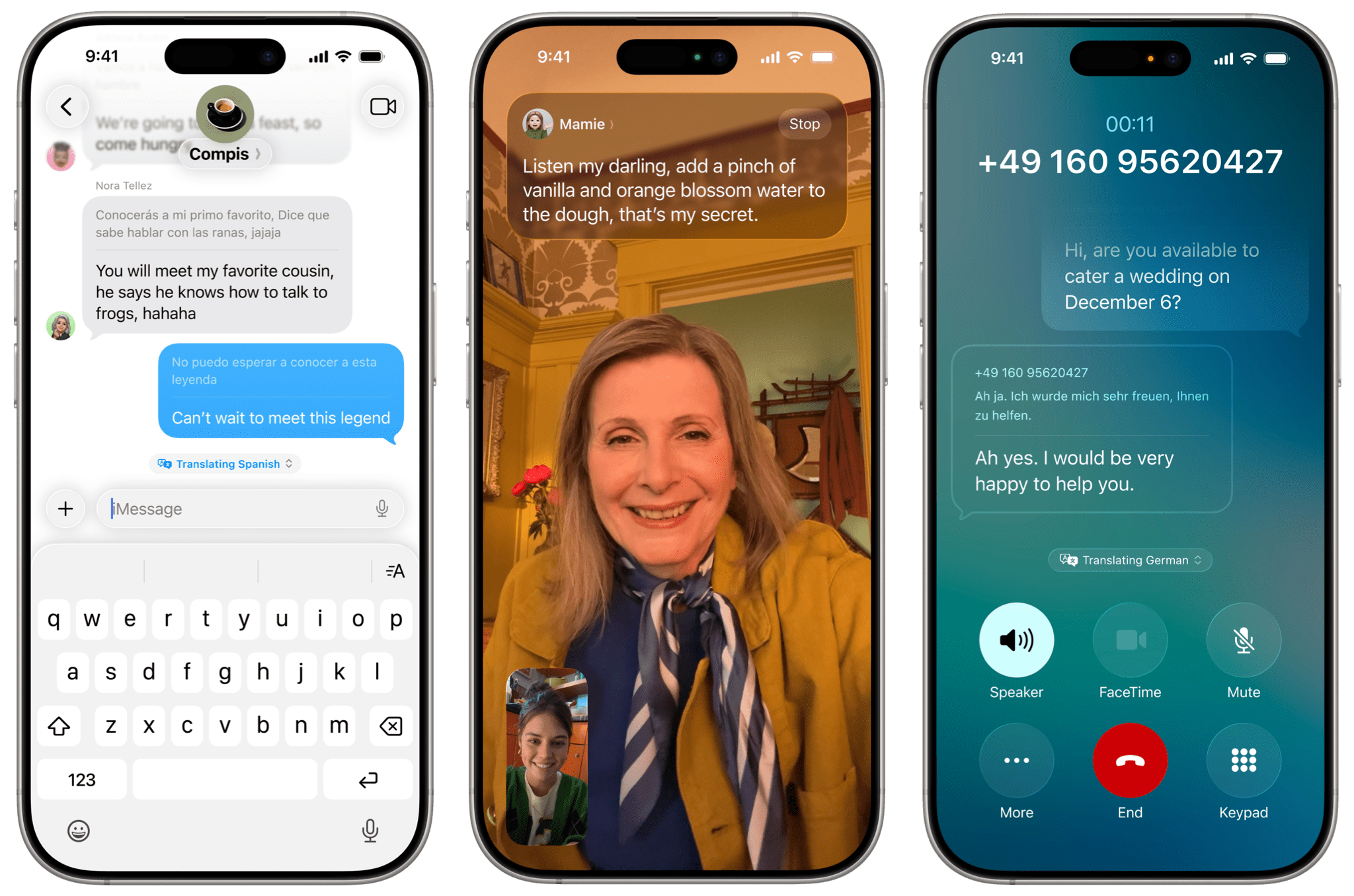

Live Translation is a new Apple Intelligence feature built into Messages, FaceTime, and Phone to facilitate conversations across language barriers. Messages will translate texts into the user’s preferred language and send responses in the language spoken by the recipient. FaceTime will display live translated captions as the other person is talking, and the Phone app will both display captions and speak translations aloud.

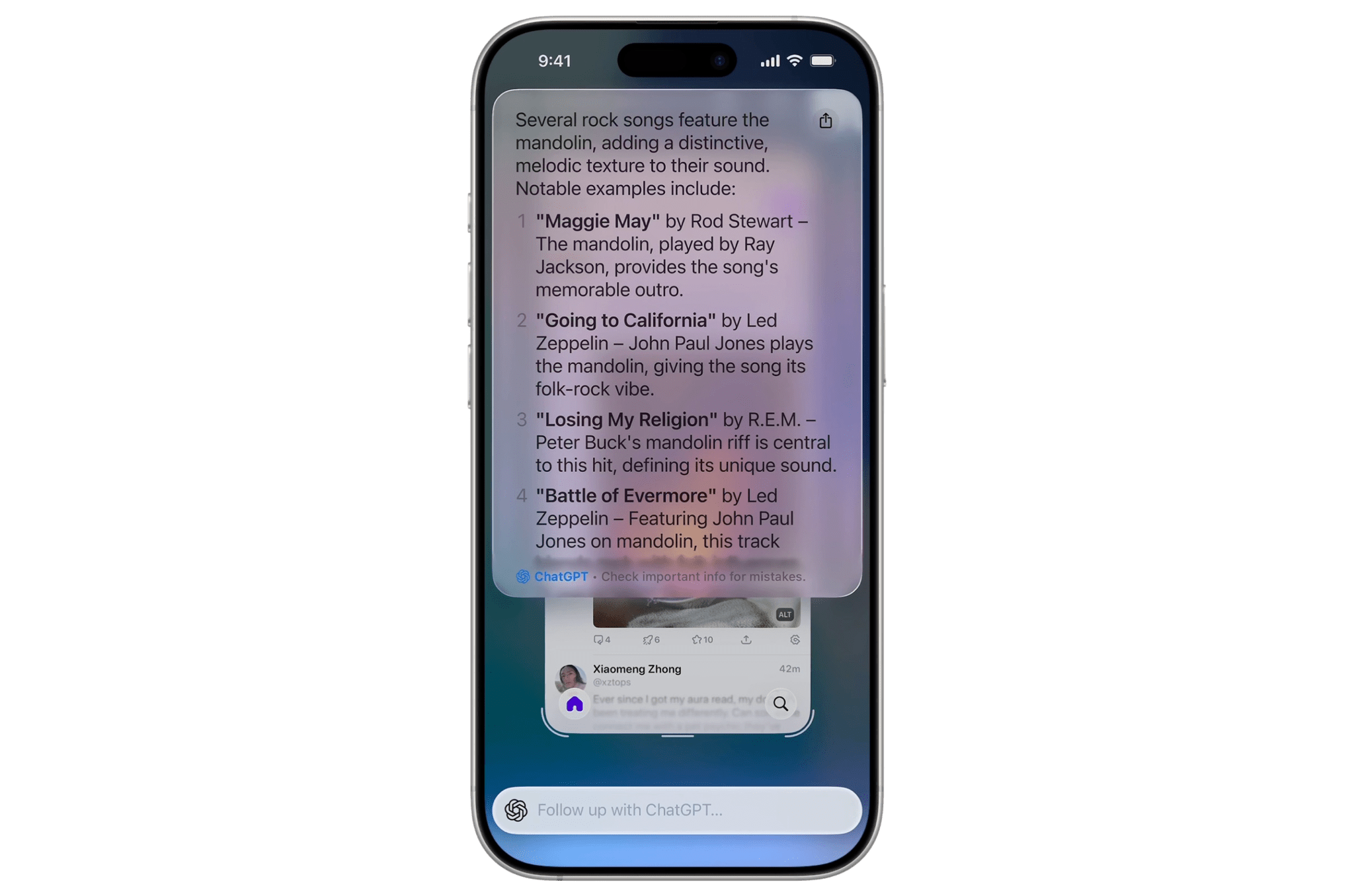

Visual intelligence, a feature announced alongside the iPhone 16 and 16 Pro last year, is moving beyond the camera to help users better understand what’s on their screens. It’s built into the new screenshot UI and lets you ask ChatGPT questions about what you see onscreen or search Google, Pinterest, Etsy, and other apps (integrated using app intents) for similar items. The feature can also detect event information onscreen and add events to your calendar accordingly.

While the Apple Watch doesn’t yet run Apple Intelligence natively, it continues to benefit from its connection to the iPhone in the form of a new Apple Intelligence feature called Workout Buddy. Built using data from Apple Fitness+ trainers, Workout Buddy uses a text-to-speech model to encourage users during workouts, drawing on information from the current session as well as past workouts to generate motivating messages. The feature requires your Apple Watch to stay connected to your iPhone during the workout and will launch in English only, across a subset of workout types: Outdoor and Indoor Run, Outdoor and Indoor Walk, Outdoor Cycle, HIIT, and Functional and Traditional Strength Training.

Apple Intelligence’s image generation tools are receiving some upgrades as well. Genmoji will allow users to combine multiple emoji into one and mix existing emoji with descriptions to make new ones. Image Playground is integrating with ChatGPT to offer new styles like oil painting and vector art, as well as an ‘Any Style’ option where you can describe the look you’re going for. In both Genmoji and Image Playground, you’ll be able to make fine tweaks to illustrations based on people, including adjusting hairstyle and expression.

Finally, Apple Intelligence is expanding into other system apps in smaller ways. It will suggest creating polls in group chats when appropriate, surface order tracking information in the Wallet app, and help categorize tasks in Reminders.

It’s encouraging to see Apple Intelligence continue to grow despite the company’s setbacks with Siri. Developer access and the new Shortcuts actions are especially interesting because they put the power of AI into new hands. We’ll soon see what developers and users build with them.
