Writing on his personal blog, Brendon Bigley explains why the Delta emulator launching on the App Store is a big deal for retrogaming fans who also love native iOS apps:

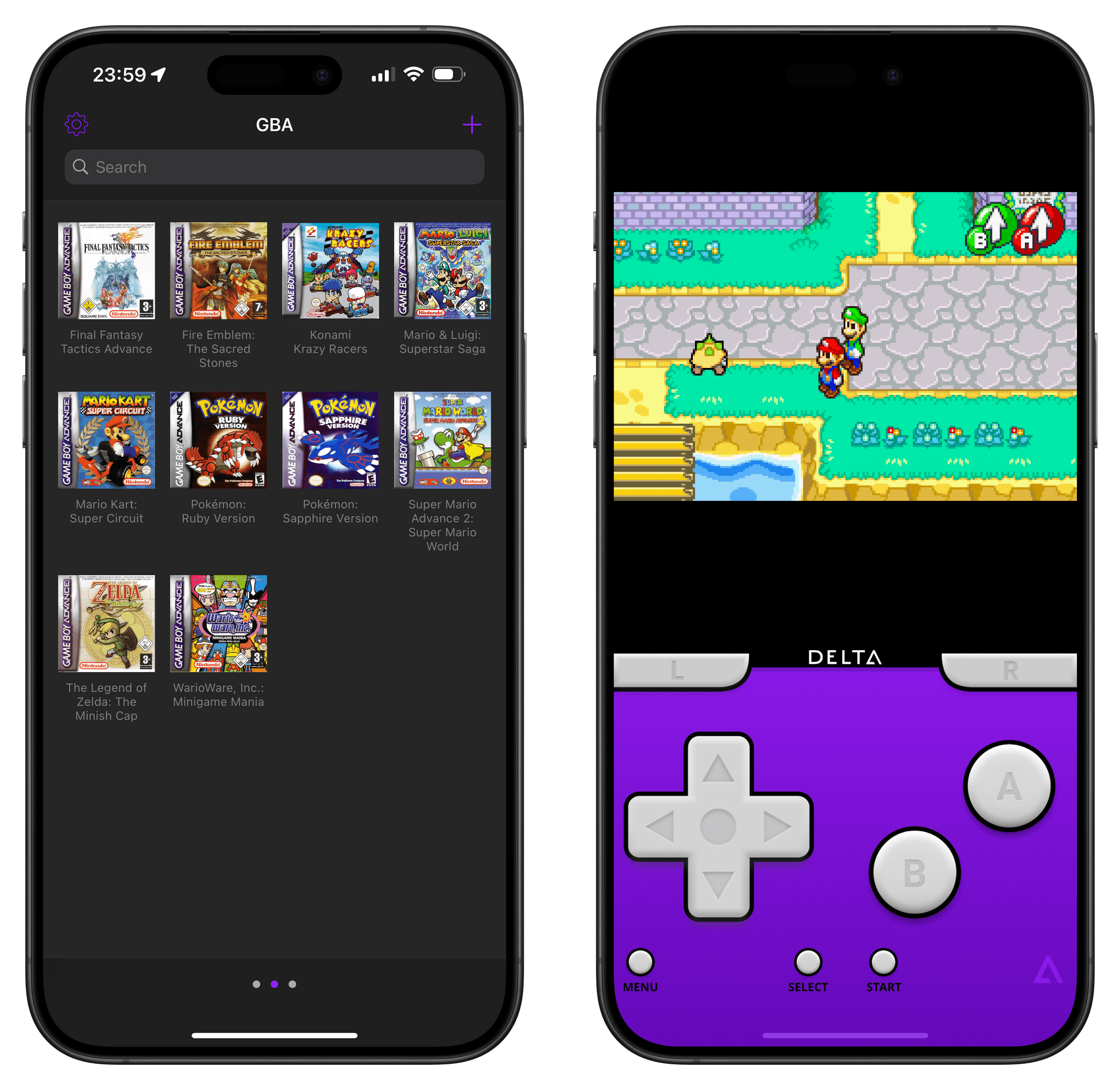

> AltStore for me (and many) was just a way to get access to Delta, which is the best emulator on iOS by a pretty shocking margin. While there are admittedly more feature-rich apps like RetroArch out there, no other app feels made for iPhone in the way Delta does. With a slick iOS-friendly user interface, custom themes and designs to reskin your experience, and the ability to grab game files from iCloud, Delta always represented what a talented app developer could do if the App Store was even slightly more open. It’s in this possibility space that I’ll likely never switch to Android again.

I posted this on Threads and Mastodon, but I was able to start playing old save files from my own copies of Final Fantasy Tactics Advance and Pokémon Ruby in 30 seconds thanks to Delta.

Years ago, one of my (many) lockdown projects involved ripping my own Game Boy Advance game collection using a GB Operator. I did that and saved all my games and associated save files in iCloud Drive, thinking they’d be useful someday. Today, because Delta is a great iOS citizen that integrates with the Files picker, I just had to select multiple .gba files in iCloud Drive to add them to Delta. Then, since Delta also supports context menus, I long-pressed each game to import my own save files, previously ripped by the GB Operator. And that’s how I can now continue playing games from 20 years ago…on my iPhone. And those are just two aspects of the all-encompassing Delta experience, which includes Dropbox sync, controller support, haptic feedback, and lots more.

Brendon also wraps up the story with a question I’m pondering myself:

> How does Nintendo react to the news that despite their desire to fight game preservation at all costs, people are nonetheless still enjoying the very games that built their business in the first place?

I’ll never get over the fact that Nintendo turned the glorious Virtual Console into a subscription service that is randomly updated and not available on mobile devices at all. I’m curious to see if Nintendo will have any sort of response to emulators on iOS; personally, I know that Delta is going to be my new default for all Game Boy/DS emulation needs going forward.
