Yesterday was a big step forward for MacStories and the Club. If you haven’t read Federico’s story about what we’re doing and why, it’s worth checking out because it’s not just that the Club is now on macstories.net. We’re taking an entirely new approach to MacStories that, in time, will touch everything we do on...

How I Use Claude Code Running on My Mac mini From the iPhone and iPad

Given what we’ve discussed on AppStories lately, it shouldn’t come as a huge surprise that I’ve been using Claude Code on my Mac mini home server a lot. And if you know me, you shouldn’t be surprised by the fact that I’ve been looking for ways to access the desktop version of Claude Code from...

Welcome to the New, Unified MacStories and Club MacStories

Today, I’m pleased to announce something we’ve been working on for the past two years: MacStories and Club MacStories are now one website. If you’re a Club MacStories member, you no longer need to go to a separate website to read our exclusive columns and weekly newsletters: everything has been unified into the main MacStories.net website you know and love. The subscription plans are the same. We’ve imported 11 years of Club MacStories content into MacStories, with everything running on a new foundation powered by WordPress; going forward, all member content – including AppStories – will be published directly on MacStories.

To get started, simply log into your existing Club MacStories account on the new MacStories Plans page or by clicking the Account icon in the top toolbar. Members can still access a special homepage of Club-only content at macstories.net/club or club.macstories.net – whatever you prefer. A few things will be different as part of this transition, and some parts of the previous Club MacStories experience haven’t been migrated yet, which I will explain in this story.

The short version of this announcement is that this has been a massive undertaking for me, John, and our new developer Jack. We’ve been working on this project in secret for months, and our goal was always to ensure a smooth, relatively pain-free migration for our members and MacStories readers. Now more than ever, the Club MacStories membership program is a core component of the entire MacStories ecosystem of articles, exclusive perks, and podcasts; it’s only thanks to the Club that, in this day and age, MacStories can continue to thrive with its editorial independence, vibrant community of members, and focus on producing high-quality, well-researched content written and spoken by humans, not AI.

The longer version is that the last few years have been complicated. We faced some challenges along the way, made some wrong technical calls, and have been working to rectify them – with the ultimate goal of propelling MacStories into its third decade of existence on the Open Web. We’re turning MacStories – the website that millions of people visit every year – into a destination that (hopefully!) will put a stronger spotlight on all the things we do. But to get to this point, we had to break a few things, iterate slowly, start over, and refine until we were happy with the results.

If you’re a Club member: thank you, and we hope you’ll enjoy the more intuitive and integrated experience we’ve prepared. If you’re not, I hope you’ll consider checking out the (many) exclusive perks of a Club MacStories subscription.

And if you’re curious to learn more about what we’re launching today and how we got to this point…well, do I have a story for you.

Terminal Tips and Claude Code Workflows

This week, Federico and John share their workflows and tips on how they use Claude Code and Codex to build automations.

On AppStories+, John and Federico explore the Apple and Google Gemini deal and the end of Shortcuts as we know it.

We deliver AppStories+ to subscribers with bonus content, ad-free, and at a high bitrate early every week.

To learn more about an AppStories+ subscription, visit our Plans page, or read the AppStories+ FAQ.

AppStories Episode 469 - Terminal Tips and Claude Code Workflows

43:26

This episode is sponsored by:

- HTTPBot: A powerful API client and debugger for Apple platforms. Get a 7-day trial and 25% off your subscription.

An Actually Productive Experience with “Agentic” AI Browsers…?

As I shared on AppStories a few months ago, I’m very skeptical of this new wave of “agentic” AI browsers that promise to take interactions with webpages off users’ hands and into their LLMs. They’re slow and riddled with security risks for prompt injection and data exfiltration attacks, and – honestly – most of the...

OpenClaw (Formerly Clawdbot) Showed Me What the Future of Personal AI Assistants Looks Like

Update, January 30, 2026: The project has now been rebranded as OpenClaw (frankly, a much better name). The official website is openclaw.ai. Everything from this article still applies.

Update, January 27, 2026: Clawdbot has been renamed to Moltbot following a trademark-related request by Anthropic.

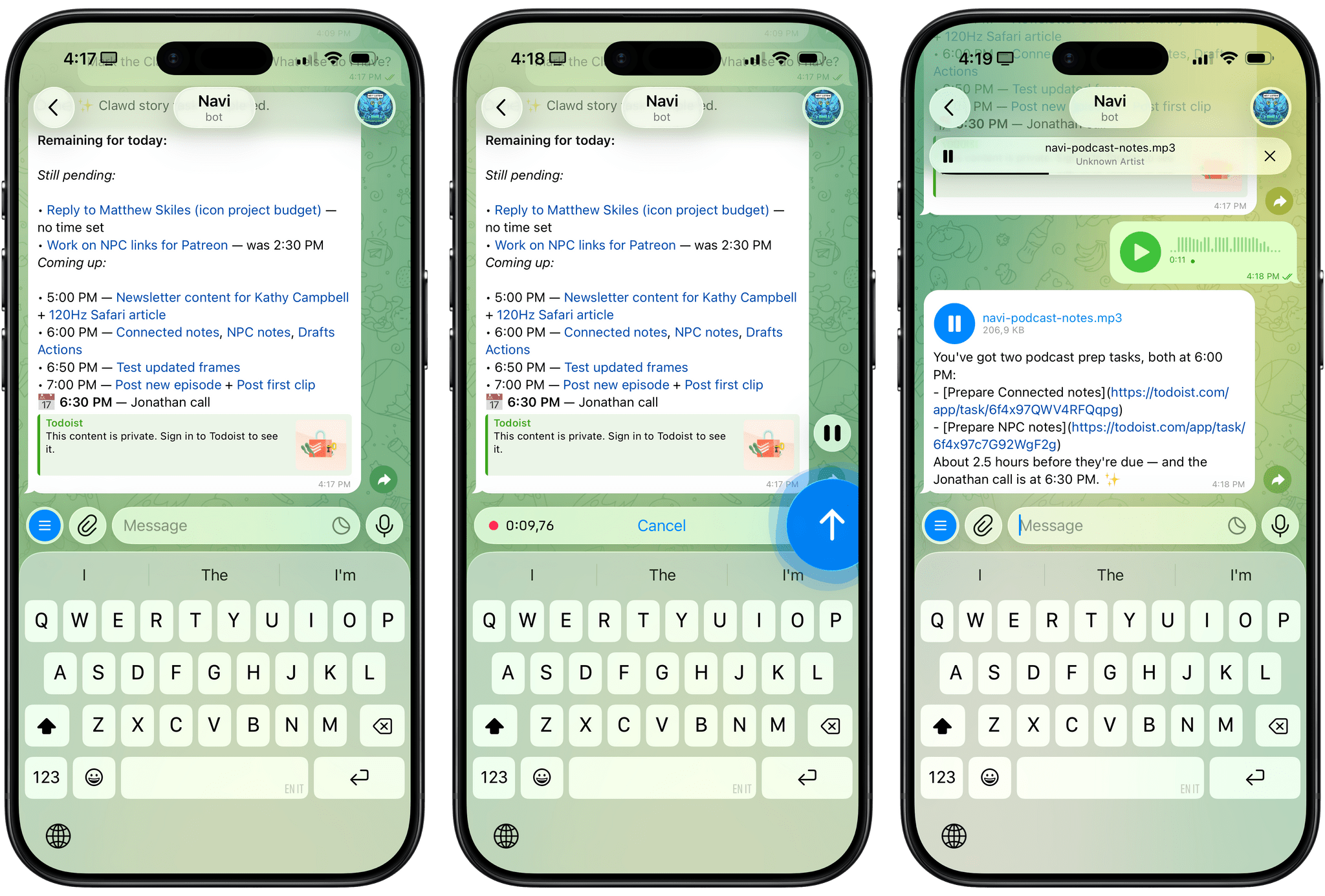

For the past week or so, I’ve been working with a digital assistant that knows my name, my preferences for my morning routine, how I like to use Notion and Todoist, but which also knows how to control Spotify and my Sonos speaker, my Philips Hue lights, as well as my Gmail. It runs on Anthropic’s Claude Opus 4.5 model, but I chat with it using Telegram. I called the assistant Navi (inspired by the fairy companion of Ocarina of Time, not the besieged alien race in James Cameron’s sci-fi film saga), and Navi can even receive audio messages from me and respond with other audio messages generated with the latest ElevenLabs text-to-speech model. Oh, and did I mention that Navi can improve itself with new features and that it’s running on my own M4 Mac mini server?

If this intro just gave you whiplash, imagine my reaction when I first started playing around with Clawdbot, the incredible open-source project by Peter Steinberger (a name that should be familiar to longtime MacStories readers) that’s become very popular in certain AI communities over the past few weeks. I kept seeing Clawdbot being mentioned by people I follow; eventually, I gave in to peer pressure, followed the instructions provided by the funny crustacean mascot on the app’s website, installed Clawdbot on my new M4 Mac mini (which is not my main production machine), and connected it to Telegram.

To say that Clawdbot has fundamentally altered my perspective of what it means to have an intelligent, personal AI assistant in 2026 would be an understatement. I've been playing around with Clawdbot so much that I've burned through 180 million tokens on the Anthropic API (yikes), and I've had fewer and fewer conversations with the "regular" Claude and ChatGPT apps in the process. Don't get me wrong: Clawdbot is a nerdy project, a tinkerer's laboratory that is not poised to overtake the popularity of consumer LLMs any time soon. Still, Clawdbot points at a fascinating future for digital assistants, and it's exactly the kind of bleeding-edge project that MacStories readers will appreciate.

How to Enable Smoother 120Hz Scrolling in Safari→

I came across this incredible tip by Matt Birchler a few weeks ago and forgot to link it on MacStories:

Today I learned something amazing: Safari supports higher than 60Hz refresh. It’s the only mainstream web browser that doesn’t, and I have never understood why, but apparently as of the end of 2025 in Safari version 26.3 (and maybe earlier) you can enable it. Here’s how to do it.

I won't paste the steps here, so you'll have to click through and visit Matt's website (I keep recommending his writing, and he's been doing some really interesting work with "micro apps" lately). I can't believe this feature is disabled by default on iOS and iPadOS; I turned it on several days ago, and it made browsing with Safari significantly nicer.

Also new to me: I discovered this outstandingly weird website that lets you test your browser’s refresh and frame rates. Just trust me and click through that as well – what a great way to show people who “don’t see” refresh rates what they actually feel like in practice.
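
As a rough illustration of how a refresh-rate test like that can work (an assumption on my part; I don't know how that particular site measures it), a page can record the timestamp passed to each `requestAnimationFrame` callback, which browsers generally invoke once per displayed frame, and turn the spacing between frames into a rate. The `estimateRefreshRateHz` helper below is hypothetical: it takes those timestamps in milliseconds and uses the median frame interval, so a few dropped frames don't skew the result.

```typescript
// Hypothetical helper: estimate a display's refresh rate (Hz) from a series of
// frame timestamps in milliseconds, e.g. values collected from
// requestAnimationFrame callbacks. Assumes roughly one timestamp per frame.
function estimateRefreshRateHz(timestamps: number[]): number {
  if (timestamps.length < 2) {
    throw new Error("Need at least two timestamps to measure an interval");
  }

  // Time elapsed between each pair of consecutive frames.
  const intervals: number[] = [];
  for (let i = 1; i < timestamps.length; i++) {
    intervals.push(timestamps[i] - timestamps[i - 1]);
  }

  // The median interval ignores occasional long (dropped) frames.
  intervals.sort((a, b) => a - b);
  const mid = Math.floor(intervals.length / 2);
  const median =
    intervals.length % 2 === 0
      ? (intervals[mid - 1] + intervals[mid]) / 2
      : intervals[mid];

  // Convert milliseconds per frame into frames per second.
  return 1000 / median;
}

// Synthetic timestamps spaced about 16.67 ms apart, as on a 60Hz display.
console.log(estimateRefreshRateHz([0, 16.67, 33.33, 50, 66.67]).toFixed(1));
```

In a browser, you would push each `t` from `requestAnimationFrame((t) => { ... })` into an array for a second or so, then pass that array to the helper; with Safari capped at 60Hz, the result should hover around 60, and after enabling the setting Matt describes, around 120 on a ProMotion display.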

A Very ‘Just Build It’ Holiday

This week, Federico and John complete their tour of holiday projects with a look at the tools both of them built with the help of Claude Code, Codex, and other tools.

On AppStories+, John pushes Claude Code by building a Safari web extension that integrates with Notion.

We deliver AppStories+ to subscribers with bonus content, ad-free, and at a high bitrate early every week.

To learn more about an AppStories+ subscription, visit our Plans page, or read the AppStories+ FAQ.

AppStories Episode 468 - A Very ‘Just Build It’ Holiday

42:35

Interesting Links

Spigen announced a new retro-inspired iPhone case called the Classic LS, featuring a boxy design reminiscent of the Macintosh 128K and Apple Lisa, complete with keyboard-style buttons and a beige color scheme. Guess who got one. (Link) Taphouse is a new native macOS client for Homebrew that brings a visual package management experience to...
