Today is Data Privacy Day, and to mark the occasion, Apple has published a case study titled ‘A Day in the Life of Your Data.’ In the accompanying press release, Craig Federighi, Apple’s senior vice president of Software Engineering, explains the company’s approach to privacy:

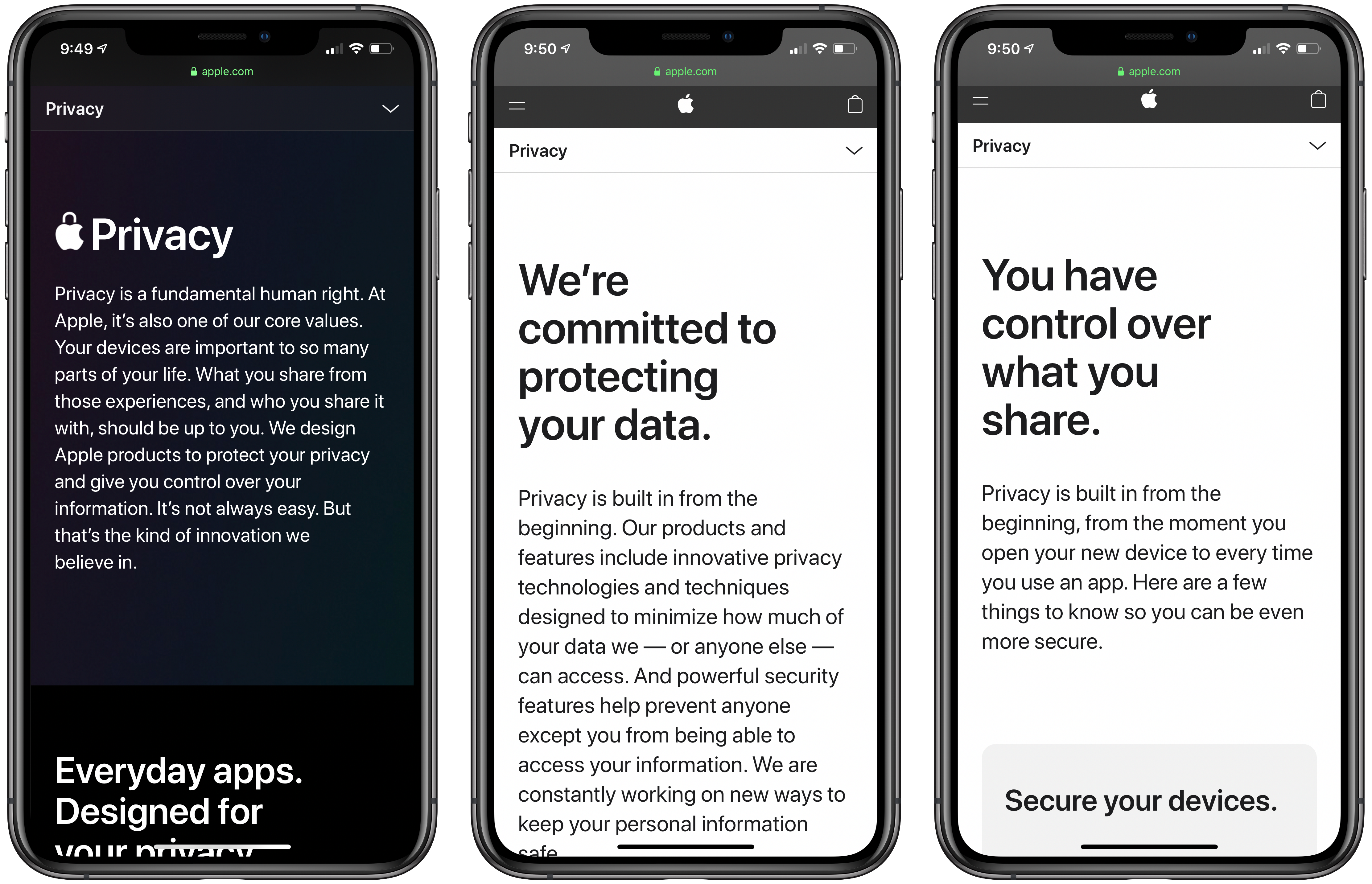

Privacy means peace of mind, it means security, and it means you are in the driver’s seat when it comes to your own data. Our goal is to create technology that keeps people’s information safe and protected. We believe privacy is a fundamental human right, and our teams work every day to embed it in everything we make.

Apple’s efforts to put its customers in control of their data are not new, but as they have evolved and expanded, so have tensions with other tech industry titans like Facebook. The latest friction stems in part from the fact that, in the next iOS and iPadOS betas, Apple will begin testing a system that alerts users when an app wants to share data it collects with other apps, websites, and companies. The most common way apps do this is with the Identifier for Advertisers, or IDFA, a unique code that identifies your device.

Users can already go into the Privacy section of the Settings app to turn off IDFA-based tracking under ‘Tracking,’ but that requires people to know about the setting and find it. Apple’s new system is similar to other privacy flows throughout iOS in that it displays an alert when an app that wants to use tracking is launched, asking the user to grant it permission.

Facebook and others, whose advertising relies on aggregating data about users from multiple apps and websites and then tying it back to a specific individual, see this as a threat to their business models. Attempting to reframe the issue as one of economics, Facebook argues that the change will hurt small businesses that purchase targeted ads because those ads will no longer be as effective.

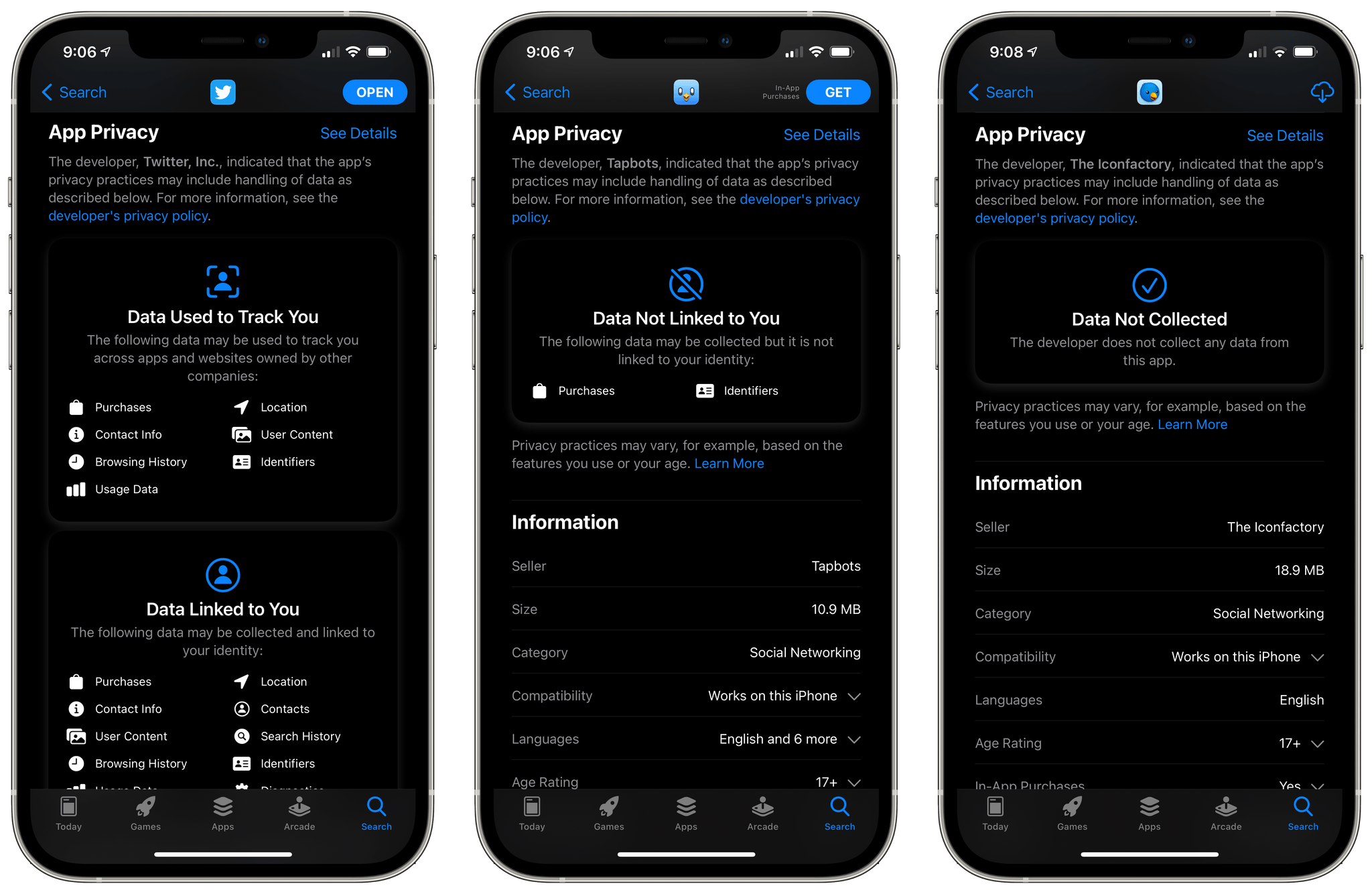

The new privacy feature, which Apple calls App Tracking Transparency, comes on the heels of the standardized privacy disclosures the company began requiring from developers with its Fall 2020 OS updates. For apps like Facebook, the disclosures are extensive, but to its credit, Facebook published its disclosures late last year, while Google still hasn’t.

Apple’s App Store privacy labels make it clear to users that third-party Twitter clients collect far less data than the official app, for example.

Apple’s case study is a day-in-the-life hypothetical that follows a father and daughter throughout their day together. The document is peppered with facts about tracking and data brokers, including a citation to a study that says the average app includes six trackers. Most useful, though, are the case study’s plain-English, practical examples of the kind of tracking that can occur as you go about a typical day’s activities using apps.

It’s impossible to create a case study that users who aren’t security experts will understand without glossing over details and nuances inherent to privacy and tracking. However, for those who want to learn more, the study is extensively footnoted with citations that back up its statements, which I think strikes the right balance. I’ve had tracking turned off on my devices ever since that became possible, and personally, I’m glad to see the feature is going to be surfaced for others who may not be aware of its existence.