In a telephone interview with Matthew Panzarino of TechCrunch, Apple’s Senior Vice President of Software Engineering, Craig Federighi, answered many of the questions that have arisen about Face ID since the September 12th keynote event. Federighi went into depth on how Apple trained Face ID and how it works in practice. Regarding the training,

“Phil [Schiller] mentioned that we’d gathered a billion images and that we’d done data gathering around the globe to make sure that we had broad geographic and ethnic data sets. Both for testing and validation for great recognition rates,” says Federighi. “That wasn’t just something you could go pull off the internet.”

That data was collected worldwide from subjects who consented to having their faces scanned.

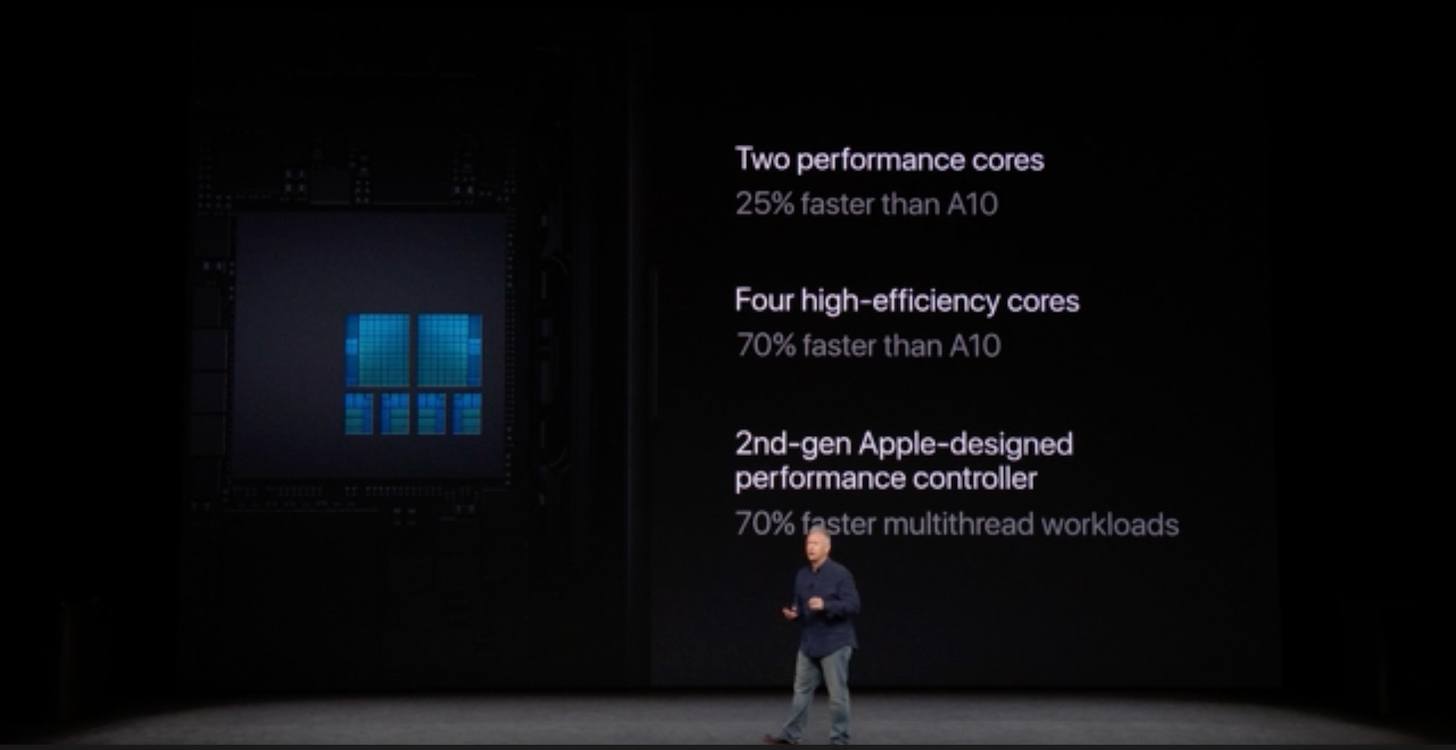

Federighi explained that Apple retains a copy of the depth map data from those scans but does not collect user data to further train its model. Instead, Face ID works on-device only to recognize users. The computational power necessary for that process is supplied by the new A11 Bionic chip, and the data is processed and stored in the redesigned Secure Enclave.

Disabling Face ID works differently from the five presses of the power button used on older iPhones:

“On older phones the sequence was to click 5 times [on the power button] but on newer phones like iPhone 8 and iPhone X, if you grip the side buttons on either side and hold them a little while – we’ll take you to the power down [screen]. But that also has the effect of disabling Face ID,” says Federighi. “So, if you were in a case where the thief was asking to hand over your phone – you can just reach into your pocket, squeeze it, and it will disable Face ID. It will do the same thing on iPhone 8 to disable Touch ID.”

In many respects, the approach Apple has taken with Face ID is very close to that taken with Touch ID. User data is stored in the Secure Enclave, and biometric processing happens on your iOS device, not in the cloud. If you have concerns about Face ID’s security, Panzarino’s article is an excellent place to start. Federighi says that closer to the introduction of the iPhone X, Apple will release an in-depth white paper on Face ID security with even more details.