Well, that didn’t take long.

In yesterday’s second developer beta of iPadOS 26.1, Apple restored the Slide Over functionality that was removed with the debut of the new windowing system in iPadOS 26.0 last month. Well…they sort of restored Slide Over, at least.

In my review of iPadOS 26, I wrote:

> So in iPadOS 26, Apple decided to scrap Split View and Slide Over altogether, leaving users the choice between full-screen apps, a revamped Stage Manager, and the brand new windowed mode. At some level, I get it. Apple probably thinks that the functionality of Split View can be replicated with new windowing controls (as we’ll see, there are actual tiling options to split the screen into halves) and that most people who were using these two modes would be better served by the new multitasking system the company designed for iPadOS 26.
>
> At the same time, though, I can’t help but feel that the removal of Slide Over is a misstep on Apple’s part. There’s really no great way to replicate the versatility of Slide Over with the iPad’s new windowing. Making a bunch of windows extra small and stacked on the side of the screen would require a lot of manual resizing and repositioning; at that point, you’re just using a worse version of classic windowing. I don’t know what Apple’s solution could have been here – particularly because, like I said above, the iPad did end up with too many multitasking systems to pick from. But the Mac also has several multitasking features, and people love the Mac, so maybe that’s fine, too?
>
> Slide Over will be missed, but perhaps there’ll be a way for Apple to make it come back.

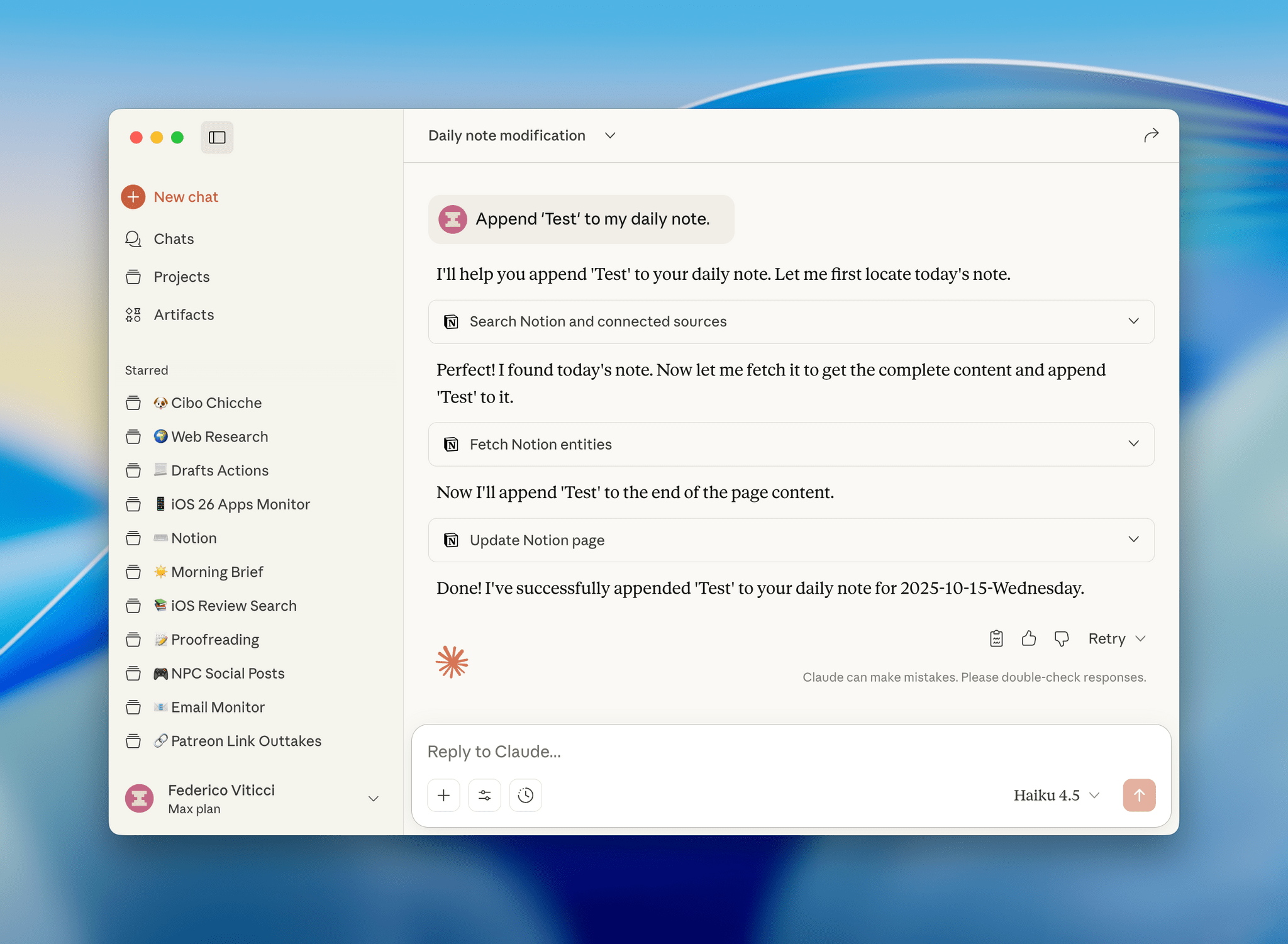

The unceremonious removal of Slide Over from iPadOS 26 was the most common comment I received from MacStories readers over the past month. I also saw a lot of posts on different subreddits from people who claimed they weren’t updating to iPadOS 26 so they wouldn’t lose Slide Over functionality. Perhaps Apple underestimated how much people loved and used Slide Over, or maybe – like I argued – they thought that multitasking and window resizing could replace it. In any case, Slide Over is back, but it’s slightly different from what it used to be.

The bad news first: the new Slide Over doesn’t support multiple apps in the Slide Over stack with their own dedicated app switcher. (This option was introduced in iPadOS 13.) So far, the new Slide Over is single-window only, and it works alongside iPadOS windowing to put one specific window in Slide Over mode. Any window can be moved into Slide Over, but only one Slide Over entity can exist at a time. From this perspective, Slide Over is different from full-screen: that mode also works alongside windowing, but multiple windows can be in their full-screen “spaces” at the same time.

On one hand, I hope that Apple can find a way to restore Slide Over’s former support for multiple apps. On the other, I feel like the “good news” part is the reason that will prevent the company from doing so. What I like about the new Slide Over implementation is that the window can be resized: you’re no longer constrained to using Slide Over in a “tall iPhone” layout, which is great. I like having the option to stretch out Music (which I’ve always used in Slide Over on iPad), and I also appreciate the glassy border that is displayed around the Slide Over window to easily differentiate it from regular windows. I feel, however, that since you can now resize the Slide Over window, also enabling support for multiple apps in Slide Over may get too confusing or complex to manage. Personally, now that I’ve tested it, I’d take a resizable single Slide Over window over multiple non-resizable apps in Slide Over.

Between improvements to local capture and even more keyboard shortcuts, it’s great (and reassuring) to see Apple iterate on iPadOS so quickly after last month’s major update. Remember when we used to wait two years for minor changes?
