On June 8, 2009, at the end of a two-hour WWDC keynote, Phil Schiller was running through a long list of new features and apps that would be available on the iPhone 3GS, due to ship on June 19 of that year. Phil was pinch-hitting as keynote master of ceremonies for Steve Jobs, who was then on leave, recovering from a liver transplant.

At 1:51:54 in the video, just after he showed off Voice Control and the new Compass app, Apple’s version of the accessibility logo appeared on screen. It’s a stick-style figure with arms and legs outstretched. The icon is still used today.

“We also care greatly about accessibility,” Schiller said, and the slide switched to an iPhone settings screen.

For a total of 36 seconds, Schiller spoke somewhat awkwardly about VoiceOver, Zoom, White on Black (called Invert Colors from iOS 6 onward), and Mono Audio – the first real accessibility features on the iPhone OS platform, as it was then called.

And then it was over. No demo. No applause break.

Schiller moved on to describe the Nike+ app and how it would allow iPhone users to meet fitness goals.

I surveyed a number of liveblogs from that day. About half noted the mention of accessibility features in iPhone OS. The others jumped directly from Compass to Nike+. Accessibility hadn’t made much of a splash.

But in the blindness community, things were very different. Time seemed to stop somewhere after 1:51:54 in the video. Something completely amazing had happened, and only a few people seemed to understand what it meant.

Some were overjoyed, some were skeptical, some were in shock. They all had questions. Would this be a half-hearted attempt that would allow Apple to fill in the checkboxes required by government regulations, a PR stunt to attract goodwill? Or would it mean that people who had previously been completely locked out of the iPhone would have a way in?

You can probably guess what the answer is, now that we have ten years of an accessible mobile platform in the rearview mirror – now that Apple is widely credited with offering the best mobile accessibility experience available. But it didn’t all happen at once, and not every step along the way was a positive one.


Excerpt from ‘36 Seconds That Changed Everything.’

As a companion to my audio documentary, “36 Seconds That Changed Everything: How the iPhone Learned to Talk,” I’ve put together a timeline of iOS accessibility milestones from the past ten years. I’ve focused on Apple hardware and operating systems, though there have also been important Apple app updates, and third-party apps that opened doors to new ways of using iOS accessibly. It’s a list that’s simply too long for this article. And, with a few exceptions, I’ve addressed accessibility-specific features of iOS. Many mainstream features have accessibility applications and benefits, even if they don’t fit here directly.

Before the iPhone Was Accessible (2007-2009)

The number of blind Mac users tuned in to hear Steve Jobs introduce the iPhone at the 2007 Macworld keynote was probably very small. After all, the Mac had only been accessible for a couple of years at that point, by which I mean usable via screen reading software for people who were blind or had low vision.

Apple’s Mac screen reader was (and is) called VoiceOver, and it had been introduced with 10.4 Tiger, in 2005. Prior to Tiger, no version of Mac OS X had included a screen reader. Pre-Mac OS X, Apple did offer a few accessibility settings, and the Outspoken screen reader was available from a third party. Some blind tech enthusiasts had adopted the Mac following VoiceOver’s arrival with Tiger, and it was they who were most interested in whether the iPhone would be accessible.

But in 2007, the hordes of eager iPhone purchasers did not include people who were blind or had low vision. The iPhone wasn’t accessible to them. And lots of people, both blind and sighted, assumed it never would be. After all, how can someone with no vision navigate a cold, smooth piece of glass?

In the spring of 2008, Apple added VoiceOver to the iPod nano; at the same time, iTunes on the Mac became accessible to VoiceOver. That’s right, the old Carbon app had not worked with the screen reader before.

This meant that a blind person could now connect a nano to iTunes, enable VoiceOver on the device, copy songs to it, and use VoiceOver to find and play them. Strictly speaking, “VoiceOver” on the nano was a set of audio files, created with text-to-speech when you enabled the feature in iTunes. But it was a lot better than no speech at all.

With iTunes on the Mac now accessible, Apple had laid the groundwork for iPhone accessibility, since a blind person could sync an iPhone to their computer using a screen reader. Next stop: a real, accessible iPhone.

iPhone OS 3 and the 3GS (June 2009)

The iPhone announcement everyone expected at WWDC 2009 promised to be a big one. With the App Store a year old, and two years of development time under Apple’s belt, the common expectation was for a substantive iPhone update with a long list of new features. The iPhone 3GS, subsequently regarded as an important step forward for the platform, was a solid release, and iPhone OS 3.0 brought such important and overdue advancements as copy/paste.

A few people who followed Apple’s accessibility trajectory received word, shortly before WWDC, that something might be coming that would interest them, but they didn’t know what, and they kept their expectations of a presumed VoiceOver for iPhone in check. The hurried, late-in-the-day unveiling of VoiceOver, Zoom, White on Black, and Mono Audio brought its own kind of uncertainty. The lack of a demo inspired no confidence, and beyond that, existing devices couldn’t run the new accessibility features. To get accessibility, you’d have to wait for and buy an iPhone 3GS or, if you couldn’t get out of your phone contract or didn’t have the money, wait for the new iPod touch (eventually released in September 2009). Users who had been content with, or at least resigned to, their phones suddenly found themselves signing up with AT&T and plunking down for the new, accessible iPhone.

How VoiceOver Works

Of the four new accessibility features in iPhone OS 3, VoiceOver was the most important. With VoiceOver enabled, touching the iPhone screen caused the device to speak what was under your finger – app name, menu item, or button label. The gestures you used to operate the phone also changed from the default. Instead of tapping once to open an app, you would double-tap; flicks became two-finger flicks; and you typed on the virtual keyboard by first selecting (tapping) a key, then double-tapping. VoiceOver also included an interface control called the rotor, which functions a bit like a contextual menu. It’s among the most ingenious and underrated elements of VoiceOver, and it’s only grown in importance and functionality over time. To use it, you place two fingers on the screen and twist, as if turning a knob, and iOS speaks contextual options in response. In Notes, for example, the options let you read by line, word, or character, which makes editing text practical.

The Rest of the Accessibility Features

Zoom, as you might imagine, magnifies the iPhone screen. Pinch-to-zoom was available in the original iPhone OS, but only worked within certain supported apps, like Safari. The new systemwide Zoom magnified the screen throughout the interface. White on Black (later renamed Invert Colors) was a sort of early dark mode, turning the screen image inside out – dark background with light text. Many low vision users who are sensitive to light find this arrangement easier to see, even with the inconvenience of images that appear as negatives. Mono Audio plays the left and right stereo channels together in both ears, so a user with hearing loss in one ear doesn’t miss sound that was mixed into only one channel.

Third-Generation iPod touch (Fall 2009)

If you couldn’t get out of your phone contract, or didn’t want to move to AT&T, your next opportunity for an accessible Apple device came with the release of the third-gen iPod touch; older iPod touch models couldn’t run the new accessibility features.

The biggest drawback to the Wi-Fi-only iPod, of course, was that it lacked the GPS-based navigation features of the iPhone, and navigation was of profound interest to blind users. Even so, the touch was clearly the best Internet-capable mobile device available for people with disabilities if you were on a budget, or yoked to a phone contract that didn’t allow an iPhone.

First-Generation iPad and iBooks (Spring 2010)

One of the iPad’s hallmark features makes it an obvious choice for accessibility: it’s considerably larger than an iPhone, and therefore easier to see if you have low vision. It was also easier to hold for some people with physical disabilities, though more cumbersome for others. But another feature of the first iPad was also interesting to people with blindness or reading disabilities: the iPad was the first Apple device to include the iBooks app and the iBooks Store. Not only could you add any book Apple offered to the iPad, you could use VoiceOver to read it aloud. This meant that if a book wasn’t available in Braille, on tape, or in another accessible format, an iPad owner could gain access to all sorts of new reading material.

The first-gen iPad also provided a preview of new features that would end up in iOS 4 in the fall. A few were new VoiceOver gestures, like the two-finger scrub, and one was a major upgrade for a VoiceOver user who wanted to type on the virtual keyboard. It’s called Touch Typing. But I’ll save the description of this feature for the next section, because it got a bit easier to use with iOS 4.

iOS 4 (Fall 2010)

Accessibility received important updates in iOS 4, some of which conferred legitimacy on iOS in the eyes of Windows-centric skeptics. Bluetooth keyboard support meant that a blind user need not struggle to type on the virtual keyboard. Bluetooth also made support for refreshable Braille displays possible. The number of such displays supported by iOS increases with each version, and Apple keeps a running list online. With a Braille display, you can type in Braille rather than on a print keyboard, and what you type is translated into print when it reaches the iPhone. You can also have VoiceOver’s spoken interface translated into Braille and delivered via the Braille display, which allows someone who is both deaf and blind to use an iOS device with VoiceOver. Braille support has kept evolving ever since, right up through iOS 13.

iOS 4 also brought Touch Typing, first introduced as a second typing mode on the iPad, to the phone. Touch Typing is a faster way than Standard Typing for VoiceOver users to type on the virtual keyboard. You don’t need to select a key and then double-tap to enter it; just tap once to press the key. The split-tap gesture is what really makes Touch Typing a far more efficient way to type with VoiceOver: you can leave your hands on the keyboard, raising a finger to type a key. You enter Touch Typing mode with a twist of the VoiceOver rotor.

A web-centric rotor, with specific options for navigating through elements in Safari, was new in iOS 4 too. It would eventually be folded into the general-purpose rotor, but iOS 4 is where web-specific options first appeared.

iPhone 4s and Siri (Fall 2011)

The iPhone 4s was the first phone to include Siri. It isn’t an accessibility feature, per se, but a person with a physical or vision disability can control iOS via voice, saving time and complexity.

iOS 5 (Fall 2011)

iOS 5 freed all users from the need to set up a device from a computer, and VoiceOver users gained the ability to perform setup on their own with the screen reader. Before that, a blind user could initiate setup via iTunes, since Mac OS X also included a screen reader. On-device setup was made easier by a change to the triple-click Home button. Previously, a triple-click brought up a choice between Zoom and VoiceOver, which a user who couldn’t see the screen had no way to read. In iOS 5, triple-clicking launched VoiceOver by default, so you could triple-click during setup to invoke VoiceOver and proceed without sighted assistance.

New voices became available in iOS 5. They were enhanced versions of the existing voices, which makes a difference if you’re using them all day, especially if you’re reading web articles or other long-form content.

Low vision users got a new Zoom gesture that made it easy to activate the feature only when needed. Enable Zoom in Settings, then do a three-finger double-tap to actually enlarge your screen view. Repeat the gesture to exit the zoomed-in view. A three-finger double-tap and drag up or down adjusts the amount of zoom.

iOS 5 also added a couple of new speech features: Speak Selection and Speak Auto-Text. With Speak Selection enabled, you can drag to select text anywhere in iOS, and the menu that appears includes a Speak button. Speak Auto-Text speaks auto-corrections and auto-capitalizations as they’re applied. Both features draw on the same selection of voices as VoiceOver, but you can choose the voice and speaking rate for them independently of VoiceOver.

The VoiceOver Item Chooser also made its debut in iOS 5. When visiting a webpage with lots of links and content items, invoke the Item Chooser to bring up an alphabetical list of elements on the page. If you know the one you want, start typing to narrow your search, then double-tap the item to jump to it. The Item Chooser can be a useful timesaver when navigating a familiar site. For Bluetooth keyboard users, a number of web-specific VoiceOver keyboard shortcuts were added as an alternative, allowing a user to move through headings by typing ‘H’ on a connected keyboard, for example.

Face detection in the Camera app made its debut in iOS 5. With VoiceOver on, point the device at a subject or subjects and VoiceOver will indicate how many faces the camera sees, and where they are in the frame.

The former web rotor features were folded into the main rotor, and more rotor options were added, as well as more tools for managing the way the rotor behaved. You could reorder rotor items, and add or remove items to simplify and customize what appeared there.

Custom VoiceOver labeling was also added, allowing the user to give a label to a control in an app that the developer had not labeled. Many apps are partially accessible to VoiceOver, meaning they can be navigated and used with the screen reader, though one or more buttons or other controls might not have an accessibility label. Custom labeling allows the user to compensate for that flaw.

Hearing Features

The iPhone always supported custom ringtones, of course, but iOS 5 brought custom vibration patterns. If you have a hearing loss, or just want to get fancy with vibration patterns for individual callers, choose one that’s provided or tap out your own on-screen and save it.

LED Flash for Alerts can be used in addition to, or instead of, an audible ringtone to get your attention visually.

A new Hearing Aid mode allowed iOS devices to work better with Bluetooth hearing aids. This was the beginning of enhanced support for hearing aids that would blossom into a larger initiative in later iOS releases: Made for iPhone hearing aids. iOS devices going back to the iPhone 5 are Hearing Aid Compatible (HAC) as defined by the US Federal Communications Commission.

iOS 6 (Fall 2012)

The 2012 release of iOS included accessibility features in one entirely new category – physical accessibility – and one feature that ended up mattering a lot to educators and parents. AssistiveTouch was the first iOS feature that supported those who otherwise had difficulty making gestures effectively because of motor delays. Home-Click Speed allowed the user to adjust the sensitivity of the Home button, making it easier for a person with a motor delay to click it. The feature for educators and parents, Guided Access, even got a WWDC keynote demo.

It’s worth noting – just because I’ve recently had reason to pick up an old iPhone 3GS – that iOS 6 was the last version of iOS that would run on the first accessible iPhone. But by this point accessibility had come a long way, even in the three years since the 3GS and iPhone OS 3 were launched.

VoiceOver added Actions in iOS 6. Select an item and flick up or down to hear actions you could take, like flagging or deleting an email, for example; a double-tap performs the action. It’s a bit like using the VoiceOver rotor without having to do the rotor gesture to activate it first.

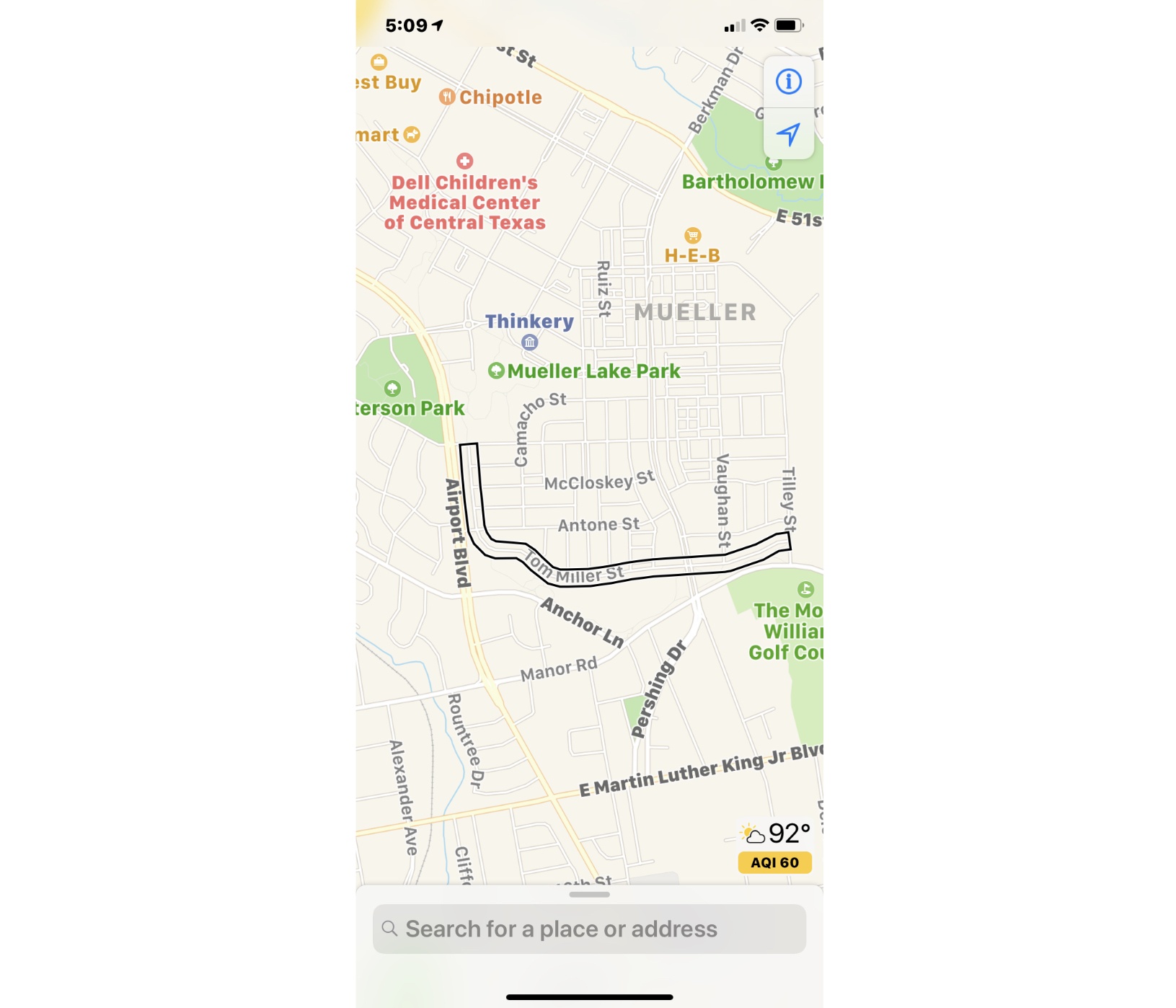

Maps got a major update in iOS 6, and so did its support for VoiceOver users. Pause to Follow allows you to locate and select a street, then drag a finger when you hear “Pause to Follow,” giving you a sense of what direction the road takes, and how straight or curvy it is. Audible feedback guides you as you follow the street, and it’s highlighted visually, too, for use by someone with low vision. The VoiceOver rotor also included a Points of Interest item, allowing the user to flick through nearby POIs to find a specific one, or just to browse one’s surroundings.

Guided Access

New in iOS 6, Guided Access let you restrict access to elements of iOS – for example, disabling the volume or Home buttons, or preventing users from accessing certain apps. Guided Access makes iPad-based kiosks possible, but it also allows teachers to focus the attention of students – often kids on the autism spectrum. Invoke Guided Access and you can freely hand the student an iPad that can only run the education app or learning game you want them to use. You can also “mask off” buttons or other interface elements within the chosen app.

AssistiveTouch

Some people with physical disabilities – motor delays – have the ability to perform some touch screen gestures, but may not be able to do so as quickly or reliably as required to use them consistently. Or pressing the Home or side button might be a challenge. AssistiveTouch creates a contextual, hierarchical on-screen menu of gestures that is collapsed at the edge of the screen until the user taps to view the menu. A user can also create custom gestures and save them as AssistiveTouch items.

The Woes of iOS 7 (Fall 2013)

Apple famously redesigned the look of the iOS interface and practically all of its own apps. Skeuomorphism was out, and thin fonts, transparent layers, and animation were in. These were profoundly bad moves for accessibility, especially for low vision users who count on high contrast, and interface elements whose functions are apparent without requiring sharp eyes and a visual frame of reference. The iOS 7 backlash actually produced a number of improvements to accessibility, as Apple fixed what it had broken, either by toning down the initial design of the OS, or adding accessibility settings that could compensate if you found a particular design element to be problematic. These included Button Shapes, Reduce Motion, and On/Off Labels. In addition, iOS 7 introduced Increase Contrast, Bold Text, and a more sophisticated version of Larger Text. These became more important as a means of dealing with the 7.0 redesign. Things evened out in iOS 7.1, which I said at the time was the only iOS release created especially for low-vision users like myself.

Flaws notwithstanding, iOS 7 was a big release for accessibility. That became apparent with a look inside the Settings app: in iOS 7, Accessibility moved up from the very bottom of the General category to near the top. It mattered on an emotional level for users of these tools, and on a practical level for people who hadn’t spent much time with access tools but planned to explore them now that iOS 7 had added so much.

iOS 7 introduced many mainstream interface updates, including Control Center and a beefed-up app switcher. These were immediately accessible to VoiceOver and AssistiveTouch, as well as the new Switch Control interface. Apple points out that FaceTime, which had arrived back in iOS 4, functions as an accessibility feature for people who communicate with sign language or lip-reading, since both depend on seeing the other person on video.

Dynamic Type

A feature called Larger Text had been available in iOS 6, though it didn’t actually affect much of iOS. You could choose from six enlarged text sizes, and you’d see them in a few apps, like Messages or Mail. Larger Text in iOS 7 and later is the user-side interface to Dynamic Type. If a developer supports Dynamic Type in an app, the user can use the Text Size slider to make its text larger or smaller. Even Apple didn’t support Dynamic Type in all of its iOS 7 apps, but the company has gradually fixed that. Many other developers have embraced the feature, but others, most notably Google, have not.

Made for iPhone Hearing Aids

Technological improvements in Bluetooth Low Energy (BLE) specs, plus a concerted effort by Apple, extended the Made for iPhone (MFi) program to hearing aids that the company began certifying as compatible with iOS devices. Apple never built hearing aids of its own, but it did work with manufacturers to add to the number of MFi products that were, and are, available.

Subtitles and Captioning

Movies and TV shows purchased from iTunes, or acquired from other sources, can be viewed with closed captions or subtitles. iOS 7 introduced an interface for customizing the style and size of text that appears on captioned videos.

VoiceOver Handwriting

VoiceOver Handwriting support is an interesting, seemingly niche feature that allows a VoiceOver user to input text by drawing print text characters with a finger, on-screen. If a blind user is familiar with the shape of those characters, he or she enters Handwriting mode via the rotor and can then start writing with a finger. There are gestures to indicate a space, and for common punctuation symbols. You can also use Handwriting to locate apps on the Home screen. Enter Handwriting mode with the rotor, then draw the first letter of the app you want, and iOS finds the ones that match; Handwriting can be used to navigate webpages, too.

Switch Control

AssistiveTouch had been a part of iOS since iOS 6. Switch Control extended the idea of an interface that supported users with motor delays. With it, you use external switch devices, which are two-state buttons. It’s also possible to use the iOS screen itself as a switch, or even the camera. By looking left or right, you activate a camera-based switch. To work with switches, you assign each to a function, like tap or flick left, or open Control Center or invoke Siri. In Switch Control mode, iOS scans from item to item on-screen until a switch is pressed to activate or otherwise act on the item. Many switch users equip their iOS setups with multiple devices. And the iPad, mounted on a wheelchair, along with switches, can create a very effective iOS rig for someone with severe motor disabilities.

Accessibility Shortcut

The feature formerly known as Triple-Click Home allows you to turn accessibility features on and off with a triple-click. Multiple accessibility features can be added to the shortcut. When triggered, iOS presents a menu of those you’ve included.

iPhone 6 and 6 Plus (Fall 2014)

The first “big” iPhones included a new feature called Display Zoom. It’s not an accessibility feature, but it does enlarge your view of the screen by decreasing its resolution. Fewer app icons appear on-screen, and selected iOS screens appear to have larger text. Display Zoom can be used in conjunction with the accessibility zoom feature, or alone.

iOS 8 (Fall 2014)

A couple of mainstream features that were new in iOS 8 had important accessibility implications. The QuickType keyboard, which enabled text prediction and displayed a bar with typing suggestions above the virtual keyboard, also had a profound effect on the way VoiceOver users were able to type on-screen. The new Direct Touch Typing mode allowed a VoiceOver user to put both hands on the virtual keyboard and just begin to type. QuickType, auto-correction, and VoiceOver’s own know-how combine to correct the typist’s mistakes with a high degree of accuracy. It’s not for everyone, but good typists, especially iPad users, can dramatically improve their typing with Direct Touch.

Support for third-party keyboards is an iOS-wide feature with accessibility implications. A few keyboards that were intended to improve visual access have been released over the years, as well as keyboards aimed at kids with physical or learning disabilities.

The highly regarded Mac OS X voice, Alex, came to iOS with version 8. Alex provides natural-sounding “breath” as it speaks, making it much better for long-form reading than older alternatives, even the high-quality voices added in iOS 5. iOS 8 also added a grayscale mode. A screen without bright colors can be easier for some people to see, and it produces less glare, too. Guided Access got an update, including timers and support for Touch ID activation. Zoom was improved too: the new Zoom Controller allowed a user to choose a region of the screen to zoom, adjust the rate of zoom, and use color filters to accommodate color blindness.

Braille Screen Input

Like the Handwriting feature added in iOS 7, Braille Screen Input facilitates on-screen text entry. Instead of drawing characters with your finger, you enter each Braille character by tapping the dots of a six-dot Braille cell on-screen. It’s a useful feature for Braille natives, who might not have a Braille display handy when working on an iPhone.
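For readers who haven’t used Braille, here is a rough idea of what a six-dot cell encodes. This is a toy Python decoder, not Apple’s implementation: it assumes each chord arrives as a set of dot numbers (1 to 3 down the left column, 4 to 6 down the right), handles only the 26 uncontracted letters, and the names `build_letter_table` and `decode_cells` are hypothetical. Real Braille input also involves contractions, numbers, and punctuation, which this sketch ignores.

```python
# Toy decoder for uncontracted (letter-by-letter) Braille: each cell is the
# set of raised dots, numbered 1-3 down the left column and 4-6 down the right.

# Letters a-j use only the top four dots (1, 2, 4, 5).
FIRST_DECADE = {
    "a": {1}, "b": {1, 2}, "c": {1, 4}, "d": {1, 4, 5}, "e": {1, 5},
    "f": {1, 2, 4}, "g": {1, 2, 4, 5}, "h": {1, 2, 5}, "i": {2, 4}, "j": {2, 4, 5},
}


def build_letter_table():
    """Map each letter's dot pattern (as a frozenset) to the letter."""
    table = {}
    for letter, dots in FIRST_DECADE.items():
        table[frozenset(dots)] = letter
        # k-t repeat the a-j patterns with dot 3 added.
        table[frozenset(dots | {3})] = chr(ord(letter) + 10)
    # u, v, x, y, z repeat a-e with dots 3 and 6 added.
    for base, letter in zip("abcde", "uvxyz"):
        table[frozenset(FIRST_DECADE[base] | {3, 6})] = letter
    # w is the one exception to the pattern.
    table[frozenset({2, 4, 5, 6})] = "w"
    return table


LETTERS = build_letter_table()


def decode_cells(cells):
    """Turn a sequence of chords (sets of dot numbers) into text.

    An empty chord stands in for the space gesture in this sketch;
    unknown patterns (contractions, punctuation) come back as '?'.
    """
    out = []
    for dots in cells:
        if not dots:
            out.append(" ")
        else:
            out.append(LETTERS.get(frozenset(dots), "?"))
    return "".join(out)


if __name__ == "__main__":
    # Dots 1-2-5 followed by dots 2-4 spell "hi".
    print(decode_cells([{1, 2, 5}, {2, 4}]))
```

The table is built from the regular structure of the Braille alphabet: k through t repeat a through j with dot 3 added, and u, v, x, y, and z repeat a through e with dots 3 and 6 added.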

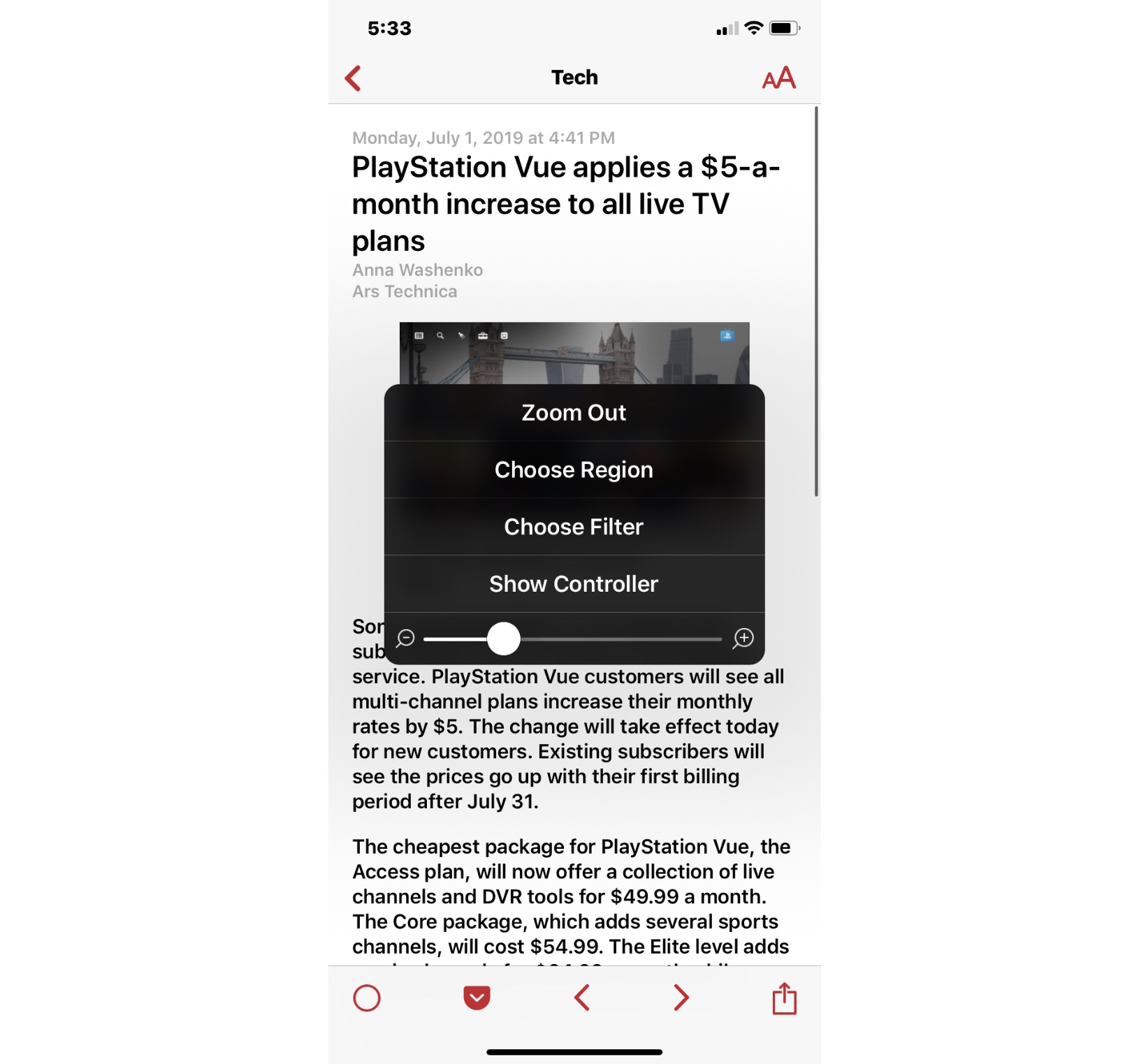

Speak Screen

Once Speak Screen is enabled, just flick down with two fingers from the top of the screen to have its contents – email, webpage, or book, for example – spoken. This is useful for non-VoiceOver users who nonetheless benefit from hearing the contents of a long text passage. Speak Screen places a pop-up menu on the screen, with options for changing the reading rate, moving forward or back, and stopping and starting speech.

Apple Watch (Spring 2015)

The Apple Watch is relevant in an iOS timeline because you generally need an iPhone to use one. When the first Watch was announced, it wasn’t clear whether it would include accessibility features. It did, including VoiceOver and Zoom. Later versions have added wheelchair workouts and a variety of health features.

iOS 9 (Fall 2015)

3D Touch came to new iPhones in iOS 9, and people who focused on accessibility wondered whether the feature would be available to them, given the need for alternate VoiceOver gestures, and the need to press firmly to invoke 3D Touch. The answer was “yes” on all counts, as VoiceOver users with supported phones got access to the first-level 3D Touch gesture, as well as Peek and Pop. 3D Touch items joined the AssistiveTouch menu, meaning you could use the menu, rather than pressing firmly on an app, to reveal its 3D Touch options. New accessibility settings also made the phone more forgiving of unsteady 3D Touch attempts, if you wished to enable those features. iPad multitasking meant new gestures that allowed a VoiceOver user to work with Split View or Slide Over. Again, users of accessibility tools had parity with everyone else, right out of the box.

Accessibility-wise, iOS 9 was a fairly minor release, but it did include new settings for people with physical disabilities, along with some VoiceOver updates. Here’s an exhaustive list of VoiceOver-related updates, minor and less minor.

Touch Accommodation

This new feature allows a user to adjust the sensitivity of the touch screen. It’s useful for people with motor delays who either activate items inadvertently with a touch, or need a more sensitive action to register their fingers’ movements. Timing for several options can be adjusted under Touch Accommodation.

Keyboard Behavior

New options allowed a user with a physical disability to control the way a hardware keyboard responded to their touch.

Switch Recipes for Switch Control

With Switch Control, each switch performs a discrete function. Switch Recipes let a user combine several actions into a single task and assign that task to a specific switch.

VoiceOver Text Selection Rotor Changes

Changes to the VoiceOver rotor made it easier to select text by character, word, line, or page.

iOS 10 (Fall 2016)

By 2016, accessibility support in iOS could be counted on to improve with each release. Sometimes, as in iOS 9, the updates were cosmetic or consisted mostly of bug fixes. Most iOS 10 changes were on that scale, too, but still welcome. However, there were a couple of entirely new features as well.

On the mainstream front, voicemail transcription became available in this release. It’s helpful to anyone, but it offered extra value to users with hearing impairments, who might find sorting through voicemail easier with some textual guidance. VoiceOver users got a new pronunciation editor, enabling them to say or type a name the way VoiceOver should say it, and save the correct pronunciation. VoiceOver audio routing also made its debut, meaning you could pipe VO audio to an output of your choice, like a Bluetooth speaker. Switch Control now allowed users to control devices connected to an iOS device, including the Apple TV.

VoiceOver Image Description

iOS 10 allowed you to select an image in Photos, or in a Messages thread, and have VoiceOver attempt to describe its characteristics. This wasn’t full-on machine learning, but it did act as sort of a photography clinic. An image might be “one face, blurry, dark,” or “crisp, well-lit.”

Magnifier

Magnification apps for iOS had been around for years. In addition to simply using the camera to zoom in on the item you wanted to see better, most offered access to the flash as a magnification light, and they allowed you to freeze the image you were looking at. When Magnifier became an iOS feature, it was accessible directly from Control Center or using the Accessibility Shortcut, and you could apply color filters, as is common in electronic handheld magnifiers aimed at the low vision market.

Color Filters

iOS had offered a grayscale toggle since iOS 8, but now there were color filters, designed to adjust the screen view based on the needs of users with three common forms of color blindness.

Software TTY

iPhone users have always been able to connect their phones to a TTY, also called a TDD (Telecommunications Device for the Deaf), provided they had the proper hardware adapter. As of iOS 10, you could place or receive a TTY call entirely in software, as well as connect to the TTY relay service.

iOS 11 (Fall 2017)

The introduction of the first iOS device without a Home button, the iPhone X, had implications for accessibility, not only because of changes in gestures, but because of Face ID and the challenges it poses to some blind users. iOS 11 also brought several new and updated accessibility features, including VoiceOver support for drag and drop, and VoiceOver-specific gestures for iPhone X owners, since the absence of a Home button changed the default gestures used to do things like open Control Center or the app switcher. VoiceOver also expanded on the method of moving apps introduced in iOS 10: it was now possible to use the rotor to select and drag multiple apps anywhere on any Home screen.

Face ID

iOS 11 was the first iOS release to support Face ID devices. Because some people with disabilities, particularly those who are blind and have prosthetic eyes, are unable to give the required attention to the Face ID sensor, iOS added a toggle that allows the phone to unlock without requiring eye contact. Face ID is somewhat controversial in the blind community because of the perceived security risk of not requiring attention, and because it is difficult for some blind users to get Face ID to work. Others love the feature, though. The Require Attention toggle, which is on by default, is a security feature that prevents a phone from unlocking if it’s simply passed in front of the face of its owner. Turning the toggle off allows more blind people to unlock a phone with Face ID, but the user is potentially at risk of having the phone unlocked without their consent. When setting up a Face ID-equipped device using VoiceOver, the user is asked whether to leave Require Attention on or turn it off.

Smart Invert Colors

As dark mode comes to iOS for the first time with iOS 13, it's worth remembering that Invert Colors has been part of accessibility settings since iPhone OS 3 (originally as White on Black), and Smart Invert Colors joined the roster in iOS 11. The original Invert Colors renders everything as the reverse of its normal appearance. Smart Invert, by contrast, displays images as positives rather than negatives, as long as the app in question supports it. You can still choose between Invert Colors and Smart Invert Colors, and add either to the Accessibility Shortcut.

Why would you choose the original Invert Colors? Well, it's like this: with Smart Invert Colors on, some apps' menus or backgrounds are not rendered in colors that contrast with the text or icons atop them. This even happens occasionally in Apple apps, but mostly it's a problem in apps whose developers haven't really considered how their interface will behave with Smart Invert Colors turned on. Additionally, a number of apps do not correctly render images in Smart Invert Colors. I believe the combination of these two problems is why neither invert option works as an effective dark mode for iOS. From what I've seen, dark mode offers developers the opportunity to use shading and layers to customize the look of an interface. That may benefit Smart Invert Colors users, too, as may a general awareness that people other than those with low vision want to use iOS in a dark mode context.

Type to Siri

If you can't speak, or it's inconvenient to do so, Type to Siri lets you issue Siri commands from the keyboard. You can type on the onscreen keyboard or a Bluetooth keyboard, or in Braille from a Braille display.

iOS 12 (Fall 2018)

All iOS releases include bugs. Users of accessibility features often find the ones that affect features they rely on every day, and then wait longer than seems reasonable for Apple to fix them. Braille support and VoiceOver itself were buggy in iOS 11. iOS 12 seemed to squash many nasty accessibility pests, as it did bugs across the OS, and it added some new functionality as well.

Shortcuts

Automating a multistep task so that it runs with a single voice command or a single tap offers great accessibility benefits for people with all kinds of disabilities. The interface for creating Shortcuts is fully accessible, too.

Live Listen

It's been possible for a while now to use the iPhone's microphone as an ambient sound amplifier that sends audio to a compatible hearing aid. In iOS 12, the Live Listen feature was extended to AirPods. With Live Listen turned on, you can wear AirPods in a noisy restaurant and place your phone on the table, with its microphone aimed at a dinner companion, to hear them better.

iOS 13 (Fall 2019)

Accessibility received a lot of attention at WWDC 2019. We don't yet know which existing features will be updated, or how they will interact with the mainstream changes planned for the OS. But here are two features discussed at WWDC that promise to be important.

Voice Control

The newest accessibility feature announced for iOS 13 and macOS Catalina is completely new, but it has a very old name. Before Siri, iOS had a feature (it still does, actually) called Voice Control. You could initiate a phone call or play a song with a voice command, and that was about it. The new Voice Control is a modern, much more thorough take on controlling your device with your voice. It's designed for people with physical disabilities who have difficulty with touch gestures or with using a mouse and keyboard. Voice Control can overlay a numbered grid on the screen, so you can include a grid area's number in a command and act on the items in that location.

Dark Mode

We don’t know all of the differences between the coming mainstream dark mode feature and Smart Invert Colors. Apple has said that developers will be able to use a layered approach to their interfaces, so that not all screens or parts thereof will have to be the same dark shade. That sounds like an improvement over Smart Invert Colors, and if developers apply layers carefully, dark mode could be a suitable alternative to Smart Invert Colors for some people with low vision who use it.

If you measure iOS accessibility by the sheer number of features available today, 2009 seems like a primitive time. Just four items appeared on Phil Schiller's WWDC keynote slide that year. But at least one of them – VoiceOver – revolutionized accessibility on the iPhone almost immediately. Apple has continued to make big and little accessibility updates over the past ten years, yet most people who track the platform's progress barely know about them. The good news for those who do follow accessibility advancements is that Apple still manages to surprise and empower new groups of people by building the tools those people need to thrive.