Amazon’s Big Deal Days runs today and tomorrow (October 7–8), so I thought I’d share some of the best deals I’ve discovered so far. We’ll be keeping an eye out for other deals and posting them on the MacStories Deals accounts on Mastodon and Bluesky, too, so be sure to follow us on either for the very latest finds.

For prices, be sure to visit the MacStories Amazon storefront.

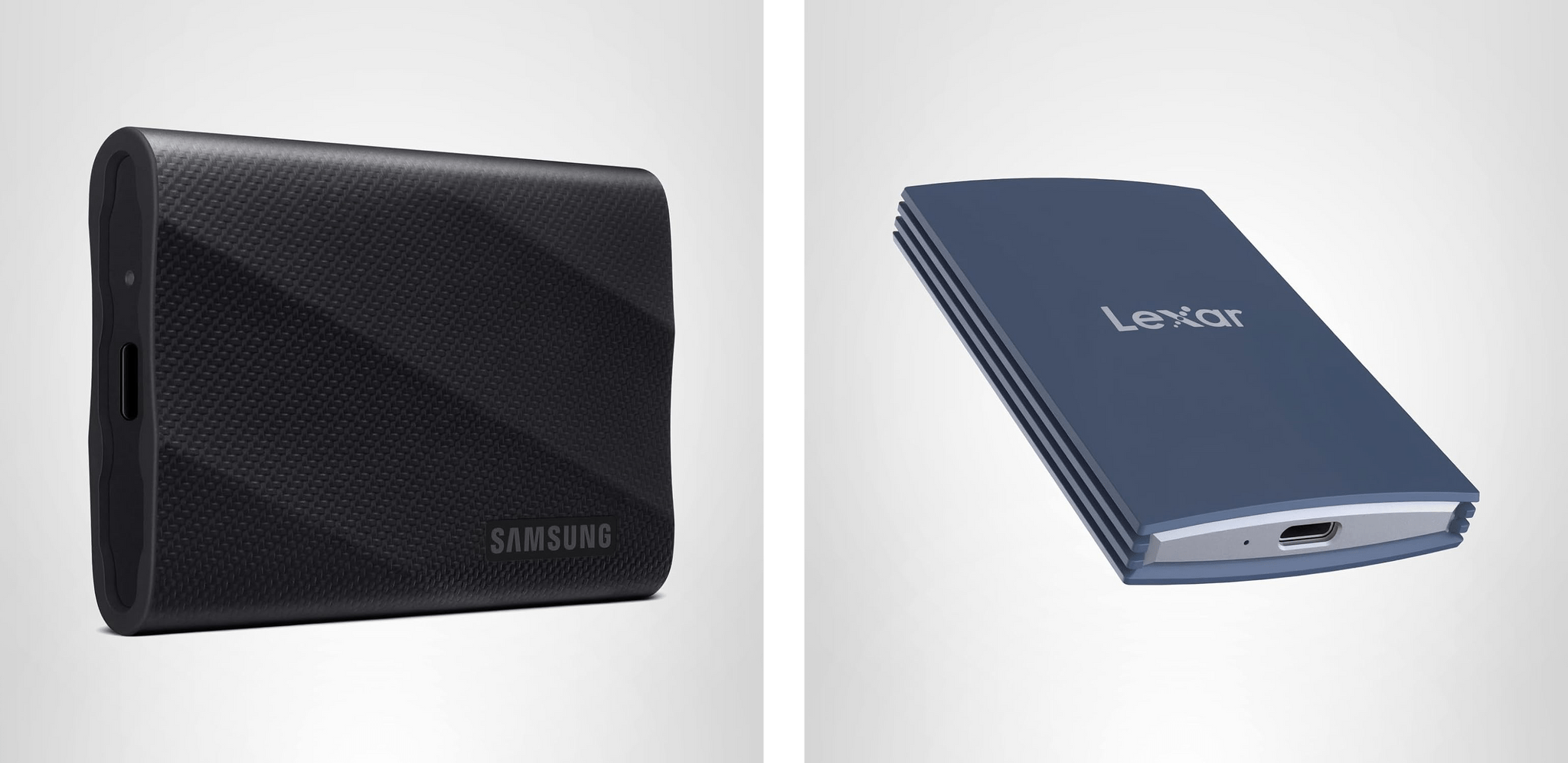

Portable SSDs: Samsung T9 and Lexar Armor 700

Events like Big Deal Days are always a great opportunity to pick up fast external SSD storage. I’ve used Samsung’s T line of external drives for years, starting with the T5. Lately, I’ve switched to the company’s T9 portable SSDs because they support USB 3.2 Gen 2x2, which delivers transfer speeds of up to 2,000 MB/s, the fastest you can get short of something like Thunderbolt 4 or 5. That’s plenty fast for working with big files or moving a bunch of smaller files from one device to another. I’ve used the T9 for Carbon Copy Cloner backups, Time Machine backups, editing in Final Cut Pro and Logic Pro, and moving my ROM library from one handheld device to another, and it’s been reliable in every case.

During Big Deal Days, the biggest discount is on the 2TB model, with the 1TB model a close second. Depending on your storage needs, you can’t go wrong with either.

More recently, I also started using Lexar’s Armor 700 Portable SSD. In fact, it was one of these drives that we used to transfer huge video and audio files from Apple’s podcast studio at WWDC back to my hotel room for editing.

Like Samsung’s T9, Lexar’s drive offers transfer speeds of up to 2,000 MB/s, plus an aluminum case for heat dissipation, a rubberized exterior to help protect against drops, and IP66 water and dust resistance. I’ve been using the 1TB model of the Armor 700, but it’s the 4TB model that’s on the deepest discount during Big Deal Days.

For prices, visit the MacStories Amazon storefront.

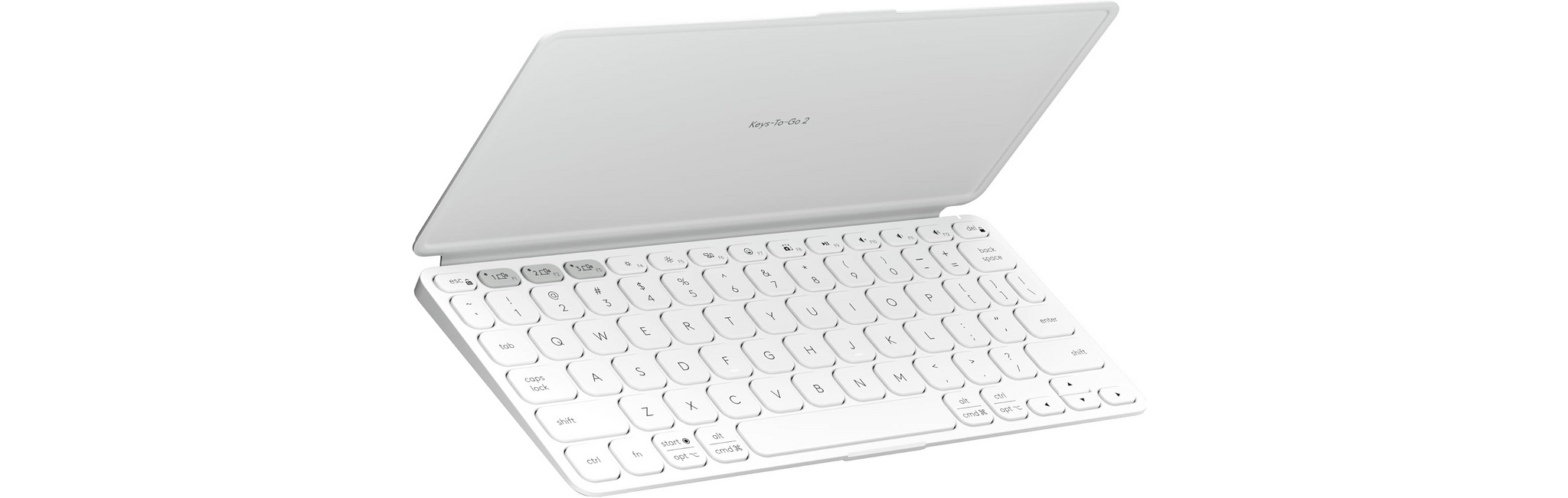

Logitech: MX Keys S, Keys-to-Go 2, and POP Mouse

Logitech has gone all-in for Amazon’s big sales event, too. There are a bunch of keyboards, mice, and other accessories on sale, but I’d focus on these three:

The MX Keys S for Mac was my go-to keyboard for years. I’ve switched to Apple’s Magic Keyboard more recently, but if you’re looking for an alternative low-profile keyboard made for the Mac, this is a great pick.

Keys-to-Go 2 is the keyboard I throw in my bag when I need to connect a keyboard to a handheld gaming device, or when I want a keyboard “just in case” but don’t want to carry my iPad’s Magic Keyboard case. The replaceable watch battery it came with is still going strong, and the integrated cover keeps it from getting damaged. It’s not the most ergonomic keyboard ever designed, but it’s perfect for lightweight travel.

I got a POP Mouse for the same reason I have Logitech’s Keys-to-Go 2 keyboard. It’s a good lightweight on-the-go mouse I can connect to multiple devices. I love that it comes in bright colors, too.

For prices, visit the MacStories Amazon storefront.