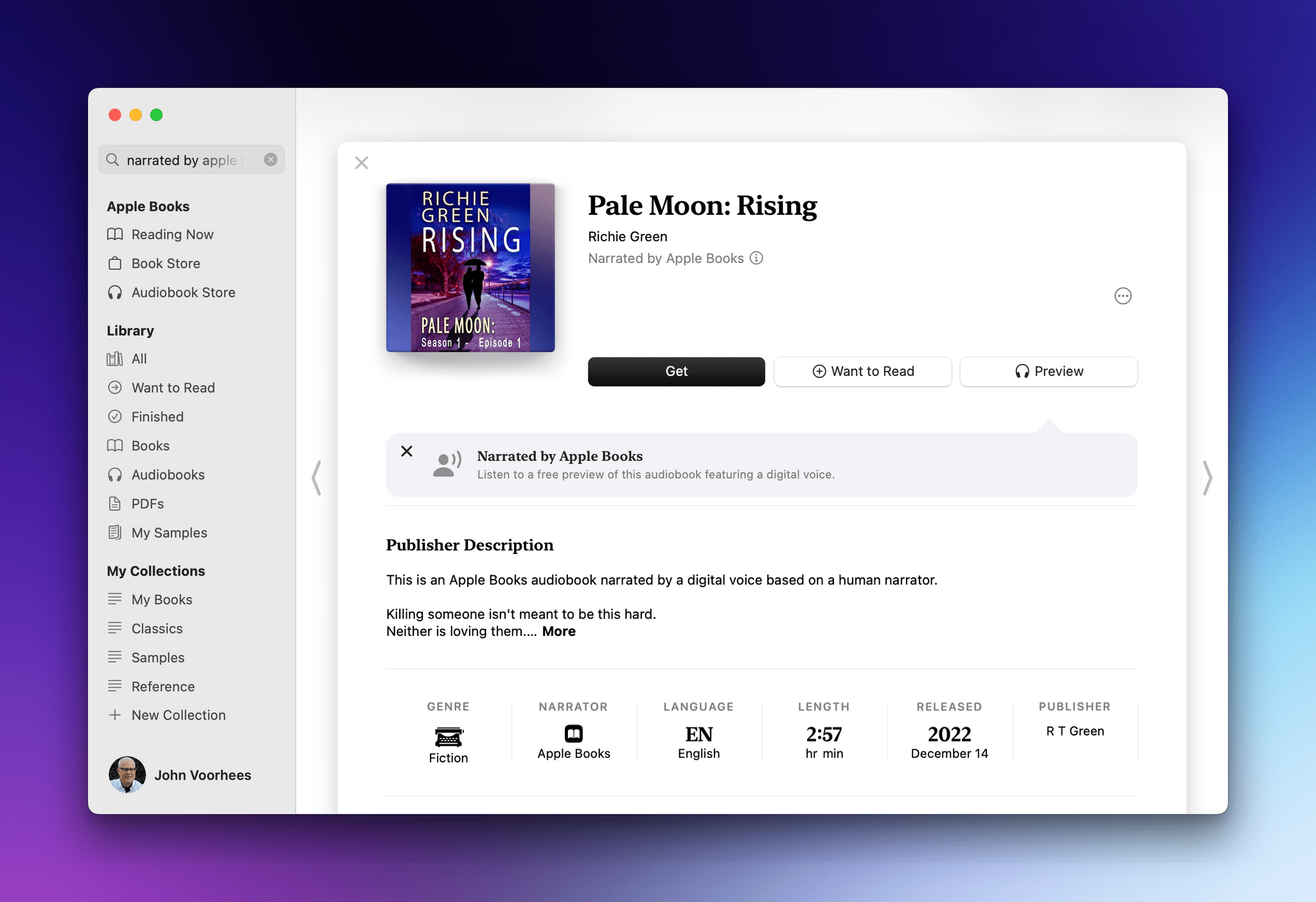

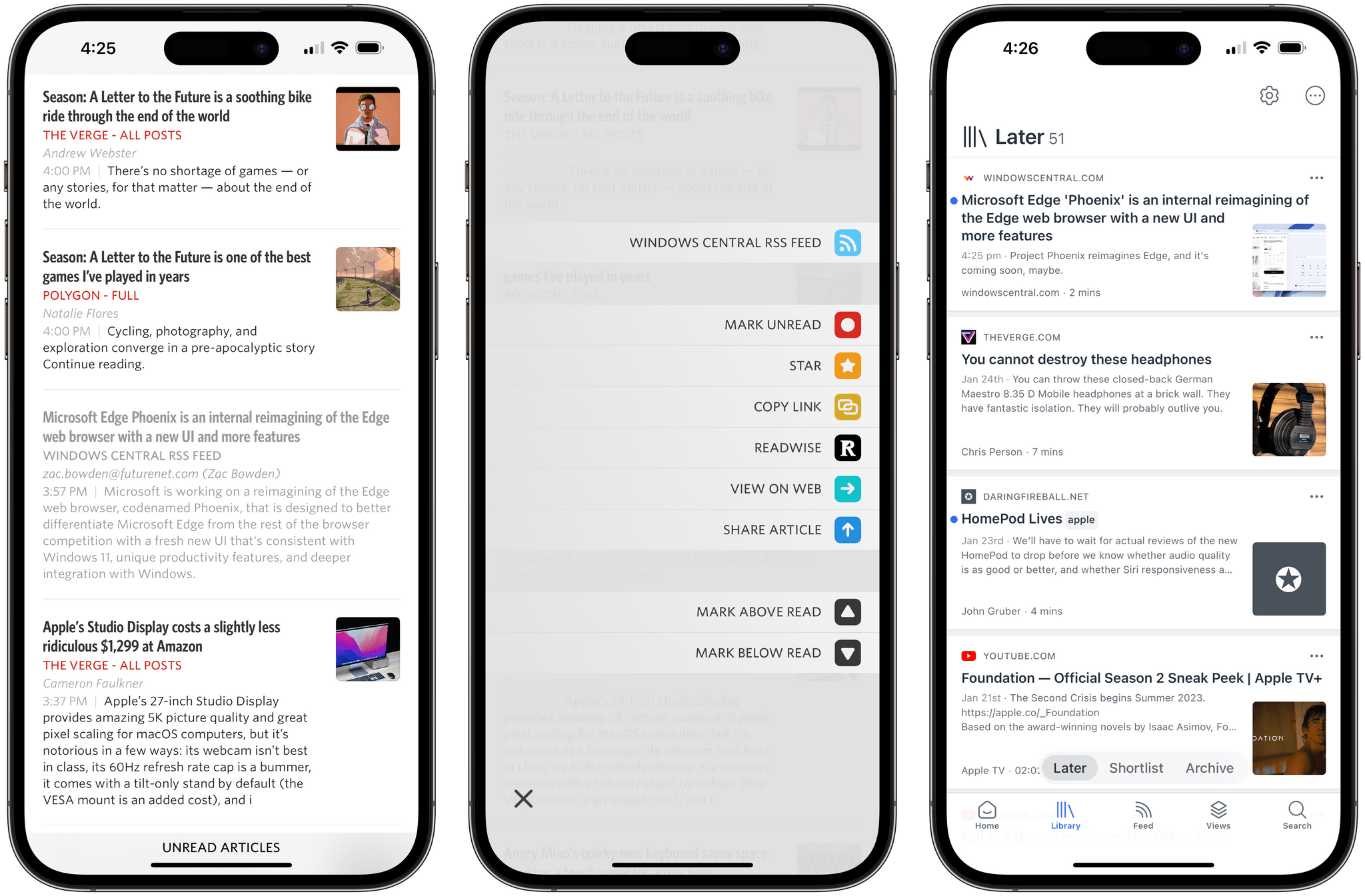

Unread, the elegant RSS reader by Golden Hill Software that we’ve covered before on MacStories, received its 3.3 update today, and it’s an interesting one I’ve been playing around with for the past week. There are two features I want to mention.

The first one is the ability to set up an article action to instantly send a headline from the article list in the app to Readwise Reader. As I explained on AppStories, I decided to go all-in with Reader as my read-later app (at least for now), and this Unread integration makes it incredibly easy to save articles for later. Sure, the Readwise Reader extension in the share sheet is one of the best ones I’ve seen for a read-later app (you can triage and tag articles directly from the share sheet), but if you’re in a hurry and checking out headlines on your phone, the one-tap custom action in Unread is phenomenal. To start using it, you need to be an Unread subscriber and paste in your Readwise API token.

The second feature is the ability to save any webpage from Safari as an article in Unread, even if you’re not subscribed to that website’s RSS feed. Essentially, this is a way to turn Unread into a quasi-read-later tool: the app’s parser will extract text and images from the webpage, which will then be saved as a ‘Saved Article’ in Unread Cloud, Local feeds, or NewsBlur, or as a ‘Page’ in Feedbin.

If you’re a new Readwise Reader user, I recommend checking out Unread 3.3, which is available on the App Store for iPhone and iPad.
