A week ago, an article in the Harvard Business Review by Kate Niederhoffer, Gabriella Rosen Kellerman, Angela Lee, Alex Liebscher, Kristina Rapuano, and Jeffrey T. Hancock got a lot of press for coining the term “workslop” to define a particular type of generative AI found in the workplace. The authors came up with the term as part of a quest to solve...

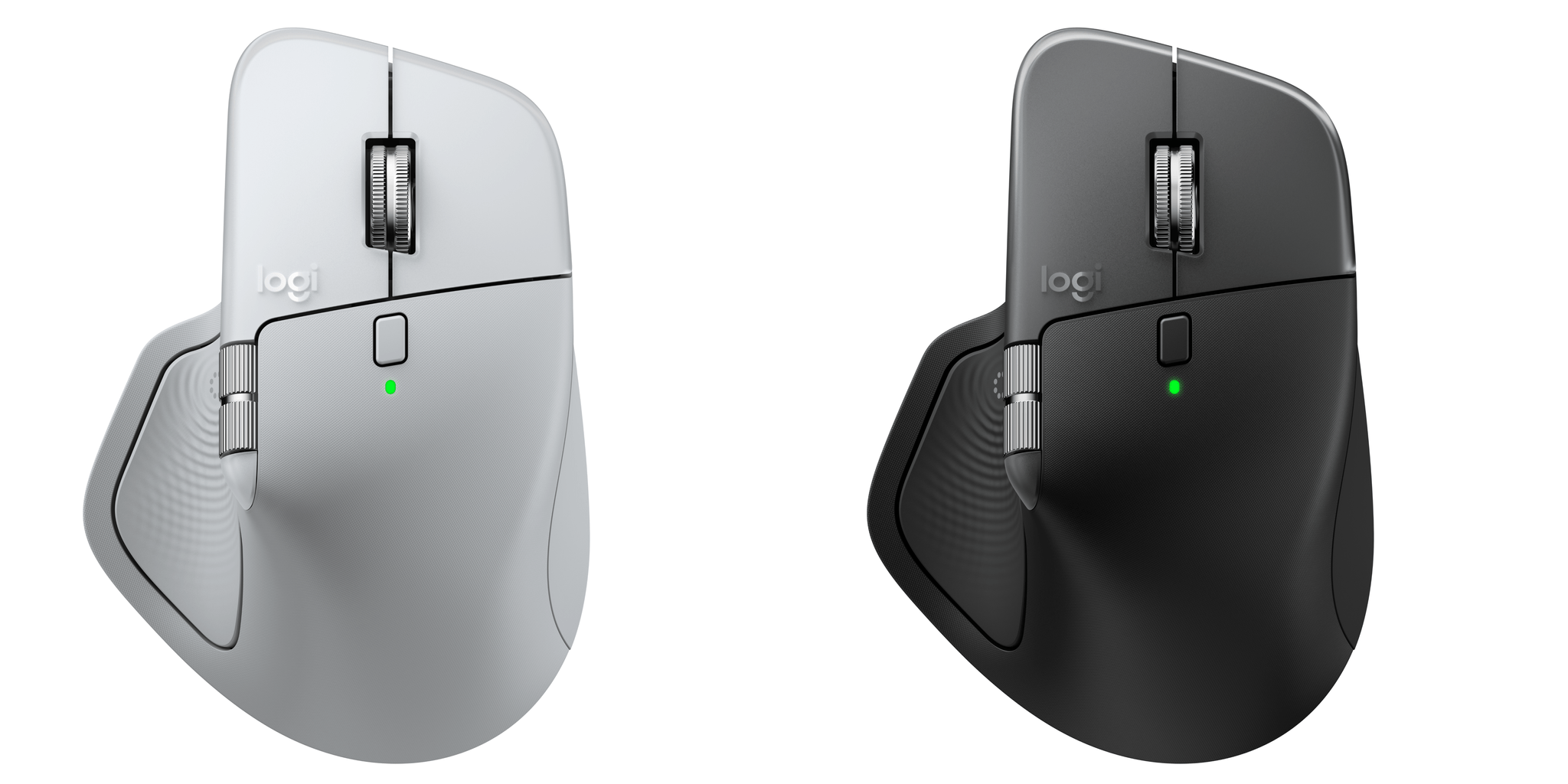

First Look: Logitech’s MX Master 4 Adds Haptics, Actions Ring, and a USB-C Bolt Receiver

Today, Logitech introduced an updated version of its MX Master series mouse dubbed the MX Master 4. It’s a good upgrade, but the changes are largely incremental; while I like it a lot, the MX Master 4 won’t be for everyone. Logitech sent me the MX Master 4 to try, and I’ve been using it for the past couple of weeks, so I thought I would share what the experience has been like so far.

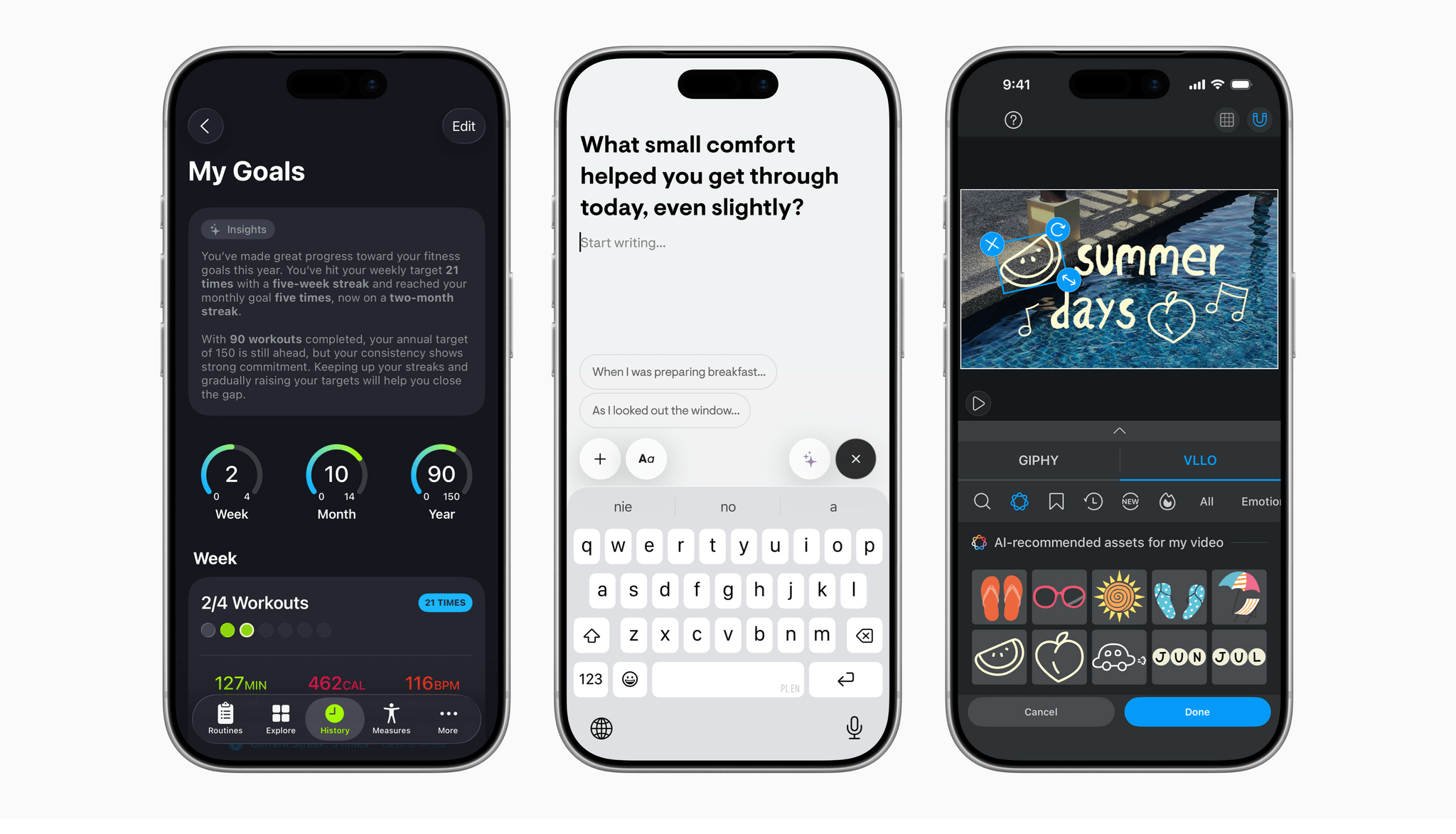

Apple Highlights Apps Using Its Foundation Models Framework

Earlier today, Apple published a press release highlighting some of the apps that are taking advantage of its new Foundation Models framework. As you’d expect, indie developers and small teams are well-represented among the apps promoted in the press release. Among them are:

It’s a group of apps that does a great job of demonstrating the breadth of creativity among developers who can leverage these privacy-first, on-device models to enhance their users’ experiences.

Apple’s happy to see developers adopting the new framework, too. Susan Prescott, Apple’s vice president of Worldwide Developer Relations, said:

We’re excited to see developers around the world already bringing privacy-protected intelligence features into their apps. The in-app experiences they’re creating are expansive and creative, showing just how much opportunity the Foundation Models framework opens up. From generating journaling prompts that will spark creativity in Stoic, to conversational explanations of scientific terms in CellWalk, it’s incredible to see the powerful new capabilities that are already enhancing the apps people use every day.

Judging by what we’ve seen from developers here at MacStories, these examples are just the tip of the iceberg. I expect you’ll see more and more of your favorite apps adding features that take advantage of the Apple Foundation Models in the coming months.

AirPods Pro 3, iPhone Air, and a Cable Quest

This week, Federico and John follow up after a week with new Apple hardware and dig into watchOS and visionOS 26.

On AppStories+, John is mixing up his link and data organization systems - again.

We deliver AppStories+ to subscribers with bonus content, ad-free, and at a high bitrate early every week.

To learn more about an AppStories+ subscription, visit our Plans page, or read the AppStories+ FAQ.

AppStories Episode 454 - AirPods Pro 3, iPhone Air, and a Cable Quest

This episode is sponsored by:

- Claude – Get 50% off Claude Pro, including access to Claude Code.

Interesting Links

Apple has documented all the changes that have arrived in Safari 26.0, which include anchor positioning and scroll-driven animations for CSS, HDR image support, the new HTML

Moving From the Apple Watch Ultra 1 to the 3

It’s been three years since I bought my Apple Watch Ultra, so I was excited to try the Ultra 3 this fall. I updated my watch primarily because its battery health was at 84%. That’s not terrible, but charging had become a daily chore, and if I recorded multiple walks or other exercises, I occasionally...

App Debuts

Working Copy, the powerful Git client for iPhone and iPad that I’ve been using for a long time to share and edit drafts of all my articles, received a major update this week that brings full compatibility with iOS and iPadOS 26. The app now sports a fresh Liquid Glass design language...

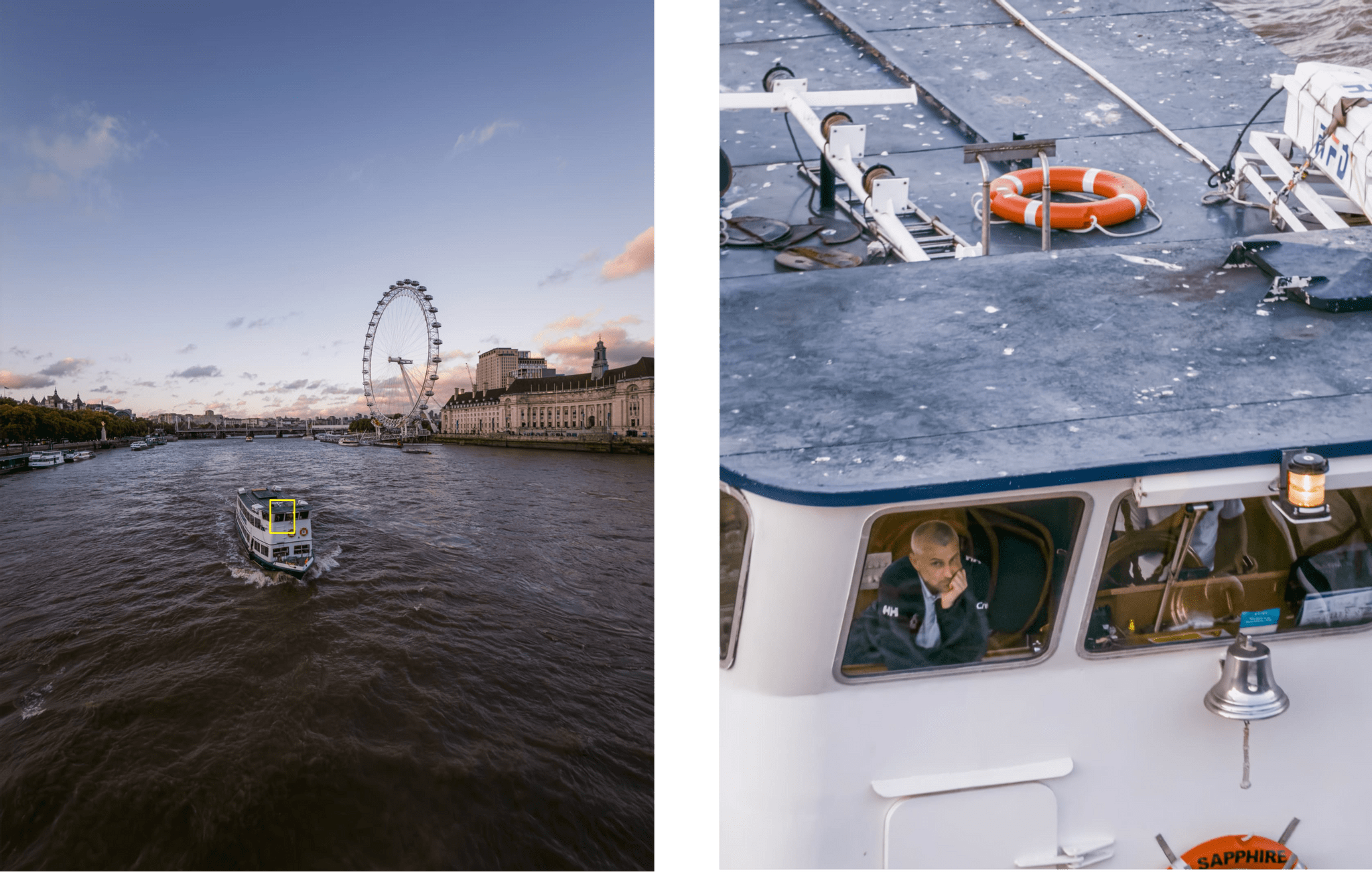

Halide and Kino Developers Review the iPhone 17 Pro’s Cameras→

Earlier this week, I shared my early impressions of the iPhone 17 Pro Max and included a few galleries of photos I’d taken at each of the model’s standard zoom levels. I was impressed by the results, which made me all the more excited to learn more about how Apple pulled this off.

One of my favorite annual iPhone camera reviews is from the team at Lux, the makers of Halide and Kino. Their experience with the iPhone 17 Pro’s cameras was similar to mine, but with a lot of nerdy camera detail that I love. The overall conclusion of their testing in New York, Iceland, and London is that:

This is, without a doubt, a great back camera system. With all cameras at 48MP, your creative choices are tremendous. I find Apple’s quip of it being ‘like having eight lenses in your pocket’ a bit much, but it does genuinely feel like having at least 5 or 6: Macro, 0.5×, 1×, 2×, 4× and 8×.

The story covers every camera and each zoom distance. Of the 2x, Lux found that:

Shooting at 2× on iPhone 17 Pro did produce noticeably better shots; I believe this can be chalked up to significantly better processing for these ‘crop shots’. Many people think Apple is dishonest in calling this an ‘optical quality’ zoom, but it’s certainly not a regular digital zoom either. I am very content with it, and I was a serious doubter when it was introduced.

Lux’s highest praise was probably for the 8x zoom:

The overall experience of shooting a lens this long should not be this good. I’ve not seen it mentioned in reviews, but the matter of keeping a 200mm lens somehow steady and not an exercise in tremendous frustration is astonishing. Apple is using both its very best hardware stabilization on this camera and software stabilization, as seen in features like Action Mode.

There are loads of beautiful photos in the post and a lot more detail than I’ve quoted here. Be sure to read through the entire post because what Apple is doing with camera hardware and software is really quite remarkable.

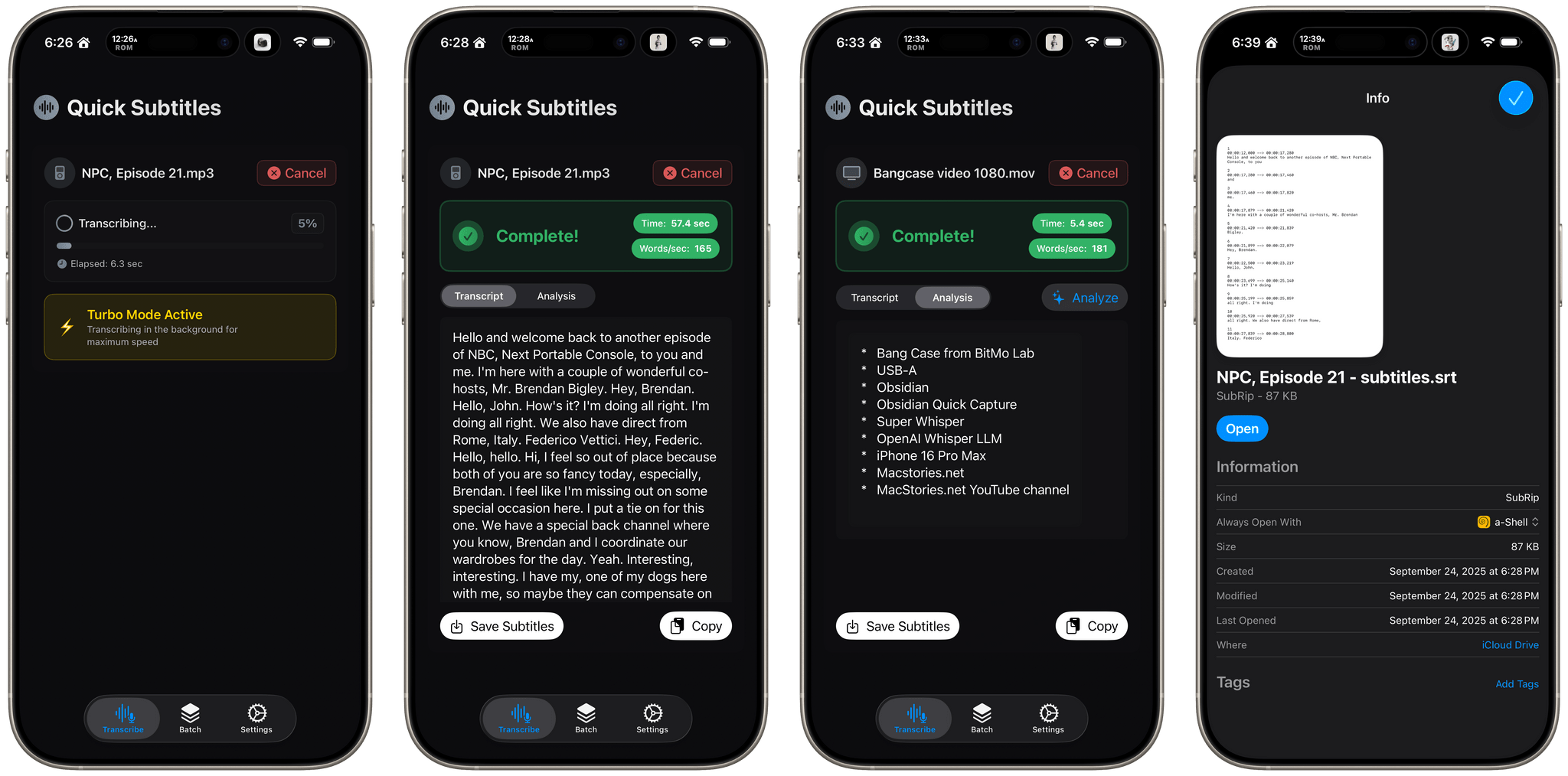

Quick Subtitles Shows Off the A19 Pro’s Remarkable Transcription Speed→

Matt Birchler makes a great utility for the iPhone and iPad called Quick Subtitles that generates transcripts from a wide variety of audio and video files, something I do a lot. Sometimes it’s for adding subtitles to a podcast’s YouTube video and other times, I just want to recall a bit of information from a long video without scrubbing through it. In either case, I want the process to be fast.

As Matt prepared Quick Subtitles for release, he tested it on a MacBook Pro with an M4 Pro chip, an iPhone 17 Pro with the new A19 Pro, an iPhone 16 Pro Max with the A18 Pro, and an iPhone 16e with the A18. The results were remarkable, with the iPhone 17 Pro nearly matching the performance of Matt’s M4 Pro MacBook Pro and coming in 60% faster than the A18 Pro.

I got a preview of this sort of performance over the summer when I ran an episode of NPC: Next Portable Console through Yap, an open-source project my son Finn built to test Apple’s Speech framework, which Quick Subtitles also uses. The difference is that with the release of the speedy A19 Pro, the kind of performance I was seeing in June on a MacBook Pro is now essentially possible on an iPhone. That means you don’t have to sacrifice speed for this sort of task if all you have with you is an iPhone 17 Pro, which I love.

If you produce podcasts or video, or simply want transcripts that you can analyze with AI, check out Quick Subtitles. In addition to generating timestamped SRT files ready for YouTube and other video projects, the app can batch-transcribe files, and use a Google Gemini or OpenAI API key that you supply to analyze the transcripts it generates. Transcription happens on-device and your API keys don’t leave your device either, which makes it more private than transcription apps that rely on cloud servers.
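If you’ve never looked inside an SRT file, the format is simple: a running index, a start and end timestamp written as `HH:MM:SS,mmm`, and the caption text, with a blank line between entries. Here’s a minimal Python sketch of how timed transcript segments could be turned into that format. To be clear, this isn’t how Quick Subtitles does it; the function names and the `(start, end, text)` segment shape are hypothetical and only meant to illustrate the file layout.

```python
def srt_timestamp(seconds):
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments):
    """Build SRT text from (start_seconds, end_seconds, text) tuples.

    The tuple shape is an assumption made for this example only.
    """
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        # Each entry: index line, timing line, caption text
        timing = f"{srt_timestamp(start)} --> {srt_timestamp(end)}"
        blocks.append(f"{index}\n{timing}\n{text}\n")
    # Entries are separated by a blank line
    return "\n".join(blocks)


if __name__ == "__main__":
    print(to_srt([(0, 1.5, "Hello"), (1.5, 3, "World")]))
```

Rounding to whole milliseconds before splitting into hours, minutes, and seconds keeps the fields consistent with one another, which matters for long recordings like podcast episodes.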

Quick Subtitles is available on the App Store as a free download and comes with 10 free transcriptions. A one-time In-App Purchase of $19.99 unlocks unlimited transcription and batch processing. The In-App Purchase is currently stuck in app review but should be available soon, and I’ll be grabbing it as soon as it is.
