Apple Intelligence

It’s fair to say that Apple’s AI strategy hasn’t exactly panned out so far. The company would like to remind us that they’ve been shipping machine learning features – only later retrofitted as “AI” – for years, and that they’ve been doing so across multiple apps in their operating systems rather than via chatbots. All of that is true. Apple also outlined an interesting vision for LLM-powered, Siri-centric functionalities based on users’ context and apps, and that felt like a genuine innovation in the AI space that – theoretically – only Apple could deliver at scale thanks to their vibrant third-party app ecosystem. But that Siri never shipped, and it’s still unclear when – and in what form – it will.

The proof is in the pudding, as they say, and the reality is that – like it or not! – people love the current generation of chatbots. If ChatGPT is on track to reach 700 million weekly users, who are we, professional Apple commentators, to say that, no, actually, this current wave of AI is a fad, but Apple's AI will be so different, and so much better, when it eventually ships?

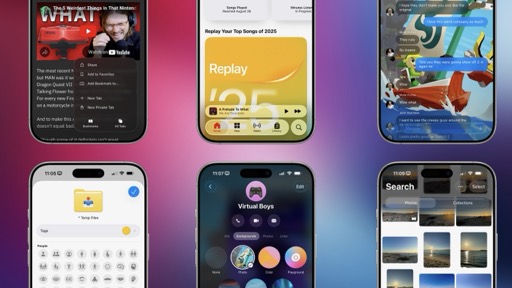

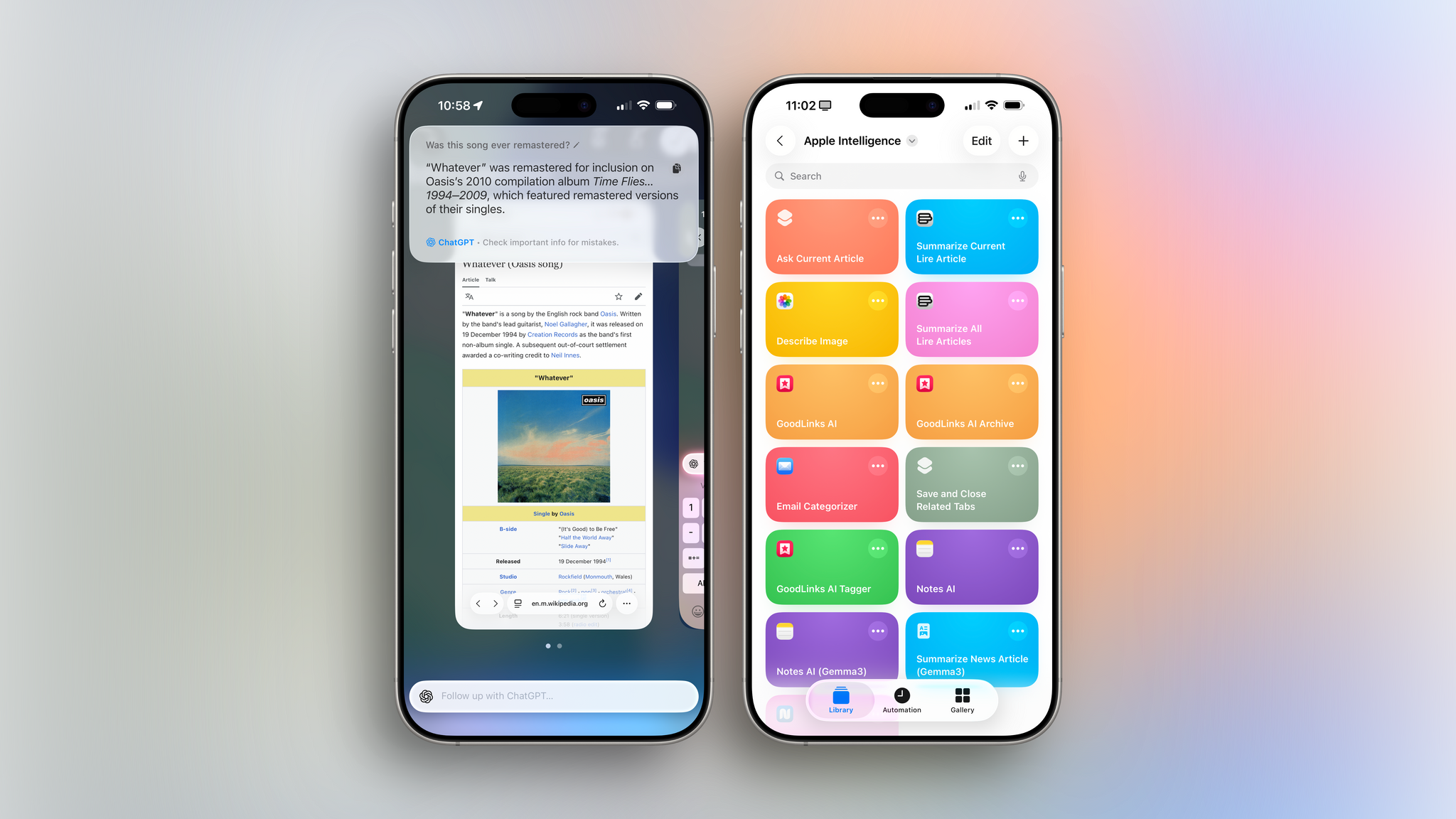

The way I see it, Apple Intelligence is going to get traditional LLM features with a chatbot in the future because it has to. And I think there's a good chance I'll be writing about it in next year's review. For now, what we have in Apple Intelligence is a ragtag assortment of text and vision features – some of them powered by the company's (not-so-intelligent) Foundation models, some of them reliant on ChatGPT – that are being integrated across iOS and iPadOS primarily via a new Foundation Models framework, Visual Intelligence, and Shortcuts. Apple has to enact this strategy now: without a core chatbot product, they need to show that they can pull off something unique by cross-pollinating apps and system services…even though that isn't so unique anymore following Google I/O and the launch of the Pixel 10 series.

There aren’t any groundbreaking changes in Apple Intelligence this year, but there are some good additions that I’ve been using more than I expected. I’ve even come to rely on some Apple Intelligence features in my everyday workflow, which is not something I would have said last year.

Let’s take a look.

Visual Intelligence

In my opinion, the Camera Control button on modern iPhones is only good for two things:

- A single click to instantly open the camera from anywhere on iOS and

- A long-press to open Visual Intelligence.

I'm not a heavy Visual Intelligence user, but it has been useful for "simple" visual queries that I want ChatGPT to answer. For more complex visual analysis tasks, I still prefer the ChatGPT app, whose image analysis can rely on tool calling and OCR, but if I want to snap a picture and ask ChatGPT a quick question about it, Visual Intelligence is usually good enough.

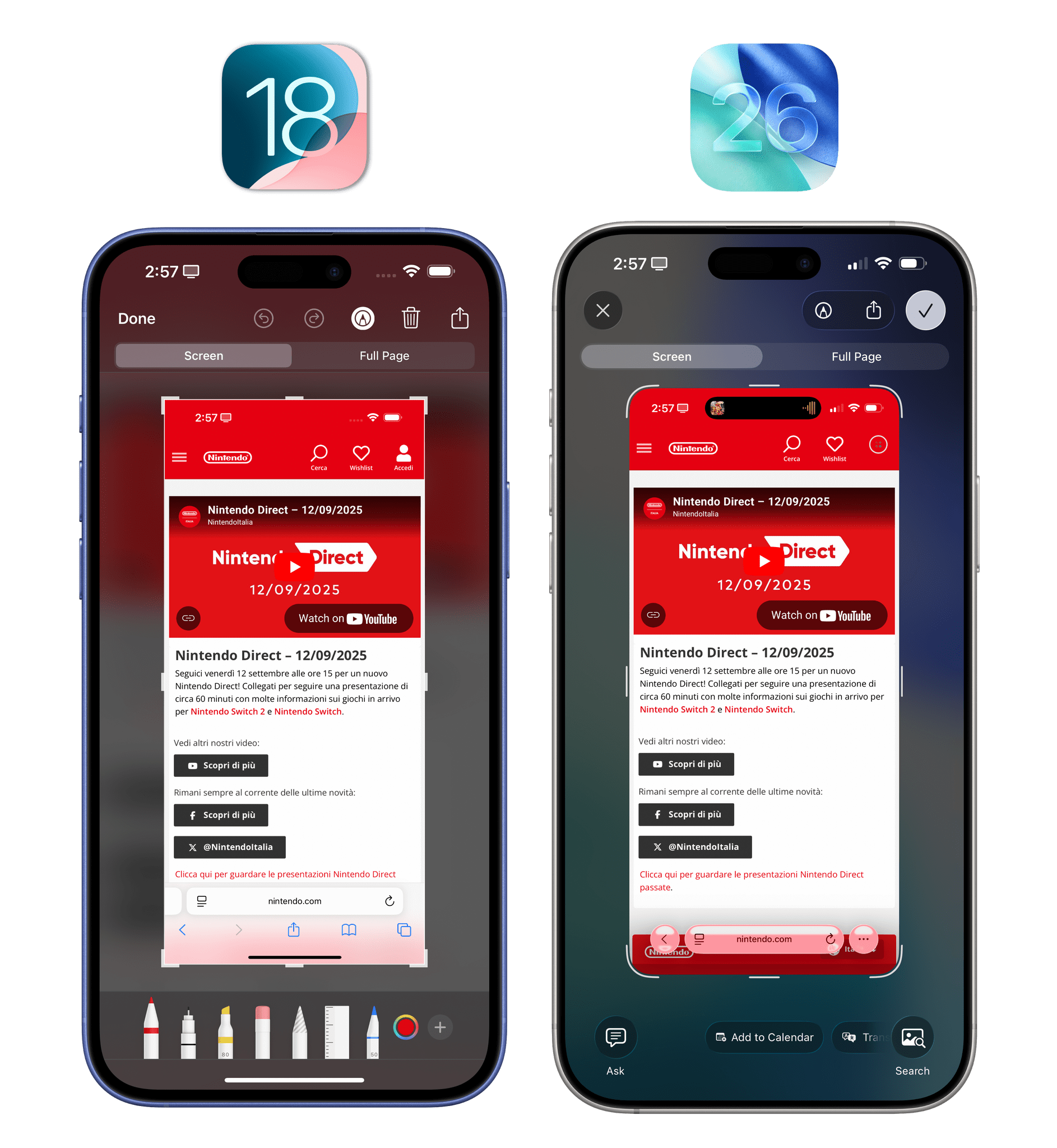

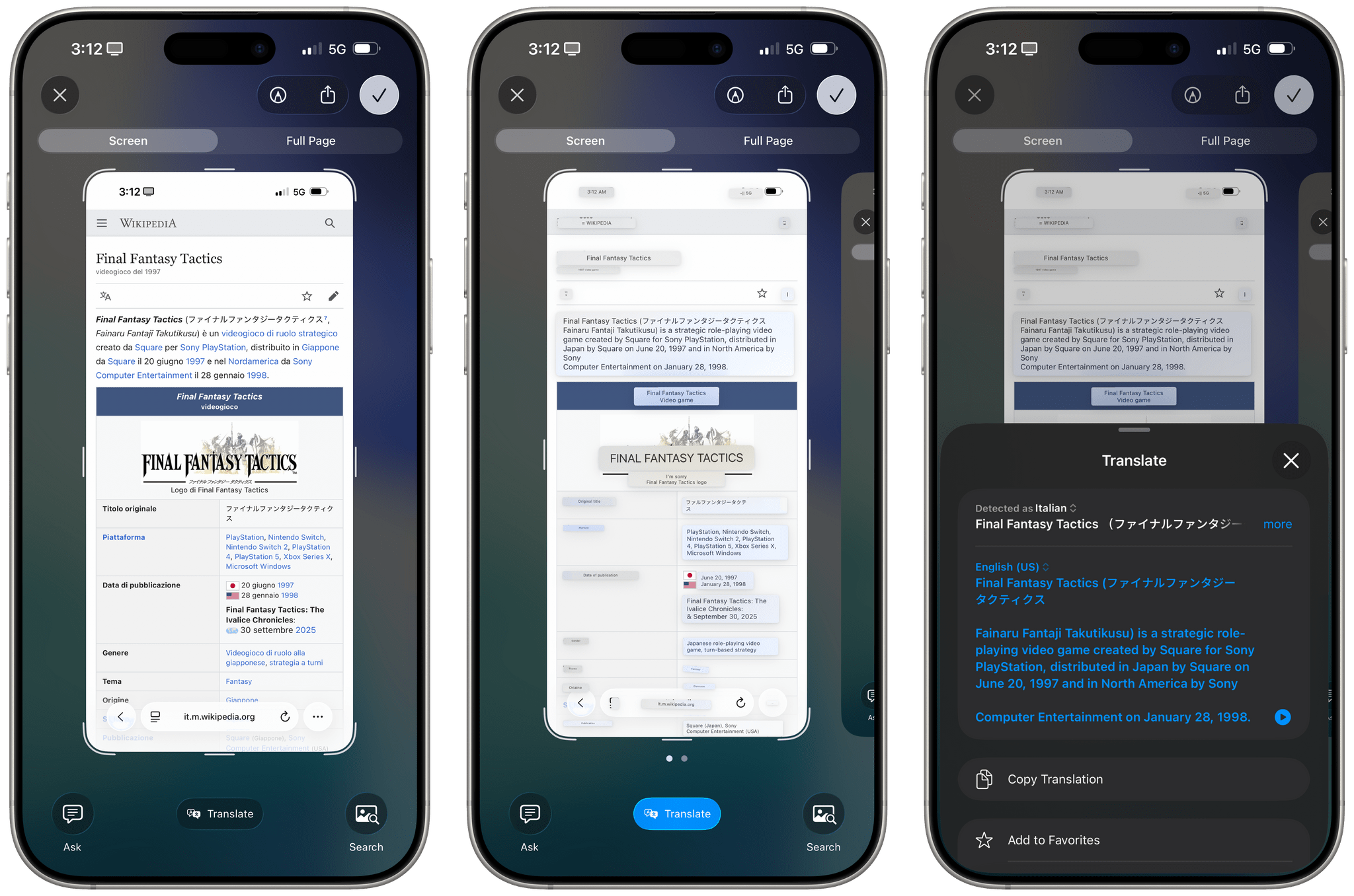

Apple realized there's an angle worth seeing through here, and they've improved Visual Intelligence in iOS 26 in some key ways. The first notable change is that screenshots have been integrated with Visual Intelligence, so you can now ask questions about what's on your screen rather than being limited to taking pictures. In the updated full-screen screenshot UI, you'll now see two buttons at the bottom: one for asking a question and one for searching for similar results on Google or other apps. (More on this below.) Markup tools have moved from the bottom of the screen to a toolbar at the top; in their place at the bottom, you'll now see a message telling you that you can highlight parts of a screenshot to search.

If you don’t like this default full-screen screenshot flow, you can disable it in Settings ⇾ General ⇾ Screen Capture, where you’ll also find a toggle to disable the automatic Visual Look Up that happens under the hood to identify objects, places, and other data in your screenshots.

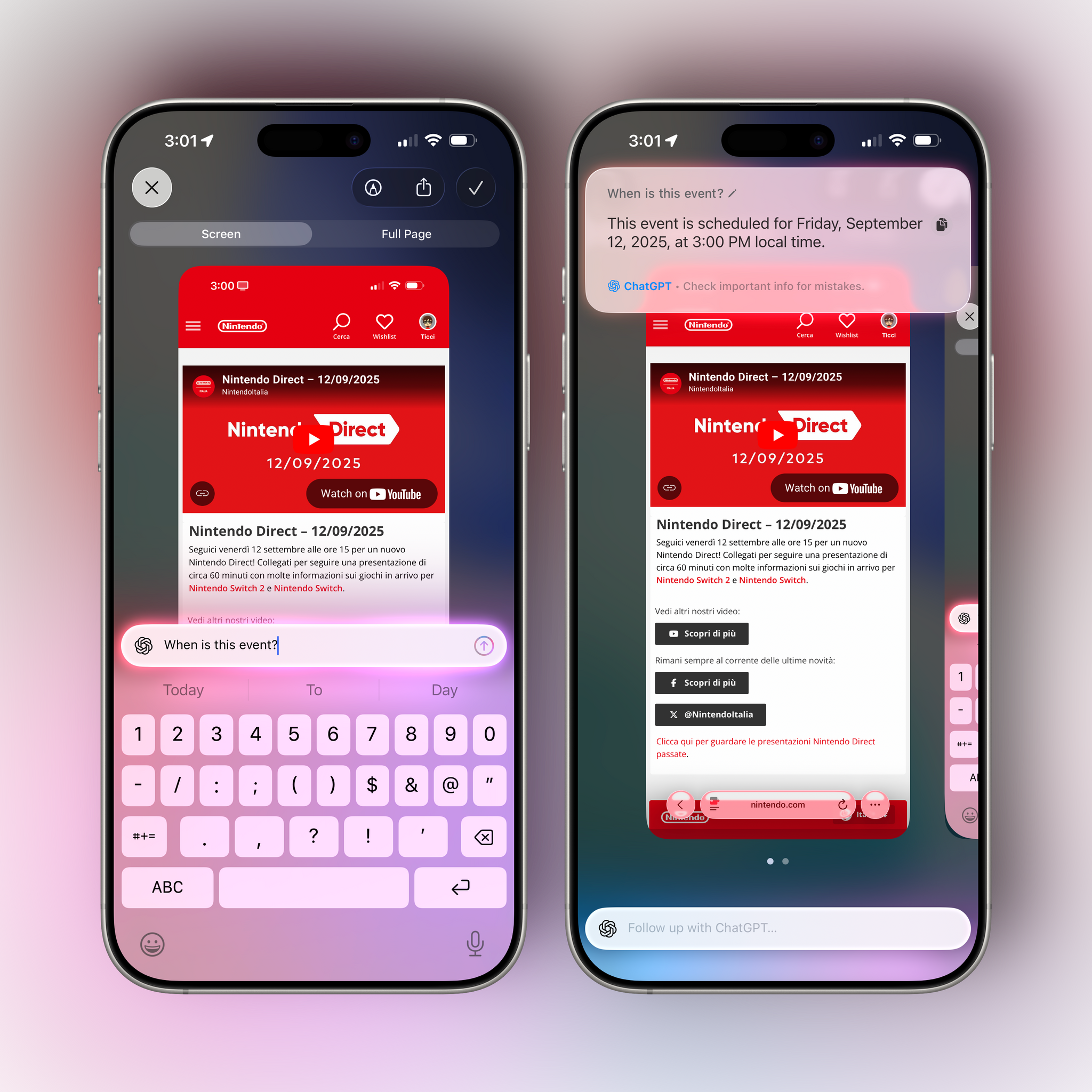

Integrating Visual Intelligence and Look Up with screenshots makes sense for Apple. Some of the most impressive consumer applications of LLMs in the past year have been the real-time screen- and video-sharing capabilities of Gemini and ChatGPT. Apple needs to show they're in the picture too, but at the same time, they really aren't, since you're not sharing your screen in real time with an LLM in iOS 26; you're just sending a screenshot for analysis. Still, I've been using this feature for the past three months, and it is nice to be able to ask ChatGPT a question about what's on screen without having to open the ChatGPT app and manually upload an image.

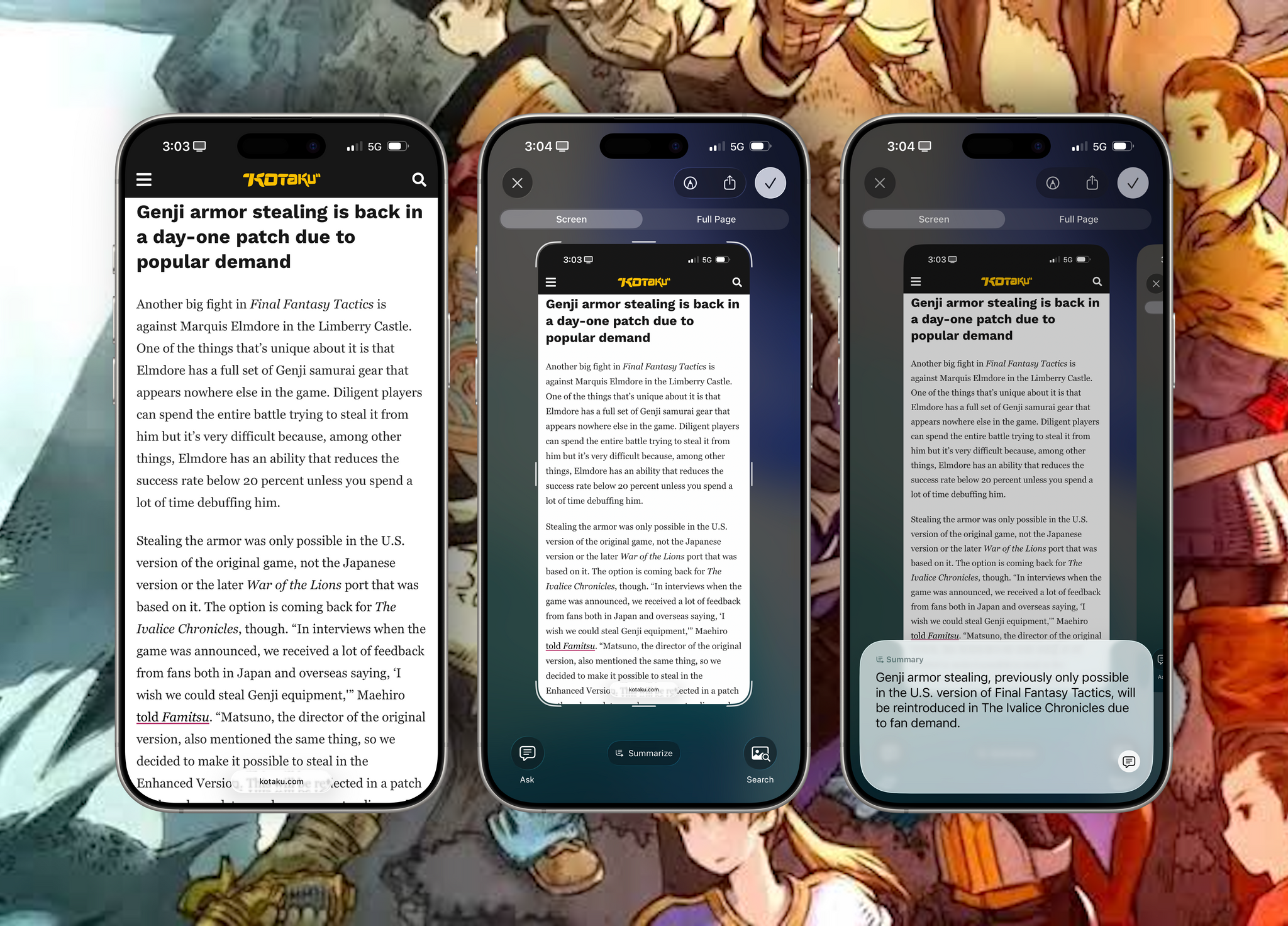

One of my favorite tricks for Visual Intelligence in screenshots is asking the AI to extract bits of text that certain websites don't let me select and copy in Safari. Visual Intelligence can now also detect when a lot of text is shown on screen and will offer a handy 'Summarize' shortcut at the bottom, which I've occasionally used for summarizing PDFs I get from my condo's building manager or long passages on webpages. It's pretty good for that. In an interesting detail, Visual Intelligence knows whether it's appropriate to offer a summarization button because Apple's on-device grounding model classifies each image as soon as it's fed to Visual Intelligence.

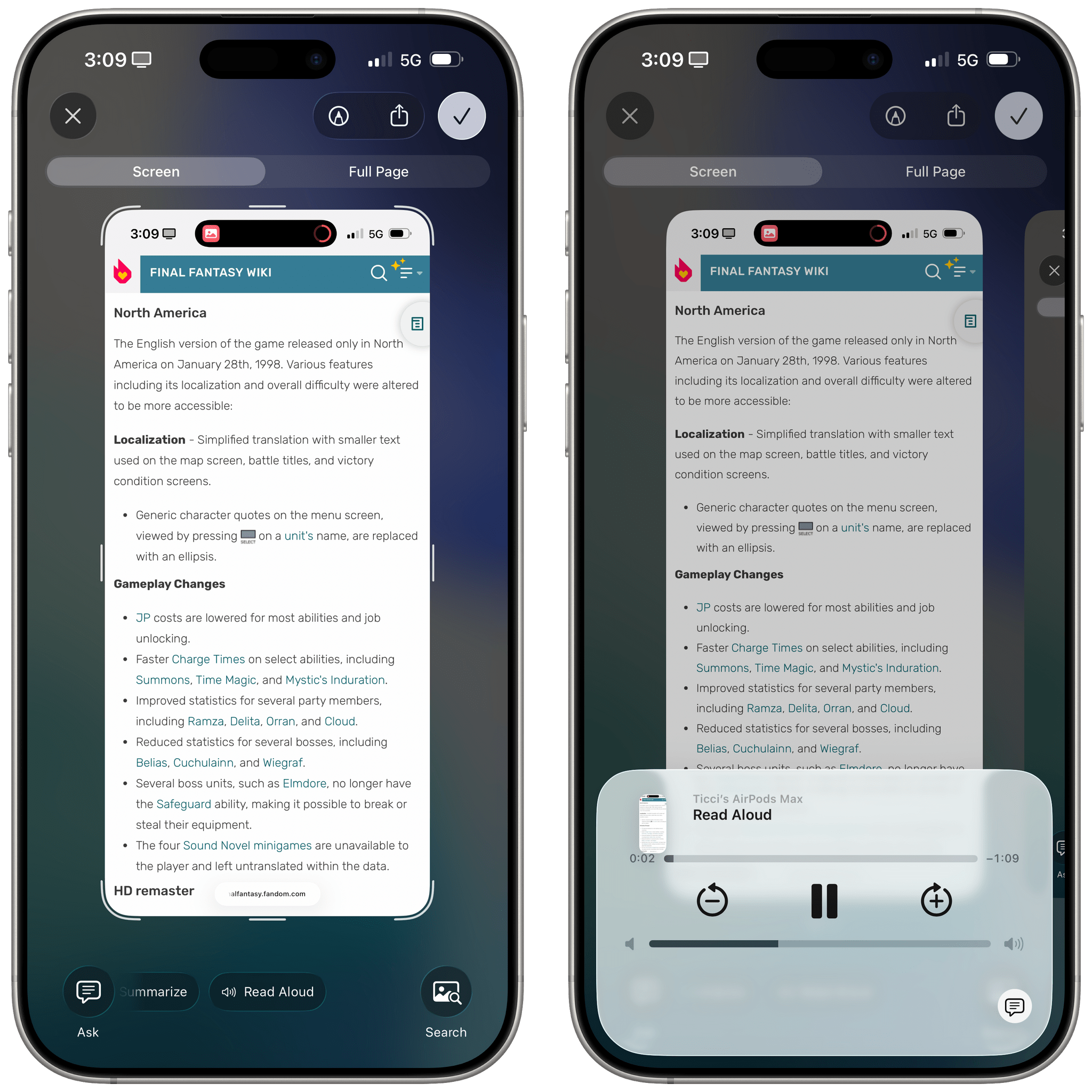

When text is shown, Visual Intelligence will also display a 'Read Aloud' shortcut for text-to-speech, and that's been quite bad in my experience. The voice Apple is using doesn't seem to understand line breaks in paragraphs, and it pauses for a second whenever a word wraps to a new line in Safari. It's a far cry from the latest state-of-the-art text-to-speech models from the rest of the industry.

There’s also integration with Apple’s Translate app/system framework, which will translate whatever is shown in the screenshot to your default language. You can tap on any of the translated blocks to load a modal Translate UI that lets you change languages, copy translations, and more.

Asking ChatGPT about on-screen images has been hit or miss, too. I thought it'd be fun to use Visual Intelligence to take screenshots of people's Home Screens on social media and ask the AI about app icons, but ChatGPT with Apple Intelligence frequently hallucinated results. Part of the problem, I guess, is that the integration I tested was still based on GPT-4o rather than GPT-5, but also that Apple Intelligence doesn't support any reasoning models for superior image analysis (even if you're logged in with a ChatGPT Plus, Business, or Pro account). There's also the problem of persistent chats: I like to revisit my conversations with ChatGPT over time, but using it in Apple Intelligence often feels like shouting into a void. Once again, there is no central Apple Intelligence LLM app on iOS, and it's not clear when or if ChatGPT queries from Apple Intelligence will show up in the ChatGPT app or on its website. iOS 26 doesn't improve that. So this screenshot integration is fun and useful if you're in a pinch, but it's no replacement for the traditional, iterative, more powerful LLM chatbot experience.

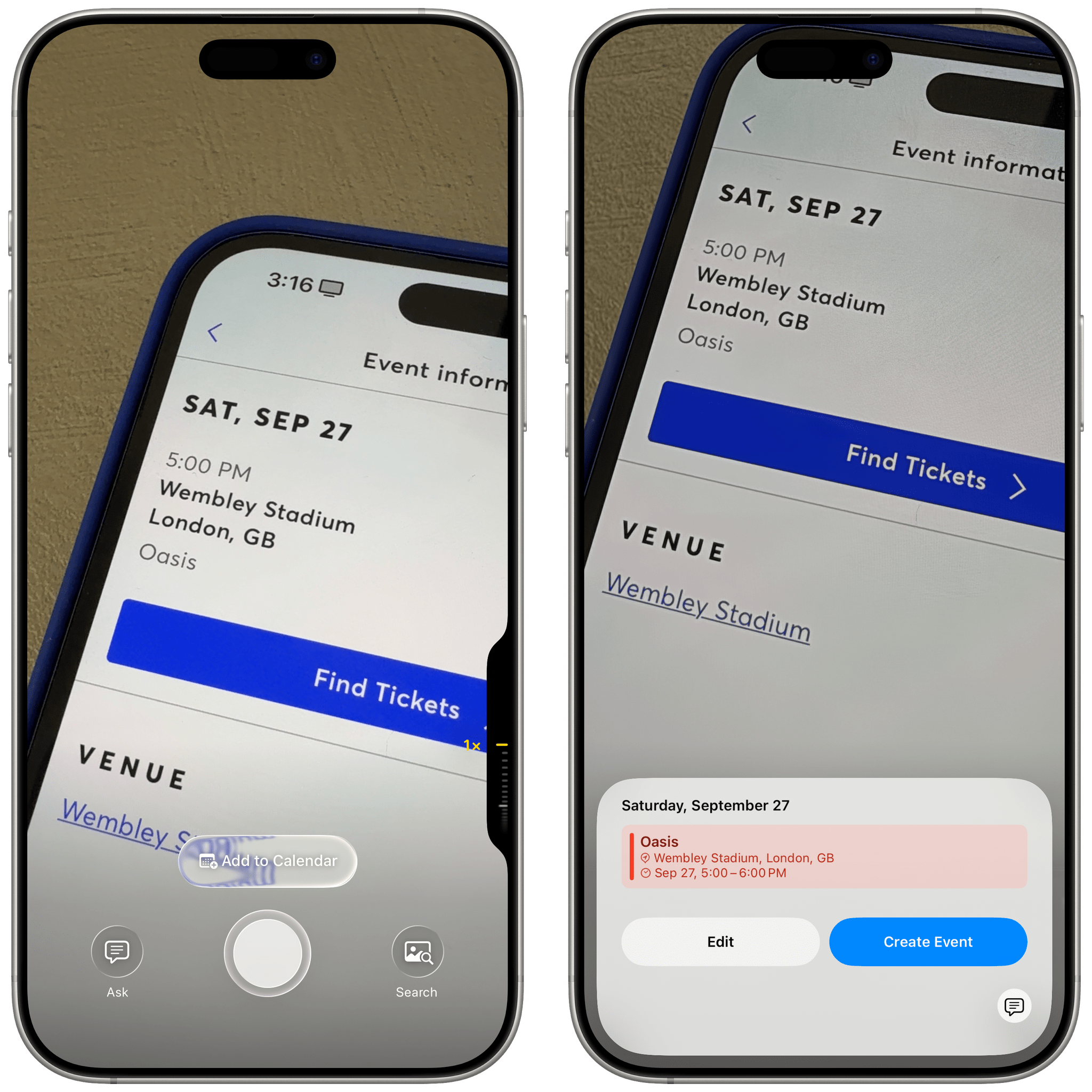

All these text-related additions to Visual Intelligence make a lot of sense in the screenshot environment, but they also work in Visual Intelligence's classic camera mode. Since Apple is so far behind in AI, these features don't work in real time in the viewfinder the way Gemini or ChatGPT do; you have to freeze the frame first, then use Visual Intelligence. One of the new integrations that I think works well in camera mode is data detectors for calendar events: take a picture of a flyer or any other sign with a date and time on it, and Visual Intelligence will extract the relevant details and offer to create a calendar event for it.

Visual Intelligence can recognize dates in real time, then freeze the frame to let you create a calendar event.

Now, Apple has been doing this sort of thing with data detectors for ages, and we're not really talking about AI in the LLM sense here, but the company can use all the wins they can get these days. So, of course, data detectors for dates recognized in an image get lumped into the broader AI discourse, too. That's alright, since the feature works well and other AI assistants (except for Perplexity) haven't chosen to integrate with EventKit, but it's amusing when you remember that Apple has been doing this since iOS 10 and improved it in iOS 15, well before AI and LLMs were common topics. Such is the situation Apple is in right now.
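To illustrate why this kind of date recognition doesn't need an LLM at all, here's a toy sketch in Python. It assumes OCR has already turned the flyer into plain text, then uses a regular expression to find a single date format and turns it into an event draft. Everything here (`detect_event`, the one supported format, the one-hour default duration, treating the first line as the title) is a hypothetical illustration of mine, not how Apple's data detectors, which handle many formats, languages, and relative dates, are actually implemented.

```python
import re
from datetime import datetime, timedelta

# Hypothetical detector for a single format, e.g. "September 20, 2025 at 7:30 PM".
# Real data detectors recognize many more formats, languages, and relative dates.
DATE_PATTERN = re.compile(
    r"(?P<month>January|February|March|April|May|June|July|August|"
    r"September|October|November|December)\s+(?P<day>\d{1,2}),\s+"
    r"(?P<year>\d{4})\s+at\s+(?P<time>\d{1,2}:\d{2}\s*[AP]M)",
    re.IGNORECASE,
)


def detect_event(text, duration=timedelta(hours=1)):
    """Return a draft event for the first matching date in OCR'd text, or None."""
    match = DATE_PATTERN.search(text)
    if match is None:
        return None
    # Normalize "7:30 pm" to "7:30PM" so strptime's %I:%M%p can parse it.
    time_part = match["time"].replace(" ", "").upper()
    start = datetime.strptime(
        f"{match['month']} {match['day']} {match['year']} {time_part}",
        "%B %d %Y %I:%M%p",
    )
    # Assumption: the first non-empty line of the flyer is the event's title.
    title = next(line.strip() for line in text.splitlines() if line.strip())
    return {"title": title, "start": start, "end": start + duration}


flyer = "Jazz Night in the Courtyard\nSeptember 20, 2025 at 7:30 PM\nFree entry"
event = detect_event(flyer)
print(event["title"], event["start"], event["end"])
```

On a real device, the resulting draft would then be handed to something like EventKit to actually save the event; the hard part Apple solves is robust detection across messy, real-world text, which a single regex clearly doesn't.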

I should note that Apple has done a pretty good job with the ability to highlight parts of an image to search in Visual Intelligence: just scrub with your finger over an area of an image, and you’ll be able to find results on Google based on that area of the image.

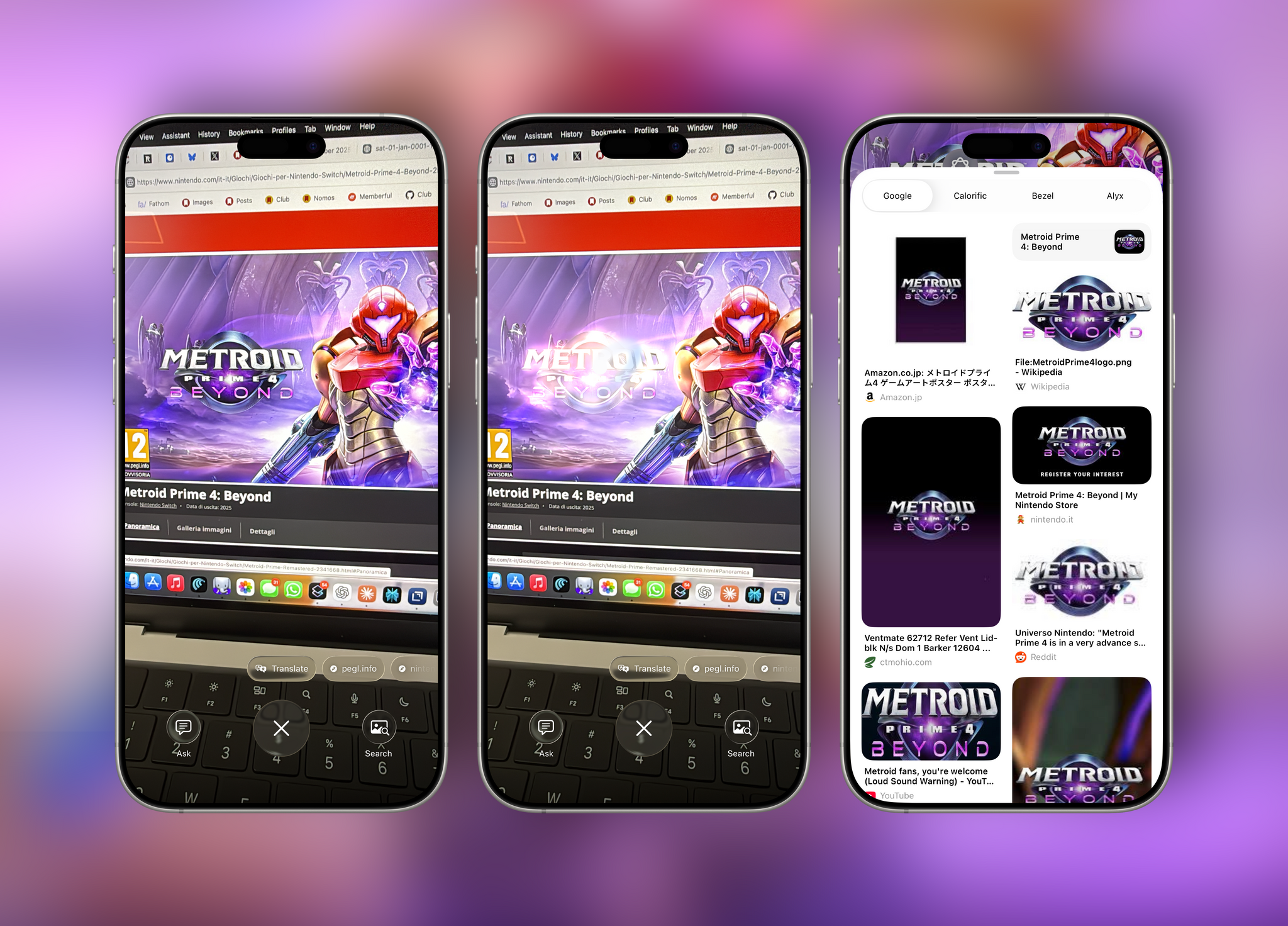

In this case, I highlighted the Metroid Prime logo, and Google presented related search results from Google Images.

The interaction here is reminiscent of Clean Up in Photos in that you can either scrub or draw a shape around an object to highlight it. When you do, Apple sends only the selected pixels (plus some of the surrounding ones to form a square) to an external provider rather than the entire image, which is a nice touch. I've been using this to highlight the aforementioned app icons from people's screenshots in Visual Intelligence, and Google results were always better than ChatGPT's responses.
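What the "plus some of the surrounding pixels to form a square" step might involve can be sketched in a few lines. This is a hypothetical Python illustration, not Apple's code: it assumes the scribble arrives as a list of (x, y) pixel coordinates, takes their bounding box, grows it into a padded square centered on the selection, and shifts the square back inside the image when it would spill over an edge. The function name and the 10% padding are my own assumptions.

```python
def square_crop_box(points, image_width, image_height, padding=0.1):
    """Return (left, top, right, bottom) of a square region around a selection.

    `points` is a hypothetical list of (x, y) pixel coordinates from a scribble.
    """
    if not points:
        raise ValueError("selection is empty")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    left, right = min(xs), max(xs)
    top, bottom = min(ys), max(ys)

    # The square's side is the longer edge of the bounding box, padded on both
    # sides, but never larger than the image itself.
    side = max(1, round(max(right - left, bottom - top) * (1 + 2 * padding)))
    side = min(side, image_width, image_height)

    # Center the square on the selection...
    cx = (left + right) / 2
    cy = (top + bottom) / 2
    new_left = round(cx - side / 2)
    new_top = round(cy - side / 2)

    # ...then shift it back inside the image if it spills over an edge.
    new_left = max(0, min(new_left, image_width - side))
    new_top = max(0, min(new_top, image_height - side))
    return new_left, new_top, new_left + side, new_top + side


box = square_crop_box([(100, 100), (200, 150), (150, 120)], image_width=1000, image_height=800)
print(box)  # (90, 65, 210, 185)
```

Shifting the square rather than shrinking it keeps the crop square even when the selection sits near an edge, at the cost of the selection no longer being perfectly centered.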

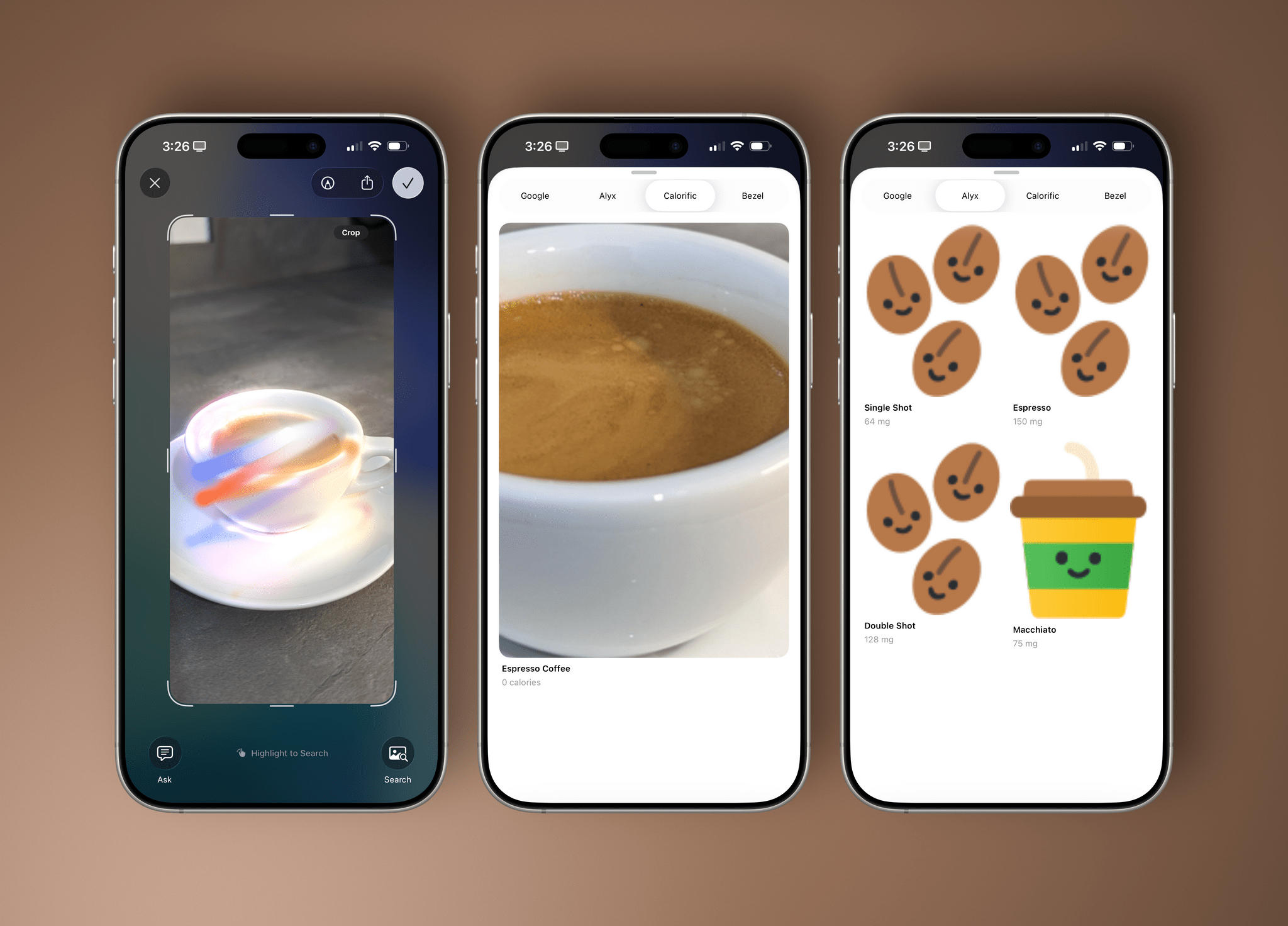

The final addition to Visual Intelligence I'll mention is support for third-party app extensions in Visual Look Up. This feature has also been hit or miss in my experience, and I've had more crashes than successful queries in recent betas of iOS 26, but it is interesting nonetheless. Third-party apps can now provide extensions that sit next to Google in Visual Look Up and allow you to pass an image to their respective apps. I've been able to test Alyx, a coffee-logging utility, and Calorific, a calorie-counting app, by taking pictures of beverages and food with Visual Intelligence and passing them to those apps for analysis. It worked, but the classification of each image depends on which AI those developers have integrated into their apps.

I’ve also been able to test apps that offer non-AI functionalities in Visual Look Up. Bezel, for instance, can show what a screenshot would look like with a physical Apple device frame and different wallpapers – kind of like an integrated version of my Apple Frames shortcut. I’m not sure I’d ever use this for my work over the automated workflows I’ve made with Apple Frames, but it’s a cool demo of this new extension point in iOS 26.

Visual Intelligence, alongside text-based LLM interactions, will be one of the most important areas for Apple to invest in as they further their AI plans and work on building AI-powered glasses. The changes in Visual Intelligence for iOS 26 aren’t groundbreaking and, on balance, do not offer anything that can’t be accomplished with a good chatbot and image uploads elsewhere, but they also show early signs of a bigger plan that I hope Apple continues to evolve.