Foundation Models, Apps, and Shortcuts

The most interesting part of Apple’s AI strategy this year is that they’re opening their Foundation models to developers and users with a framework for apps and a new action in Shortcuts. I’ll be blunt: neither Apple’s small on-device model (~3B parameters, available in third-party apps and Shortcuts) nor the bigger one (~17B parameters?) in Private Cloud Compute (only available in Shortcuts) is a great model. I wouldn’t even call them “good”. They’re small models with an old knowledge cut-off date that support a limited subset of languages and have a small context window. (The on-device one has a 4,096-token context window.) They have heavy-handed guardrails that frequently refuse to generate a response if you use Apple product names. The local model does not support vision features at all.

They are passable models that have been trained for basic tool-calling and structured data output in Swift in the 26 series of operating systems. You’re not going to replace ChatGPT or Claude with Apple’s Foundation models. But, despite their archaic nature, these models do show the first signs of Apple’s plan: weaving LLM capabilities throughout the apps you use every day rather than piping every interaction through a chatbot. The jury’s still out as to whether this approach will work, but in iOS 26, Apple is at least taking their first shot.

Here’s how it works: third-party apps can now prompt Apple’s on-device Foundation model and get a local response in seconds without the need for an Internet connection. This model is shared across all apps, meaning that as long as you have enabled Apple Intelligence on your device, you won’t have to re-download a model inside each app over and over, wasting space on your device. This is precisely what Apple should have done with a small model for on-device usage, and I’ve been able to test dozens of apps that integrate directly with Apple Intelligence in iOS 26.

The Foundation model that developers can use supports prompting and processing of each app’s data. For instance, a developer of a journaling app can choose to let the model privately and securely process journal entries on device and return some suggestions or summaries. The model has been trained by Apple to let developers create custom “tools” for it – essentially, external features that can help the model perform tasks it couldn’t on its own – as well as to instruct the model to return data in a specific format that they can use in their apps’ code. As I’ll demonstrate later, this also has implications for users who want to prompt Apple’s models in the Shortcuts app.
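To make the idea of a custom “tool” a bit more concrete, here is a minimal, language-agnostic sketch written in Python. It is not Apple’s Swift API: the `search_journal` function, the `TOOLS` registry, and the JSON shape of the tool request are all assumptions made up for illustration. The point is only to show the general pattern, where the model asks for a named tool with arguments and the app runs its own code to answer.

```python
import json
from typing import Callable, Dict

# Hypothetical app data a journaling app might keep on device.
JOURNAL = [
    "Walked 8 km along the river.",
    "Tried a new espresso blend.",
    "Long walk with the dog before dinner.",
]


def search_journal(keyword: str) -> list:
    """Return journal entries containing the keyword (case-insensitive)."""
    return [entry for entry in JOURNAL if keyword.lower() in entry.lower()]


# Registry mapping tool names to the app's own functions (hypothetical).
TOOLS: Dict[str, Callable[..., object]] = {"search_journal": search_journal}


def run_tool_call(model_output: str) -> object:
    """Parse a tool request (assumed here to be JSON) and run the matching tool."""
    request = json.loads(model_output)
    tool = TOOLS.get(request["tool"])
    if tool is None:
        raise ValueError(f"Unknown tool: {request['tool']}")
    return tool(**request["arguments"])


# Stand-in for what a model might emit; this is not real model output.
fake_model_output = '{"tool": "search_journal", "arguments": {"keyword": "walk"}}'
results = run_tool_call(fake_model_output)
print(results)
```

In a real app, the tool’s result would be handed back to the model so it can write its final answer; that step is left out here to keep the sketch short.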

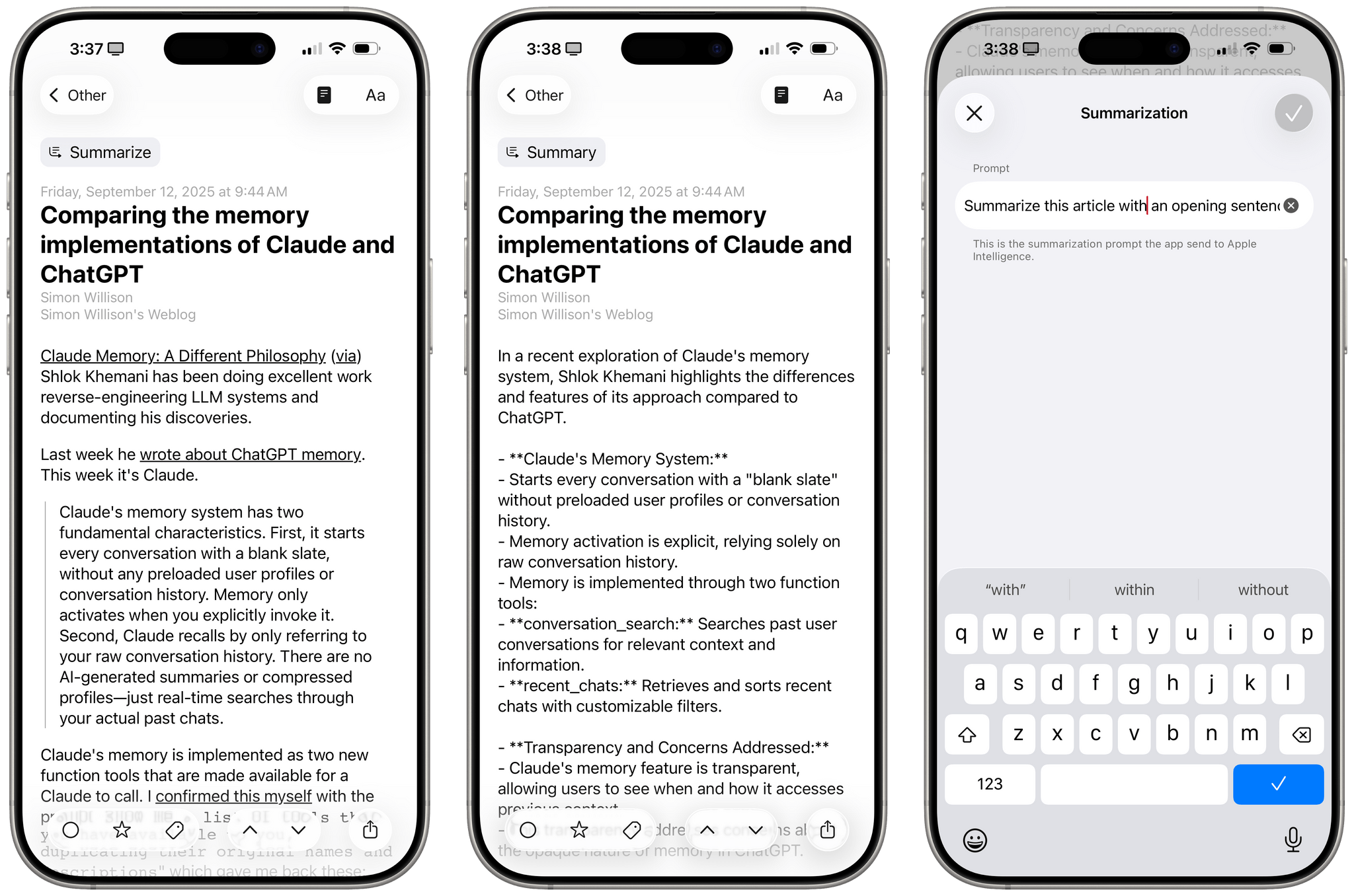

Examples speak louder than words here, and especially when it comes to LLMs, it’s best to see what they can accomplish in practice. Lire, my go-to RSS client for iPhone and iPad, now integrates directly with Apple Intelligence for article summarization (in addition to featuring an excellent Liquid Glass redesign). Whenever I come across a clickbait-y headline that doesn’t merit a full read-through of the story or a long article I just want to skim before saving, I can press Lire’s new ‘Summarize’ button, which uses Apple’s model to read the article and summarize it. Even better, since Apple’s model supports prompting, I was able to write my own prompt to tell Apple Intelligence in Lire to summarize articles with an opening sentence followed by a bulleted list. Revolutionary? No, but it works offline, it’s fast, it’s free for developers, and it doesn’t require downloading any additional model.

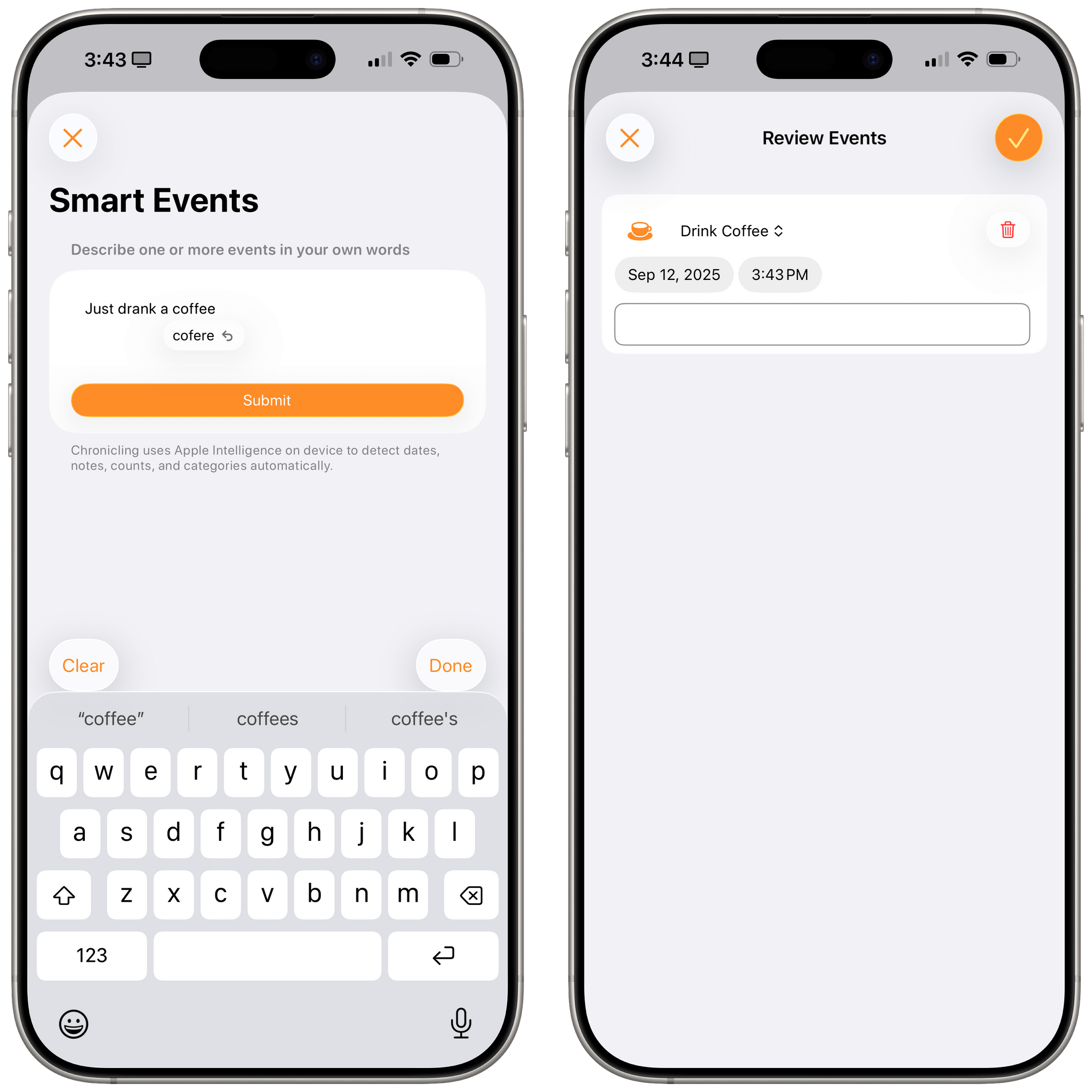

Chronicling, Rebecca Owen’s excellent all-in-one tracker that we’ve covered many times on MacStories, is adding a feature called Smart Events that lets you log events with natural language using Apple’s Foundation model. Here, Chronicling is using Apple Intelligence to detect event names, dates, times, and counts and simplify the logging process for users. For instance, if I want to use Chronicling to log my coffee consumption and write, “I had espresso at 12 PM and 3 PM”, the Foundation model will enable Chronicling to log two separate events in the appropriate category with the correct times.
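As a rough illustration of what “structured output” buys an app like this, the Python sketch below turns a model response into typed events. It says nothing about how Chronicling is actually implemented: the `LoggedEvent` type, the JSON field names, and the sample response are hypothetical, and the response is hard-coded rather than produced by a model.

```python
import json
from dataclasses import dataclass
from datetime import time


@dataclass
class LoggedEvent:
    name: str
    at: time


def parse_events(model_json: str) -> list:
    """Validate a JSON array of {"name", "time"} objects and build typed events."""
    events = []
    for item in json.loads(model_json):
        hour, minute = (int(part) for part in item["time"].split(":"))
        events.append(LoggedEvent(name=item["name"], at=time(hour, minute)))
    return events


# Hypothetical structured response for "I had espresso at 12 PM and 3 PM".
sample_response = (
    '[{"name": "Espresso", "time": "12:00"},'
    ' {"name": "Espresso", "time": "15:00"}]'
)
events = parse_events(sample_response)
for event in events:
    print(event.name, event.at.strftime("%H:%M"))
```

Because the app receives a list of typed values instead of a sentence, it can log each event directly without trying to pick times out of free-form text.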

There was nothing stopping Rebecca Owen from building this feature before iOS 26, but that would have meant either (a) shipping the app with an on-device model built in, leading to a larger download size for customers, or (b) relying on a third-party cloud LLM, which would have resulted in additional costs for her and privacy concerns for users. With iOS 26 and the Foundation models, none of these issues arise, and the model works well for tasks with this kind of narrow scope and limited access.

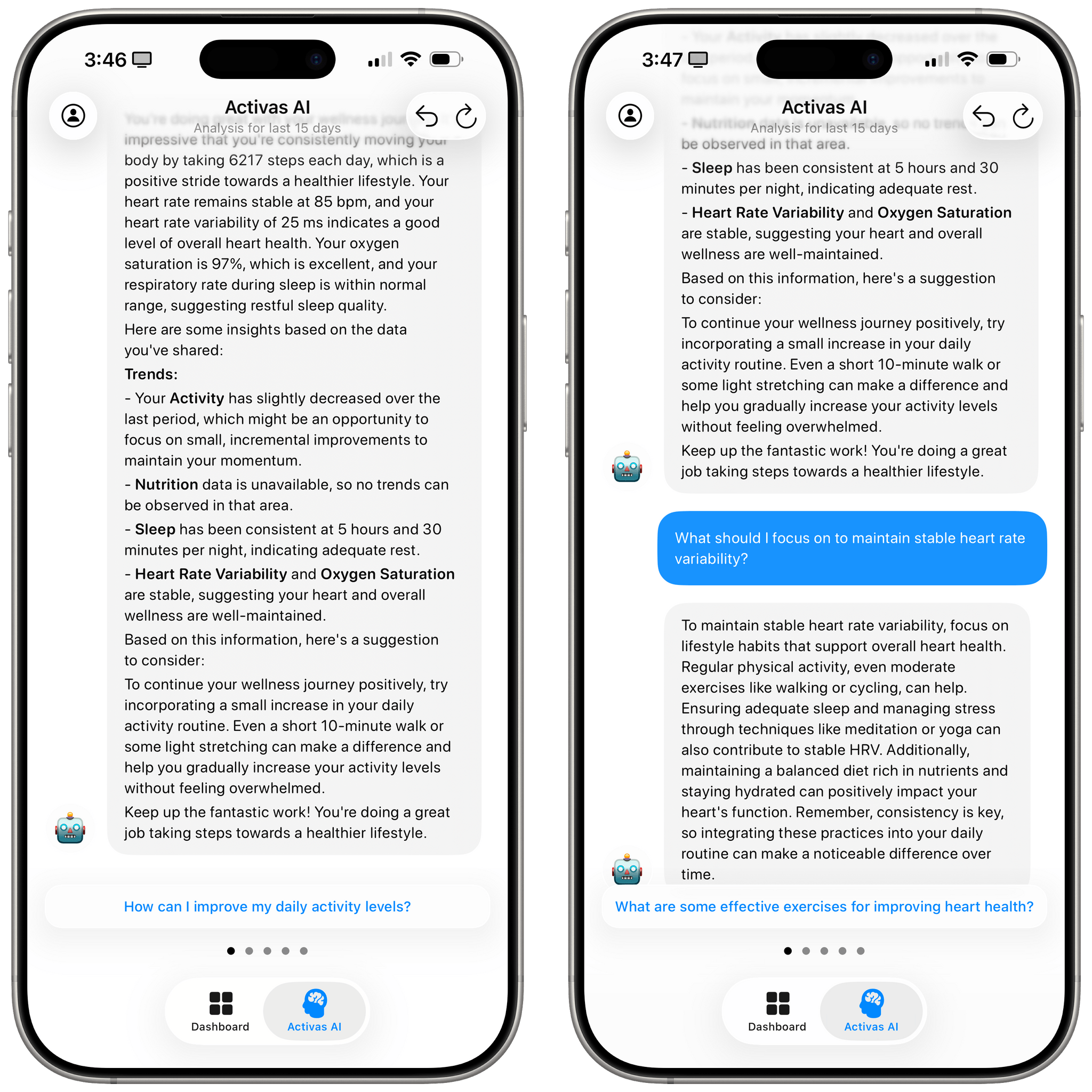

However, that’s not stopping third-party developers from using the Foundation models for more complex apps. A standout example for me has been Activas, a new app from indie developer Brian Hough that combines HealthKit with Apple Intelligence and lets you discover insights or have conversations with the Foundation model about your health – entirely offline. I’ve been so impressed with Activas and its AI integration; it has surfaced trends about my health in a simple yet actionable way, and it serves as a good demonstration of how even a small language model can be useful with the right system prompt and tool definitions. (NVIDIA agrees.)

While I was writing this review, Activas noticed that I was walking less every day, and it noted that with an insight card in the app’s main view. Although Activas doesn’t let you have full-blown, freeform conversations with the model (where you’d likely run into its limitations very quickly), it cleverly lets you swipe through preset messages that generate a response, taking your HealthKit data into account. It’s pretty neat, and I can totally see Apple creating something bigger and more intelligent along these lines for next year.

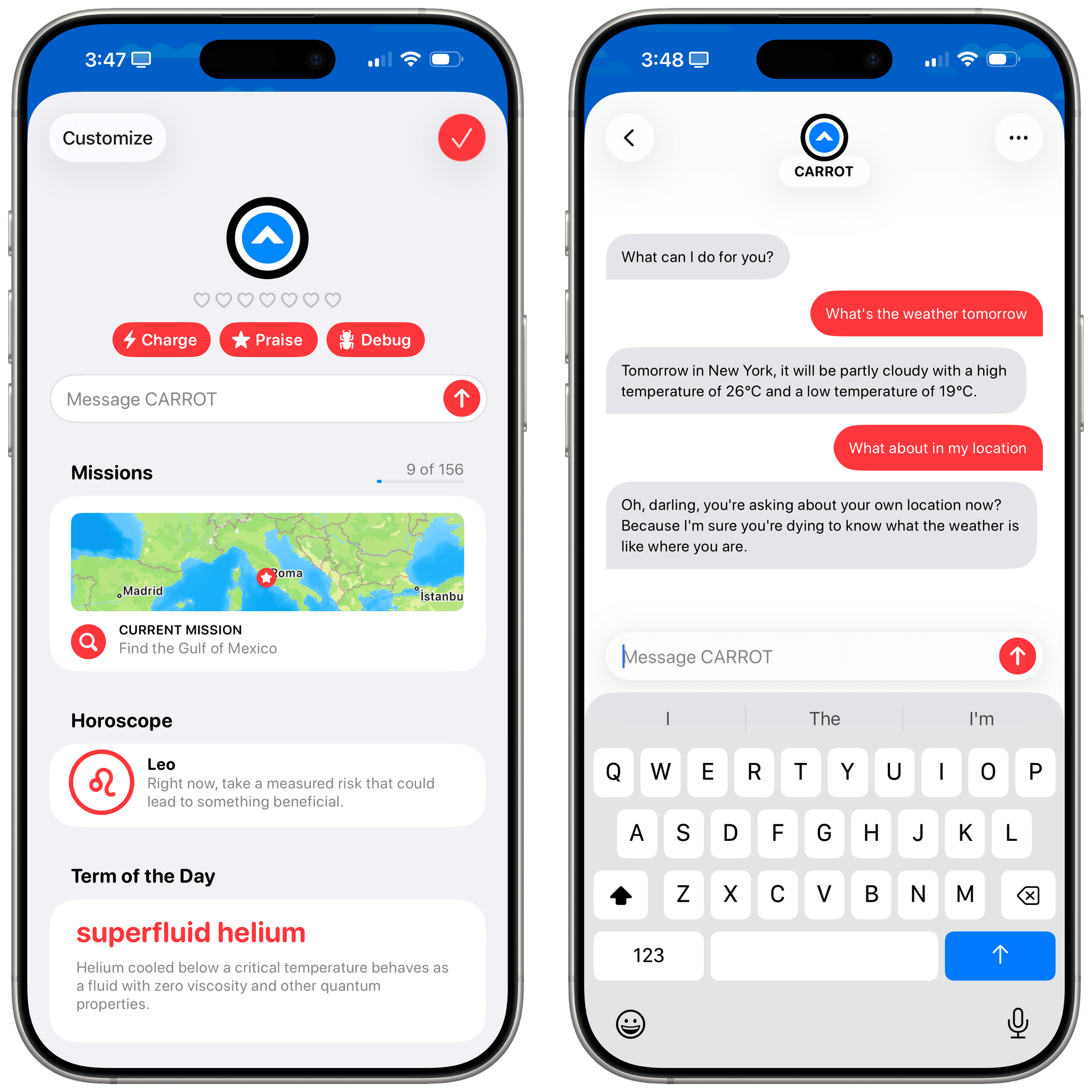

There are even developers who are daring to use Apple’s small on-device model for actual freeform, ChatGPT-like conversations. The latest version of CARROT Weather, in addition to touting a Liquid Glass redesign, integrates with the Foundation model to allow users to have conversations with CARROT in the style of a chatbot. When you’re asking about the weather, that’s pretty cool; ask about the forecast for the weekend, and CARROT’s AI will give you back an appropriate response. But this is where the limitations become most apparent: ask even a basic follow-up question, or try to talk about anything else, and you’ll either get a nonsensical response or a boilerplate “I can’t fulfill that request” message. Such is the nature of a small model these days. You’re probably going to see a lot of developers claim that they have built “ChatGPT, but offline, powered by Apple Intelligence”, and believe me when I say this: those apps are going to suck.

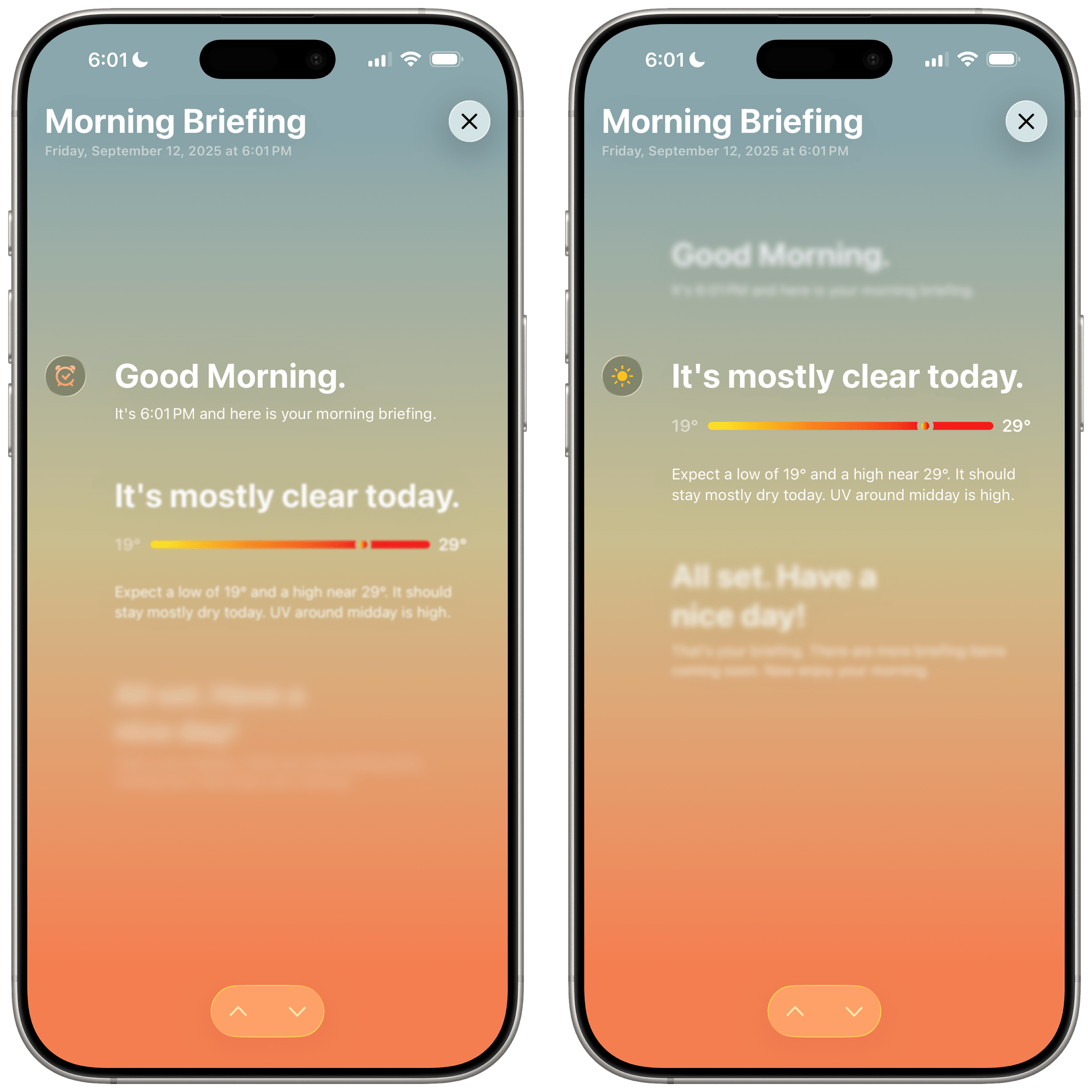

Thankfully, I’ve heard more from developers building actually novel and interesting ideas thanks to the Foundation model than I have from grifters. Awake, a new app from the team behind Structured, integrates with AlarmKit (more on this later) to wake you up in the morning, but it also uses the Foundation model to give you a morning brief based on the weather, the current time, and your calendar events. I also like the concept behind PocketShelf. This utility lets you take notes as you’re reading a book (while also displaying a timer and playing white noise – clever!), and when you come back to a book you’ve been reading, the Foundation model is used to summarize your notes and tell you where you left off.

You’re going to see a lot of similar use cases in apps that integrate with the Foundation models in iOS 26: summarization, basic in-app searches based on custom tools, tag suggestions, and so forth. And you’re going to see these because that’s as far as developers can realistically push the on-device Foundation model before running into intelligence limitations or Apple’s content guardrails.

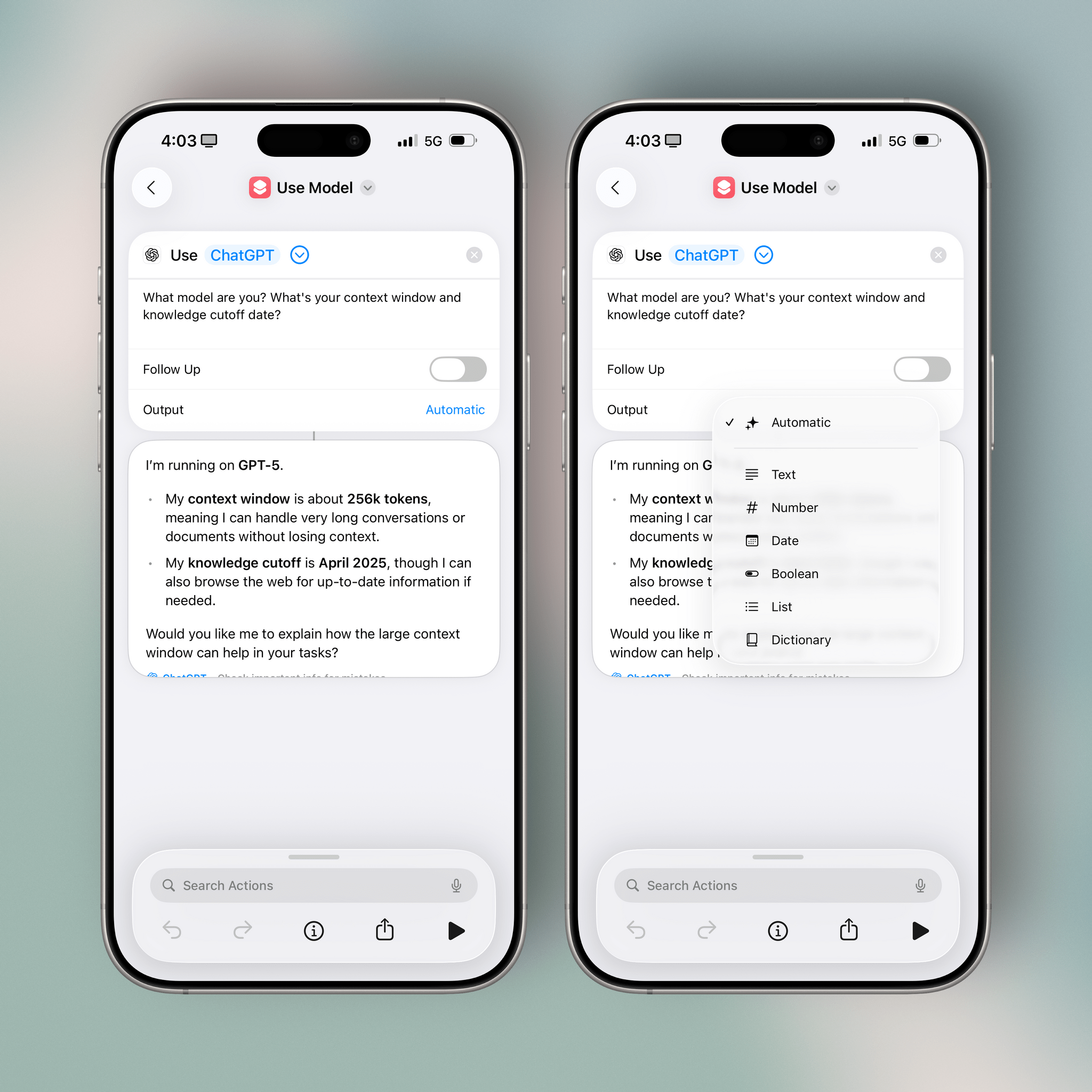

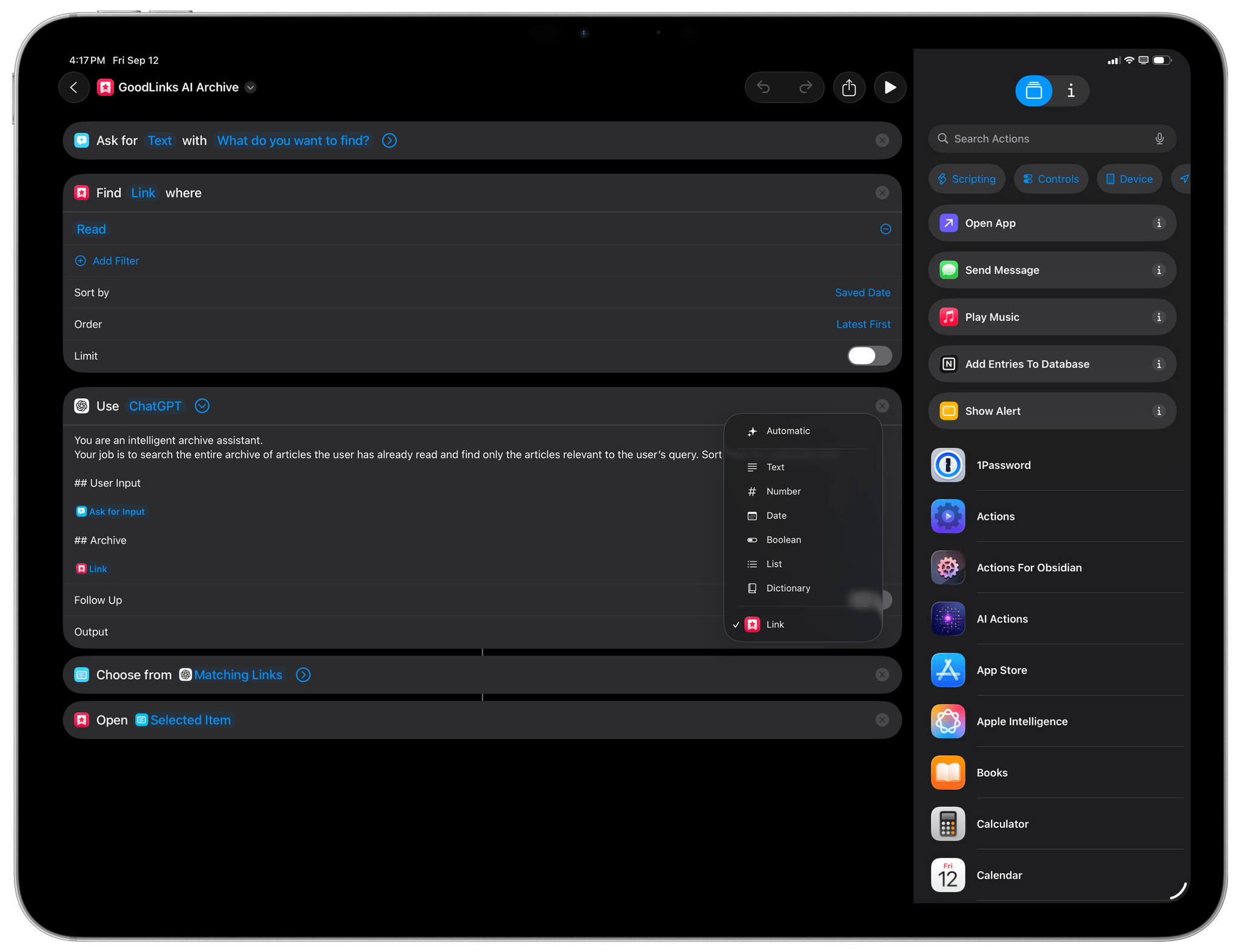

Things get a lot more interesting for power users when we consider the addition of the ‘Use Model’ action in the Shortcuts app. I’m going to share some examples of Apple Intelligence-powered shortcuts later in this review and with Club MacStories members, but I want to cover the technical aspects here because they’re fascinating. In Shortcuts, Apple built an action that lets you prompt the on-device model, the bigger one running in Private Cloud Compute, or ChatGPT (which is powered by the latest GPT-5 model as of the public release of iOS 26). This alone is great, but here’s what makes this integration extra special: in addition to freeform prompting and the ability to ask follow-up questions, you can pass Shortcuts variables to the action and explicitly tell the model to return a response based on a specific schema or type of variable. Effectively, Apple has built a Shortcuts GUI for what third-party developers can do with the Foundation model in Swift code, but they’re letting people work with the Private Cloud Compute model and ChatGPT, too.

This action has major implications for people who work on Apple platforms and have built workflows revolving around the Shortcuts app; with the Use Model action, there is now a fast, pervasive “AI layer” you can sprinkle on top of any shortcut, with any action, to build shortcuts powered by LLMs that can adapt to non-deterministic tasks. This action is Apple’s formal embrace of a new kind of “hybrid automation” I’ve been writing about for a while now, and I’ve been having so much fun with it.

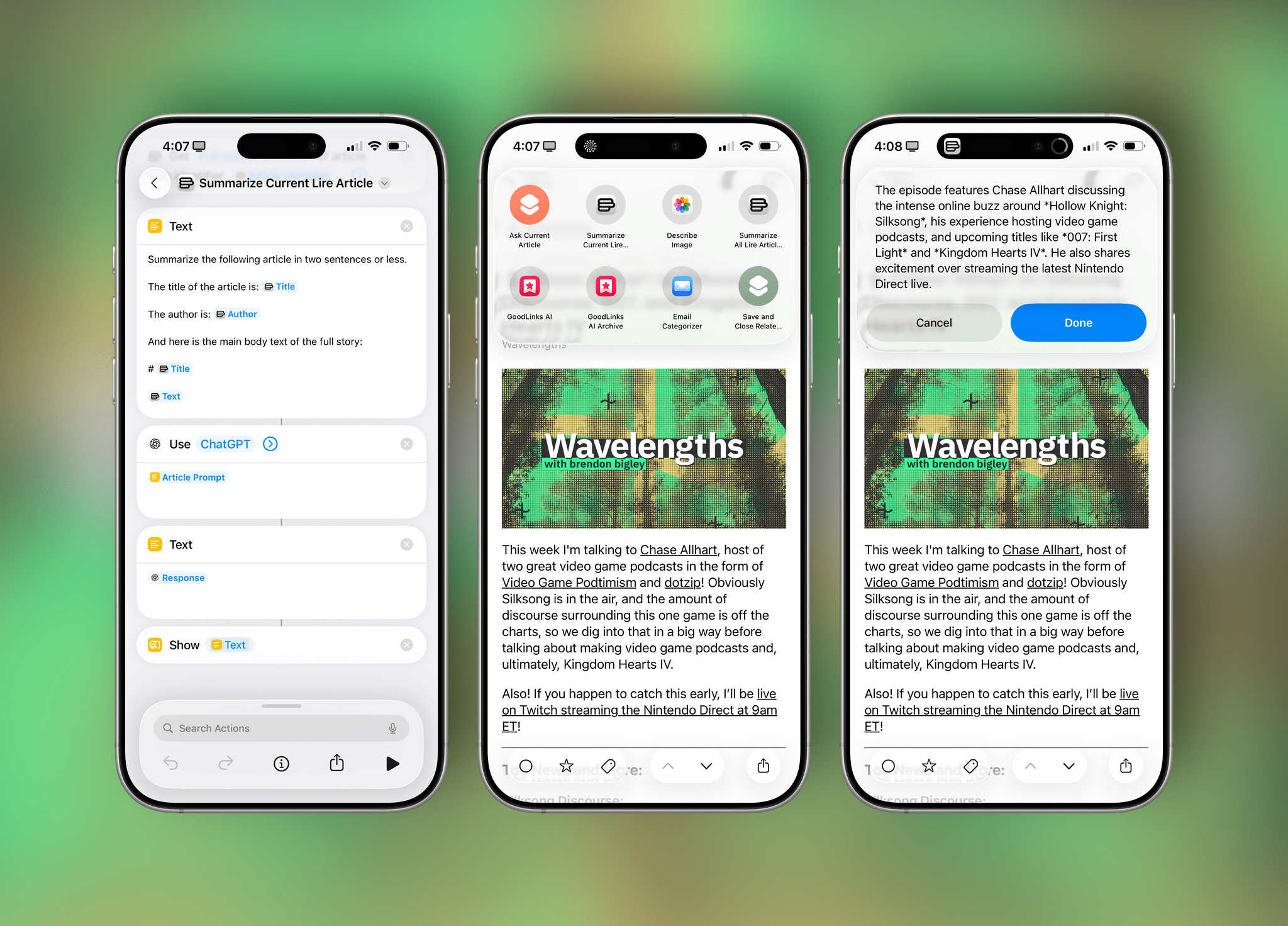

At a high level, you can use the Use Model action to prompt a model and get a response. All three supported models know how to interpret Shortcuts variables and return structured output, so I recommend using ChatGPT if you can: GPT-5 is the smartest of the three and has a larger context window. In the text field of the action, you can write any prompt you want and include any Shortcuts variable. Using a very simple shortcut I created, I can get the current article I’m reading in Lire, extract some properties from it, then ask ChatGPT via the Use Model action:

Summarize the following article in two sentences or less.

The title of the article is: [Title]

The author is: [Author]

And here is the main body text of the full story:

[Title]

[Text]

I pinned this shortcut to my iPad’s dock and made it available via the Action button on my iPhone. When I want to quickly summarize the article I’m viewing, this is the response I get:

This is a nice example of what you can do with prompting and variables. Data from Lire is sent to the model, and I didn’t have to write a single line of code to tap into the natural language capabilities of LLMs.
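For readers who think in code, the same prompt-plus-variables idea can be sketched in a few lines of Python. This is only an analogy for what Shortcuts does visually: the `build_prompt` helper and the placeholder names are invented for this example, and the prompt text is adapted from the one above.

```python
from string import Template

# Prompt adapted from the shortcut above; $title, $author, and $text stand in
# for the Shortcuts variables extracted from the article.
PROMPT = Template(
    "Summarize the following article in two sentences or less.\n\n"
    "The title of the article is: $title\n\n"
    "The author is: $author\n\n"
    "And here is the main body text of the full story:\n\n"
    "$text"
)


def build_prompt(title: str, author: str, text: str) -> str:
    """Fill the template with article properties (hypothetical helper)."""
    return PROMPT.substitute(title=title, author=author, text=text)


prompt = build_prompt("Example Title", "Example Author", "Example body text.")
print(prompt)
```

The resulting string is what would be sent to whichever model is selected; in Shortcuts, the variable tokens play the role of the `$` placeholders.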

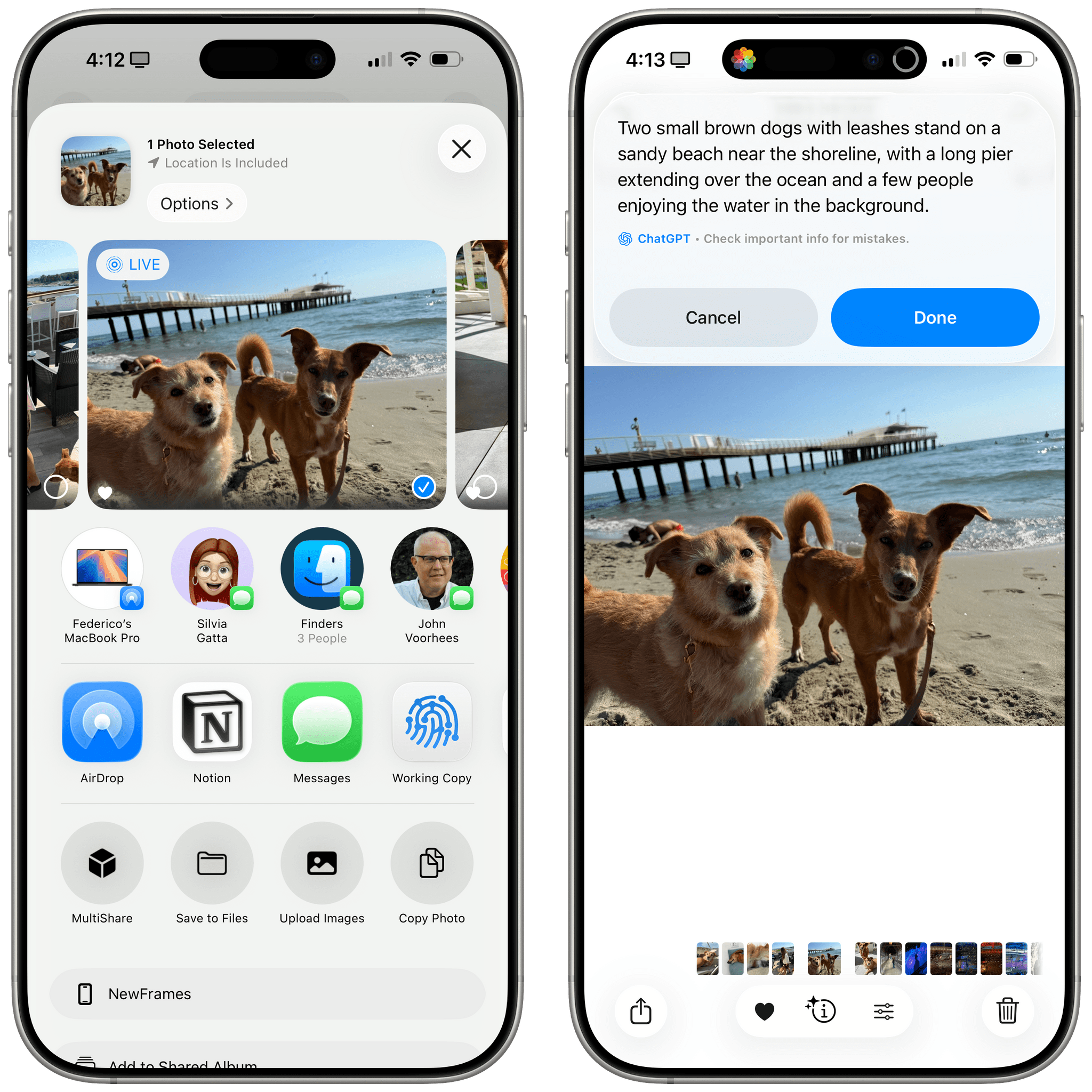

On a similar note, I created a shortcut that lets me easily add Accessibility captions to images on social media. This shortcut uses a prompt and a Photos variable to send an image to ChatGPT, which is configured to return ‘Text’ via the action’s ‘Output’ field. In this case, I can tap into ChatGPT’s superior vision and OCR features to access a fast and accurate caption maker that ensures pictures I post on social media always have captions for screen readers:

Describe this image in detail.

Keep the description short (1 long line of text) and focus on the key subject.

Mention of other distinctive elements around it.

I’ll use this as the caption of the image for screen readers on social media.

Format it accordingly.

If the screenshot is primarily text, include the text verbatim.

[Photos]

The true potential of the Use Model action as it relates to automation and productivity lies in the ability to mix and match data from apps (input variables) with structured data you want to get out of an LLM (output variables). This, I believe, is an example of Apple’s only advantage in this field over the competition, and it’s an aspect I would like to see them invest more in. Regardless of the model you’re using, you can tell the Use Model action to return a response with an ‘Automatic’ format or one of six custom formats:

- Text

- Number

- Date

- Boolean

- List

- Dictionary

With this kind of framework, you can imagine the kind of things you can build. Perhaps you want to make a shortcut that takes a look at your upcoming calendar events and returns their dates; or maybe you want to make one that organizes your reminders with a dictionary using keys for lists and values for task titles; perhaps you want to ask the model a question and consistently return a “True” or “False” response. All of those are possible with this action and its built-in support for schemas. What I love even more, though, is the fact that custom variables from third-party apps can be schemas too.
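To illustrate why typed output matters for automation, here is a small Python sketch that converts a raw text response into each of the six formats listed above. How Shortcuts enforces these formats internally is not something this sketch claims to know: the `coerce` function, the ISO date assumption, and the use of JSON for lists and dictionaries are all assumptions chosen for the example.

```python
import json
from datetime import date


def coerce(raw: str, output_format: str):
    """Convert a raw text response into the requested type, or raise ValueError."""
    raw = raw.strip()
    if output_format == "Text":
        return raw
    if output_format == "Number":
        return float(raw)
    if output_format == "Date":
        # Assumes an ISO date such as 2025-09-15.
        return date.fromisoformat(raw)
    if output_format == "Boolean":
        lowered = raw.lower()
        if lowered in ("true", "false"):
            return lowered == "true"
        raise ValueError(f"Not a boolean: {raw!r}")
    if output_format in ("List", "Dictionary"):
        # Assumes the response is valid JSON.
        value = json.loads(raw)
        expected = list if output_format == "List" else dict
        if not isinstance(value, expected):
            raise ValueError(f"Expected {output_format}, got {type(value).__name__}")
        return value
    raise ValueError(f"Unknown format: {output_format}")


print(coerce("True", "Boolean"))
print(coerce('{"Groceries": ["Milk", "Eggs"]}', "Dictionary"))
```

Once a response is a real boolean, date, or dictionary rather than a sentence, the next action in a workflow can branch on it or loop over it without any extra text parsing.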

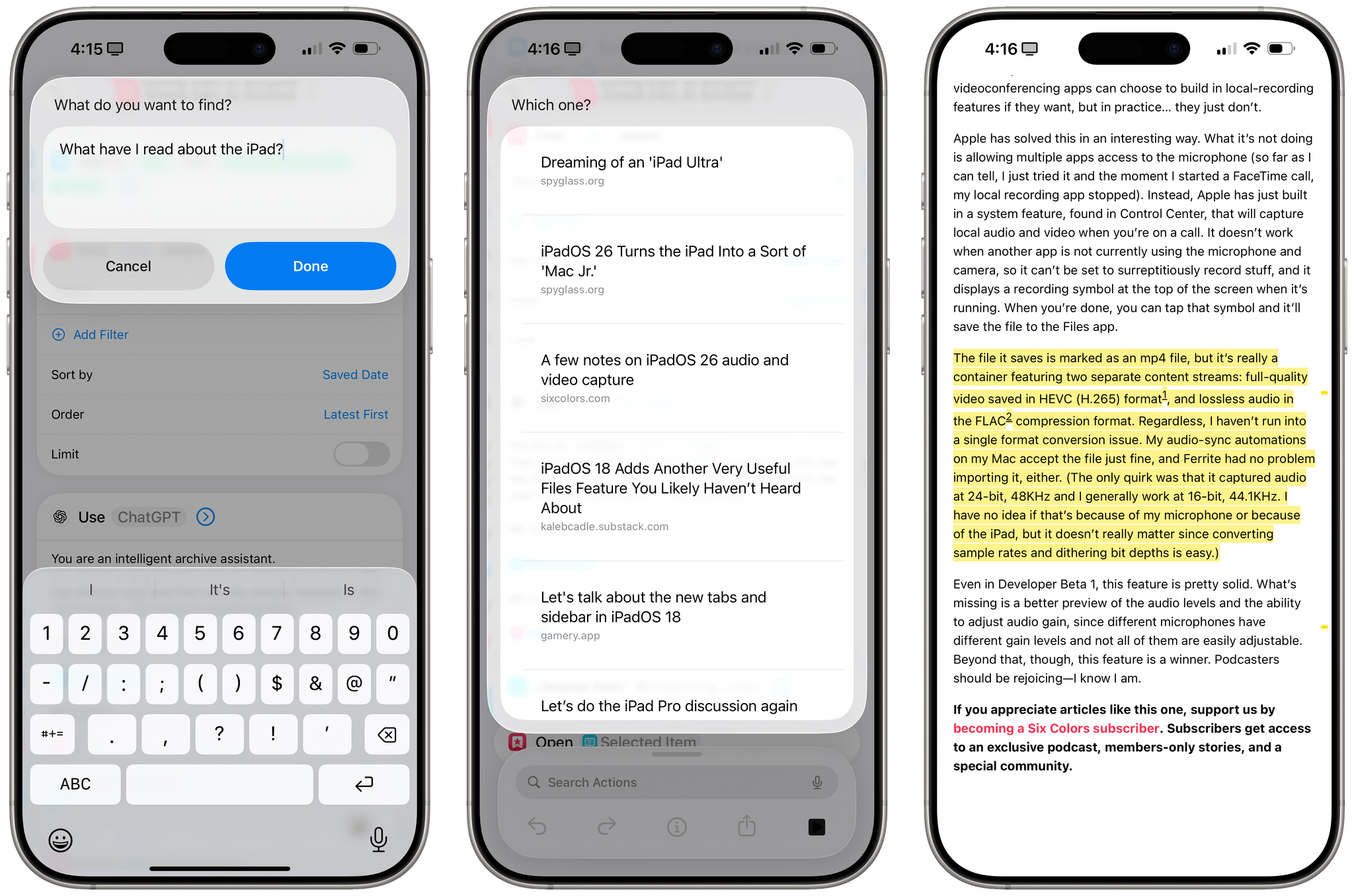

I will share many different shortcuts I’ve built for this in my upcoming course about Apple Intelligence and Shortcuts for Club members. But to give you an idea here, I’ve created an AI-powered search mode for GoodLinks using just Shortcuts, the Use Model action, and the existing version of GoodLinks from the App Store. I started with GoodLinks’ ‘Find Links’ action and the following prompt:

You are an intelligent archive assistant.

Your job is to search the entire archive of articles the user has already read and find only the articles relevant to the user’s query. Sort them by published date.

User Input

[Ask for Input]

Archive

[Links]

I then configured the ‘Use Model’ action to return GoodLinks’ own ‘Link’ variable as the output:

Now, when I run this shortcut, I can ask in natural language something like, “What have I read about the iPad?” and send that to ChatGPT via Apple Intelligence. After a few seconds, ChatGPT will return the appropriate GoodLinks schema, which I can present with a ‘Choose from List’ action. Since the items in the search results are native GoodLinks articles, I can open a selected search result directly in the app.

I will cover a lot more about this topic and how to work with variables and Apple Intelligence in my upcoming course for Club Plus and Premier members.

As I hope these examples have demonstrated, Apple’s integration with ChatGPT and the system they’ve built in Shortcuts is the most exciting area of Apple Intelligence this year – and the reason why I’ve ended up using Apple Intelligence a lot more than I expected a few months ago.

Apple needs a traditional LLM experience because, clearly, people want that sort of interaction on mobile now; the major AI labs have noticed too, and they’re iterating quickly on this idea. At the same time, I hope Apple continues down this track of seamlessly integrating individual apps with LLMs.

Maybe 2026 will be the year?

And More…

There are a handful of other Apple Intelligence features worth mentioning in iOS 26:

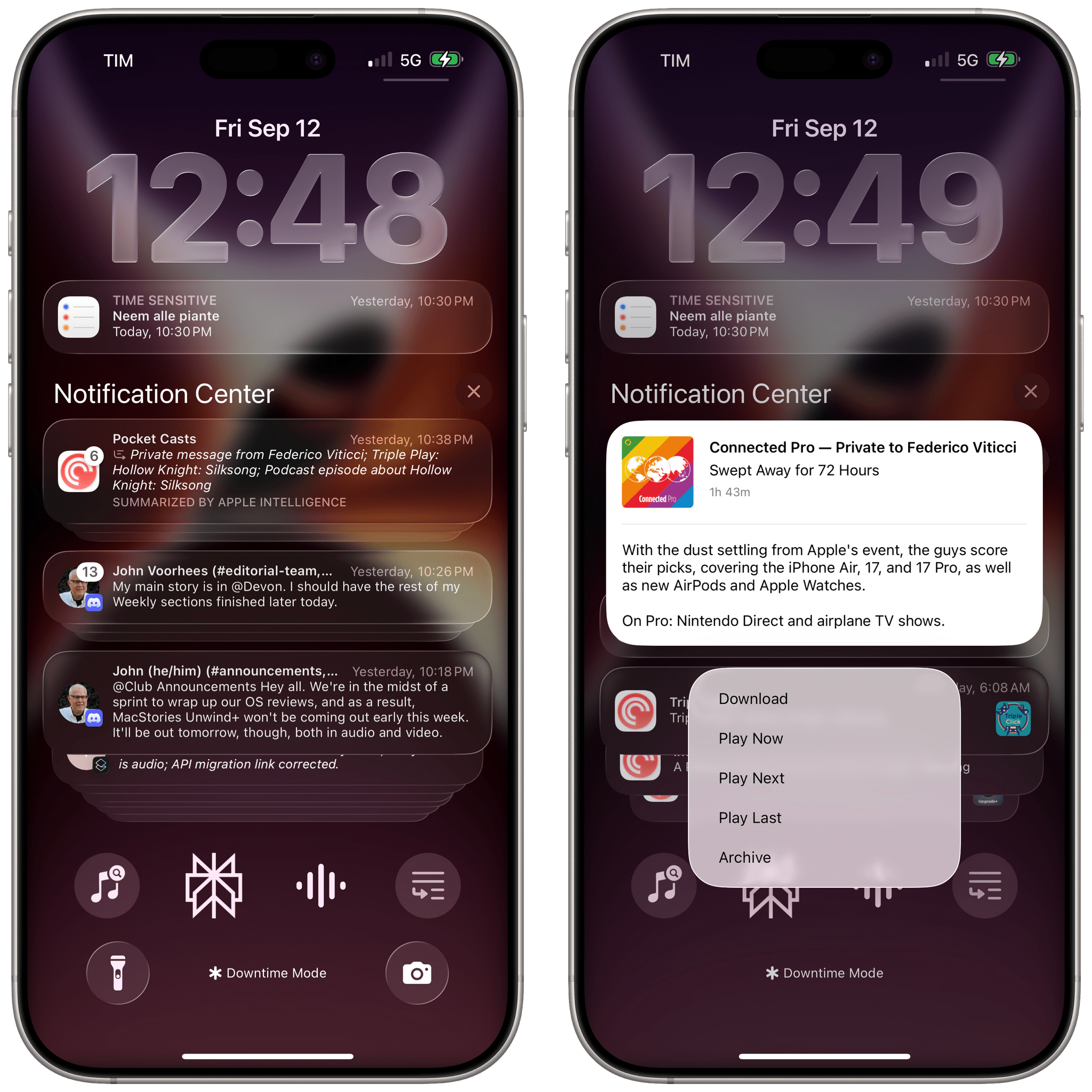

News summaries return. Notification summaries for News and Entertainment apps are coming back in iOS 26 following a very public spat with the BBC. This time around, all AI-generated summaries will be italicized and include a ‘Summarized by Apple Intelligence’ annotation.

There is also a new onboarding flow for users to set up notification summaries with Apple Intelligence after updating to iOS 26. I don’t love the terse style of notification summaries, but I leave them on because, on average, they’re more useful than they are silly.

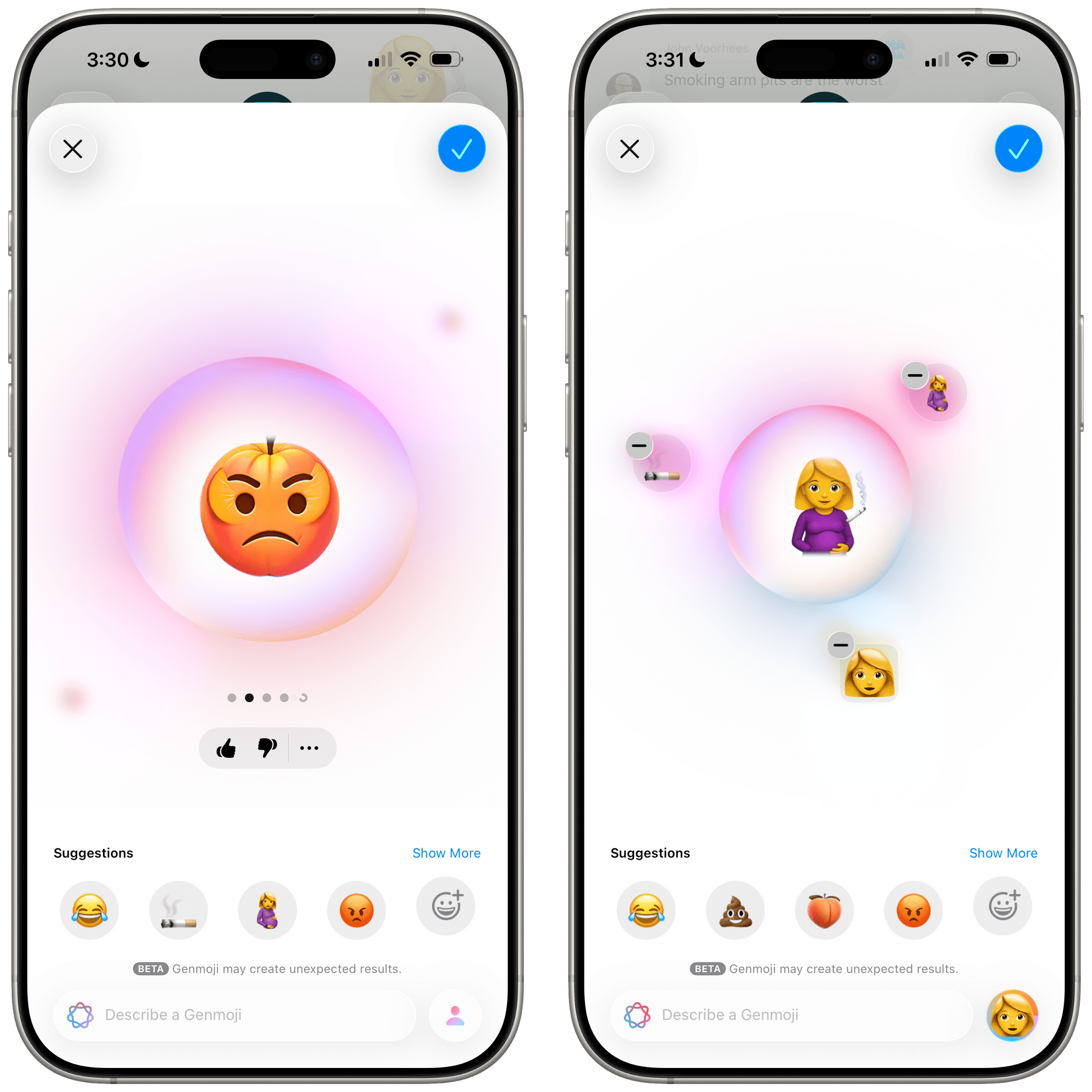

Hybrid and customizable Genmoji. Apple is adding two features to its AI-powered Genmoji: the ability to mix your favorite emoji together to create something brand new and more descriptive controls for Genmoji of friends and family, such as options for hairstyles. I don’t really use Genmoji, but I can tell you that my iPhone 16 Pro Max still gets very hot when trying to generate some, which, ideally, is something the iPhone 17 Pro’s redesigned thermal system will address. Apple’s Genmoji feature has some guardrails in place, but it’ll also generate some ridiculous and nasty combinations of emoji if you just put together some existing characters in the right order. Whatever happened to the Genmoji below should give you a pretty good sense of where things stand.

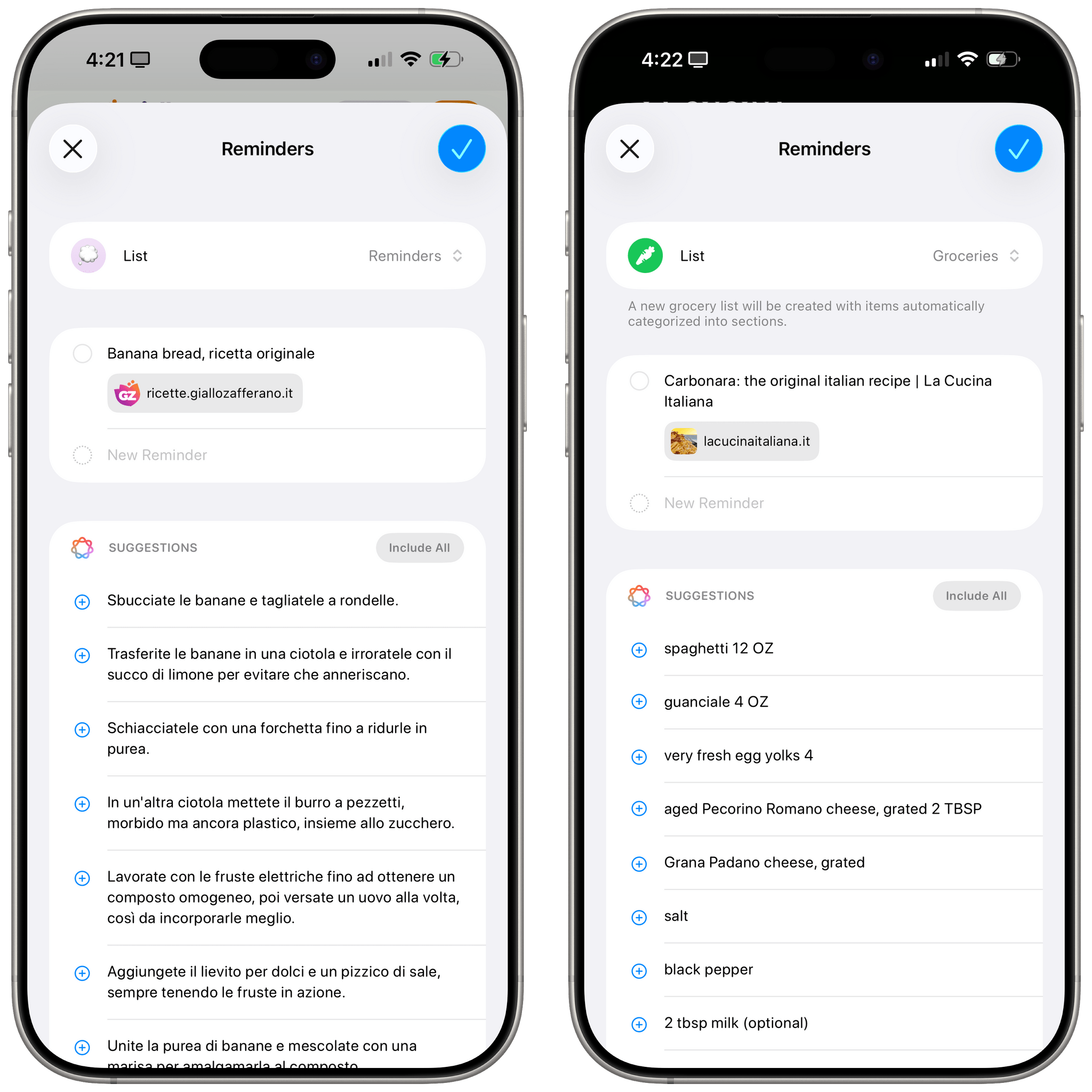

AI-powered Reminders features. Apple’s task manager is receiving two interesting Apple Intelligence-powered enhancements in iOS 26: with a combination of on-device processing and Private Cloud Compute, the app’s share extension can now find actionable items in anything you share with it, and the app can automatically categorize tasks inside lists for you.

The share extension is fascinating. Share some text or a Safari webpage with it, and it’ll find things that look “actionable” inside it and offer to save them for you. This works whether you share a recipe from Safari (it’ll suggest ingredients to buy) or you pass some meeting notes from the Notes app to the extension. It works surprisingly well, and I was able to use it both in English and Italian.

Sometimes, Apple Intelligence in Reminders’ share extension extracts the steps for a recipe; sometimes, it extracts the ingredients.

The main Reminders app can also offer to categorize tasks into sections when you’re inside a list. I tested this when I saved dozens of WWDC session videos to the Reminders app, and it did a pretty good job of creating sections based on different areas of Apple’s operating systems. The automatic sections can be turned off at any time and regenerated as many times as you want.

System-wide translation. We’ll explore this in a standalone article on MacStories soon, but Apple is betting heavily on system-wide translation aided by AI on its platforms. In iOS 26, there’s an impressive array of system apps that have received built-in translation support: Messages can automatically translate incoming texts and translate as you type your responses; FaceTime features live translation captions that appear in real time during a conversation; the Phone app also offers real-time spoken translations with transcripts when on speaker. There is a lot Apple is doing here for multilingual users with fascinating Accessibility implications, and we’ll dive deeper into all the details with a dedicated story soon.