I read this post by Jarrod Blundy a few weeks ago and forgot to link it on MacStories. I think Jarrod did a great job explaining why Apple’s Shortcuts app resonates so strongly with a specific type of person:

But mostly, it just lights up my brain in a way that few other things do.

[…]

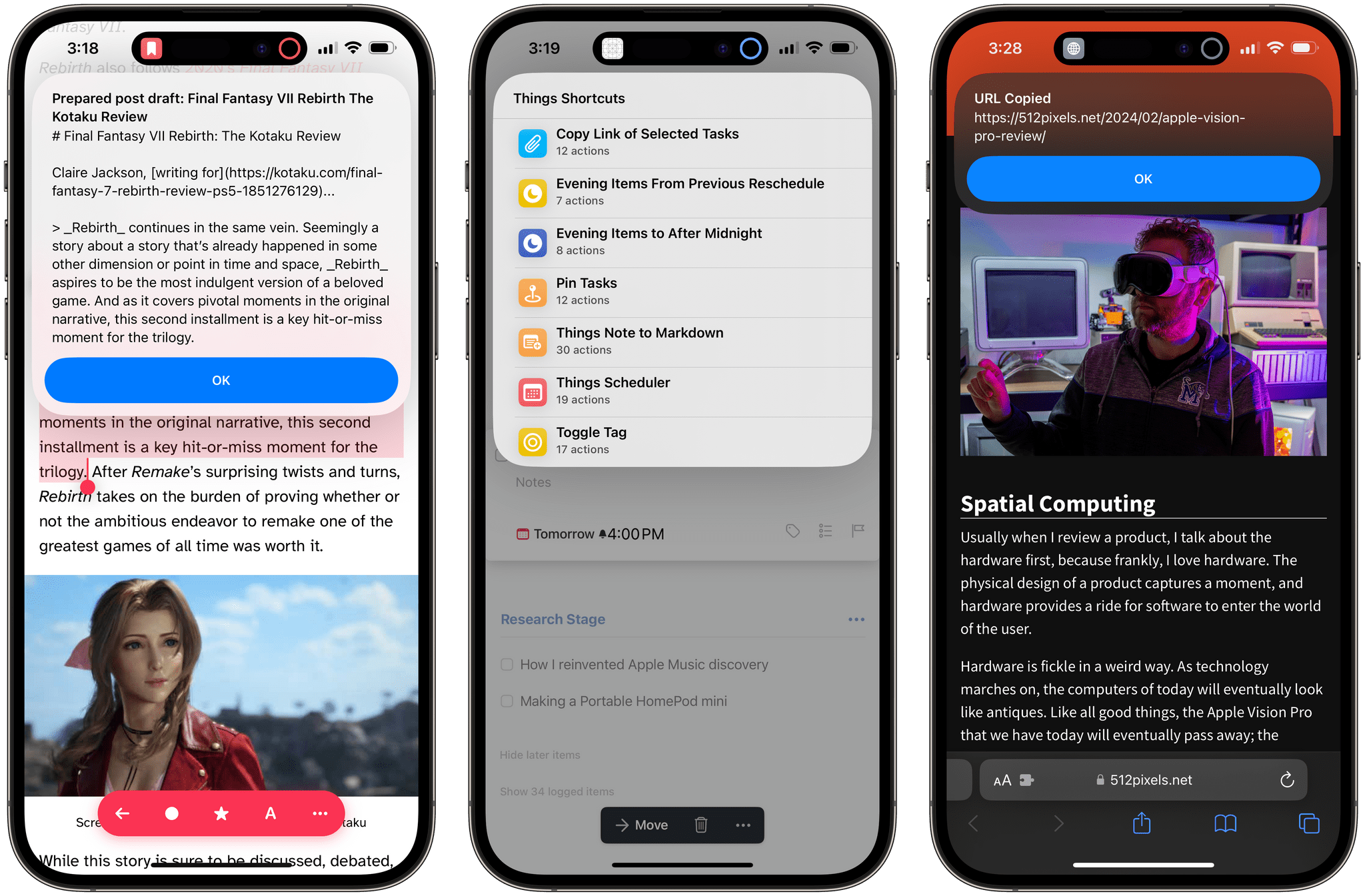

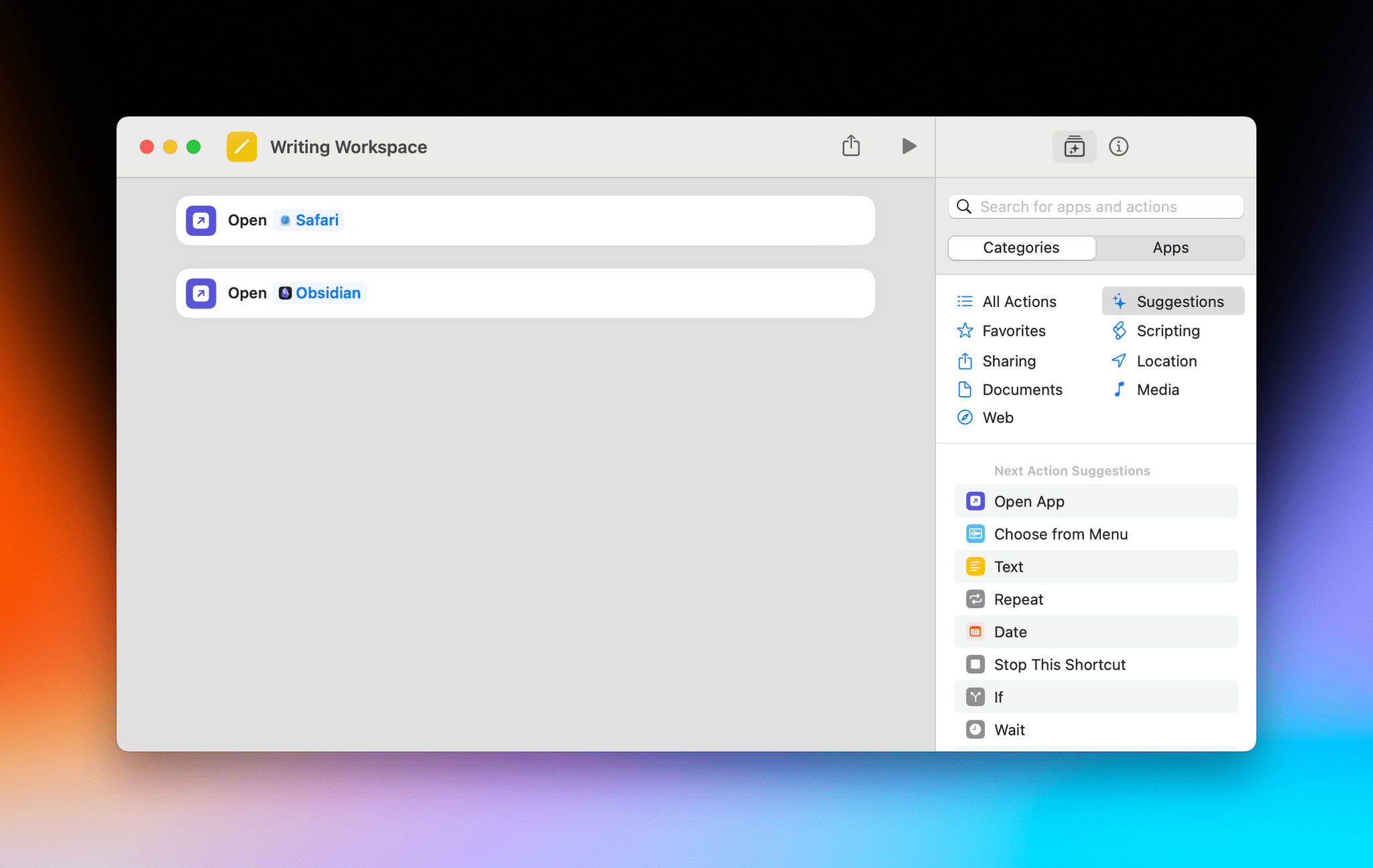

But when there’s a little burr in my computing life that I think could be sanded down with Shortcuts, my wheels get turning and it’s hard to pull myself away from refining, adding features, and solving down to an ideal answer. I’m sure if I learned traditional coding, I’d feel the same. Or if I had a workshop to craft furniture or pound metal into useful shapes. But since I don’t know that much about programming languages nor have the desire to craft physical products, Shortcuts is my IDE, my workshop.

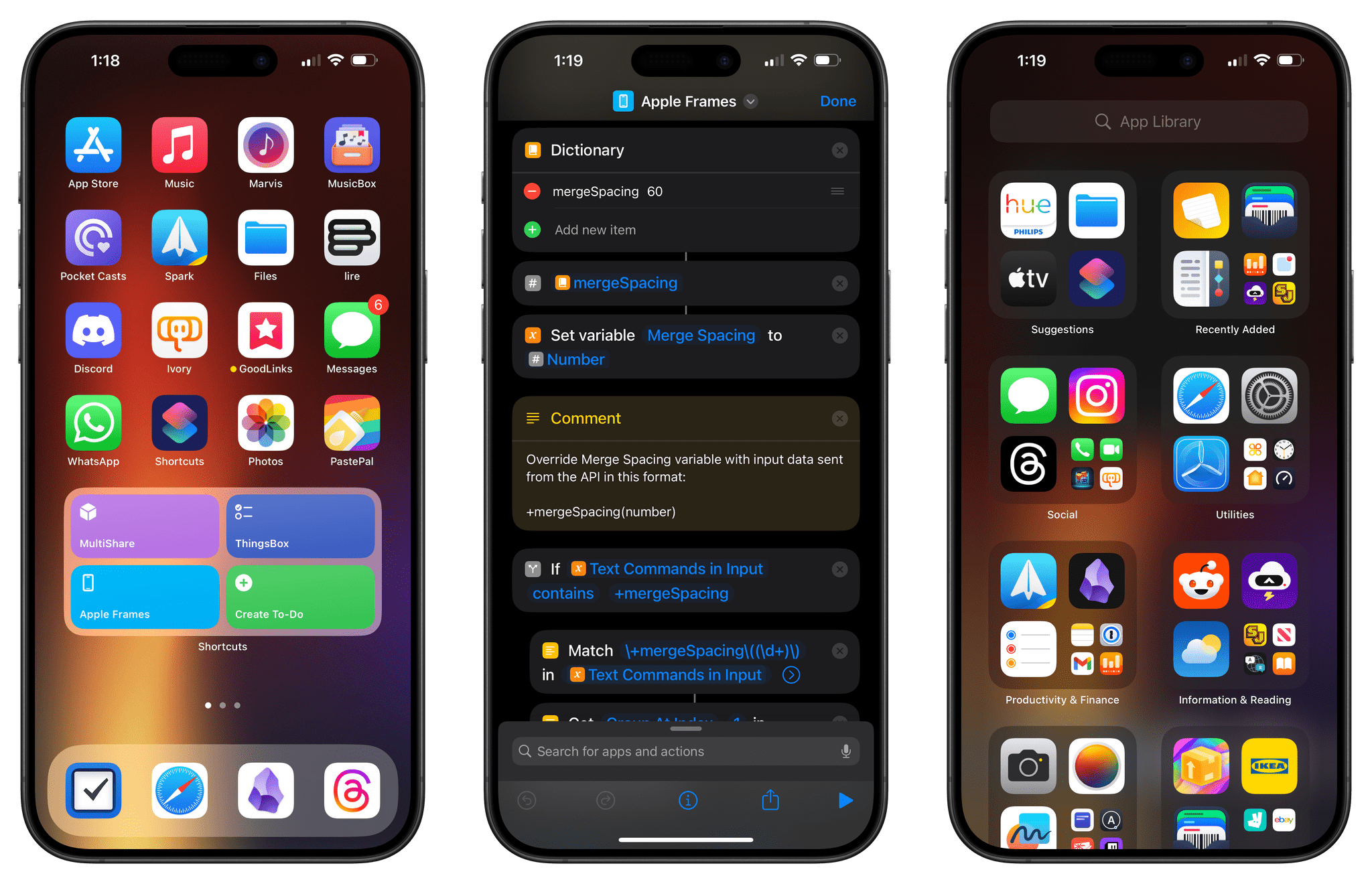

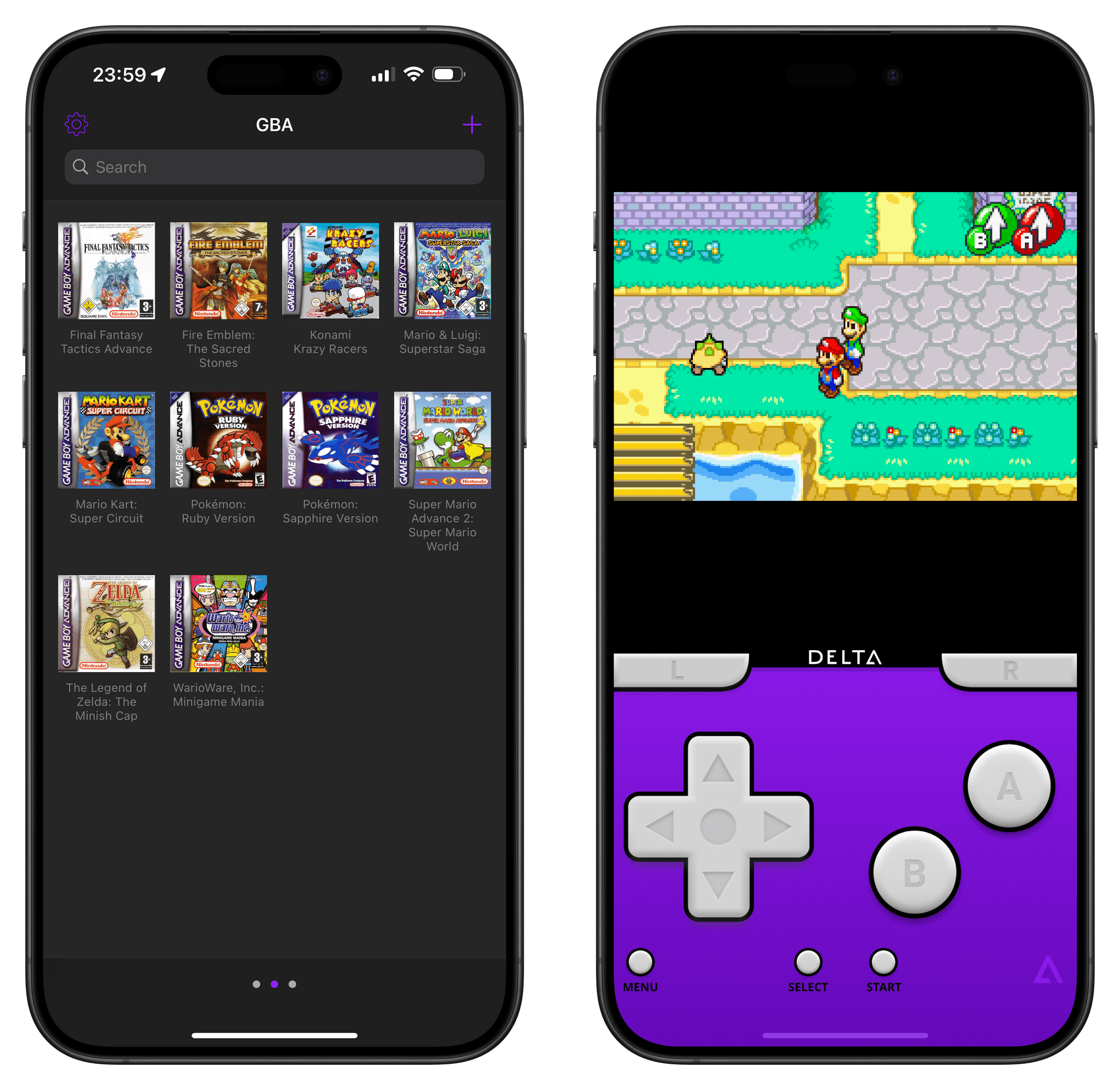

For me, despite the (many) issues of the Shortcuts app on all platforms, the reason I can’t pull myself away from it is that there’s nothing else like it on any modern computing platform (yes, I have tried Tasker and Power Automate and, no, I did not like them). Shortcuts appeals to that part of my brain that loves it when a plan comes together and different things happen in succession. If you’re a gamer, it’s similar to the satisfaction of watching Final Fantasy XII’s Gambits play out in real time, and it’s why I need to check out Unicorn Overlord as soon as possible.

I love software that lets me design a plan and watch it execute automatically. I’ve shared hundreds of shortcuts over the years, and I’m still chasing that high.

![…I'm using.](https://cdn.macstories.net/img_0872-1711623741629.jpeg)